Welcome to the second part of this blog series diving into Red Hat OpenShift Lightspeed and its performance in real-world OpenShift certification scenarios like the Red Hat Certified OpenShift Administrator exam. If you haven't read the first post, you can find Part 1 here.

Here, we're starting fresh with a new hands-on exercise. In Part 1, we tested OpenShift Lightspeed against a sample exam exercise, with promising results. Now it's time to see how well it handles a new set of questions focused on application security and advanced configurations.

Reviewing our benchmark

As a reminder, we're working with OpenShift Lightspeed 1.0, the latest version, using Azure OpenAI's gpt-4 model for inference. Before diving into the exercise, let's take a moment to review the evaluation criteria we're using to score each response:

- Correct (100%): When OpenShift Lightspeed delivers a complete and accurate response with specific steps and commands that fully solve the task.

- Partially Correct (50%): When the response is well structured, addresses the question, and points in the right direction, but misses minor details.

- Incorrect (0%): When the answer is unclear, irrelevant, or fails to address the question at all.

To pass the exam, OpenShift Lightspeed needs to achieve an average score of at least 70%, just like in the official certification exam.

Note

Before we proceed, it's worth restating that while OpenShift Lightspeed shows great promise in handling certification-level scenarios, its role is best understood as a powerful augmentation tool—not a substitute for human expertise. Red Hat Certified Professionals bring essential real-world knowledge, context, and decision-making skills that are especially vital in high-stakes environments like healthcare, finance, energy, transportation, and government, or in complex configurations. OpenShift Lightspeed helps accelerate workflows and reduce cognitive load, but it's not a replacement for a Certified Professional who ensures that its outputs are appropriate and production-ready.

Exercise 2: Secure applications

In this second exercise, we'll revisit some of the areas we covered in Part 1, but we'll also explore new tasks and resources essential for deploying applications more securely in our OpenShift cluster. Here is an index summarizing the main topics we'll cover:

- Creating namespaces and applying labels

- Granting cluster-admin roles to user groups

- Enforcing resource quotas for namespaces

- Setting default resource limits for workloads

- Deploying applications via CronJob

- Working with service accounts

- Deploying database resources in the cluster

- Deploying application workloads in namespaces

- Configuring TLS encryption

- Configuring networking for external traffic

- Implementing network policies to restrict traffic

- Configuring cluster network ingress restrictions

As in Part 1, we'll start from an empty chat and progressively ask the questions, always keeping the same session to ensure OpenShift Lightspeed retains the necessary context.

1. Creating namespaces and applying labels

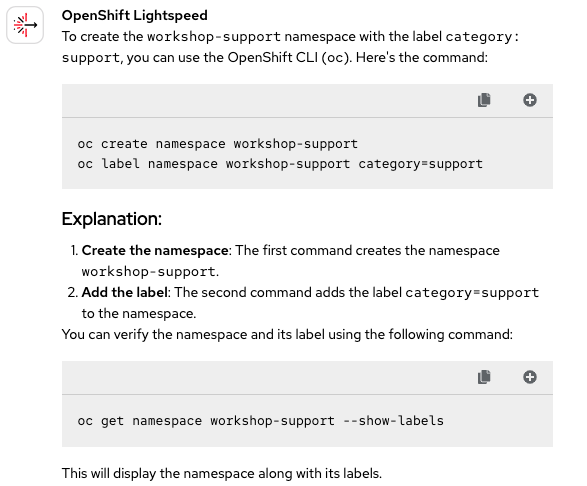

Question 1: Create the workshop-support namespace with the category: support label.

We're kicking off with the first question of this new exercise. To make sure there is no interference from the previous session, we cleared OpenShift Lightspeed's conversation and started a fresh chat. We'll begin with a simple question and see how it performs (Figure 1).

A strong start: OpenShift Lightspeed provides the correct steps to create the namespace and apply the label as specified in the question. It even adds a final step to verify that the resource was created successfully. So here we have our first Correct 🟢 score.
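For readers who prefer a declarative view, the same result could be expressed as a manifest. This is a minimal sketch built only from the names in the question, not a copy of the response in Figure 1.

```yaml
# Namespace with the label requested in Question 1.
apiVersion: v1
kind: Namespace
metadata:
  name: workshop-support
  labels:
    category: support
```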

2. Granting cluster-admin roles to user groups

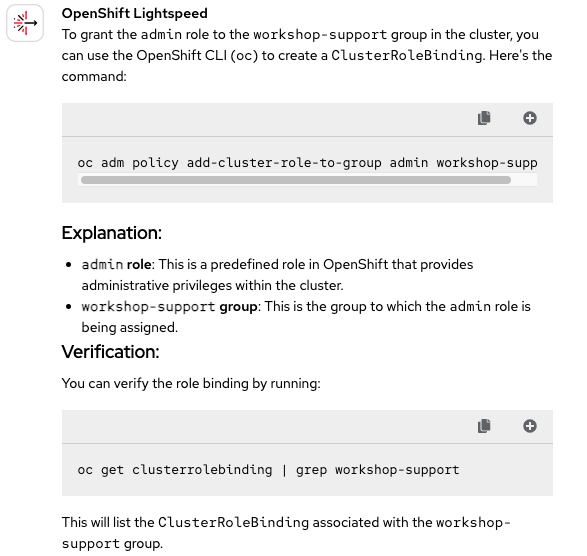

Question 2: Grant to the workshop-support group the admin role in the cluster.

Now we move on to a question related to permissions. We saw a similar one in the previous blog, and OpenShift Lightspeed answered it successfully. Let's see if it responds just as effectively this time (Figure 2).

No surprises here. OpenShift Lightspeed has no trouble with these kinds of questions. Once again, it earns a Correct 🟢 mark.
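As a rough illustration, a cluster-wide grant like this one can be written as a ClusterRoleBinding. The sketch assumes the question refers to the built-in admin cluster role. The binding name is hypothetical and is not taken from the response in Figure 2.

```yaml
# Binds the built-in "admin" ClusterRole to the workshop-support group
# across the whole cluster.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: workshop-support-admin   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: admin                    # assumption: the "admin role" in the question
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: workshop-support
```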

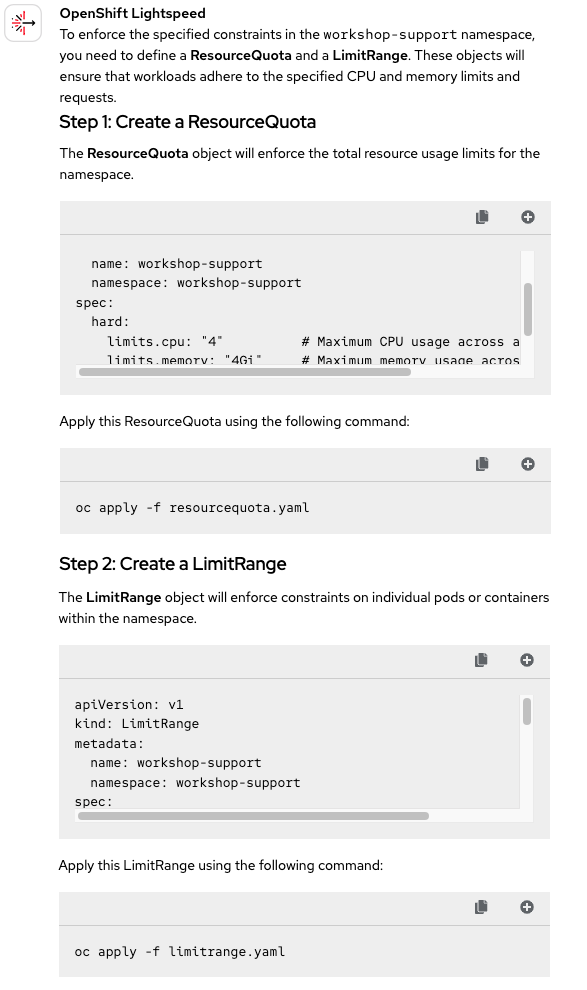

3. Enforcing resource quotas for namespaces

Question 3: Workloads from the workshop-support namespace must enforce the following constraints. Any quota or limit range must have the workshop-support name for grading purposes:

- The project uses up to 4 CPUs.

- The project uses up to 4 Gi of RAM.

- The project requests up to 3.5 CPUs.

- The project requests up to 3 Gi of RAM.

Now, let's review how well OpenShift Lightspeed enforces constraints within a namespace. In this case, we haven't attached any quota template to the question, so it will be a good opportunity to check whether it correctly identifies the required resource and configures it properly. See Figure 3.

Since we didn't attach any template to the query this time, OpenShift Lightspeed interpreted that the constraints could be enforced in two different ways: using ResourceQuotas or LimitRanges. In this case, we were specifically looking for the first option.

Looking at the YAML file provided in the answer, we can confirm that it correctly fills in the required fields with the specified values. It also uses the resource name mentioned in the prompt (workshop-support). On the other hand, the LimitRange suggested in the second step wasn't necessary, although it doesn't interfere with the exercise either. Because of that extra, unrequested step, we're assigning a Partially Correct 🟡 mark.
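To make the four constraints concrete, here is a sketch of a ResourceQuota that encodes them. It is our own reconstruction from the question, so the exact layout of the file in Figure 3 may differ.

```yaml
# Project-wide caps: "uses up to" maps to limits, "requests up to" maps to requests.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: workshop-support        # name required for grading
  namespace: workshop-support
spec:
  hard:
    limits.cpu: "4"
    limits.memory: 4Gi
    requests.cpu: 3500m         # 3.5 CPUs
    requests.memory: 3Gi
```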

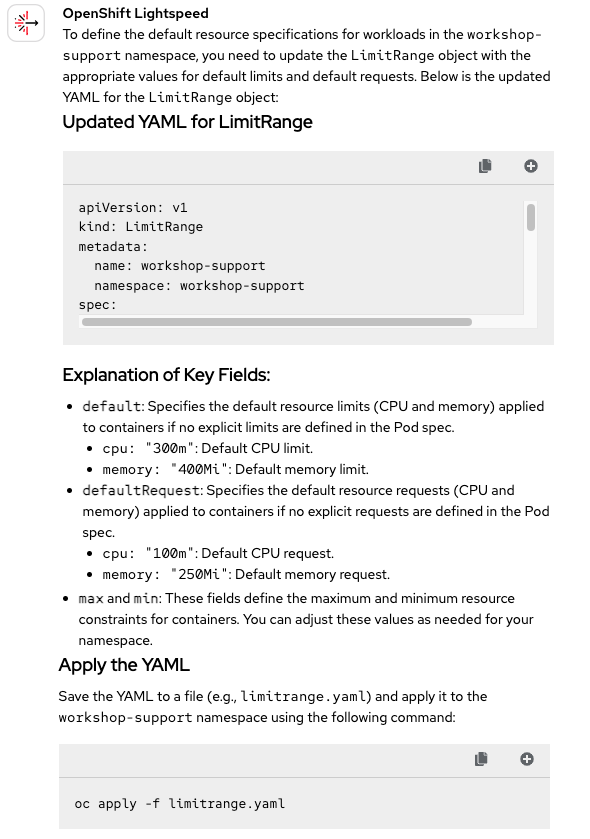

4. Setting default resource limits for workloads

Question 4: Define the default resource specification for workloads:

- A default limit of 300m CPUs.

- A default limit of 400 Mi of RAM.

- A default request of 100m CPUs.

- A default request of 250 Mi of RAM.

This time, we want to configure a LimitRange. And unlike the previous question, we do have a file we can attach to the query (limitrange2.yaml). As we saw in Part 1, OpenShift Lightspeed tends to perform well when attachments are provided together with the question. Let's see if the response shown in Figure 4 confirms that trend.

As expected, another correct response. OpenShift Lightspeed was able to modify the attached file to meet the requirements. It's true that, as we saw in the previous blog, OpenShift Lightspeed doesn't retain the original filename (limitrange2.yaml) when applying the resource. Instead, it generates its own name (limitrange.yaml). According to the evaluation guidelines we established at the beginning, this is considered a possible improvement, but from a technical standpoint the response is still Correct 🟢.
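A LimitRange matching these defaults could look roughly like the sketch below. The contents of limitrange2.yaml aren't reproduced in this post, so the structure here is an assumption. The resource name follows the grading rule stated in Question 3.

```yaml
# Default limits and requests applied to containers that don't declare their own.
apiVersion: v1
kind: LimitRange
metadata:
  name: workshop-support
  namespace: workshop-support
spec:
  limits:
  - type: Container
    default:              # default limits
      cpu: 300m
      memory: 400Mi
    defaultRequest:       # default requests
      cpu: 100m
      memory: 250Mi
```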

5. Deploying applications via CronJob

Question 5: As the do280-support user, deploy the project-cleaner application from the example-pod.yaml file to the workshop-support namespace by using a project-cleaner CronJob that runs every minute.

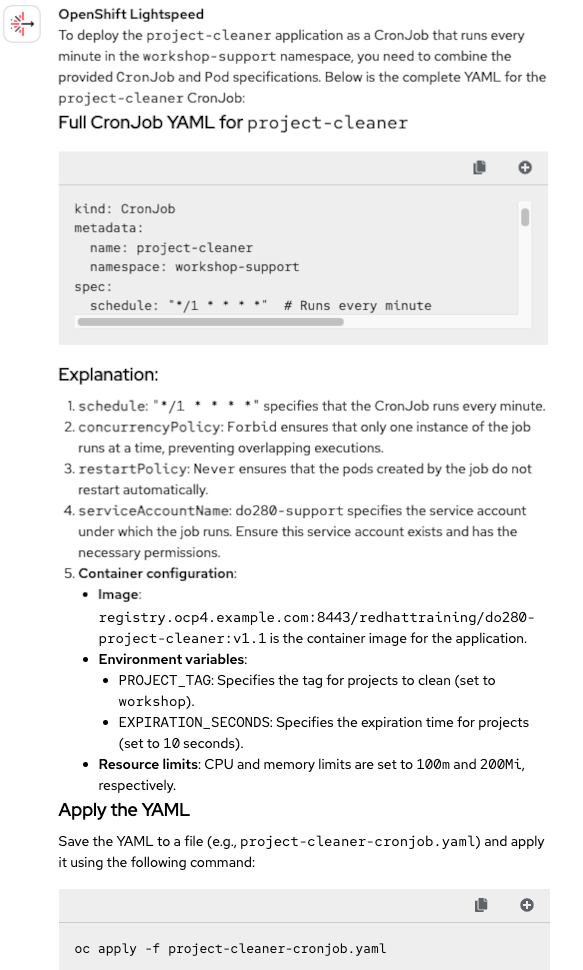

In Part 1, we saw that OpenShift Lightspeed is capable of providing the correct commands to deploy applications directly from a container image. However, this time the deployment will be done through a CronJob, making things a bit more complex. Let's see in Figure 5 how well it handles this scenario, attaching both the CronJob and the application to the question.

Wow! That's definitely impressive. The CronJob file was correctly modified to include the schedule specifications to run every minute. That alone is great, but even more remarkable is that OpenShift Lightspeed also managed to insert and adapt the application workload content into the CronJob definition so it can deploy the app as expected.

Although everything looks perfect, the solution doesn't yet work when applied. Why? This is not OpenShift Lightspeed's fault. The original template contained a serviceAccountName field that needed to be updated. However, we hadn't specified which service account should be used, so OpenShift Lightspeed simply picked a value of its own. This was a reasonable assumption given the lack of context. So how will we evaluate its work? Our AI has accurately solved the task in question, providing a clear and detailed explanation of how to implement a CronJob that runs the desired application. All in all, we won't take the service account detail into consideration, as we will be addressing it in the next question, and we mark this as a Correct 🟢 response!
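For orientation, the overall shape of such a CronJob is sketched below. The real pod specification comes from example-pod.yaml, which isn't reproduced here, so the container image is a placeholder. The service account is deliberately left out because the question doesn't name one.

```yaml
# Skeleton of a CronJob that runs the project-cleaner pod every minute.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: project-cleaner
  namespace: workshop-support
spec:
  schedule: "* * * * *"          # every minute
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
          - name: project-cleaner
            image: registry.example.com/project-cleaner:latest   # placeholder image
```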

6. Working with service accounts

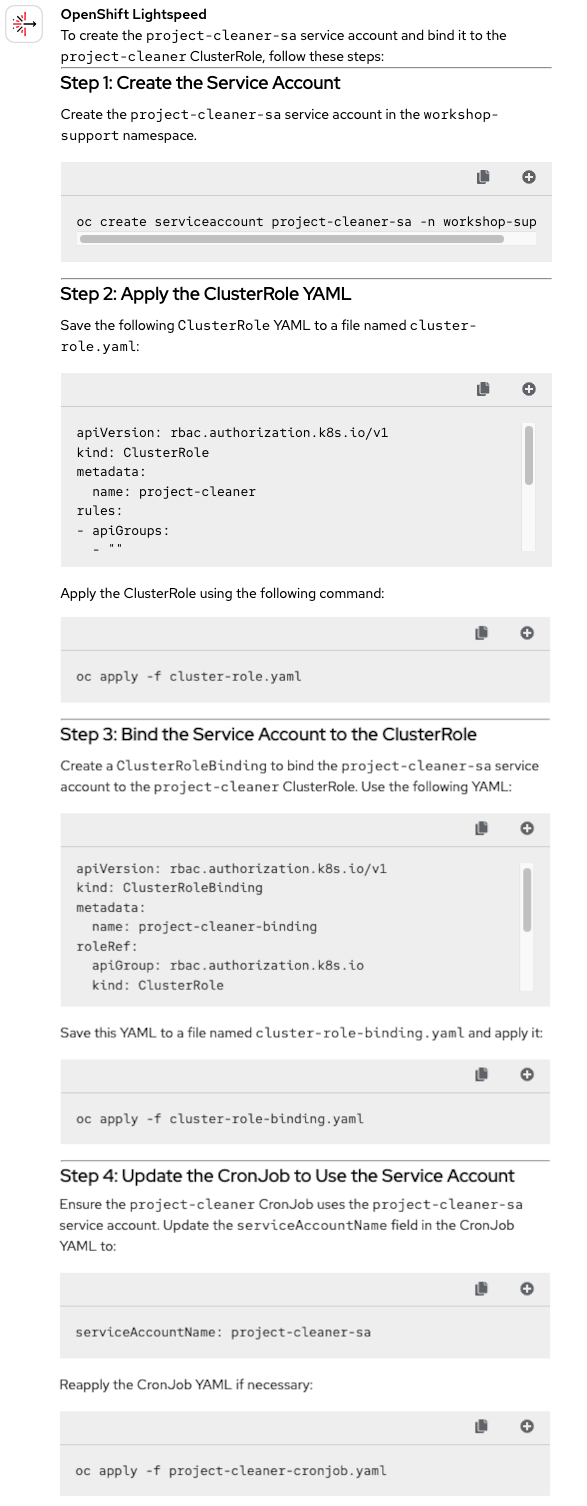

Question 6: You must create a project-cleaner-sa service account to use in the project cleaner application. The role that the project cleaner needs is defined in the cluster-role.yaml file.

This was the missing piece to our CronJob issue. This question asks us to create a service account and use it in the application. If we had asked this question earlier, it could have helped OpenShift Lightspeed apply the correct service account in the previous step. However, one of the requirements was to ask the questions exactly as they appear, and in the given order, and that's what we did.

That leads us to a very interesting scenario: will OpenShift Lightspeed be able to go back and reconfigure the CronJob without us telling it to do so explicitly? Let's attach the cluster-role.yaml file and find out. See Figure 6.

Every step described is spot-on, from creating the service account in the correct namespace, applying and binding the role, to updating the CronJob. Without us having to explicitly ask for it, OpenShift Lightspeed correctly understood that the service account value within the CronJob needed to be updated in order for the application to deploy properly. All thanks to staying in the same chat session and the context OpenShift Lightspeed retains. No doubt this time: another 100% Correct 🟢 answer.
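The pieces involved can be sketched as follows. The cluster role name and the binding name are hypothetical, since cluster-role.yaml isn't shown in this post. Use the name defined in that file.

```yaml
# Service account used by the project-cleaner CronJob.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: project-cleaner-sa
  namespace: workshop-support
---
# Grants the cluster role from cluster-role.yaml to the service account.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: project-cleaner-binding   # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: project-cleaner           # hypothetical; take the name from cluster-role.yaml
subjects:
- kind: ServiceAccount
  name: project-cleaner-sa
  namespace: workshop-support
```

In the CronJob, the matching change is setting serviceAccountName: project-cleaner-sa in the pod spec under spec.jobTemplate.spec.template.spec.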

7. Deploying database resources in the cluster

Question 7: Deploy the beeper-db database in the beeper-db.yaml file to the workshop-support namespace.

We have already seen that OpenShift Lightspeed has no trouble creating resources in the cluster when we provide the corresponding YAML file. Let's try once again by attaching the resource for the database. See Figure 7.

Given that OpenShift Lightspeed has already answered more complex questions accurately, it's no surprise that it handled this simpler one with ease. In fact, in the second step, it even reminds us to switch to the workshop-support namespace, clearly showing it understands this namespace was already created earlier. Still, it provides a command to create it just in case. Finally, it deploys the database in the namespace without any issues. All Correct 🟢 once again.

8. Deploying application workloads in namespaces

Question 8: Deploy the beeper-api application in the deployment.yaml file to the workshop-support namespace.

This question is exactly the same as the previous one, with the only difference being the attached file, which is now deployment.yaml. Therefore, we can expect it to be able to create the resource correctly, but let's see how it goes (Figure 8).

As we can see, this response is quite similar to the previous one. The only difference is the type of resource being applied. Not much more to add here: the answer is spot on. That makes it 7 Correct 🟢 marks so far!

9. Configuring TLS encryption

Question 9: You must configure this application to use TLS end-to-end encryption by using the following specification:

- Use the beeper-api.pem certificate and the beeper-api.key in the certs directory.

- Configure the /etc/pki/beeper-api/ path as the mount point for the certificate and key.

- Set the TLS_ENABLED environment variable to the true value.

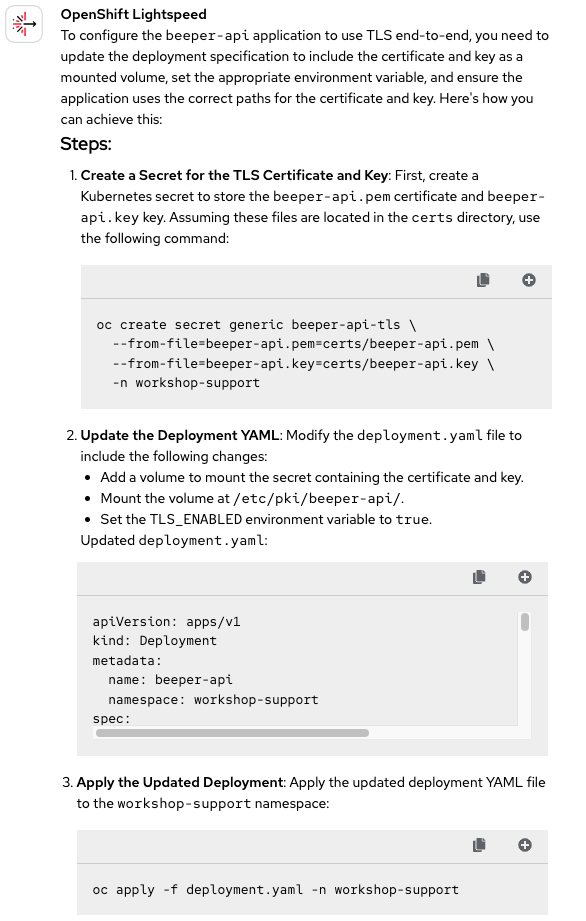

Here, we continue configuring the networking for our application (Figure 9). This type of question hasn't come up in either of the two blogs yet. OpenShift Lightspeed will now need to understand and handle certificates in order to configure TLS traffic. This time, we won't include any attachment in the query.

The response includes several aspects to review, so let's break down the analysis step by step. First, the task required creating a secret to configure TLS. While the provided command does inject the certificate and key into a secret, the secret is created as a generic type when it should have been of type tls.

One thing it does get right is recognizing that the deployment also needs to be modified to configure the mount point. It's surprising that although this task was the most complex to infer, OpenShift Lightspeed handled it with no problem, and a seemingly simpler task like the one mentioned above ended up being incorrect. Here we can see how the path is mounted:

    volumeMounts:
    - name: beeper-api-tls
      mountPath: /etc/pki/beeper-api/ # Mount point for the certificate and the key
      readOnly: true
    volumes:
    - name: beeper-api-tls
      secret:
        secretName: beeper-api-tls # Secret that contains the certificate and the key

The third requirement was to set a variable to true, and this was done correctly as we can see here:

    env:
    - name: TLS_ENABLED
      value: "true" # Enable TLS

However, there was one final step OpenShift Lightspeed forgot to complete: configuring TLS for the startup, readiness, and liveness probes by changing scheme: HTTP to scheme: HTTPS. For this reason, and everything mentioned above, we can't consider the answer accurate, as it didn't achieve the intended result. Therefore, we'll have to mark it as Incorrect 🔴.
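To make the two gaps concrete, here is a sketch of what we would have expected instead: a secret of type kubernetes.io/tls and probes that use HTTPS. The data values are placeholders, and the probe path and port are assumptions for illustration (the port is borrowed from Question 12).

```yaml
# TLS-typed secret holding the certificate and key.
apiVersion: v1
kind: Secret
metadata:
  name: beeper-api-tls
  namespace: workshop-support
type: kubernetes.io/tls
data:
  tls.crt: "<base64 of certs/beeper-api.pem>"   # placeholder
  tls.key: "<base64 of certs/beeper-api.key>"   # placeholder
---
# Excerpt only (not a complete manifest): probe settings inside the
# beeper-api container spec. The same change applies to the startup
# and liveness probes.
readinessProbe:
  httpGet:
    path: /readyz      # hypothetical path
    port: 8080         # assumed port
    scheme: HTTPS
```

Note that a kubernetes.io/tls secret exposes its contents under the fixed keys tls.crt and tls.key, so those are the file names that appear under the mount point.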

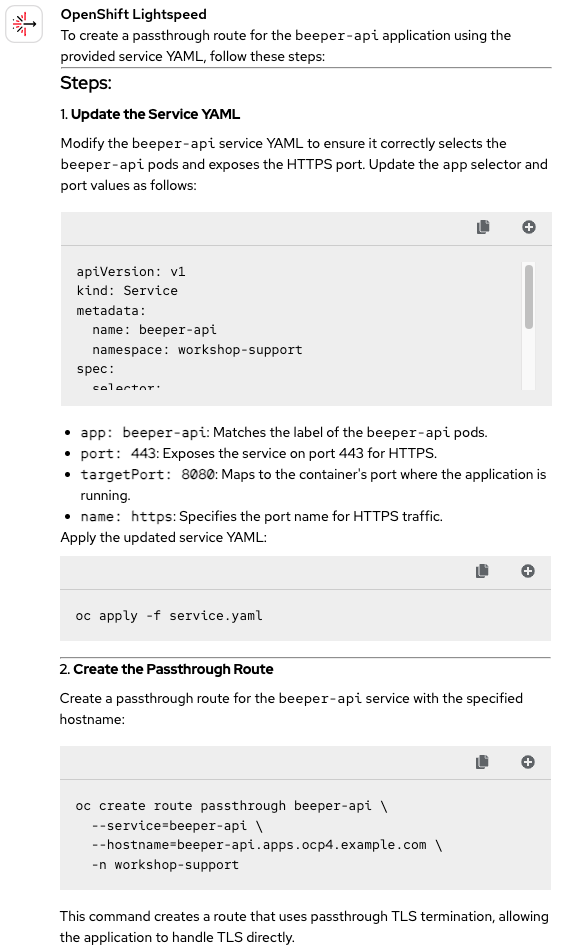

10. Configuring networking for external traffic

Question 10: Create a passthrough route with the hostname beeper-api.apps.ocp4.example.com, and use the URL beeper-api.apps.ocp4.example.com/api/beeps with the CA certificate to verify the beeper API is accessible from outside the cluster.

Let's move on from that mistake by asking OpenShift Lightspeed a new question (though we're still staying in the networking area). This time, we'll see if OpenShift Lightspeed is able to create the route as expected. To do this, we're going to attach the service.yaml file to the query so it can make proper use of it. See Figure 10.

Well, we're back on track. This response is perfect. OpenShift Lightspeed correctly modified the service as expected and then deployed it. In step two, it used that service to create a passthrough route with the specified URL. No complaints about the process, so this one definitely earns another Correct 🟢 mark.
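Expressed as a manifest, a passthrough route for this hostname might look like the sketch below. The route and service names are assumptions, since service.yaml isn't reproduced in this post.

```yaml
# Passthrough route: TLS is terminated by the application pod, not the router.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: beeper-api                # assumed route name
  namespace: workshop-support
spec:
  host: beeper-api.apps.ocp4.example.com
  to:
    kind: Service
    name: beeper-api              # assumed service name from service.yaml
  tls:
    termination: passthrough
```

If the service exposes more than one port, the route would also need a spec.port.targetPort entry to select the right one.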

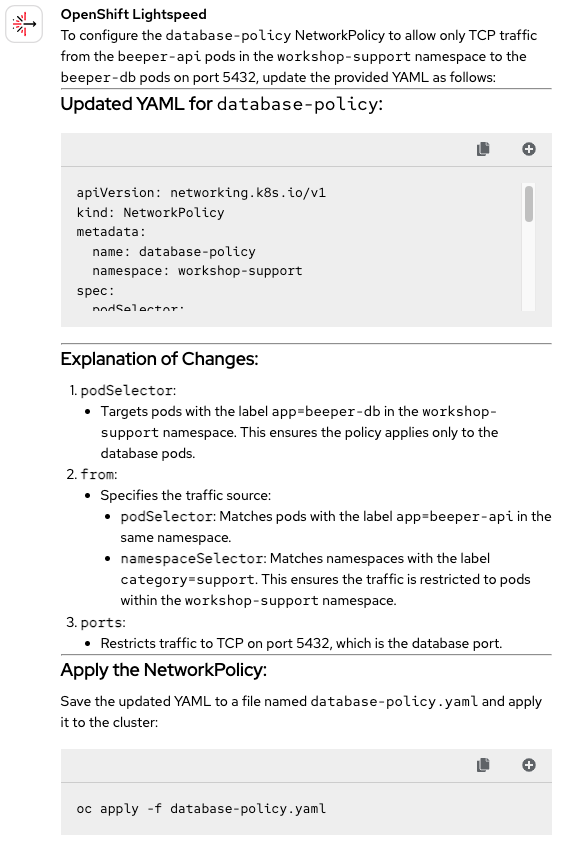

11. Implementing network policies to restrict traffic

Question 11: The database pods, which are pods in the workshop-support namespace with the app=beeper-db label, must accept only TCP traffic from the beeper-api pods in the workshop-support namespace on the 5432 port. You can use the category=support label to identify the pods that belong to the workshop-support namespace.

We've reached what was OpenShift Lightspeed's Achilles' heel in the previous blog: configuring network policies to restrict traffic. To give it a hand this time, we're attaching the db-networkpolicy.yaml file to the query (Figure 11). Fingers crossed!

What a surprise! This time, it managed to solve it without any issues. Let's try to analyze why.

Compared to question 8 from Part 1, this new question is much clearer and more concise, whereas the earlier one mixed several bullet points in the prompt. Additionally, in this new question, we restrict traffic using labels on both the pods and the namespace, whereas in question 8 of Part 1, the restriction applied to all pods in the namespace. Overall, the combination of a clearer question and a simpler scenario allows us to assign OpenShift Lightspeed another Correct 🟢 score.
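A policy meeting these requirements could be sketched as follows. The policy name and the app=beeper-api pod label are assumptions, because db-networkpolicy.yaml isn't shown here. Placing namespaceSelector and podSelector in the same from entry means both conditions must match.

```yaml
# Only beeper-api pods in namespaces labeled category=support may reach
# the database pods, and only on TCP port 5432.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: database-policy           # hypothetical name
  namespace: workshop-support
spec:
  podSelector:
    matchLabels:
      app: beeper-db
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          category: support
      podSelector:
        matchLabels:
          app: beeper-api         # assumed label on the API pods
    ports:
    - protocol: TCP
      port: 5432
```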

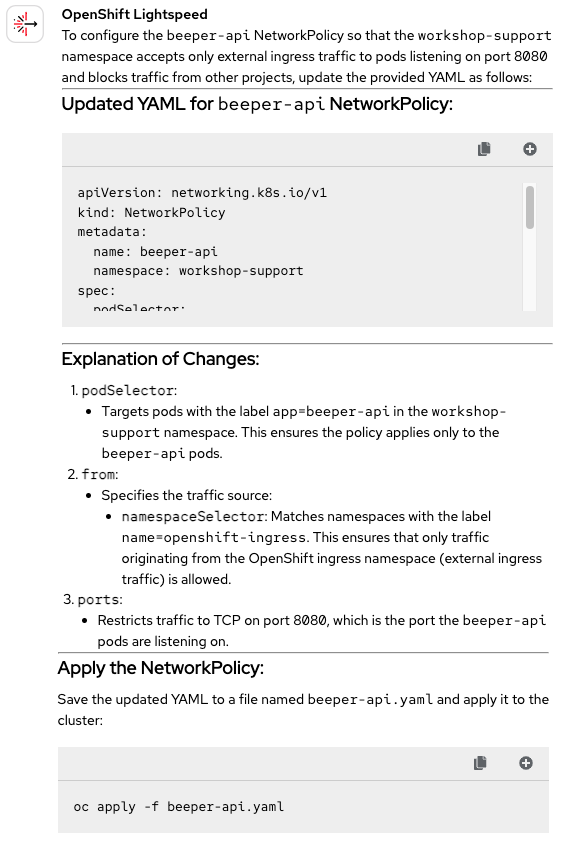

12. Configuring cluster network ingress restrictions

Question 12: Configure the cluster network so that the workshop-support namespace accepts only external ingress traffic to pods that listen on the 8080 port, and blocks traffic from other projects.

And with this, we reach the final question. We're still working on networking, one of the topics that tends to challenge OpenShift Lightspeed the most. This time, it needs to restrict traffic from other projects, while allowing external traffic to the pods' port 8080. As before, the beeper-api-ingresspolicy.yaml template will be attached to the query. Let’s see if the response shown in Figure 12 is accurate.

This time, it didn't quite work out. It seems like OpenShift Lightspeed got a bit confused when trying to restrict traffic. We were expecting this new policy to apply to every pod in the namespace using podSelector: {}. Instead, it targeted only the pods with the beeper-api label, which is something that wasn't mentioned in the prompt. It looks like it pulled context from the previous question and applied it to this one.
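For comparison, the kind of policy we had in mind is sketched below. The policy name is taken from the template's filename as an assumption. The namespace label used to identify the ingress controller is also an assumption that depends on how the cluster's ingress namespaces are labeled.

```yaml
# Applies to every pod in the namespace and admits only traffic coming
# through the cluster ingress, on TCP port 8080.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: beeper-api-ingresspolicy  # assumed from the template filename
  namespace: workshop-support
spec:
  podSelector: {}                 # all pods in workshop-support
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          network.openshift.io/policy-group: ingress   # assumed ingress label
    ports:
    - protocol: TCP
      port: 8080
```

Because the only allowed source is the ingress namespace, traffic from other projects is dropped without needing an explicit deny rule.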

It would've been nice to end on a high note, but that's how it goes. In the end, we'll have to mark this one as Incorrect 🔴. Now the big question is: can OpenShift Lightspeed still pass the exam despite these last-minute setbacks? Let's find out in the final conclusions.

Final conclusions

Here we come to the end of our investigation. In these two blogs, we tested how well OpenShift Lightspeed performs when answering OpenShift-related questions, simulating how a regular user might approach the Red Hat exams. The two selected exercises have helped us cover most of the exam topics and have given us a good sense of how much OpenShift knowledge OpenShift Lightspeed actually has.

As we have seen, OpenShift Lightspeed is capable of answering the vast majority of questions, regardless of the resource and area involved. However, as expected, the more complex and less concise a question is, the harder it becomes to provide a 100% accurate response. OpenShift Lightspeed has no trouble handling users, permissions, namespaces, applications, limits, and quotas. However, other tasks such as working with templates and configuring networking can present more challenges.

We've also observed the importance of context. By keeping the same chat session throughout the exercise, OpenShift Lightspeed retains previous information and is able to recall earlier requirements, adapting them to meet new needs. Additionally, we've noticed that attaching the YAML to the query yields better results, as otherwise, OpenShift Lightspeed tends to generate more generic files.

Does OpenShift Lightspeed pass the test?

We've seen that OpenShift Lightspeed is quite good at solving OpenShift tasks, but there's probably one question still on your mind: Was it good enough for OpenShift Lightspeed to pass our test? Let's build a table with the results from both exercises to find out.

| | Incorrect 🔴 | Partially Correct 🟡 | Correct 🟢 |
| --- | --- | --- | --- |
| Exercise 1 | 1 | 3 | 6 |
| Exercise 2 | 2 | 1 | 9 |

And now, the final result: As we saw in the first blog, the average score obtained was 75%, which was already enough to pass the exercise. For this second blog, the average score comes out to 79%: nine Correct answers at 100%, one Partially Correct at 50%, and two Incorrect at 0%, averaged over 12 questions. This means both exercises were successfully completed, and combining the two results, we get an overall average of 77%.

Of course, while OpenShift Lightspeed's performance is impressive, it's important to remember that knowledge checks, like an exam, are not equivalent to operating successfully in complex, real-world production environments. Red Hat Certified Professionals offer critical expertise, practical experience, and the contextual judgment needed to apply AI-driven suggestions safely and effectively. OpenShift Lightspeed complements the skills of an expert, helping accelerate delivery, reduce toil, and improve consistency while maintaining the high standards required for enterprise-grade operations.

So to conclude this blog investigation, let's go back to the question it all started with: Can AI master OpenShift? We've seen OpenShift Lightspeed tackle a range of OpenShift administrative tasks, covering topics such as authentication and authorization, networking, and application security. And it has exceeded our expectations, achieving a passing score of over 70%!

Now it's your turn to bring OpenShift Lightspeed into your day-to-day OpenShift operations. Whether you are managing clusters or deploying applications, Red Hat OpenShift Lightspeed is ready to support you, helping you work more efficiently and boosting your confidence in complex environments. If you'd like to learn more, meet with us to plan next steps, or schedule a demo, just let us know!