The evolution of large language models (LLMs) is reaching a pivotal point: the ability to reason. This breakthrough in AI allows models to dissect complex challenges, understand nuanced contexts, and deliver more insightful conclusions. The recent emergence of open source reasoning models like DeepSeek-R1, demonstrating capabilities comparable to proprietary offerings, signals a significant opportunity for enterprises seeking to leverage advanced AI without being locked into closed ecosystems.

But what sets reasoning models apart?

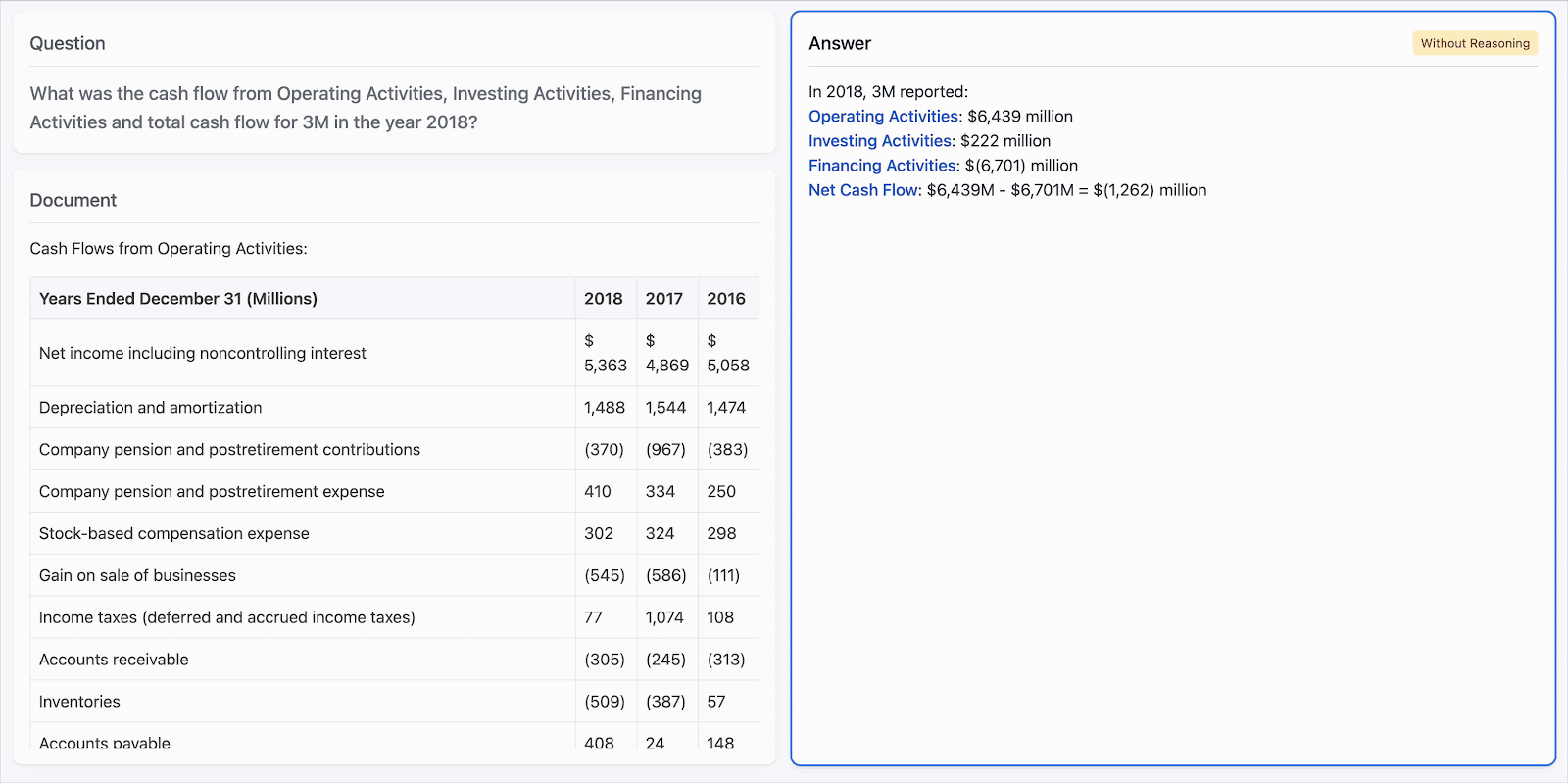

Unlike traditional LLMs that rely on surface-level pattern matching, reasoning models can decompose complex queries into logical steps, interpret intent, and synthesize answers grounded in multiple pieces of information. As Figure 1 shows, non-reasoning models often falter when asked to process intricate business questions across large knowledge bases. Reasoning models, by contrast, are better equipped to handle such complexity with structured thinking and multi-step inference.

Today, reasoning models are becoming the decision-making core of enterprise AI agents—orchestrating tools, coordinating subtasks, and delivering coherent, context-aware outputs. However, the best reasoning models are restricted by API paywalls, making it costly and impractical to scale them across enterprise workflows. This reliance on closed systems contrasts with the open source ethos that drives innovation and accessibility in enterprise technology.

Entrusting proprietary knowledge to external, black-box APIs introduces significant compliance, security, and data sovereignty risks for enterprises. Therefore, the ability to adapt reasoning models to internal data and specific business contexts is not merely an advantage, but a fundamental strategic imperative for organizations aiming to maintain control and foster innovation.

In this blog, we’ll discuss how to make reasoning models enterprise-ready using synthetic data generation (SDG) and fine-tuning. You’ll learn how to convert your proprietary data into structured training examples and use them to build private, domain-specific reasoning engines tailored to your business needs.

Our approach to designing effective reasoning models

Customizing LLMs for domain-specific reasoning begins with the right training data.

While LLMs are capable of general reasoning, the real value emerges when they’re customized to reason over your business data. Imagine a model that can identify root causes of material shortages or production yield issues for your supply chain managers, or one that helps CPAs spot tax discrepancies by analyzing financial documents.

Red Hat AI offers powerful SDG capabilities that transform unstructured enterprise data into high-quality synthetic datasets using advanced agentic pipelines. These pipelines leverage LLMs to generate samples based on user-provided seed examples, then iteratively validate, refine, and filter the knowledge to ensure quality.

With these tailored datasets, Red Hat AI enables you to create custom models designed to capture an organization's unique context. The result: business users can interact with your internal knowledge directly through natural language, asking questions and receiving grounded, domain-specific answers.

Let's now outline a structured approach to customizing reasoning models.

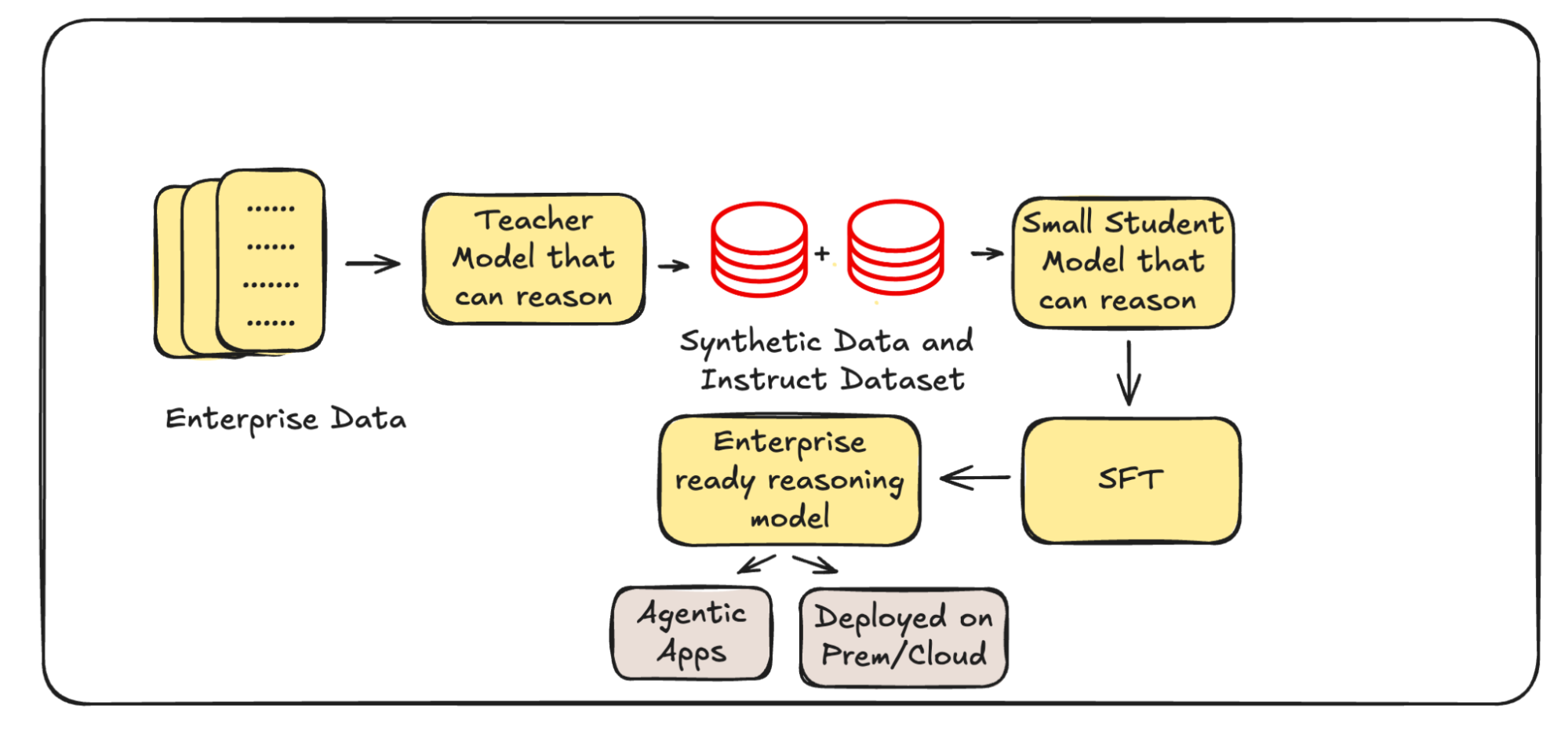

We start with a powerful teacher model and a student model with general reasoning ability. Using the customization process shown in Figure 2, the student is fine-tuned on enterprise-specific data, making it ready for real-world deployment. The resulting model can power agentic applications and be deployed on-premise or in the cloud, aligned with your organization’s security and compliance needs.

To demonstrate the power of enterprise reasoning models, let’s take a look at a few examples.

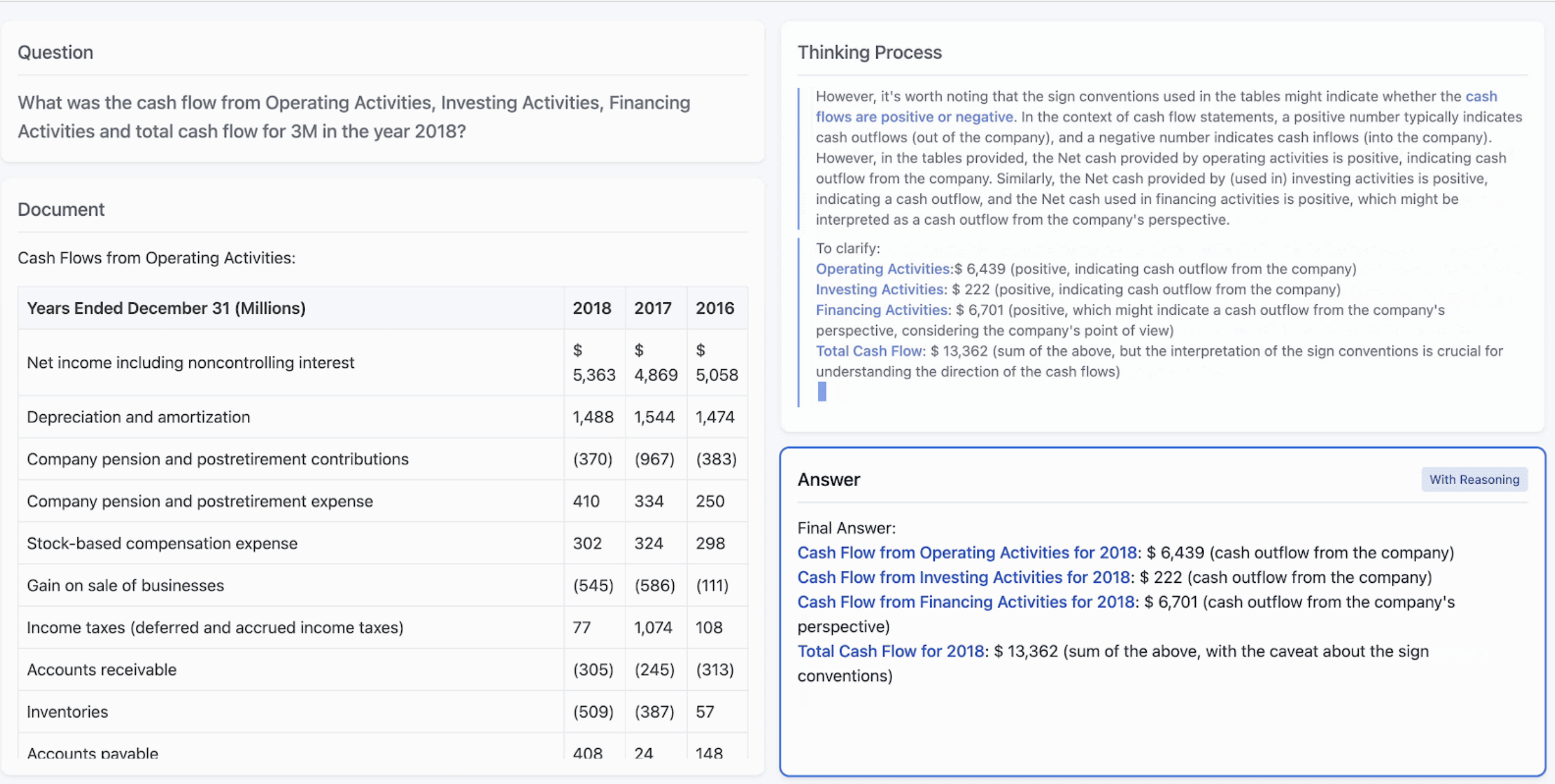

Let's first try an off-the-shelf reasoning model with a query from Figure 1. As shown in Figure 3, the model demonstrates some reasoning ability but fails to accurately interpret and reason over the document (the correct answer being -40 million).

Next, we use our agentic SDG reasoning pipeline from SDG-HUB to generate synthetic reasoning data.

Here is an example of generated data:

### Document

# 3M 2018 SEC Filings Form-10K

## PERFORMANCE BY BUSINESS SEG MENT

For a detailed discussion of the markets served and types of products offered by 3M's business segments, see Item 1, Business Segments. Financial information and other disclosures are provided in the Notes to the Consolidated Financial Statements. Effective in the first quarter of 2018, as part of 3M's continuing effort to improve the alignment of its businesses around markets and customers, the Company made the following changes:

## Consolidation of customer account activity within international countries - expanding dual credit reporting

The Company consolidated its customer account activity in each country into centralized sales districts for certain countries that make up approximately 70 percent of 3M's 2017 international net sales. Expansion of these initiatives, which previously had been deployed only in the U.S., reduces the complexity for customers when interacting with multiple 3M businesses. 3M business segment reporting measures include dual credit to business segments for certain sales and related operating income. This dual credit is based on which business segment provides customer account activity with respect to a particular product sold in a specific country. The expansion of alignment of customer accounts within additional countries increased the attribution of dual credit across 3M's business segments. Additionally, certain sales and operating income results for electronic bonding product lines that were previously equally divided between the Electronics and Energy business segment and the Industrial business segment are now reported similarly to dual credit.

### Generated Summary Instruction

**Employee Update**: Describe organizational changes in customer account management for internal stakeholders.

### Generated Document Summary

**Employee Update: Organizational Changes in Customer Account Management**

As part of 3M’s efforts to align businesses around markets and customers, effective Q1 2018, the company implemented two key changes in customer account management for international operations:

1. **Consolidation of Customer Account Activity**:

- **Scope**: Centralized sales districts were established in countries accounting for **~70% of 3M’s 2017 international net sales**.

- **Purpose**: Reduce complexity for customers interacting with multiple 3M businesses by streamlining account management within each country (previously only deployed in the U.S.).

2. **Expansion of Dual Credit Reporting**:

- **Mechanism**: Dual credit is assigned to business segments for sales and operating income based on which segment manages the **customer account activity** for a product in a specific country.

- **Impact**: The international consolidation increased dual credit attribution across segments.

- **Electronic Bonding Product Lines**: Previously split equally between the **Electronics and Energy** and **Industrial** segments, these are now reported using the dual credit methodology (aligned with country-based account responsibility).

**Key Data Points**:

- **Effective Date**: Q1 2018.

- **International Sales Coverage**: ~70% of 2017 international net sales impacted.

- **Product Adjustment**: Electronic bonding lines shifted from equal division to dual credit alignment.

These changes enhance market alignment, simplify customer interactions, and refine segment reporting accuracy.

---

*Note: The document contains no tables, so all relevant textual data is summarized above.*

### Question

What percentage of 3M’s 2017 international net sales were covered by the centralized sales districts established in 2018?

### Generated Answer

<think>

Okay, let's tackle this question. The user is asking about the percentage of 3M's 2017 international net sales that were covered by the centralized sales districts established in 2018.

First, I need to look back at the provided document. The relevant section is under "1. Consolidation of Customer Account Activity". The document states that the centralized sales districts were established in countries accounting for "~70% of 3M’s 2017 international net sales".

So the key phrase here is "70% of 2017 international net sales". The question is directly asking for this percentage. I should make sure there's no other detail that might contradict or add to this. The rest of the document talks about the purpose and other changes like dual credit reporting, but the percentage mentioned is clearly 70%.

I need to ensure the answer is exactly as per the document without adding any extra information. The format requires the answer in a box and concise. The example answers were straightforward, just stating the numbers and source. So the answer should be 70% as stated.

</think>

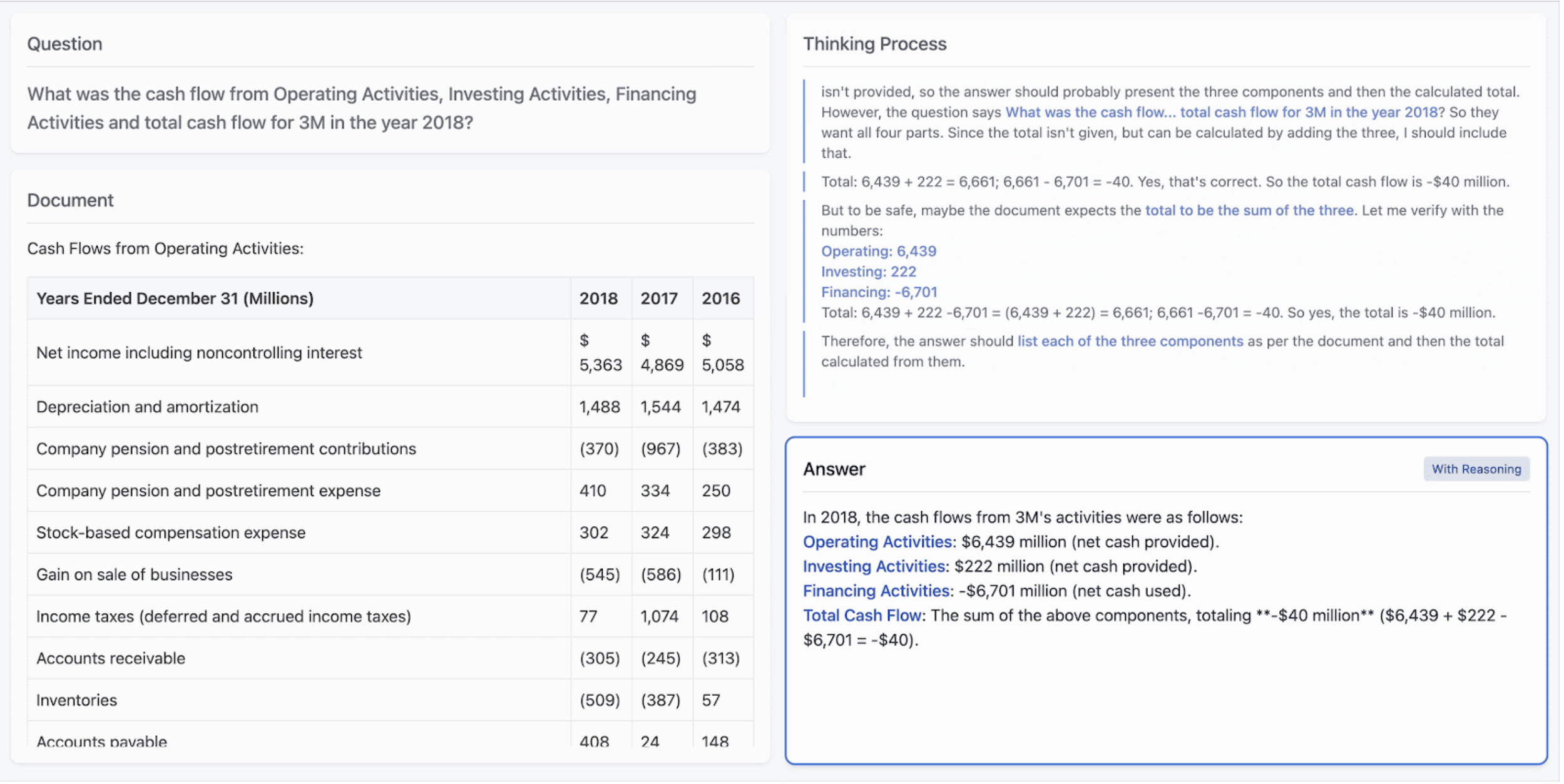

The centralized sales districts covered approximately **70% of 3M’s 2017 international net sales**.With new data we fine-tune our student model. Now, when we ask the same question (Figure 4), the model is able to gather relevant information, reason on it, and give the correct numerical answer.

So how do we customize the reasoning model?

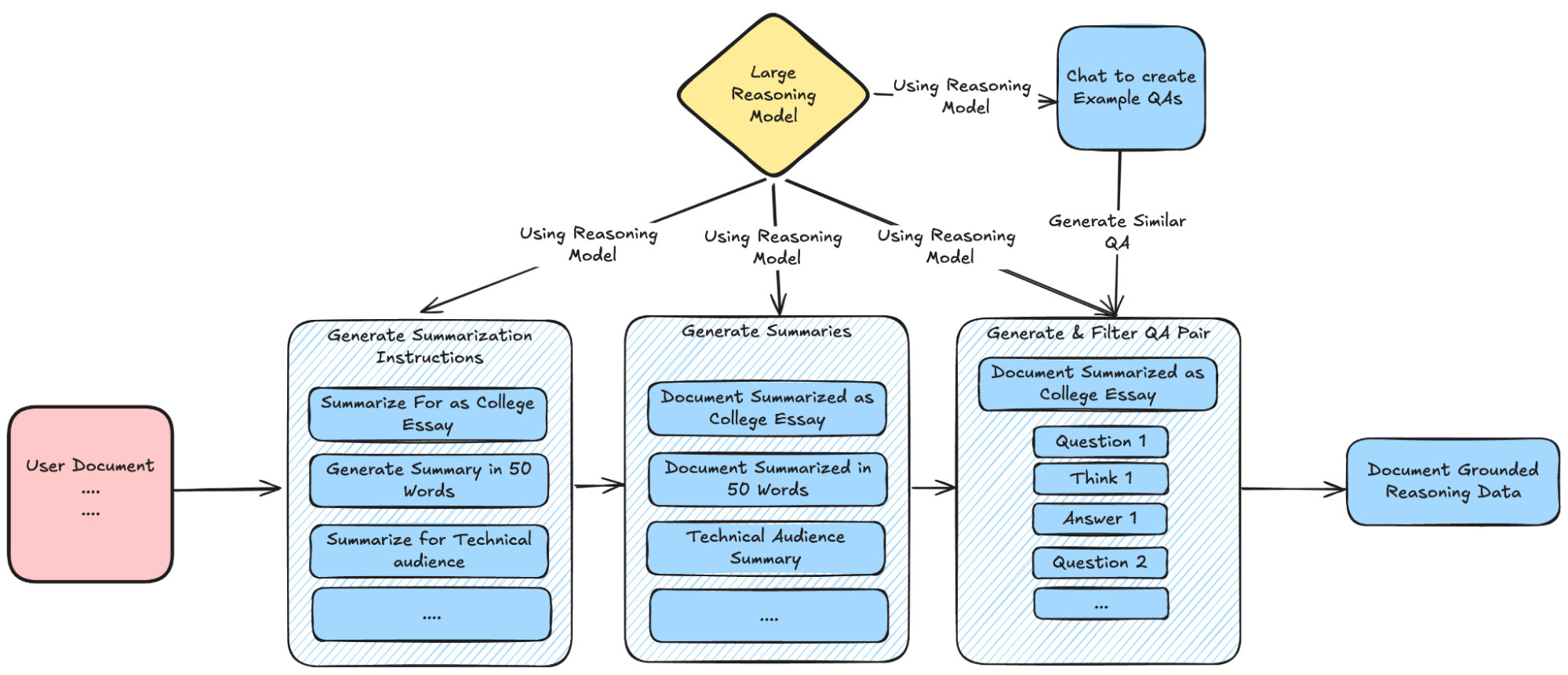

Figure 5 illustrates the end-to-end workflow for customizing reasoning models using your own data.

We begin by crafting a sample question-and-answer pair that the SDG library uses as a seed to generate similar examples. For instance, given a news article, the user might want to train a model to answer questions grounded in that specific article.

Users can provide example Q&A pairs that focus on specific facts within the article, address broader themes or context, and require reasoning across multiple sections of the document.

These examples, also known as in-context learning (ICL) examples, are then used to trigger an agentic pipeline for data generation. Each block can either invoke the teacher model to generate new samples or apply dataset transformations, such as post-processing thinking tokens, flattening nested columns, or parsing text using regex,

The LLM inference blocks use custom prompts to generate document augmentations, synthesize Q&A pairs, and assess data quality.

Figure 6 provides a detailed view of the reasoning agentic pipeline in action. The process begins with the reasoning model generating summarization instructions for a given document. It then produces summaries based on those instructions, followed by the generation of <question>, <thinking logic>, and <answer> triples for each summary.

In the sdg_hub examples, we demonstrate synthetic question-answer data generation with a third-party teacher model, llama-3.3-nemotron-super 49B. The generated data is designed to improve both the reading comprehension and reasoning abilities of a smaller student model, nematron nano, on a benchmark dataset of high-quality articles.

The reasoning data generated from user documents is then mixed with the student model reasoning data as shown in Figure 7. We use the InstructLab training library to fine-tune the model on the mixed data.

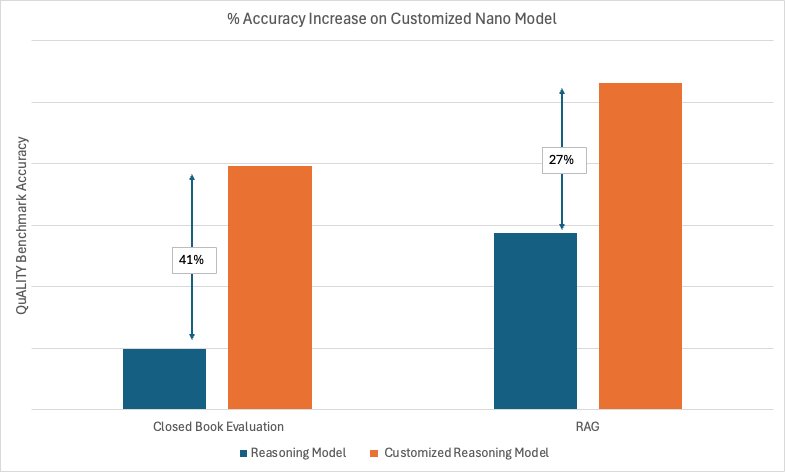

The trained model is evaluated in two settings:

- Closed book evaluation: Evaluate model’s knowledge recall by prompting it only with the user question.

- Retrieval-augmented generation (RAG): Evaluate by using a RAG system to find most relevant chunks and prompting the model with the context and question.

Note that official support for reasoning pipelines in Red Hat AI products is upcoming and the reasoning example mentioned above is part of the upstream open source project.

Output for SDG reasoning versus non-reasoning pipeline

Let's examine the distinct output formats between these approaches.

Understanding SDG output of a reasoning agentic pipeline

In a non-reasoning SDG pipeline, the output follows this format: <context/document,question, answer>. In a reasoning pipeline, the teacher model's reasoning capabilities ensure the SDG output adheres to <context/document, question, thinking logic, final answer>.

The model’s thinking is also part of the model response, as shown in Figure 7.

Improvements in model performance on quality benchmark

In Figure 8, we can observe model evaluation accuracy results in closed book and RAG settings. In both the settings, the customized nano model outperforms the base model by as large as ~27%.

The results reinforce the premise that customization on domain-specific reasoning data can enhance the performance of reasoning models. Hence, your unique business use case benefits from enhanced capabilities to process complex queries.

To approach influencing reasoning models, one needs to see how synthetic data pipelines can be tweaked for experimentation. Take a look at the Red Hat AI SDG hub for tools to help build and customize pipelines tailored to your specific business needs. You can also find example notebooks and contribute new use cases and pipelines.