We introduced OperatorPolicy as a tech preview feature in Red Hat Advanced Cluster Management for Kubernetes (RHACM) version 2.10 to improve the management of Kubernetes-native applications packaged with the Operator Lifecycle Manager (OLM) across a fleet of clusters. With the RHACM 2.11 release, this feature is now ready for you to try, with improvements in the following scenarios:

- Getting started: you have fewer required fields, lowering your barrier to entry.

- Controlling upgrades: the initial installation is handled separately from later upgrades, and you can restrict either one to specific versions of the operator.

- Alert control: you can configure which situations are reported as violations and which are only informational, to suit your specific needs.

- Uninstallation: you have new tools to ensure that installations are fully removed.

This release includes additional OperatorPolicy features that this article does not cover, such as support for templates in fields; you can find them in our official documentation. We still plan some further improvements to the feature, but we feel it is ready for real-world use. If you try it out and have suggestions or find issues, we welcome your feedback to help us make it more useful for everyone.

Making it easier for you

Subscriptions have many required fields, letting you specify exactly what you want to deploy into the cluster. However, that precision can be annoying when you need to look up multiple details just to get started, and some of those specifics can vary between clusters in a fleet. OperatorPolicy can now fill in these details for you. For details about applying a Policy on a cluster with Placement, refer to our other posts.

The following example deploys a specific version of the Red Hat OpenShift AI operator, without specifying the channel or catalog source:

```yaml
apiVersion: policy.open-cluster-management.io/v1beta1
kind: OperatorPolicy
metadata:
  name: example-rhods-operator
spec:
  remediationAction: enforce
  severity: medium
  complianceType: musthave
  subscription:
    name: rhods-operator
    startingCSV: rhods-operator.2.8.0
  upgradeApproval: Automatic
  versions:
    - rhods-operator.2.8.0
```

The policy controller on the managed cluster looks up the specified operator's default channel, suggested namespace, and catalog source, and uses those details to create the Subscription that OLM acts on. Each of those details is optional, but you can specify any of them in the policy if you have specific requirements. In this example, the optional startingCSV and versions fields are set, so the controller installs that specific version and does not upgrade beyond it.
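If you do have specific requirements, you can fill in those details yourself. The following sketch sets them explicitly. The field names mirror the OLM Subscription spec (channel, source, sourceNamespace) plus a namespace for the operator, and every value shown is an assumption to replace with what your catalog actually provides. In particular, the channel you choose should contain the startingCSV you request.

```yaml
apiVersion: policy.open-cluster-management.io/v1beta1
kind: OperatorPolicy
metadata:
  name: example-rhods-operator
spec:
  remediationAction: enforce
  severity: medium
  complianceType: musthave
  subscription:
    name: rhods-operator
    # Optional overrides; all values below are illustrative assumptions.
    channel: stable                          # hypothetical channel name
    namespace: redhat-ods-operator           # assumed install namespace
    source: redhat-operators                 # assumed catalog source
    sourceNamespace: openshift-marketplace   # assumed catalog source namespace
    startingCSV: rhods-operator.2.8.0
  upgradeApproval: Automatic
  versions:
    - rhods-operator.2.8.0
```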

The required upgradeApproval field can be set to either None or Automatic, and controls whether the controller automatically approves upgrades for the operator. Only versions listed in the policy are approved; if the versions field is not specified, any version of the operator is allowed. Note that the initial version of the operator is installed as long as the versions field allows it, regardless of the upgradeApproval setting: this setting affects only upgrades.
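As a sketch of that behavior, the following policy omits versions and sets upgradeApproval to None. Going by the rules above, the controller would still perform the initial installation, since no versions list rules it out, but it would not approve any later upgrade. The policy name is hypothetical.

```yaml
apiVersion: policy.open-cluster-management.io/v1beta1
kind: OperatorPolicy
metadata:
  name: example-rhods-operator-no-upgrades   # hypothetical name
spec:
  remediationAction: enforce
  severity: medium
  complianceType: musthave
  subscription:
    name: rhods-operator
  # The initial installation still happens; later upgrades are not approved.
  upgradeApproval: None
  # versions is omitted, so any version of the operator is allowed.
```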

The upgradeApproval and versions fields are how OperatorPolicy manages operator versions, and they are our solution to some drawbacks we found with the installPlanApproval field available in Subscriptions. Because of the way the controller must interact with OLM in order to approve specific versions, installPlanApproval is the only field that you cannot set in the subscription section of an OperatorPolicy.

Viewing what is on the cluster

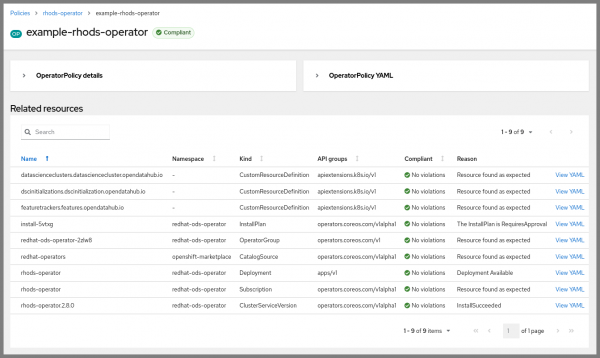

After creating this policy, and ensuring it gets placed on a managed cluster in the fleet, we can use the Red Hat Advanced Cluster Management console to view the details of the operator resources on that cluster. For an example, see Figure 1.

Compared to a ConfigurationPolicy, much more is reported here than just the Subscription specified in the OperatorPolicy: the view shows the ClusterServiceVersion installed on the cluster, the CustomResourceDefinitions for the operator, the health of the Deployment, and more. The policy status reports no violations based on the status of any of those resources, although a closer look shows an InstallPlan for a new version of the operator, which we could approve.

Clicking the View YAML link in the table will use Red Hat Advanced Cluster Management's Search feature to show us exactly what is in that InstallPlan, without needing to log in to that managed cluster. For example, see Figure 2.

The InstallPlan shows that an upgrade to version 2.10.0 is available, but because the policy only allows version 2.8.0, the upgrade is not automatically approved. By default, OperatorPolicy does not report a violation when an upgrade is available, as long as the other details are compliant, but you can change this behavior with the optional complianceConfig field. The YAML on the details page shows the defaults for this field and for the removalBehavior field; here it is with some metadata removed:

```yaml
apiVersion: policy.open-cluster-management.io/v1beta1
kind: OperatorPolicy
metadata:
  name: example-rhods-operator
  namespace: local-cluster
spec:
  complianceConfig:
    catalogSourceUnhealthy: Compliant
    deploymentsUnavailable: NonCompliant
    upgradesAvailable: Compliant
  complianceType: musthave
  remediationAction: enforce
  removalBehavior:
    clusterServiceVersions: Delete
    customResourceDefinitions: Keep
    operatorGroups: DeleteIfUnused
    subscriptions: Delete
  severity: medium
  subscription:
    name: rhods-operator
    startingCSV: rhods-operator.2.8.0
  upgradeApproval: Automatic
  versions:
    - rhods-operator.2.8.0
```

If we change spec.complianceConfig.upgradesAvailable to NonCompliant, we are alerted whenever a new version is available.
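Only that one value needs to change. The fragment below shows the complianceConfig section of the spec with the edit applied and the other two values left at the defaults shown above.

```yaml
spec:
  complianceConfig:
    catalogSourceUnhealthy: Compliant
    deploymentsUnavailable: NonCompliant
    upgradesAvailable: NonCompliant   # report a violation when an upgrade is available
```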

Version management

The policy we have so far specifies only rhods-operator.2.8.0 as an allowed version. Let's suppose that we've vetted rhods-operator.2.10.0 as an acceptable version, and we want our clusters to use that version when it is available. However, we don't want to allow updates to any other version.

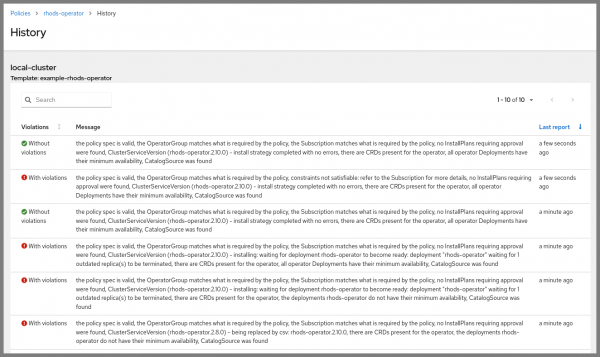

If our only tool was the installPlanApproval field on the subscription, we'd have to choose whether to allow any version on the cluster (by setting that field to Automatic) or we'd need to go to each of our clusters and manually approve the InstallPlans that upgrade to the version we want. This is a place where OperatorPolicy can shine. If we add that new version to the versions list on the policy, it will do the tedious work of finding the InstallPlans, ensuring they match the desired version, and approving them. After making the change, we can view the history of the policy, to see its status during and after the upgrade, as seen in Figure 3.
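A minimal sketch of that change, showing only the relevant part of the spec: the one edit is the additional entry in versions, and everything else stays as it was in the earlier policy.

```yaml
spec:
  subscription:
    name: rhods-operator
    startingCSV: rhods-operator.2.8.0
  upgradeApproval: Automatic
  versions:
    - rhods-operator.2.8.0
    - rhods-operator.2.10.0   # newly vetted; InstallPlans for this version get approved
```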

The history shows that after the InstallPlan was approved, the policy reported violations until the new Deployment reported as Available. The cluster is now running version 2.10.0 of the operator. If another new version, such as 2.10.1, were published, OperatorPolicy would notify us and would not approve it yet. It's worth repeating that setting the installPlanApproval field on the Subscription to Automatic could have upgraded the cluster beyond the version we wanted.

Cleaning up

Easy installs are good, and safe upgrades are nice, but what about uninstallation? With all these resources, it could be complicated to get things back to the way they were, especially if the operator uses finalizers to safely clear its resources. OperatorPolicy provides mustnothave as a possible complianceType, as well as the removalBehavior field we briefly saw earlier, to help track and configure the uninstallation process.

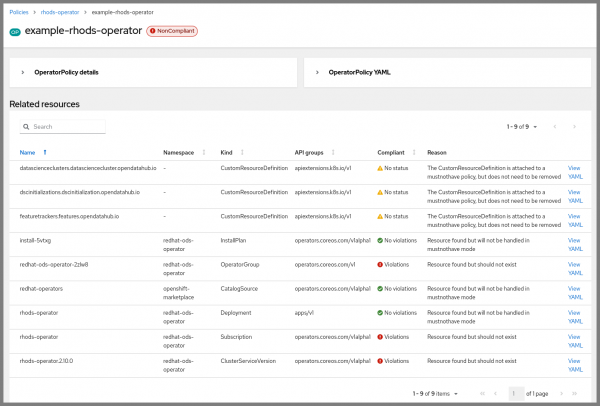

To see what the policy will remove during an uninstallation, without actually taking the actions yet, we can set the remediationAction to inform and the complianceType to mustnothave. If we return to the details page after making that edit, we will see a status like in Figure 4.
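The two fields to edit for this dry run are shown in the fragment below; the rest of the spec, including removalBehavior, stays unchanged.

```yaml
spec:
  remediationAction: inform     # report what would happen; take no action yet
  complianceType: mustnothave   # the operator should not be present on the cluster
```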

The policy is NonCompliant, because the Subscription and ClusterServiceVersion exist on the cluster. The status here lets us know what would happen if we enforced the policy: those resources would be removed, and should indirectly trigger other resources to be removed, like the Deployment.

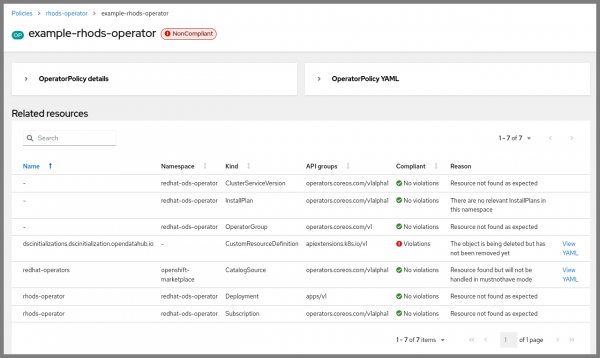

This view also draws attention to the CustomResourceDefinitions, which will not be removed by default. Removing those will cause all instances of those resources to be deleted, which could cause a loss of important data. If you are sure you want to remove them, you can set removalBehavior.customResourceDefinitions to Delete in the policy. Making that change, and enforcing the policy, leads to the view in Figure 5.
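Combined with the defaults we saw earlier, the relevant part of the spec for an enforced uninstallation that also removes CustomResourceDefinitions looks like the following sketch. Only customResourceDefinitions differs from the default removalBehavior values, and remediationAction is switched back to enforce.

```yaml
spec:
  remediationAction: enforce
  complianceType: mustnothave
  removalBehavior:
    clusterServiceVersions: Delete
    customResourceDefinitions: Delete   # also deletes every instance of these resources
    operatorGroups: DeleteIfUnused
    subscriptions: Delete
```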

We can see that one of the CustomResourceDefinitions has not been removed from the cluster yet. Kubernetes does not remove a CustomResourceDefinition until all instances of that resource have finished deleting, and in this case at least one of them seems to be stuck. Resolving this situation is beyond the scope of this article, because doing it safely likely requires operator-specific knowledge. However, OperatorPolicy still provides visibility into the situation, so administrators know that the operator has not yet been cleanly removed from the cluster and that further action may be required.

Conclusion

OperatorPolicy in Red Hat Advanced Cluster Management for Kubernetes 2.11 makes it easier to get started, gives you visibility into the status of your operators, and provides controls for upgrades and uninstallations. We're excited for you to use OperatorPolicy to manage operator installations across your fleet of clusters, and we're looking forward to your feedback!