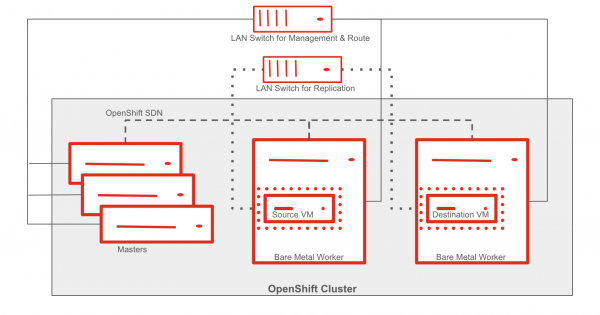

This article explores setting up MySQL replication using an external network across virtual machines (VMs) in separate projects in a Red Hat OpenShift cluster. The fundamentals discussed here can be used to set up replication across VMs in geographically distinct OpenShift clusters. This configuration offers robust disaster recovery for business critical databases. See Figure 1.

Set-up Details

This blog post explores a unique OpenShift cluster architecture that enables replication across geographically separated clusters. The cluster consists of three master nodes and two worker nodes. Each worker node houses a virtual machine (VM) equipped with an external network interface card managed by the NMState operator. Crucially, MySQL database replication leverages these external interfaces instead of the internal OpenShift network. This approach allows for similar replication to be established between VMs in geographically distinct OpenShift clusters.

Technical approach:

- Provision VMs: Deploy VMs in OpenShift cluster using OpenShift Virtualization with an additional network interface which is directly connected to LAN.

- Configure MySQL replication: Establish primary-secondary replication between the VMs using standard MySQL procedures.

- Test the replication: Create records in primary database and check if those are replicated to secondary.

Benefits:

- Disaster recovery: In case of an outage in one region, the secondary database can be promoted to primary quickly, minimizing downtime and data loss.

- OpenShift integration: Leverage OpenShift's container orchestration and management capabilities for both database VMs and applications.

Prerequisite:

By default, OpenShift Virtualization is installed with a single, internal pod network.

You must create a Linux bridge network attachment definition (NAD) in order to connect to additional networks. Refer to this documentation.

Provision VMs

Source VM

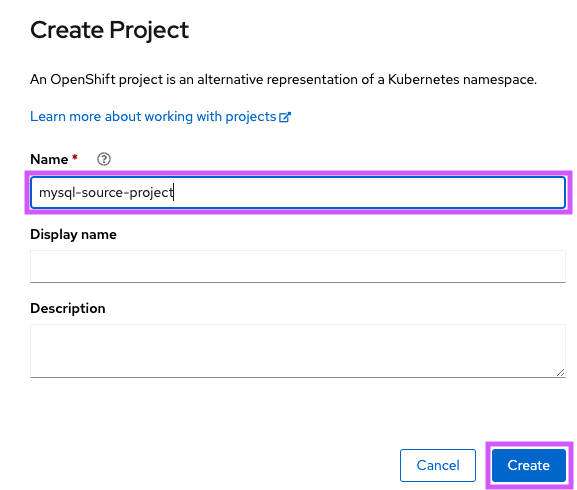

- Create the project “mysql-source-project” (Figure 2).

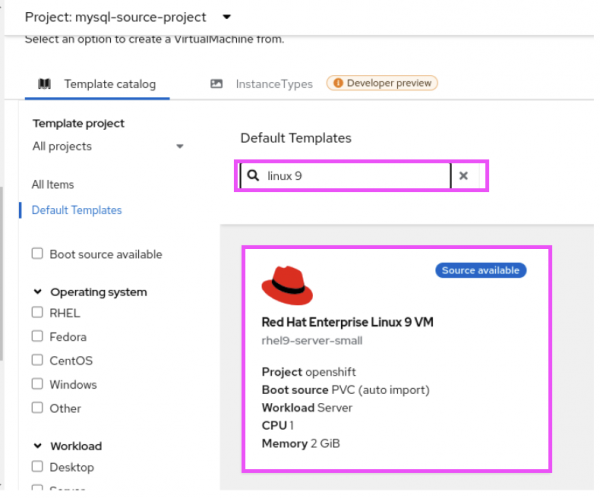

- Create source VM leveraging the Red Hat Enterprise Linux 9 VM Template (Figure 3).

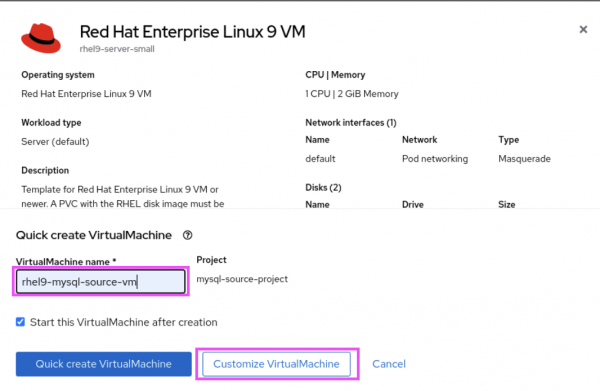

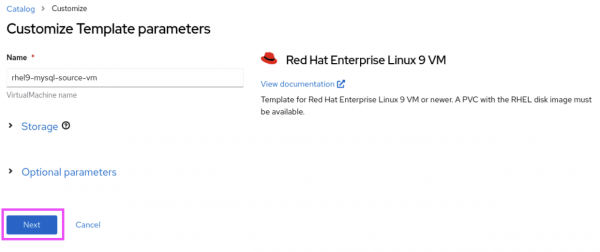

- Provide the name for the VM and select Customize VirtualMachine (Figure 4).

- Select Next (Figure 5).

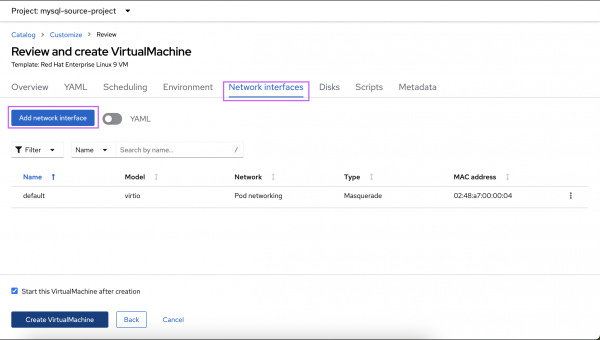

- Select Network Interfaces and Add network interface (Figure 6).

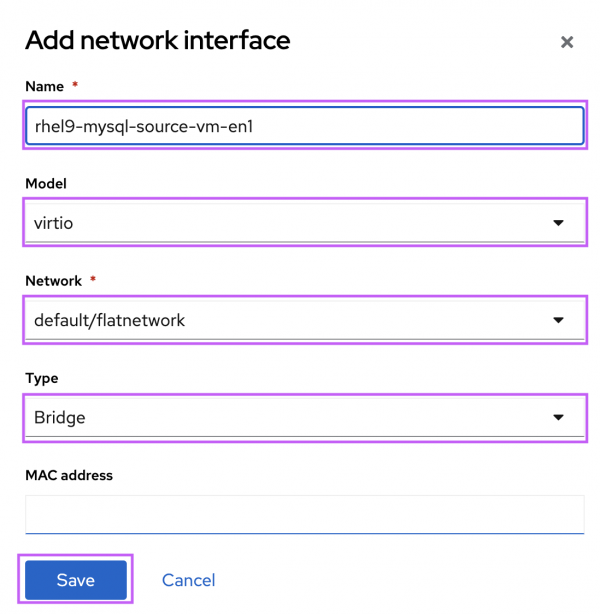

- Name the interface and select Save (Figure 7).

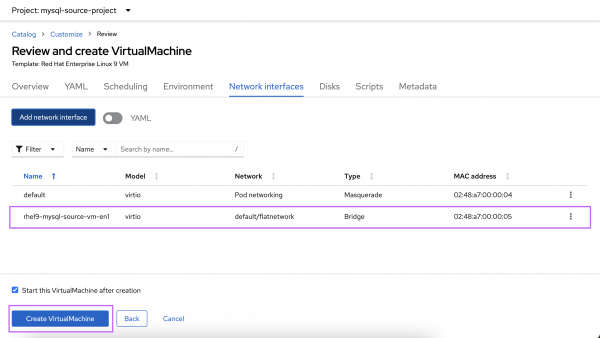

- Review and select Create VirtualMachine (Figure 8).

Destination VM

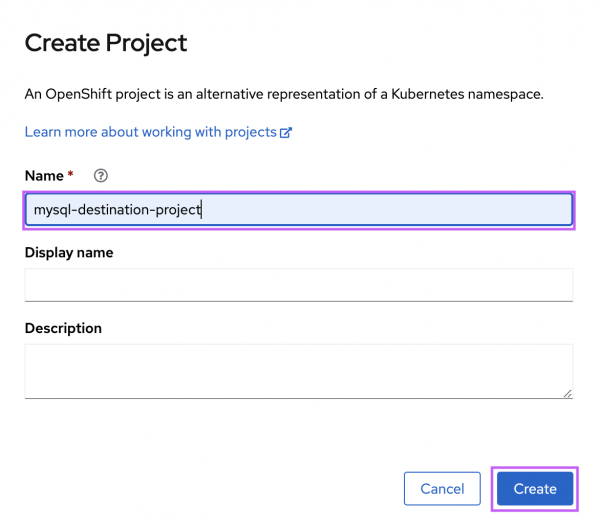

- Create the project “mysql-destination-project” (Figure 9).

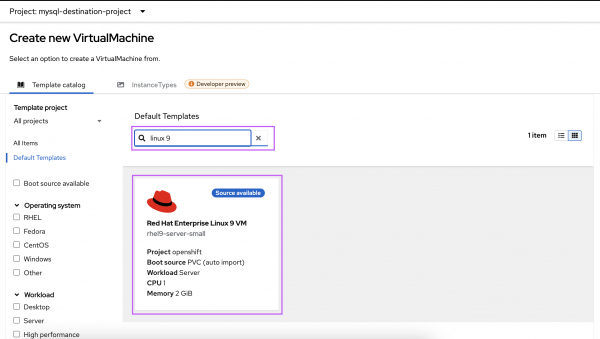

- Select the Red Hat Enterprise Linux 9 VM template (Figure 10).

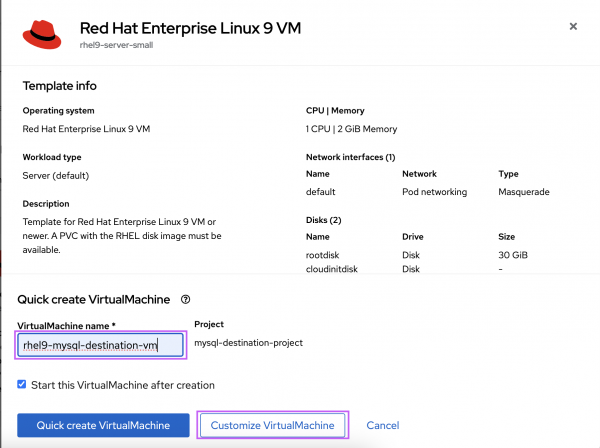

- Select Customize VirtualMachine once you have named the VM (Figure 11).

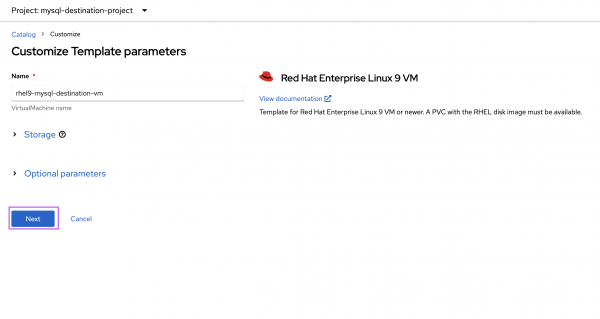

- Select Next (Figure 12).

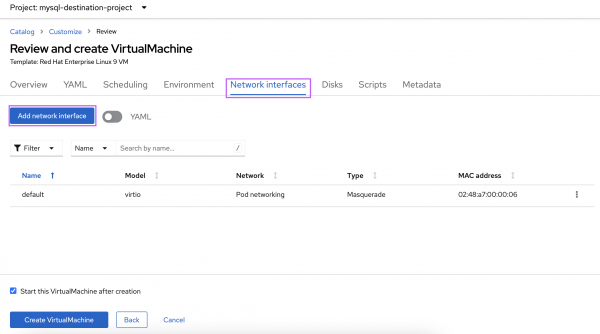

- Select Network Interfaces and Add network interface (Figure 13).

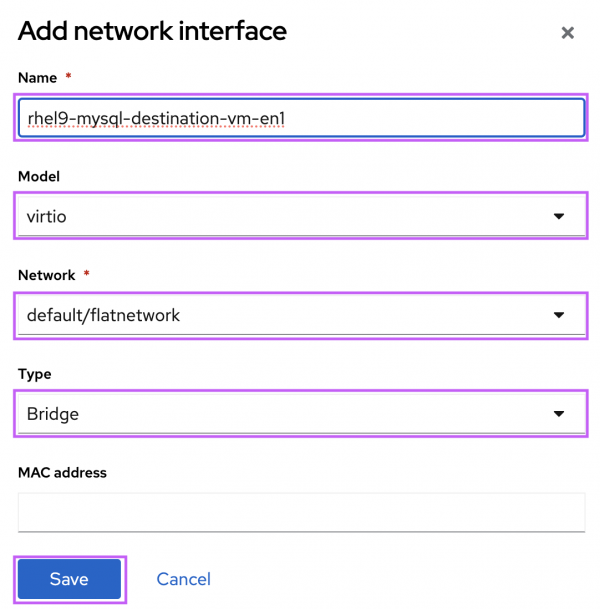

- Name the interface and select Save (Figure 14).

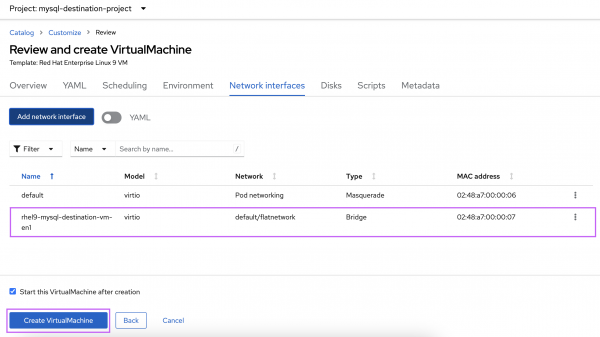

- Review and select Create VirtualMachine (Figure 15).

Once the VMs boots up, perform the following in both the VMs.

Register the VMs with repositories:

#subscription-manager registerInstall mysql-server:

#dnf install mysql-serverConfigure MySQL replication

In the MySQL source vm add the following in the

mysql-server.conf:Note: 192.168.3.106 is the IP address of the additional interface added to the MySQL source VM.

Check “ip addr show” in the VM you created.

[root@rhel9-mysql-source-vm ~]# cat /etc/my.cnf.d/mysql-server.cnf # # This group are read by MySQL server. # Use it for options that only the server (but not clients) should see # # For advice on how to change settings please see # http://dev.mysql.com/doc/refman/en/server-configuration-defaults.html # Settings user and group are ignored when systemd is used. # If you need to run mysqld under a different user or group, # customize your systemd unit file for mysqld according to the # instructions in http://fedoraproject.org/wiki/Systemd [mysqld] datadir=/var/lib/mysql socket=/var/lib/mysql/mysql.sock log-error=/var/log/mysql/mysqld.log pid-file=/run/mysqld/mysqld.pid bind-address=192.168.3.106 server-id=100 log_bin=/var/log/mysql/mysql-bin.log gtid_mode=ON enforce-gtid-consistency=ON [root@rhel9-mysql-source-vm ~]#In the MySQL destination VM, add the following in the

mysql-server.conf:[root@rhel9-mysql-destination-vm ~]# cat /etc/my.cnf.d/mysql-server.cnf # # This group are read by MySQL server. # Use it for options that only the server (but not clients) should see # # For advice on how to change settings please see # http://dev.mysql.com/doc/refman/en/server-configuration-defaults.html # Settings user and group are ignored when systemd is used. # If you need to run mysqld under a different user or group, # customize your systemd unit file for mysqld according to the # instructions in http://fedoraproject.org/wiki/Systemd [mysqld] datadir=/var/lib/mysql socket=/var/lib/mysql/mysql.sock log-error=/var/log/mysql/mysqld.log pid-file=/run/mysqld/mysqld.pid server-id=101 relay-log=/var/log/mysql/mysql-relay.log log_bin=/var/log/mysql/mysql-bin.log gtid_mode=ON enforce-gtid-consistency=ON log-replica-updates=ON skip-replica-start=ON [root@rhel9-mysql-destination-vm ~]#Start

mysqldin both the VMs:#systemctl start mysqld.serviceIn both the VMs, execute

mysql_secure_installation:# mysql_secure_installationIn the MySQL source VM, create the

replication_userand restrict access to this user only from MySQL destination VM :Note: 192.168.3.108 is the IP address of the additional interface added to the destination VM.

Check "ip addr show” in the VM you created.

mysql>CREATE USER 'replication_user'@'192.168.3.108' IDENTIFIED WITH mysql_native_password BY 'Test@123'; Query OK, 0 rows affected (0.01 sec)For the

replication_userin the MySQL destination VM, grant the replication privileges in the MySQL source VM :mysql> GRANT REPLICATION SLAVE ON *.* TO 'replication_user'@'192.168.3.108'; Query OK, 0 rows affected (0.01 sec)Reload the grant tables in the MySQL database in MySQL source VM :

mysql> FLUSH PRIVILEGES; Query OK, 0 rows affected (0.01 sec)Set the instance in MySQL source VM (primary) to read-only state:

mysql> SET @@GLOBAL.read_only = ON; Query OK, 0 rows affected (0.00 sec)Log in to the MySQL destination VM and switch the instance (secondary) to read-only mode:

mysql> SET @@GLOBAL.read_only = ON; Query OK, 0 rows affected (0.00 sec)Configure the replication source in MySQL destination VM:

mysql> CHANGE REPLICATION SOURCE TO -> SOURCE_HOST='192.168.3.106', -> SOURCE_USER='replication_user', -> SOURCE_PASSWORD='Test@123', -> SOURCE_AUTO_POSITION=1; Query OK, 0 rows affected, 2 warnings (0.07 sec)Start the replica thread in the MySQL destination VM:

mysql> START REPLICA; Query OK, 0 rows affected (0.02 sec)Unset the read-only state on both the primary and secondary MySQL servers:

mysql> SET @@GLOBAL.read_only = OFF; Query OK, 0 rows affected (0.00 sec)Inspect the status of the replication in the MySQL destination VM:

mysql> SHOW REPLICA STATUS\G; *************************** 1. row *************************** Replica_IO_State: Waiting for source to send event Source_Host: 192.168.3.106 Source_User: replication_user Source_Port: 3306 – snip – Source_Server_Id: 100 Source_UUID: f23afe9b-139a-11ef-8cf7-02d8aa000014 – snip – mysql>

Testing the replication

In the MySQL source VM, create database

test_123:mysql> CREATE DATABASE test_123; Query OK, 1 row affected (0.02 sec)Switch into

test_123database:mysql> use test_123; Database changedCreate

test_table:mysql> create table test_table ( -> name varchar(255), -> location varchar(255), -> number int -> ); Query OK, 0 rows affected (0.06 sec)Insert few record into the

test_table:mysql> insert into test_table values ('Ramu','Delhi','01'); Query OK, 1 row affected (0.02 sec) mysql> insert into test_table values (‘Raju’,'Mumbai','02'); Query OK, 1 row affected (0.01 sec) mysql> insert into test_table values ('Ravi','Bangalore','03'); Query OK, 1 row affected (0.01 sec)

In the MySQL destination VM, execute the following commands to check if the replication is successful.

List the databases and check if

test_123is present:mysql> show databases ; +--------------------+ | Database | +--------------------+ | information_schema | | mysql | | performance_schema | | sys | | test_123 | +--------------------+ 5 rows in set (0.00 sec)Switch into

test_123database:mysql> use test_123 Database changedList the tables and check if the

test_tableis replicated:mysql> show tables; +--------------------+ | Tables_in_test_123 | +--------------------+ | test_table | +--------------------+ 1 row in set (0.00 sec)Confirm the records are replicated:

mysql> select * from test_table; +--------+-----------+--------+ | name | location | number | +--------+-----------+--------+ | Ramu | Delhi | 1 | | Raju | Mumbai | 2 | | Ravi | Bangalore | 3 | +--------+-----------+--------+ 3 rows in set (0.00 sec)

Here we can see the records created in the Source VM is replicated to the MySQL instance in the Destination VM.

Conclusion

This blog walked you through how to set up MySQL replication across VMs in separate projects within an OpenShift cluster using an external network interface. This demonstrates the viability of setting up replication across VMs in geographically distinct OpenShift clusters, offering robust disaster recovery for business critical databases.

Last updated: September 16, 2024