With the fuse-overlayfs storage driver, you can speed up podman build and buildah builds and reduce their storage usage within your Red Hat OpenShift Dev Spaces cloud development environment (CDE). Before diving into its advantages, let’s first cover some background on container image layers and storage drivers.

Container images consist of layers, which are stored and reused when building and running containers. A major benefit of this layered structure is that, assuming each image layer stores only the differences from the previous layer (the delta), each layer stays small, which generally saves time and space when building and running containers. Small layers also promote reuse, because the same layer can be shared between images and containers.
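
To make the idea of a delta concrete, here is a toy Python model. It is an illustration only and not how Podman actually stores layers: real layers are tar archives, and deletions are recorded as whiteout entries. In this sketch each layer is a snapshot mapping file paths to contents, and the delta is the set of files that were added, changed, or removed.

```python
# Toy model: a layer snapshot maps file path -> file contents.
# Real OCI layers are tar archives; this only illustrates the "delta" idea.

def layer_delta(previous: dict[str, bytes], current: dict[str, bytes]) -> tuple[dict[str, bytes], set[str]]:
    """Return (added_or_changed, deleted) between two filesystem snapshots."""
    # Files that are new in `current`, or whose contents differ from `previous`.
    changed = {path: data for path, data in current.items() if previous.get(path) != data}
    # Files present in `previous` but missing from `current`.
    deleted = set(previous) - set(current)
    return changed, deleted


base = {"/etc/os-release": b"ID=ubi9", "/usr/bin/sh": b"<shell binary>"}
app = {**base, "/app/main.py": b"print('hi')"}  # the app layer adds one file on top of base

changed, deleted = layer_delta(base, app)
```

Only `/app/main.py` ends up in the delta, so a driver that stores deltas keeps the app layer tiny even though the full filesystem view includes everything from `base`.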

In Docker and Podman, the storage driver manages these image layers when images are pulled, built, and run. By default, Podman in the OpenShift Dev Spaces Universal Development Image uses the vfs storage driver. While vfs is generally considered very stable, its lack of copy-on-write (CoW) support is a significant disadvantage compared to other storage drivers such as fuse-overlayfs, overlay2, and btrfs. See Figure 1 for a podman build timing comparison for the github.com/che-incubator/quarkus-api-example project.
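
If you want to confirm which driver Podman is using inside your CDE, one option is to ask `podman info` for the graph driver name through its Go-template `--format` flag. The Python wrapper below is a minimal sketch. The `run` parameter is a hypothetical hook added only so the function can be exercised without Podman installed. Expect `vfs` with the default setup. When fuse-overlayfs is configured, Podman typically reports `overlay`, because fuse-overlayfs is used as the mount program for the overlay driver.

```python
import subprocess


def active_graph_driver(run=subprocess.run) -> str:
    """Return the storage driver Podman is using, for example "vfs" or "overlay"."""
    # `podman info --format` takes a Go template; Store.GraphDriverName holds the driver name.
    result = run(
        ["podman", "info", "--format", "{{.Store.GraphDriverName}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()

# Usage inside a CDE terminal (requires podman on PATH):
#   print(active_graph_driver())
```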

What is the disadvantage of vfs?

As mentioned previously, smaller layers save time and space when building and running containers. The problem with vfs's lack of CoW is that whenever a new layer is created, it starts as a complete copy of the previous layer. This duplicates data across layers and can quickly fill up your storage space, especially with larger images. By contrast, image layers created with a CoW-capable storage driver such as fuse-overlayfs typically contain no redundant data and stay small, because each layer stores only the delta.
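
A rough back-of-the-envelope model shows why this adds up. It assumes each layer only adds data, and it ignores deletions, compression and sharing between images. Under those assumptions, a CoW driver stores each layer's delta once. A vfs-style driver stores, for every layer, a full copy of everything beneath it plus the new delta. The layer sizes are hypothetical.

```python
def storage_used(layer_deltas_mb: list[float], copy_on_write: bool) -> float:
    """Approximate total disk usage for a chain of layers.

    layer_deltas_mb[i] is the size of the changes introduced by layer i.
    With CoW, each layer stores only its delta. Without CoW (vfs-style),
    each layer holds a full copy of all lower layers plus its own delta.
    """
    total = 0.0
    cumulative = 0.0  # size of the full filesystem view up to the current layer
    for delta in layer_deltas_mb:
        cumulative += delta
        total += delta if copy_on_write else cumulative
    return total


# Hypothetical layer sizes in MB: base image, dependencies, application, config.
deltas = [500, 50, 20, 5]
cow_total = storage_used(deltas, copy_on_write=True)    # 575.0
vfs_total = storage_used(deltas, copy_on_write=False)   # 500 + 550 + 570 + 575 = 2195.0
```

Even with only four layers, the copy-everything approach uses almost four times the space, and the gap widens with every additional layer on top of a large base image.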

How can you use fuse-overlayfs in an OpenShift Dev Spaces CDE?

To use fuse-overlayfs in a CDE, the CDE’s pod needs access to the /dev/fuse device on the host operating system. Starting with Red Hat OpenShift 4.15, unprivileged pods can access the /dev/fuse device without any changes to the cluster configuration. Here’s the documentation on how to enable the fuse-overlayfs storage driver for CDEs running on OpenShift 4.15 and older versions.
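
The following Python sketch illustrates the two moving parts from inside the CDE. It is not a replacement for the documented procedure. The first part checks that /dev/fuse is present and usable by the current user. The second part writes a rootless Podman `storage.conf` that selects the overlay driver with fuse-overlayfs as its mount program. The exact file contents and the binary path `/usr/bin/fuse-overlayfs` are assumptions, so follow the OpenShift Dev Spaces documentation for the configuration it actually recommends. The default target, `~/.config/containers/storage.conf`, is Podman's per-user config location. Switching drivers on a graphroot that already holds vfs layers may also require running podman system reset first.

```python
import os
from pathlib import Path
from typing import Optional

# Assumed configuration: overlay driver, with fuse-overlayfs mounting the layers.
STORAGE_CONF = """\
[storage]
driver = "overlay"

[storage.options.overlay]
mount_program = "/usr/bin/fuse-overlayfs"
"""


def fuse_device_usable(device: str = "/dev/fuse") -> bool:
    """True if the device node exists and this process may open it for reading and writing."""
    return os.path.exists(device) and os.access(device, os.R_OK | os.W_OK)


def write_storage_conf(path: Optional[Path] = None) -> Path:
    """Write STORAGE_CONF to the rootless Podman config location (or to `path`)."""
    if path is None:
        path = Path.home() / ".config" / "containers" / "storage.conf"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(STORAGE_CONF)
    return path

# Usage inside a CDE:
#   if fuse_device_usable():
#       write_storage_conf()
```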

How does fuse-overlayfs compare to vfs in an OpenShift Dev Spaces CDE?

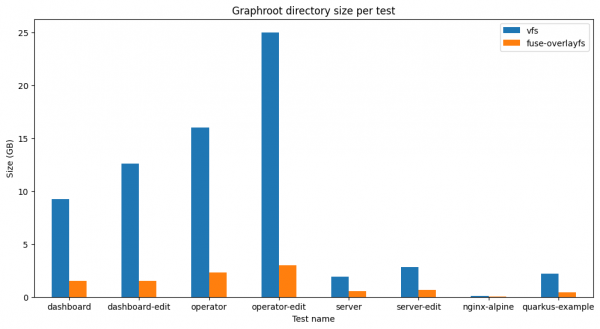

A series of tests was performed to measure the differences in build time and storage usage between the fuse-overlayfs and vfs storage drivers in an OpenShift Dev Spaces CDE. This section presents the measurements for building several different GitHub projects. If you’re interested, the results for tests designed specifically to highlight the characteristics of each storage driver are available in this GitHub repository.

All tests presented in Table 1 were run on an OpenShift Dedicated 4.15.3 cluster with OpenShift Dev Spaces 3.12.

| Test name | GitHub project |
|---|---|
| dashboard | che-dashboard |
| dashboard-edit | che-dashboard |
| operator | che-operator |
| operator-edit | che-operator |
| server | che-server |
| server-edit | che-server |
| nginx-alpine | docker-nginx |
| quarkus-api-example | quarkus-api-example |

For every test except the -edit tests (dashboard-edit, operator-edit, and server-edit), the podman system reset command was run beforehand to clear the graphroot directory. Because this removes all image layers, these tests measure a clean build, just like when a developer creates a CDE and runs podman build for the first time.

For the -edit tests, the container image was built beforehand. These tests measure a rebuild of the image after a source code change, without running the podman system reset command first. This scenario mimics a developer running a new build after making code changes in a CDE. See Figure 3 and Figure 4.
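
To repeat a similar comparison in your own CDE, a measurement could look roughly like the Python sketch below. This is not the harness used for the published results. The function names are hypothetical. The graphroot location is also an assumption: for rootless Podman it is usually `~/.local/share/containers/storage`. `timed_build(..., clean=True)` mirrors the clean-build scenario, and `clean=False` after a source edit mirrors the -edit scenario. `dir_size_bytes` reports apparent file sizes and counts hard-linked files once, similar in spirit to `du`.

```python
import os
import stat
import subprocess
import time
from pathlib import Path


def dir_size_bytes(root: Path) -> int:
    """Sum apparent sizes of regular files under root, counting hard links once and skipping symlinks."""
    seen: set[tuple[int, int]] = set()
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            st = os.lstat(os.path.join(dirpath, name))
            key = (st.st_dev, st.st_ino)
            if stat.S_ISREG(st.st_mode) and key not in seen:
                seen.add(key)
                total += st.st_size
    return total


def timed_build(context: str, tag: str, clean: bool) -> float:
    """Run podman build, optionally after wiping all local storage; return elapsed seconds."""
    if clean:
        # Clean-build scenario: remove all images, containers, and layers first.
        subprocess.run(["podman", "system", "reset", "--force"], check=True)
    start = time.monotonic()
    subprocess.run(["podman", "build", "-t", tag, context], check=True)
    return time.monotonic() - start

# Usage (inside a CDE with podman available):
#   secs = timed_build(".", "localhost/test:latest", clean=True)
#   size = dir_size_bytes(Path.home() / ".local/share/containers/storage")
```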

For all tests in Table 1, the fuse-overlayfs storage driver delivered faster builds and significantly lower storage consumption than vfs. The benefits of CoW are especially evident in Figure 4. For example, in the operator-edit test, the graphroot directory was about 88% smaller with fuse-overlayfs, saving about 15 GB of storage.
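
Those two operator-edit numbers also imply approximate absolute sizes. If 88% of the vfs graphroot equals 15 GB, the vfs graphroot was about 15 / 0.88 ≈ 17 GB and the fuse-overlayfs graphroot about 2 GB. Both inputs are rounded ("about"), so treat the result as a rough estimate rather than a measured value. The small Python helper below just performs that arithmetic.

```python
def implied_sizes(fraction_smaller: float, saved_gb: float) -> tuple[float, float]:
    """Back out (vfs_size, fuse_overlayfs_size) from a relative and an absolute saving."""
    vfs_gb = saved_gb / fraction_smaller  # saved = fraction_smaller * vfs_size
    return vfs_gb, vfs_gb - saved_gb


vfs_gb, fuse_gb = implied_sizes(0.88, 15)
summary = f"vfs ~{vfs_gb:.1f} GB, fuse-overlayfs ~{fuse_gb:.1f} GB"
```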

Conclusion

The fuse-overlayfs storage driver is worth considering for your OpenShift Dev Spaces CDEs, because its CoW support saves both time and storage. In general, there is no “best” storage driver, because the performance, stability, and usability of each driver depend on your specific workload and environment. However, because vfs does not support CoW, fuse-overlayfs is a much better choice for managing image layers.

For additional content regarding fuse in OpenShift Dev Spaces, check out this blog post and demo project.

Thank you for reading.