A deep dive into OpenShift Container Platform 4.20 performance

Let’s compare the performance impact of three networking configurations in the DP workload to determine the most stable configuration for telco-grade environments.

Use Red Hat Lightspeed to simplify inventory management and convert natural language into inventory API queries for auditing and multi-agent automation.

Learn how OpenShift APIs for Data Protection self-service enables developers to manage OpenShift application backup and restore, enforcing least privilege.

Simplify the management of numerous Red Hat OpenShift clusters that use HyperShift hosted control planes (HCP).

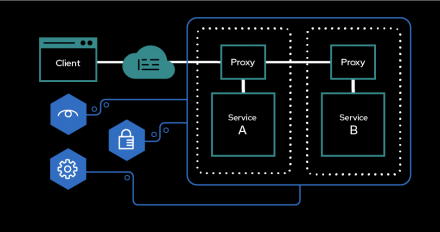

Learn how to integrate the Gateway API for OpenShift with OpenShift Service Mesh, using certificate trust and mTLS communication.

Learn how to migrate from Llama Stack’s deprecated Agent APIs to the modern, OpenAI-compatible Responses API without rebuilding from scratch.

Explore new features in Red Hat JBoss EAP XP 6, including upgrades to MicroProfile 7, MicroProfile LRA and multi-app support, and observability tools.

Use SDG Hub to generate high-quality synthetic data for your AI models. This guide provides a full, copy-pasteable Jupyter Notebook for practitioners.

Learn how to install and use new MCP plug-ins for Red Hat Developer Hub that provide tools for MCP clients to interact with it.

HyperShift streamlines OpenShift cluster management with hosted control planes, cutting costs, accelerating creation, and efficiently scaling large fleets.

Discover how llama.cpp API remoting closes the gap with native performance for AI inference on macOS.

AI agents are where things get exciting! In this episode of The Llama Stack Tutorial, we'll dive into Agentic AI with Llama Stack, showing you how to give your LLM real-world capabilities like searching the web, pulling in data, and connecting to external APIs. You'll learn how agents are built with models, instructions, tools, and safety shields, and see live demos of using the Agentic API, running local models, and extending functionality with Model Context Protocol (MCP) servers. Join Senior Developer Advocate Cedric Clyburn as we learn all things Llama Stack! Next episode? Guardrails, evals, and more!

Explore a fashion AI search on Red Hat OpenShift AI with EDB Postgres AI.

Learn how to integrate Ansible Automation Platform with Red Hat Advanced Cluster Management for centralized authentication across multiple clusters.

Building AI applications is more than just running a model: you need a consistent way to connect inference, agents, storage, and safety features across different environments. That’s where Llama Stack comes in. In this second episode of The Llama Stack Tutorial Series, Cedric (Developer Advocate @ Red Hat) walks through how to:

- Run Llama 3.2 (3B) locally and connect it to Llama Stack
- Use the Llama Stack server as the backbone for your AI applications
- Call REST APIs for inference, agents, vector databases, guardrails, and telemetry
- Test out a Python app that talks to Llama Stack for inference

By the end of the series, you’ll see how Llama Stack gives developers a modular API layer that makes it easy to start building enterprise-ready generative AI applications, from local testing all the way to production. In the next episode, we'll use Llama Stack to chat with your own data (PDFs, websites, and images) with local models.

🔗 Explore more:

- Llama Stack GitHub: https://github.com/meta-llama/llama-stack
- Docs: https://llama-stack.readthedocs.io

Learn how Red Hat has re-architected the REST API to provide direct and efficient access to event notifications, complying with the latest O-RAN standards.

Runtimes, frameworks, and services to build applications natively on Red Hat OpenShift.

Learn how to use Cryostat 4.0’s Kubernetes API discovery configurations with Quarkus-native image applications, leveraging GraalVM's JFR and JMX observability feature support.

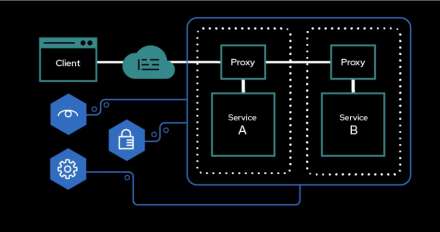

Everything you need to coordinate microservices in a service mesh with powerful

Red Hat build of Apache Camel 4.10 brings enhanced integration components, unified observability, expanded cloud and messaging support, and more.

Fill out the form to request early access to Red Hat Connectivity Link

Learn how to receive notifications about the status of Red Hat's services.

Connect, secure, and protect your distributed Kubernetes services with lightweight policy attachments.

This video demonstrates how to protect the signing data produced by the Red Hat Trusted Artifact Signer using the OADP (OpenShift APIs for Data Protection) Operator.