The concept of a business process (BP), or workflow (WF), and the discipline and practice of business process management (BPM) have been around since the early 90s. Since then, WF/BPM tools have evolved considerably. More recently, a convergence of different tools has taken place, adding decision management (DM) and case management (CM) to the mix. The ascendance of data science, machine learning, and artificial intelligence in the last few years has further complicated the picture. The mature field of BPM has been subsumed into the hyped pseudo-novelties of digital business automation, digital reinvention, digital everything, etc., with the addition of "low code" and robotic process automation (RPA).

A common requirement of business applications today is to be event-driven; that is, specific events should trigger a workflow or decision in real-time. This requirement leads to a fundamental problem. In realistic situations, there are many different types of events, each one requiring specific handling. An event-driven business application may have hundreds of qualitatively different workflows or processes. As new types of events arise in today's ever-changing business conditions, new processes have to be designed and deployed as quickly as possible.

This situation is different from the common requirement of scalability at runtime. It's not just a problem of making an architecture scale to a large number of events per second. That problem is in many respects easy to solve. The problem of scalability at design time is what I am concerned about here.

In this first article in the series, I will define the business use case and data model for a concrete example from the health management industry. Then, I will show you how to create the trigger process that begins your jBPM (open source business automation suite) implementation for this scenario. In subsequent articles, I will continue the detailed walk-through for this example implementation.

In particular, this example illustrates several Business Process Model and Notation (BPMN) constructs as they are implemented in jBPM:

- Business process abstraction.

- Service tasks or work item handlers.

- REST API calls from within a process.

- Email sending from within a process.

- Signal sending and catching.

- Timer-based reminders and escalations.

The business use case

Population health management (PHM) is an important approach to health care that leverages recent advances in technology to aggregate health data across a variety of sources, analyze this data into a unified and actionable view of the patient, and trigger specific actions that should improve both clinical and financial results. Of course, this topic implies handling protected personal information, which should be done in full compliance with existing legislation (or controversy will ensue).

An insurance company or health management organization tracks a considerable wealth of information about the health history of every member. For example, a member known to have a certain medical condition is supposed to periodically do things such as visiting a doctor or undergoing a test. Missing such actions should trigger a workflow in the PHM system to make sure that the member is back on track.

For the sake of the example, let's say that a member has rheumatoid arthritis. This person is supposed to take a DMARD drug. It is easy to check periodically if a prescription for such a drug has been filled over a certain period of time, say one year. If no such prescription has been filled in the past year for a member with this condition, certain actions should be taken by a given actor:

| Task code | Activities | Actor |
|---|---|---|
| A490.0 | The member's doctor should be notified. | PRO |
| B143 | An insurance channel worker should perform related administrative tasks. | CHW |
| C178 | The member should be educated. | MEM |
| C201 | The member should talk to a pharmacist. | RXS |

Certain tasks should occur only after another task has completed:

| Task code | Predecessor task |
|---|---|
| A490.0 | |
| B143 | |
| C178 | A490.0 |
| C201 | A490.0 |

The task life cycle should be determined by the change of task status, where the task status has the following values and meanings:

| Status | Description |
|---|---|
| Inactive | Not the season for this task. |
| Suppressed | Specific member situation dictates this task should be suppressed. |
| Closed | Soft close: Assumed completed until hard close notification. |
| Completed | Hard close: Verification of completion received. |
| Expired | Not completed prior to expiration. |

The distinction between closed (soft close) and completed (hard close) is important. An actor is allowed to soft close a task, but the task should be considered to be completed only upon verification of the task's outcome. Only then should the task contribute to the measurement of key performance indicators (KPIs).

A soft close should be accomplished by the actor by either clicking on a Complete button or specifying that the task is Not Applicable (and providing an explanation with supplemental data that can be uploaded). The hard close should require notification from an external system:

| Task code | Soft close | Hard close |
|---|---|---|
| A490.0 | Completed or N/A | HEDIS Engine Compliance. |
| B143 | Completed or N/A | Provider and pharmacy attestation. |
| C178 | Completed or N/A | With soft close. |
| C201 | Click to call pharmacist | With soft close. |
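The soft close and hard close rules above can be sketched as a small status type. This is only an illustration in plain Java, not the jBPM implementation: the names `TaskStatus`, `afterSoftClose`, `afterHardClose`, and `countsTowardKpi` are hypothetical, and the rule that a hard close notification is only accepted for a soft-closed task is an assumption made for the sketch.

```java
// Hypothetical sketch of the task life cycle described in the tables above.
enum TaskStatus {
    INACTIVE, SUPPRESSED, CLOSED, COMPLETED, EXPIRED;

    /** Only a hard-closed (verified) task contributes to KPI measurement. */
    boolean countsTowardKpi() {
        return this == COMPLETED;
    }

    /**
     * Status after the actor soft closes the task. For tasks whose hard close
     * happens "with soft close" (C178 and C201), the task completes at once.
     */
    static TaskStatus afterSoftClose(boolean hardCloseWithSoftClose) {
        return hardCloseWithSoftClose ? COMPLETED : CLOSED;
    }

    /**
     * Status after the external hard close notification arrives.
     * Assumption: the notification is only valid for a soft-closed task.
     */
    TaskStatus afterHardClose() {
        if (this != CLOSED) {
            throw new IllegalStateException(
                "Hard close expected on a soft-closed task, but status is " + this);
        }
        return COMPLETED;
    }
}

final class TaskStatusDemo {
    public static void main(String[] args) {
        // A490.0: soft close first, then verification by the external system.
        TaskStatus a490 = TaskStatus.afterSoftClose(false);
        System.out.println("A490.0 after soft close: " + a490
            + ", counts toward KPI: " + a490.countsTowardKpi());
        a490 = a490.afterHardClose();
        System.out.println("A490.0 after hard close: " + a490
            + ", counts toward KPI: " + a490.countsTowardKpi());

        // C178: the hard close comes with the soft close.
        TaskStatus c178 = TaskStatus.afterSoftClose(true);
        System.out.println("C178 after soft close: " + c178);
    }
}
```

Keeping the KPI rule on the status itself means that reporting code never has to know which tasks require external verification.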

If a task actor is late in completing the assigned task, a reminder must be sent to the corresponding actor. Then, if the task is not closed after a certain period of time, the action must be escalated to a manager:

| Task code | Reminder frequency | Escalation after | Escalation actor |
|---|---|---|---|
| A490.0 | 14 days | 30 days | PEA |
| B143 | 30 days | 90 days | MCH |
| C178 | 7 days | 60 days | MRX |
| C201 | 7 days | 30 days | CHW |

In any case, each action should expire by the end of the year.
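To make the timing rules concrete, the following sketch computes the reminder dates and the escalation date for one task with `java.time`. It is a minimal illustration under stated assumptions, not part of the jBPM project: the class and method names are hypothetical, the first reminder is assumed to fire one reminder period after the start date, reminders are assumed to stop once the escalation date is reached, and "end of the year" is taken to mean December 31 of the start year. The periods are written as ISO-8601 strings such as `P14D`.

```java
import java.time.LocalDate;
import java.time.Period;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper illustrating the reminder and escalation rules above.
final class ReminderSchedule {

    /** Date on which the task is escalated to the escalation actor. */
    static LocalDate escalationDate(LocalDate start, Period escalateAfter) {
        return start.plus(escalateAfter);
    }

    /** Assumed expiration: the last day of the year in which the task started. */
    static LocalDate expirationDate(LocalDate start) {
        return LocalDate.of(start.getYear(), 12, 31);
    }

    /**
     * Reminder dates: one every frequency period after the start date,
     * strictly before the escalation date and not after the expiration date.
     */
    static List<LocalDate> reminderDates(LocalDate start, Period frequency, Period escalateAfter) {
        LocalDate escalation = escalationDate(start, escalateAfter);
        LocalDate expiration = expirationDate(start);
        List<LocalDate> dates = new ArrayList<>();
        LocalDate next = start.plus(frequency);
        while (next.isBefore(escalation) && !next.isAfter(expiration)) {
            dates.add(next);
            next = next.plus(frequency);
        }
        return dates;
    }

    public static void main(String[] args) {
        // Task A490.0: reminder every 14 days, escalation after 30 days.
        LocalDate start = LocalDate.of(2023, 3, 1);
        System.out.println("Reminders:  "
            + reminderDates(start, Period.parse("P14D"), Period.parse("P30D")));
        System.out.println("Escalation: " + escalationDate(start, Period.parse("P30D")));
    }
}
```

For a task A490.0 started on March 1, 2023, this yields reminders on March 15 and March 29 and an escalation on March 31.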

The last requirement is that it should be possible to prevent a task from being executed during a defined suppression period. This practice is the equivalent of snoozing an alarm clock.

The following table briefly describes all of the actors mentioned so far:

| Actor | Description |
|---|---|
| PRO | Provider |
| MEM | Member |
| CHW | Community Health Worker |
| RXS | Pharmacist |
| PEA | Provider Engagement Advocate |
| MCH | Community Health Manager |
| MRX | Pharmacy Manager |

In essence, these requirements define a workflow that must be completed for a given PHM event or trigger, such as the member missing a DMARD prescription.

You don't want to redo all of the implementation work when the trigger changes, for example when the member is diabetic and did not receive a statin medication within the year instead of missing a DMARD prescription. There are possibly hundreds of distinct events or triggers in PHM, and modeling the workflow of each one separately does not scale. This is the most important requirement from a business perspective: The design of the implementation must scale to as many different types of triggers as there could possibly be, and new triggers must be addable with the least possible amount of effort.

Implementation as a business process

A complete business process implementing these requirements in jBPM can be imported from GitHub. However, I encourage you to build it from scratch following the detailed steps starting in the next section.

Business processes in jBPM follow the latest BPMN 2.0 specification. You can design a business process to be data-driven as well as event-driven. However, business processes are a realization of procedural imperative programming. This means that the business logic has to be explicitly spelled out in its entirety.

The implementation should be completely data-driven to satisfy the business scalability requirement as much as possible. The trigger workflow should be parameterized with data fed to the process engine so that one business process definition is capable of handling any trigger event.

The data model

Most of the workflow-related properties of a task are contained in the custom data type Task:

| Attribute | Description |
|---|---|
| id | The id of the task. |
| original id | The task code. |
| status | The status tracking the task life cycle. |
| predecessor | The task preceding the current one in the task workflow. |
| close | The task closing type (soft or hard). |
| close signal | The signal to hard close the task. |
| reminder initiation | When the first reminder should occur. |
| reminder frequency | The frequency of the reminders. |
| escalated | A flag indicating if an escalation should occur. |
| escalation timer | When an escalation should occur. |
| suppressed | A flag indicating if the task is suppressed. |
| suppression period | The period of time the task has to remain suppressed. |

The custom data type TaskActorAssignment holds the information needed to assign the task to an actor:

| Attribute | Description |
|---|---|
| actor | The task's actor. |
| channel | The application (user interface) where the task is performed (i.e., data entry). |
| escalation actor | The actor responsible for the escalation. |
| escalation channel | The application (user interface) used in the escalation. |

The custom data type Reminder holds the information needed to send a reminder to the task actor:

| Attribute | Description |
|---|---|
| address | The (email) address of the task's actor. |
| subject | The subject of the reminder. |
| body | The content of the reminder. |
| from | The (email) address sending the reminder. |

All of this data is retrieved from a service. You need to represent the response of the service as the custom data type Response:

| Attribute | Description |
|---|---|
| task | The task data. |
| assignment | The task actor assignment data. |
| reminder | The reminder data. |

It is understood that for any given PHM event or trigger, the service will produce a list of response objects, one for each task in the PHM event workflow. The Java class diagram shown in Figure 1 summarizes what is needed.

You should implement the model in Java. All classes must be serializable. Overriding the toString method is optional, but it helps when tracing process execution.

This model can be imported into jBPM from GitHub.
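As a rough idea of what such model classes look like, here is a trimmed sketch of Task and Response. It is not the code from the GitHub project: only a few attributes are shown, the field types are assumptions (the class diagram may use different ones), and the classes are left package-private so that the sketch fits in one file. In the real project, each class would be public, in its own file, in the model package. The accessor names `getTask`, `getOrigId`, and `getPredecessor` match the calls used later in the process scripts.

```java
import java.io.Serializable;

// Trimmed, hypothetical version of the Task data type (subset of attributes).
class Task implements Serializable {
    private static final long serialVersionUID = 1L;

    private String id;          // the id of the task
    private String origId;      // the task code, for example "C178"
    private String status;      // the status tracking the task life cycle
    private String predecessor; // the task code of the predecessor, or null

    public Task() { }           // no-argument constructor, needed for JSON mapping

    public String getId() { return id; }
    public void setId(String id) { this.id = id; }
    public String getOrigId() { return origId; }
    public void setOrigId(String origId) { this.origId = origId; }
    public String getStatus() { return status; }
    public void setStatus(String status) { this.status = status; }
    public String getPredecessor() { return predecessor; }
    public void setPredecessor(String predecessor) { this.predecessor = predecessor; }

    @Override
    public String toString() {
        return "Task{id=" + id + ", origId=" + origId
            + ", status=" + status + ", predecessor=" + predecessor + "}";
    }
}

// Trimmed, hypothetical version of the Response data type.
class Response implements Serializable {
    private static final long serialVersionUID = 1L;

    private Task task;
    // The assignment and reminder fields would follow the same pattern.

    public Response() { }

    public Task getTask() { return task; }
    public void setTask(Task task) { this.task = task; }

    @Override
    public String toString() {
        return "Response{task=" + task + "}";
    }
}
```

The no-argument constructors and setters matter because the objects are later created from generic maps rather than constructed by hand.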

The trigger process

After creating the project in jBPM for the business process that implements the trigger workflow, make sure that the model project is a dependency in the project settings. Then, follow these steps:

- Create the process in the process designer with the properties shown in Figure 2.

- Add the process variables as shown in Figure 3.

- Add the imports as shown in Figure 4.

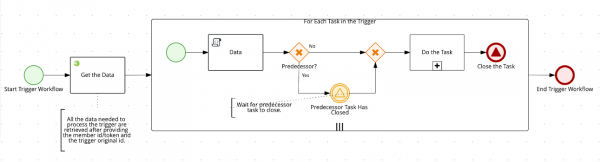

- Draw the following diagram in the process designer as shown in Figure 5:

This type of business application is typically subscribed to a data streaming solution's topic, such as Apache Kafka. However, we are not concerned with the precise data feeding mechanism for now, and the process is simply started by the REST API.
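For illustration, starting the process over REST could look like the sketch below, which builds a request against the KIE Server endpoint for creating process instances using the Java 11 `java.net.http` API. Everything specific here is an assumption: the server URL, the container id `phm-processes`, the process id `phm.Trigger`, the credentials, and the process variable names `pMemberToken` and `pTriggerId` are placeholders that must be replaced with the values of your own deployment.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical client-side sketch; ids, names, and credentials are placeholders.
final class StartTriggerProcess {

    /** Builds the POST request that starts one process instance on KIE Server. */
    static HttpRequest buildRequest(String serverUrl, String containerId, String processId,
                                    String user, String password,
                                    String memberToken, String triggerId) {
        // HTTP basic authentication header.
        String credentials = Base64.getEncoder()
            .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));

        // Process variables as a JSON object. The values are assumed to be
        // plain alphanumeric strings, so no JSON escaping is done here.
        String body = String.format(
            "{\"pMemberToken\":\"%s\",\"pTriggerId\":\"%s\"}", memberToken, triggerId);

        URI uri = URI.create(serverUrl + "/containers/" + containerId
            + "/processes/" + processId + "/instances");

        return HttpRequest.newBuilder()
            .uri(uri)
            .header("Content-Type", "application/json")
            .header("Accept", "application/json")
            .header("Authorization", "Basic " + credentials)
            .POST(HttpRequest.BodyPublishers.ofString(body))
            .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildRequest(
            "http://localhost:8080/kie-server/services/rest/server",
            "phm-processes", "phm.Trigger", "user", "password", "M123", "DMARD");
        // Only print the request; sending it requires a running KIE Server.
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually send the request, pass it to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`. A streaming consumer could make the same call for each event it receives.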

Get the data

Start by creating an external service call to get all of the data needed to execute the subprocess as a function of the member token and the trigger id. This call frees the streaming application starting the process from the burden of orchestrating data services. This activity is implemented as a REST Work Item Handler, which is pre-installed in jBPM.

You need to create two actions for this step: an on entry action and an on exit action. (You will also need to implement the service called by the service task, but we will talk about that later.) The on entry action runs before the REST call, so it is the place to prepare the request, for example by setting the pGetInfoUrl variable that the handler uses as its URL. The on exit action runs after the call and post-processes the result.

Add the following code to the on exit action:

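```java
// The REST handler stores the parsed JSON array in pResult as a list of maps.
final ObjectMapper om = new ObjectMapper();
ArrayList<Response> dd = new ArrayList<Response>();
List rr = (List)kcontext.getVariable("pResult");
Iterator<LinkedHashMap> i = rr.iterator();
while(i.hasNext()) {
    // Convert each map into a typed Response object.
    LinkedHashMap m = (LinkedHashMap)i.next();
    dd.add(om.convertValue(m,Response.class));
}
// Expose the typed list to the rest of the process.
kcontext.setVariable("pDataList",dd);
```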

The reason for the on exit action is that the REST API service delivers the data as a list of maps. The actual Response objects must be obtained by converting each map in the list. There is no way around this requirement, so the collection that contains the list of Response objects is the variable pDataList and not pResult.

The only parameters to configure are:

- Method as GET
- Url as pGetInfoUrl
- ContentType as application/json
- ResultClass as java.util.List
- Result as pResult

In real life, more parameters are needed. For example, the BPM will have to authenticate to retrieve the data. However, for the sake of this exercise, you will keep this service as simple as possible.

Handle the data

Next, you need to create the multiple instance subprocess that iterates over each task in the trigger's workflow once the data is available. You need to implement the requirement that certain tasks must come after given tasks in the workflow. In our example, tasks C178 and C201 must follow A490.0.

To configure the multiple instance subprocess with parallel execution (you want all subprocess instances to start at the same time), use pDataList as the collection and pData as the item, as shown in Figure 6.

The individual task workflow is modeled as a reusable subprocess with properties, as shown in Figure 7.

The process variables should be defined as well, as shown in Figure 8.

The subprocess variables are initialized in a script task. Write the following code in the body of the script task:

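```java
// pData is the current item of the multiple instance subprocess.
Response d = (Response)kcontext.getVariable("pData");
// Keep the task code and its predecessor for the gateway and the signals.
kcontext.setVariable("_taskId",d.getTask().getOrigId());
kcontext.setVariable("_predecessorId",d.getTask().getPredecessor());
```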

The task sorting logic is based on the task's predecessor property, as shown in Figure 9.

The first diverging exclusive gateway lets the process proceed to the task subprocess if this property is null, as shown in Figure 10.

If the current task has a predecessor, as shown in Figure 11, the catching intermediate signal will wait for the signal that the predecessor task is closed before going further.

Note: You also need to create the task subprocess. Leave the Called Element property blank until you have done that.

The only variable that needs to be passed to the subprocess is pData of type com.health_insurance.phm_model.Response, as shown in Figure 12.

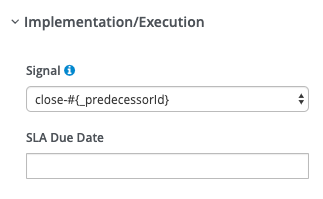

Finally, send the signal that the current process is closed. Note that the name of the signal is parameterized with the current task ID, as shown in Figure 13.
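The ordering logic of this section (start everything in parallel, let tasks without a predecessor proceed, and make the others wait for their predecessor's "closed" signal) can be mimicked outside jBPM with plain Java futures. The sketch below is only an analogy under stated assumptions: `PredecessorOrdering` and `run` are hypothetical names, each `CompletableFuture` stands in for a signal named after a task ID, "closing" a task is reduced to recording its ID, and every predecessor is assumed to be a task in the same map.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;

// Hypothetical analogy for the predecessor gateway and the task-closed signals.
final class PredecessorOrdering {

    /**
     * Runs all tasks in parallel and returns the order in which they closed.
     * The map goes from task ID to predecessor ID (null when there is none).
     */
    static List<String> run(Map<String, String> predecessorByTask) {
        // One "closed" signal per task, keyed by the task ID.
        Map<String, CompletableFuture<Void>> closedSignal = new HashMap<>();
        for (String taskId : predecessorByTask.keySet()) {
            closedSignal.put(taskId, new CompletableFuture<>());
        }

        List<String> closeOrder = Collections.synchronizedList(new ArrayList<>());
        List<CompletableFuture<Void>> instances = new ArrayList<>();

        for (Map.Entry<String, String> entry : predecessorByTask.entrySet()) {
            String taskId = entry.getKey();
            String predecessorId = entry.getValue();

            // Gateway: no predecessor means go ahead, otherwise wait for its signal.
            CompletableFuture<Void> gate = (predecessorId == null)
                ? CompletableFuture.completedFuture(null)
                : closedSignal.get(predecessorId);

            instances.add(gate.thenRunAsync(() -> {
                closeOrder.add(taskId);                 // the task subprocess would run here
                closedSignal.get(taskId).complete(null); // send the "closed" signal
            }));
        }

        // Wait until every task instance has finished.
        CompletableFuture.allOf(instances.toArray(new CompletableFuture[0])).join();
        return closeOrder;
    }

    public static void main(String[] args) {
        Map<String, String> tasks = new LinkedHashMap<>();
        tasks.put("A490.0", null);
        tasks.put("B143", null);
        tasks.put("C178", "A490.0");
        tasks.put("C201", "A490.0");
        // A490.0 always appears before C178 and C201; the rest may vary per run.
        System.out.println(run(tasks));
    }
}
```

As in the process model, nothing in this code names a specific task: the ordering comes entirely from the data, which is what allows one definition to serve any trigger.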

Conclusion

Now you have your data model and you have created your trigger process in jBPM. In the next article, we will walk through creating each component of the task subprocess.

Last updated: March 29, 2023