The purpose of this blog post is to provide an overview of the APIs and specifications in the Eclipse Microprofile 1.2 release. In particular, I'll try to connect these specifications and APIs with their architectural purpose. Where do they fit and why? If you're thinking of moving your Java application to the cloud, then this post might be for you.

Cloud native applications

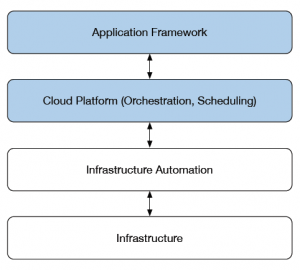

Cloud native means different things to different people. Let’s step back a bit and establish a definition that frames this discussion. In this article, we will lean on the description provided by the Cloud Native Computing Foundation. In their terms, cloud native computing uses a software stack that is:

- Containerized: Each part (applications, processes, etc.) is packaged in its own container. This facilitates reproducibility, transparency, and resource isolation.

- Dynamically orchestrated: Containers are actively scheduled and managed to optimize resource utilization.

- Microservices oriented: Applications are segmented into microservices. This significantly increases the overall agility and maintainability of applications.

The first notable thing is the mention of a “software stack”. This stack goes beyond the application level and includes the infrastructure, ways to automate it, and services that orchestrate and schedule computing nodes atop these layers.

Relevant to our discussion are the contracts between the application and cloud platform (i.e. programming models), common cloud platform concepts and their impact on the application, as well as overarching architectural concerns, in particular the idea of service-oriented architectures.

The Eclipse Microprofile 1.2

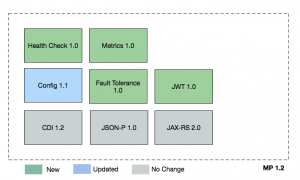

The Microprofile project aims to optimize Java for microservice architectures. Here cloud-native computing is a first-class concept, and therefore the majority of specifications and APIs developed in this community revolve around these areas. Version 1.2 is one of the recent releases and addresses configuration, health checks, metrics, fault tolerance, and token-based security concepts. This is in addition to CDI, JSON-P, and JAX-RS, which form the baseline upon which the Microprofile standard builds.

Let’s look at each of those specifications in more detail to explain where they fit in your overall application architecture that sits on a cloud-native computing platform.

Application Configuration

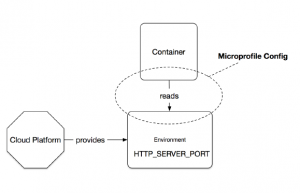

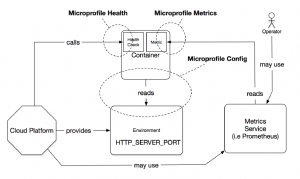

The Microprofile Configuration specification, as the name suggests, addresses configuration-related concerns. The Configuration API provides a uniform way to retrieve configuration values in Java code, regardless of where those values actually come from. This lets you reconfigure an application without repackaging the whole application binary: you can bundle default configuration with the application, and the API allows those defaults to be overridden from outside. The specification mandates a set of common-sense configuration sources (property files, system properties, and environment variables) that every implementation provides, and you can add your own sources as well. Both dynamic and static configuration values are supported to cover a wide range of use cases (e.g. service reloading and refresh).

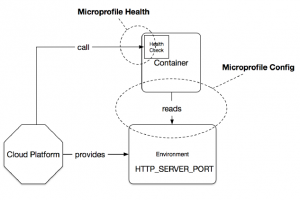

It’s common and desirable in cloud-native applications that the configuration is separated from the application and maintained by the cloud platform. A common use case for the Configuration API is to bridge between the container hosting your application and the configuration provided by the cloud platform.

For instance, in this scenario the configuration values are injected into the container at startup (usually as environment variables) and consumed by the application through the Microprofile Configuration API.
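To make the override idea concrete, here is a minimal plain-JDK sketch of a lookup across prioritized configuration sources. It is not the Microprofile Configuration API; the class `ConfigSketch`, the priority numbers, and the `greeting.*` keys are all made up for illustration, and the environment is simulated with a map rather than read from `System.getenv()`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Illustrative only: a tiny lookup across prioritized configuration sources. */
public class ConfigSketch {

    /** A named source; a higher priority wins when several sources define a key. */
    static final class Source {
        final String name;
        final int priority;
        final Map<String, String> values;

        Source(String name, int priority, Map<String, String> values) {
            this.name = name;
            this.priority = priority;
            this.values = values;
        }
    }

    private final List<Source> sources = new ArrayList<>();

    ConfigSketch add(Source source) {
        sources.add(source);
        // Keep the highest-priority source at the front of the list.
        sources.sort((a, b) -> Integer.compare(b.priority, a.priority));
        return this;
    }

    /** Returns the value from the highest-priority source that defines the key. */
    Optional<String> get(String key) {
        for (Source source : sources) {
            String value = source.values.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        ConfigSketch config = new ConfigSketch()
                // Defaults bundled with the application binary (hypothetical keys).
                .add(new Source("bundled-defaults", 100,
                        Map.of("greeting.url", "http://localhost:8080", "greeting.timeout", "500")))
                // Values injected by the platform; a real application would read System.getenv().
                .add(new Source("platform-environment", 300,
                        Map.of("greeting.url", "http://greeting:8080")));

        System.out.println(config.get("greeting.url").orElse("<unset>"));     // overridden from outside
        System.out.println(config.get("greeting.timeout").orElse("<unset>")); // bundled default
    }
}
```

The application code only ever asks for a key. Whether the answer came from the bundled defaults or from the platform is decided by the ordering of the sources, which is what allows the same binary to run unchanged in different environments.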

Health Checks

Health checks determine whether a computing node (i.e. the application within a container) is alive and ready to perform work. Readiness matters when containers start up or roll over (e.g. on redeployment): during this time the cloud platform needs to ensure that no network traffic is routed to an instance before it is ready. Liveness describes the state of a running container. Can it still respond to requests? Did it get stuck, or did the process die? If the platform detects such a state, the computing node is discarded (terminated and shut down) and eventually replaced by a healthy instance.

Health checks are an essential contract with the orchestration framework and scheduler of the cloud platform. The check procedures are provided by the application (i.e. you decide how to respond to the liveness and readiness checks), and the platform uses them to continuously ensure the availability of your container.
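The contract can be pictured as a set of application-provided procedures whose results are combined into one answer for the platform. The following plain-JDK sketch is only an illustration of that idea, not the Microprofile Health API; the class `HealthSketch`, the check names, and the choice of HTTP 200/503 as the probe result are assumptions made for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

/** Illustrative only: application-defined checks combined into one probe result. */
public class HealthSketch {

    private final Map<String, BooleanSupplier> checks = new LinkedHashMap<>();

    HealthSketch register(String name, BooleanSupplier check) {
        checks.put(name, check);
        return this;
    }

    /** Runs every registered check; a check that throws is reported as down. */
    Map<String, Boolean> run() {
        Map<String, Boolean> results = new LinkedHashMap<>();
        checks.forEach((name, check) -> {
            boolean up;
            try {
                up = check.getAsBoolean();
            } catch (RuntimeException e) {
                up = false;
            }
            results.put(name, up);
        });
        return results;
    }

    /** The node counts as healthy only if every check passed. */
    boolean isUp() {
        return !run().containsValue(false);
    }

    /** Status code a probe endpoint could return (an assumption of this sketch). */
    int httpStatus() {
        return isUp() ? 200 : 503;
    }

    public static void main(String[] args) {
        HealthSketch health = new HealthSketch()
                .register("disk-space", () -> new java.io.File(".").getUsableSpace() > 0)
                // Simulates a dependency that cannot be reached.
                .register("database", () -> { throw new IllegalStateException("connection refused"); });

        System.out.println(health.run() + " -> HTTP " + health.httpStatus());
    }
}
```

The application decides what "healthy" means by choosing the checks; the platform only needs the combined outcome to decide whether to route traffic to the instance or replace it.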

Metrics

Metrics are used for service monitoring. The Microprofile metrics specification provides the contracts to expose application or runtime data that can be used for long-term and continuous monitoring of service performance. You can use it to provide long-term trend data for capacity planning and proactive discovery of issues. It includes a coherent model that defines metrics, an API to provide measurements from the application level, a reasonable set of out-of-the-box metrics for runtimes (e.g. relevant JVM data), and a REST interface through which monitoring agents retrieve the data.

Generally speaking, metrics provide the integration point with monitoring and analysis systems. They might guide scheduling decisions of the cloud platform (e.g. number of replicas and capacity), support operational aspects of your application, or enable A/B testing of features across deployments.
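As a rough picture of what "providing a measurement from the application level" involves, the plain-JDK sketch below keeps named counters, times a unit of work, and renders everything as text that a monitoring agent could scrape. It is not the Microprofile metrics API; the class `MetricsSketch`, the metric names, and the line-based export format are invented for this example.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentSkipListMap;
import java.util.concurrent.atomic.LongAdder;
import java.util.function.Supplier;

/** Illustrative only: named counters plus a simple timer, exported as text. */
public class MetricsSketch {

    // Sorted map so the export is stable; LongAdder keeps increments cheap under contention.
    private final Map<String, LongAdder> counters = new ConcurrentSkipListMap<>();

    void add(String name, long amount) {
        counters.computeIfAbsent(name, key -> new LongAdder()).add(amount);
    }

    void increment(String name) {
        add(name, 1);
    }

    /** Runs the work and records how often it ran and how long it took in total. */
    <T> T timed(String name, Supplier<T> work) {
        long start = System.nanoTime();
        try {
            return work.get();
        } finally {
            add(name + ".nanos", System.nanoTime() - start);
            increment(name + ".count");
        }
    }

    long value(String name) {
        LongAdder adder = counters.get(name);
        return adder == null ? 0 : adder.sum();
    }

    /** One "name value" line per metric, the kind of payload a REST endpoint could serve. */
    String export() {
        StringBuilder out = new StringBuilder();
        counters.forEach((name, adder) -> out.append(name).append(' ').append(adder.sum()).append('\n'));
        return out.toString();
    }

    public static void main(String[] args) {
        MetricsSketch metrics = new MetricsSketch();
        metrics.increment("orders.placed");
        metrics.increment("orders.placed");
        String result = metrics.timed("inventory.lookup", () -> "in stock");
        System.out.println(result);
        System.out.print(metrics.export());
    }
}
```

Because the counters only ever grow, a monitoring system can sample the export periodically and derive rates and trends from the differences between samples.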

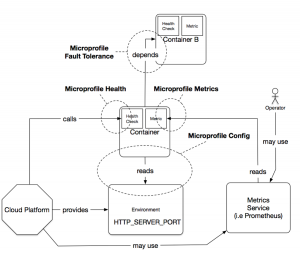

Fault Tolerance

Now we come to the fun part of distributed systems. It’s 2018, and the network still isn’t reliable (latency, partitioning, etc.). Yet moving to service-oriented architectures on cloud platforms effectively means you are building a distributed system with a lot of network communication. We can address this by building failure detection and handling capabilities into our application. The Microprofile Fault Tolerance specification sets out to allow this at the application level. It delivers a set of fault-handling primitives that you can use to improve failure handling within your application and between distributed services.

Where services interact with each other remotely, the Microprofile Fault Tolerance specification helps to deal with unexpected errors and planned unavailability (e.g. during rollover of deployments). It provides the means to protect your application from errors cascading through the system. The API contains constructs to insert timeout and retry logic, as well as more sophisticated patterns like circuit breakers and bulkheads.
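To illustrate two of the patterns named above, here is a hand-rolled plain-JDK sketch of a retry with fallback and a simple circuit breaker. It shows the behaviour these patterns aim for, not how the Microprofile Fault Tolerance API exposes them; the class `FaultToleranceSketch`, its thresholds, and the fallback values are assumptions chosen for this example.

```java
import java.util.function.Supplier;

/** Illustrative only: a retry helper and a minimal circuit breaker. */
public class FaultToleranceSketch {

    /** Tries the action up to maxAttempts times, then gives up and uses the fallback. */
    static <T> T retry(int maxAttempts, Supplier<T> action, Supplier<T> fallback) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                // A real implementation would log the failure and wait before the next attempt.
            }
        }
        return fallback.get();
    }

    /** Opens after a number of consecutive failures and then fails fast for a while. */
    static final class CircuitBreaker {
        private final int failureThreshold;
        private final long openNanos;
        private int consecutiveFailures;
        private boolean open;
        private long openedAt;

        CircuitBreaker(int failureThreshold, long openMillis) {
            this.failureThreshold = failureThreshold;
            this.openNanos = openMillis * 1_000_000L;
        }

        synchronized boolean isOpen() {
            return open && System.nanoTime() - openedAt < openNanos;
        }

        synchronized <T> T call(Supplier<T> action, Supplier<T> fallback) {
            if (isOpen()) {
                return fallback.get(); // fail fast, do not touch the struggling service
            }
            try {
                T result = action.get(); // circuit closed, or a trial call after the delay
                consecutiveFailures = 0;
                open = false;
                return result;
            } catch (RuntimeException e) {
                consecutiveFailures++;
                if (consecutiveFailures >= failureThreshold) {
                    open = true;
                    openedAt = System.nanoTime();
                }
                return fallback.get();
            }
        }
    }

    public static void main(String[] args) {
        CircuitBreaker breaker = new CircuitBreaker(2, 5_000);
        Supplier<String> unavailable = () -> { throw new IllegalStateException("service unavailable"); };

        for (int i = 0; i < 3; i++) {
            System.out.println(breaker.call(unavailable, () -> "cached response") + ", open=" + breaker.isOpen());
        }
        System.out.println(retry(3, unavailable, () -> "default response"));
    }
}
```

The circuit breaker is what stops errors from cascading: once the remote service is known to be failing, callers get an immediate fallback instead of piling more requests and waiting threads onto it.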

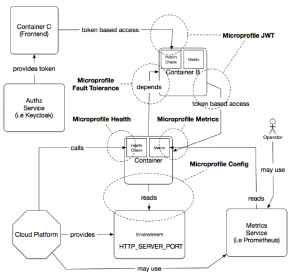

Token Based Security

The motivation for token-based security, and for the Microprofile JSON Web Token (JWT) specification, comes from the need to authenticate, authorize, and verify identities in service-oriented architectures. In these settings, security becomes a concern across client-to-service and service-to-service interactions. A simple way to describe a token-based approach to security is to think of an authority that vouches for claims and identities (e.g. a user ID with roles attached) and that issues a time-bound ticket granting access to specific services. When you request a service and the ticket is valid, access to the service is granted. All of this happens without going back to the identity management system and is based solely on the contents and validity of the ticket. Once the ticket becomes invalid, further access is denied and you are redirected to the identity management system to request a new ticket.

Token-based security, as supported by the Microprofile JWT specification, allows you to secure stateless service architectures. It’s based on self-contained tokens issued by an identity management system, like Keycloak. It’s often used with OpenID Connect (OIDC) authentication and leverages JSON Web Tokens underneath.
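The ticket analogy can be sketched with nothing but the JDK: an issuer signs a small payload with its private key, and a service later checks the signature, the expiry, and the required group using only the issuer's public key. This is a simplified stand-in, not a JWT implementation and not the Microprofile JWT API; the class `TicketSketch`, the `subject;groups;expiry` payload format, and the group names are invented for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;
import java.util.Arrays;
import java.util.Base64;

/** Illustrative only: a signed, time-bound ticket that services can check offline. */
public class TicketSketch {

    /** Key pair of the identity management system; services only need the public half. */
    static KeyPair newIssuerKeys() {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(2048);
            return generator.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Issuer side: signs "subject;groups;expiry" (made-up format, groups comma-separated). */
    static String issue(PrivateKey issuerKey, String subject, String groups, long expiresAtEpochSeconds) {
        try {
            byte[] payload = (subject + ";" + groups + ";" + expiresAtEpochSeconds)
                    .getBytes(StandardCharsets.UTF_8);
            Signature signer = Signature.getInstance("SHA256withRSA");
            signer.initSign(issuerKey);
            signer.update(payload);
            Base64.Encoder encoder = Base64.getUrlEncoder().withoutPadding();
            return encoder.encodeToString(payload) + "." + encoder.encodeToString(signer.sign());
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Service side: decides locally, without calling the issuer. Any problem means "deny". */
    static boolean allows(PublicKey issuerKey, String ticket, String requiredGroup, long nowEpochSeconds) {
        try {
            String[] parts = ticket.split("\\.");
            if (parts.length != 2) {
                return false;
            }
            byte[] payload = Base64.getUrlDecoder().decode(parts[0]);
            byte[] signature = Base64.getUrlDecoder().decode(parts[1]);

            Signature verifier = Signature.getInstance("SHA256withRSA");
            verifier.initVerify(issuerKey);
            verifier.update(payload);
            if (!verifier.verify(signature)) {
                return false; // payload was not signed by the trusted issuer
            }

            String[] fields = new String(payload, StandardCharsets.UTF_8).split(";");
            if (fields.length != 3) {
                return false;
            }
            boolean notExpired = nowEpochSeconds < Long.parseLong(fields[2]);
            boolean hasGroup = Arrays.asList(fields[1].split(",")).contains(requiredGroup);
            return notExpired && hasGroup;
        } catch (GeneralSecurityException | IllegalArgumentException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        KeyPair issuer = newIssuerKeys();
        long now = System.currentTimeMillis() / 1000;
        String ticket = issue(issuer.getPrivate(), "alice", "user,admin", now + 300);

        System.out.println(allows(issuer.getPublic(), ticket, "admin", now));       // valid ticket
        System.out.println(allows(issuer.getPublic(), ticket, "admin", now + 600)); // after expiry
    }
}
```

Because everything needed for the decision travels inside the signed ticket, each service stays stateless: it needs the issuer's public key and a clock, but no session store and no round trip to the identity management system.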

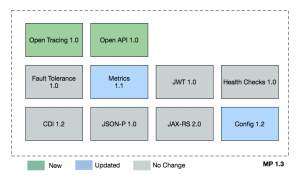

Future Microprofile Specifications

The Microprofile community innovates steadily and keeps adding specifications to address the needs of cloud-native applications. In 1.3 we can expect specifications that address distributed tracing and service contract descriptions.

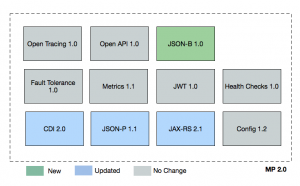

Microprofile 2.0, on the other hand, will focus on integrating the most recent Java EE 8 APIs to enhance the baseline of the other Microprofile APIs. Among these changes are updates to the CDI, JAX-RS, and JSON-P APIs, as well as the inclusion of JSON-B, a standard binding layer for converting Java objects to and from JSON messages.

Contribute and Collaborate

Microprofile is an open and vibrant community hosted under the Eclipse Foundation. This, together with the decision to move future Java EE specification work under the Eclipse Foundation as well, provides an excellent opportunity to give feedback, contribute, and collaborate.

Eclipse Microprofile and EE4J projects

- https://projects.eclipse.org/proposals/eclipse-microprofile

- https://projects.eclipse.org/projects/ee4j/

Microprofile 1.2 Specifications

- BOMs and Maven Coordinates

- https://github.com/eclipse/microprofile-metrics

- https://github.com/eclipse/microprofile-health

- https://github.com/eclipse/microprofile-jwt-auth

- https://github.com/eclipse/microprofile-config

Discussion Groups and Mailing lists

To get started implementing Microprofile applications and microservices, try the WildFly Swarm runtime in Red Hat OpenShift Application Runtimes. Using the Launch service at developers.redhat.com/launch, you can get complete, buildable code, runnable in the cloud on Red Hat OpenShift Online, that implements microservices patterns such as health checks, circuit breakers, and externalized configuration.

Last updated: February 6, 2024