The release of OpenShift Container Platform (OCP) 3.6 adds support for the vSphere cloud provider, which enables dynamic provisioning of vSphere VMDKs as persistent volumes for container workloads. Storage presented to vSphere virtual machines as a VMDK supports only the ReadWriteOnce access mode.

In the OCP 3.6 on vSphere reference architecture, much of this process is automated and can be implemented easily.

Virtual Machine Disks (VMDKs) are the virtual disks attached to virtual machines. Configuring the OCP cluster for vSphere cloud provider support requires:

- Master Node Configuration

- Application Node Configuration

- vCenter or vSphere Requirements

The Kubernetes documentation covers the vSphere requirements in detail.

First, both the masters and the nodes need the following parameters set and their services restarted:

- cloud-provider=vsphere

- cloud-config=path_to_config.conf

Master configuration

    $ vi /etc/origin/master/master-config.yaml

    kubernetesMasterConfig:
      apiServerArguments:
        cloud-config:
        - /etc/vsphere/vsphere.conf
        cloud-provider:
        - vsphere
      controllerArguments:
        cloud-config:
        - /etc/vsphere/vsphere.conf
        cloud-provider:
        - vsphere

    $ sudo systemctl restart atomic-openshift-master.service

Node configuration

    $ vi /etc/origin/node/node-config.yaml

    kubeletArguments:
      cloud-config:
      - /etc/vsphere/vsphere.conf
      cloud-provider:
      - vsphere

    $ sudo systemctl restart atomic-openshift-node.service
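After editing, a quick text search can confirm that both arguments made it into a configuration file. The following is a minimal sketch, not an official tool: check_cloud_provider is a hypothetical helper name, it greps rather than parses YAML, and it assumes each value sits on the list item directly below its key, as in the listings above. On a master it passes as soon as one of the two argument sections matches.

```shell
# check_cloud_provider is a hypothetical helper (not part of OpenShift).
# It does a plain text search and does not parse YAML.
check_cloud_provider() {
    config_file=$1
    cloud_conf=${2:-/etc/vsphere/vsphere.conf}

    # The value is expected on the list item directly below each key.
    grep -A1 'cloud-provider:' "$config_file" | grep -q -- '- vsphere' || {
        echo "cloud-provider=vsphere not found in $config_file" >&2
        return 1
    }
    grep -A1 'cloud-config:' "$config_file" | grep -qF -- "- $cloud_conf" || {
        echo "cloud-config=$cloud_conf not found in $config_file" >&2
        return 1
    }
    echo "$config_file: vsphere cloud provider configured"
}
```

For example, `check_cloud_provider /etc/origin/node/node-config.yaml` could be run on each node before restarting the service.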

Next, the vsphere.conf file should loosely resemble this:

    $ cat /etc/vsphere/vsphere.conf

    [Global]
    user = "administrator@vsphere.local"
    password = "vcenter_password"
    server = "10.*.*.25"
    port = 443
    insecure-flag = 1
    datacenter = Boston
    datastore = ose3-vmware-prod
    working-dir = /Boston/vm/ocp36/

    [Disk]
    scsicontrollertype = pvscsi

These variables are described in more detail in the Kubernetes documentation. The working-dir variable is the vSphere folder that houses the OpenShift guest machines. In vSphere, the folder path takes the form /Datacenter/vm/foldername.
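As a rough sanity check, the file can be scanned for the keys used in the sample above, and the working-dir value can be built from its two parts. This is only a sketch: check_vsphere_conf and vsphere_working_dir are hypothetical helper names, and the check confirms that keys are present without validating their values or contacting vCenter.

```shell
# Hypothetical helper: confirm each key from the sample vsphere.conf exists.
check_vsphere_conf() {
    conf_file=${1:-/etc/vsphere/vsphere.conf}
    missing=0
    for key in user password server port insecure-flag datacenter \
               datastore working-dir scsicontrollertype; do
        # Match "key = value" at the start of a line, allowing leading spaces.
        if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$conf_file"; then
            echo "missing key: $key" >&2
            missing=$((missing + 1))
        fi
    done
    [ "$missing" -eq 0 ]
}

# Hypothetical helper: build the working-dir value from a datacenter name
# and a VM folder name, following the /Datacenter/vm/foldername form.
vsphere_working_dir() {
    printf '/%s/vm/%s/\n' "$1" "$2"
}
```

With the values from this article, `vsphere_working_dir Boston ocp36` prints `/Boston/vm/ocp36/`.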

Using GOVC

The govc tool is a Go-based command-line application for interacting with vSphere and vCenter.

The documentation for govc is on GitHub:

https://github.com/vmware/govmomi/tree/master/govc

First, download govc, then export the variables that govc needs to query vCenter:

    $ curl -LO https://github.com/vmware/govmomi/releases/download/v0.15.0/govc_linux_amd64.gz
    $ gunzip govc_linux_amd64.gz
    $ chmod +x govc_linux_amd64
    $ cp govc_linux_amd64 /usr/bin/govc

    $ export GOVC_URL='vCenter IP OR FQDN'
    $ export GOVC_USERNAME='administrator@vsphere.local'
    $ export GOVC_PASSWORD='vCenter Password'
    $ export GOVC_INSECURE=1

    $ govc ls
    /Boston/vm
    /Boston/network
    /Boston/host
    /Boston/datastore

    $ govc ls /Boston/vm
    /Boston/vm/ocp36

    $ govc ls /Boston/vm/ocp36
    /Boston/vm/ocp36/haproxy-0
    /Boston/vm/ocp36/app-0
    /Boston/vm/ocp36/infra-0
    /Boston/vm/ocp36/haproxy-1
    /Boston/vm/ocp36/master-0
    /Boston/vm/ocp36/nfs-0
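If a govc query fails, a common cause is a connection variable that was never exported in the current shell. The sketch below reports which of the three required variables is empty; check_govc_env is a hypothetical helper name, and GOVC_INSECURE is left out because it is optional.

```shell
# check_govc_env is a hypothetical helper that reports any govc connection
# variable that is unset or empty before a query is attempted.
check_govc_env() {
    status=0
    for name in GOVC_URL GOVC_USERNAME GOVC_PASSWORD; do
        # Indirect lookup of the variable named in $name (POSIX-compatible).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name is not set" >&2
            status=1
        fi
    done
    return "$status"
}
```

It could be chained in front of a query, for example `check_govc_env && govc ls`.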

vSphere Pre-requisites

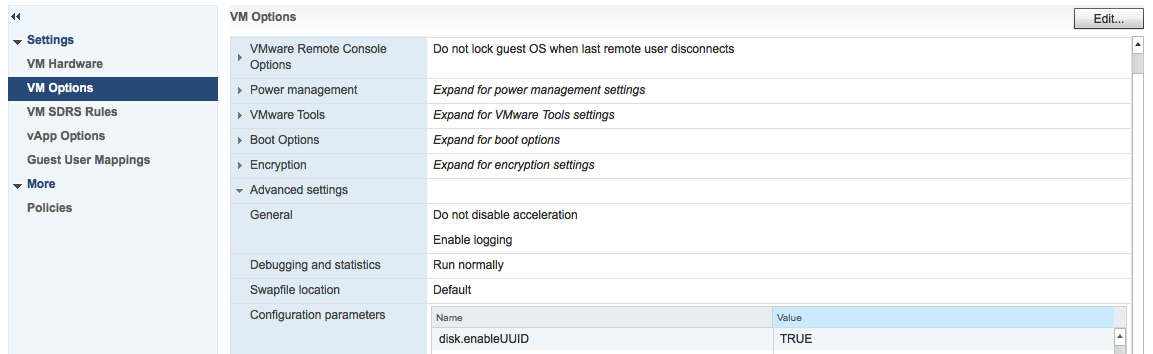

- disk.enableUUID

The disk.enableUUID parameter must be set on all virtual machines in the OCP cluster. This option ensures that the VMDK always presents a consistent UUID to the VM, which allows the new disk to be mounted properly.

The option can be set on the template or on each VM in the vCenter client. If it is set on the template, that template is then used to deploy the OpenShift VMs.

Alternatively, govc can be used to set it:

    $ for VM in $(govc ls /Boston/vm/ocp36/); do govc vm.change -e="disk.enableUUID=1" -vm="$VM"; done
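For larger folders it can help to see which VMs were changed and which were not. The following sketch wraps the same two govc commands in a function that reports per-VM results; enable_disk_uuid is a hypothetical name, and the function assumes govc is on the PATH, the GOVC_* variables are exported, and inventory paths contain no spaces.

```shell
# enable_disk_uuid is a hypothetical wrapper around "govc ls" and
# "govc vm.change". It returns non-zero if any VM could not be updated.
enable_disk_uuid() {
    folder=$1
    failed=0
    # Stop early if the folder cannot be listed.
    vms=$(govc ls "$folder") || return 1
    # One inventory path per line; paths with spaces are not handled here.
    for vm in $vms; do
        if govc vm.change -e="disk.enableUUID=1" -vm="$vm"; then
            echo "set disk.enableUUID on $vm"
        else
            echo "failed to set disk.enableUUID on $vm" >&2
            failed=$((failed + 1))
        fi
    done
    [ "$failed" -eq 0 ]
}
```

A call such as `enable_disk_uuid /Boston/vm/ocp36` would then cover every VM in the folder used in this article.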

Lastly, with the cloud provider configuration in place, a new storage class can be created to provision VMDKs.

Storage Classes and VMDK Provisioning

The datastore storage class can be created with the following yaml file:

    $ vi cloud-provider-storage-class.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: "ose3-vmware-prod"
    provisioner: kubernetes.io/vsphere-volume
    parameters:
      diskformat: zeroedthick
      datastore: "ose3-vmware-prod"

    $ oc create -f cloud-provider-storage-class.yaml

Now that the StorageClass object has been created, the oc command can be used to verify that it exists.

    $ oc get sc
    NAME               TYPE
    ose3-vmware-prod   kubernetes.io/vsphere-volume

    $ oc describe sc ose3-vmware-prod
    Name:            ose3-vmware-prod
    IsDefaultClass:  No
    Annotations:     <none>
    Provisioner:     kubernetes.io/vsphere-volume
    Parameters:      datastore=ose3-vmware-prod,diskformat=zeroedthick
    Events:          <none>
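When more than one datastore needs a class, the manifest can be generated instead of edited by hand. The sketch below prints the same StorageClass shown earlier with the name, datastore, and disk format filled in; render_storage_class is a hypothetical helper name, and the accepted disk formats (thin, zeroedthick, eagerzeroedthick) are the ones the vSphere provisioner is documented to support.

```shell
# render_storage_class is a hypothetical helper that prints a StorageClass
# manifest for the vSphere provisioner.
# Arguments: class name, datastore, disk format (defaults to zeroedthick).
render_storage_class() {
    name=$1
    datastore=$2
    diskformat=${3:-zeroedthick}

    # Reject disk formats the provisioner does not document.
    case "$diskformat" in
        thin|zeroedthick|eagerzeroedthick) ;;
        *) echo "unsupported diskformat: $diskformat" >&2; return 1 ;;
    esac

    cat <<EOF
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: "$name"
provisioner: kubernetes.io/vsphere-volume
parameters:
  diskformat: $diskformat
  datastore: "$datastore"
EOF
}
```

The output can be piped straight to oc, for example `render_storage_class ose3-vmware-prod ose3-vmware-prod | oc create -f -`.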

OpenShift can now dynamically provision VMDKs for persistent container storage within the OpenShift environment.

    $ vi storage-class-vmware-claim.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ose3-vmware-prod
      annotations:
        volume.beta.kubernetes.io/storage-class: ose3-vmware-prod
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi

    $ oc create -f storage-class-vmware-claim.yaml

$ oc describe pvc ose3-vmware-prod

Name: ose3-vmware-prod

Namespace: default

StorageClass: ose3-vmware-prod

Status: Bound

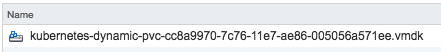

Volume: pvc-cc8a9970-7c76-11e7-ae86-005056a571ee

Labels: <none>

Annotations: pv.kubernetes.io/bind-completed=yes

pv.kubernetes.io/bound-by-controller=yes

volume.beta.kubernetes.io/storage-class=vmware-datastore-ssd

volume.beta.kubernetes.io/storage-provisioner=kubernetes.io/vsphere-volume

Capacity: 2Gi

Access Modes: RWO

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

19s 19s 1 persistentvolume-controller Normal ProvisioningSucceeded Successfully provisioned volume pvc-cc8a9970-7c76-11e7-ae86-005056a571ee using kubernetes.io/vsphere-volume

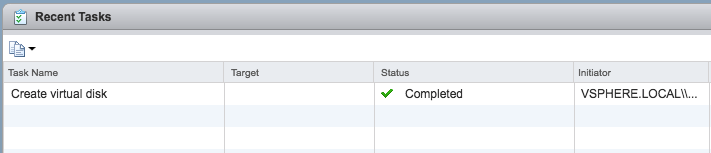
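Provisioning takes a few seconds, so a script that creates a claim may need to wait before using it. The following is a minimal polling sketch: wait_for_pvc_bound is a hypothetical helper name, the two-second interval and 60-second default timeout are arbitrary choices, and it assumes oc is already logged in to the right project.

```shell
# wait_for_pvc_bound is a hypothetical helper. It polls the claim's phase
# with oc until it reports Bound or the timeout (in seconds) expires.
wait_for_pvc_bound() {
    claim=$1
    timeout=${2:-60}
    elapsed=0
    phase=""
    while [ "$elapsed" -lt "$timeout" ]; do
        # An oc failure is treated the same as "not bound yet".
        phase=$(oc get pvc "$claim" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
        if [ "$phase" = "Bound" ]; then
            echo "$claim is Bound"
            return 0
        fi
        sleep 2
        elapsed=$((elapsed + 2))
    done
    echo "$claim not Bound after ${timeout}s (last phase: ${phase:-unknown})" >&2
    return 1
}
```

For the claim in this article, that would be `wait_for_pvc_bound ose3-vmware-prod`.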

In vCenter, this triggers a couple of changes:

First, the new disk is created.

Second, the disk is ready to be attached to a VM and consumed by a pod running on it.

Lastly, while datastores are generally accessible through shared storage across a vCenter cluster, each VMDK is attached to one specific virtual machine at a time. This explains the ReadWriteOnce limitation of the persistent storage.
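To see the disk attached, a pod has to reference the claim. The sketch below prints a minimal pod manifest that mounts an existing claim; render_pod_for_claim is a hypothetical helper name, and the default image, container name, and /data mount path are placeholders chosen for illustration, not values from this environment.

```shell
# render_pod_for_claim is a hypothetical helper that prints a Pod manifest
# mounting an existing claim. The image and mount path are placeholders.
render_pod_for_claim() {
    claim=$1
    image=${2:-registry.example.com/myapp:latest}
    cat <<EOF
kind: Pod
apiVersion: v1
metadata:
  name: ${claim}-test
spec:
  containers:
  - name: app
    image: $image
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: $claim
EOF
}
```

Piping the result to oc, as in `render_pod_for_claim ose3-vmware-prod | oc create -f -`, would schedule the pod, after which the VMDK is attached to the VM where the pod lands.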

Last updated: November 2, 2023