Back story

As virtualization was beginning its march to prominence, we saw a phased approach to adoption. This is common with any sort of game-changing technology. Let's take electric cars as an example. Early adopters are willing to make certain trade-offs (short range) to gain new capabilities (saving money at the gas station).

In the meantime, engineers are off in the lab working hard to broaden the potential consumer base for electric cars by increasing range, decreasing charging cycle times, and improving performance. Taken in aggregate, those changes are meant to address objections to the first cut of the technology.

Virtualization is to Linux containers is to...

Much like the electric car, Linux container technology is working its way through some of the same early adoption phases that virtualization technology underwent. We have an easy-to-use implementation of Linux containers via the Docker tooling, so the on-ramp is fairly painless. If you recall the early days of virtualization (before CPUs added hardware assist), performance was fairly slow. Things have changed significantly since then, with RHEL and KVM performance leadership proven through many industry benchmarks. Hardware has gotten faster, software has gotten smarter, and that has allowed virtualization to address many objections. Virtualization grew from low-risk workloads such as dev and test, to more difficult (but still out of the critical path) disaster-recovery site consolidation, to now running systems-of-record, including some of the most mission-critical workloads in the enterprise. We expect this same pattern from Linux container technology, and that’s why we’re working hard to make sure it is not only secure, but also that it performs and scales well.

What more can we do?

As mentioned in previous blog posts, the Performance Engineering Group at Red Hat is busy getting all sorts of workloads running in containers. Across all phases of the adoption cycle, we’re looking far ahead to ensure we've mine-swept major issues on our customers' behalf.

We're readying the path to systems-of-record in containers.

By solving for the toughest workloads, we indirectly address the less difficult ones. This effort provides advanced capabilities, improved performance, and scalability to all, whether scaling vertically or horizontally.

The Bake-off

You may have seen performance results from micro-benchmarks and early candidate apps for virtualization (web apps). We wanted to share results from some of the toughest workloads... the ones that can consume all of a system’s cores, memory, and bandwidth, and make storage beg for mercy.

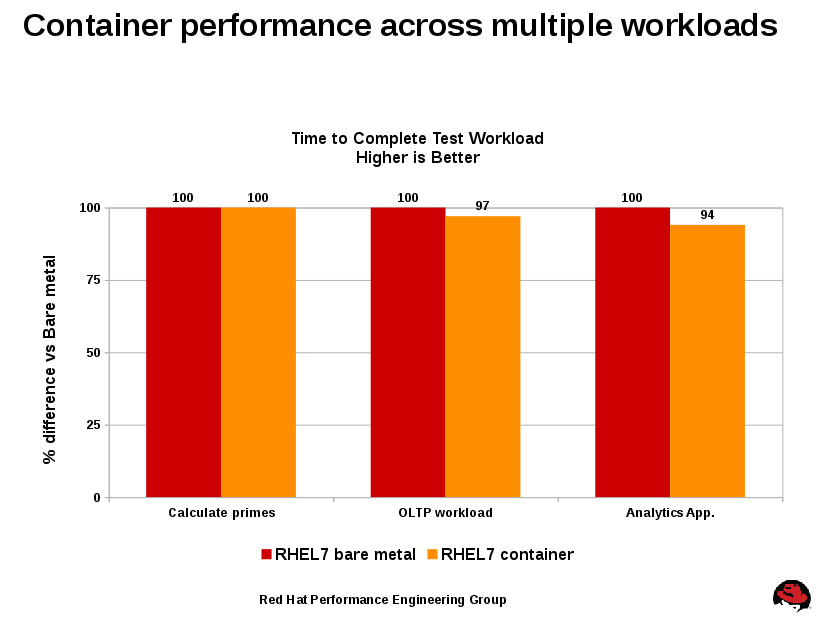

Here's a normalized comparison of three workloads that are particularly demanding on one or more subsystems. Bare metal is normalized to 100%, then compared to containerized results.

- Calculate primes: this is sysbench cpu, and the code for it is here. This sits on the CPU. I like to call this "straight line wind" performance, in that it is completely bound by individual CPU core performance.

- The fact that this is dead-on with bare metal indicates that there is no measurable overhead for the "straight line wind" mathematical workloads found in the financial services or high performance computing industries.

- OLTP workload: this is a well-known transactional database. Stresses a number of subsystems, including kernel memory management, block I/O and CPU.

- This was frankly the most impressive result of the bunch: only 3% off bare metal is a testament to the Linux kernel namespace implementation. This required volume storage, passed through to the container.

- Analytics App: this is a well-known business analytics application.

- This workload runs for half a day, and is particularly brutal on the storage subsystem. This again required passthrough volume storage, which is no surprise given that the data set and total I/O are hundreds of terabytes.
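The normalization behind the comparison above (bare metal set to 100%, containerized results expressed relative to it) can be sketched roughly as follows. This is only an illustration: the function name `normalize_to_baseline` and the raw numbers are hypothetical, chosen so that the result mirrors the "3% off bare metal" figure reported for the OLTP workload. The actual raw measurements are not given in this post.

```python
def normalize_to_baseline(baseline, measured, higher_is_better=True):
    """Express a measured result as a percentage of the baseline result.

    baseline: bare metal measurement (treated as 100%).
    measured: containerized measurement in the same units.
    higher_is_better: True for throughput-style metrics (e.g. transactions
        per second), False for elapsed-time metrics where lower is better.
    """
    if baseline <= 0 or measured <= 0:
        raise ValueError("measurements must be positive")
    if higher_is_better:
        return 100.0 * measured / baseline
    # For elapsed time, a longer run means lower relative performance.
    return 100.0 * baseline / measured


if __name__ == "__main__":
    # Hypothetical raw numbers, for illustration only (not from the tests).
    bare_metal_tps = 1000.0
    container_tps = 970.0
    relative = normalize_to_baseline(bare_metal_tps, container_tps)
    print(f"container result: {relative:.1f}% of bare metal")
```

The `higher_is_better` switch is there because a throughput metric and an elapsed-time metric have to be inverted relative to each other before they can share one "percent of bare metal" axis.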

These tests were run by our highly skilled performance engineers exercising precise attention to detail (no corners cut) to provide the most up-to-date data possible to our engineering teams. For the record, the same equipment was used in the bare metal and container cases. The same storage devices were used in the bare metal case, then passed into containers for those permutations.

In summary...

Red Hat’s track record of hammering open source bits into secure, performant and reliable enterprise software is unmatched. Applying that playbook to containers just makes sense, and the early performance data proves this a worthy goal.

Last updated: February 26, 2024