As developers, we often find it challenging to test certain real-world use cases and verify how our application behaves when network connectivity or performance problems occur. With limited resources and permissions, we sometimes can't reproduce issues that we face in QA or production environments, which makes debugging and fixing them cumbersome. In this post, we'll take a look at how to use containers locally to simulate the impact of network latency on a distributed database cluster.

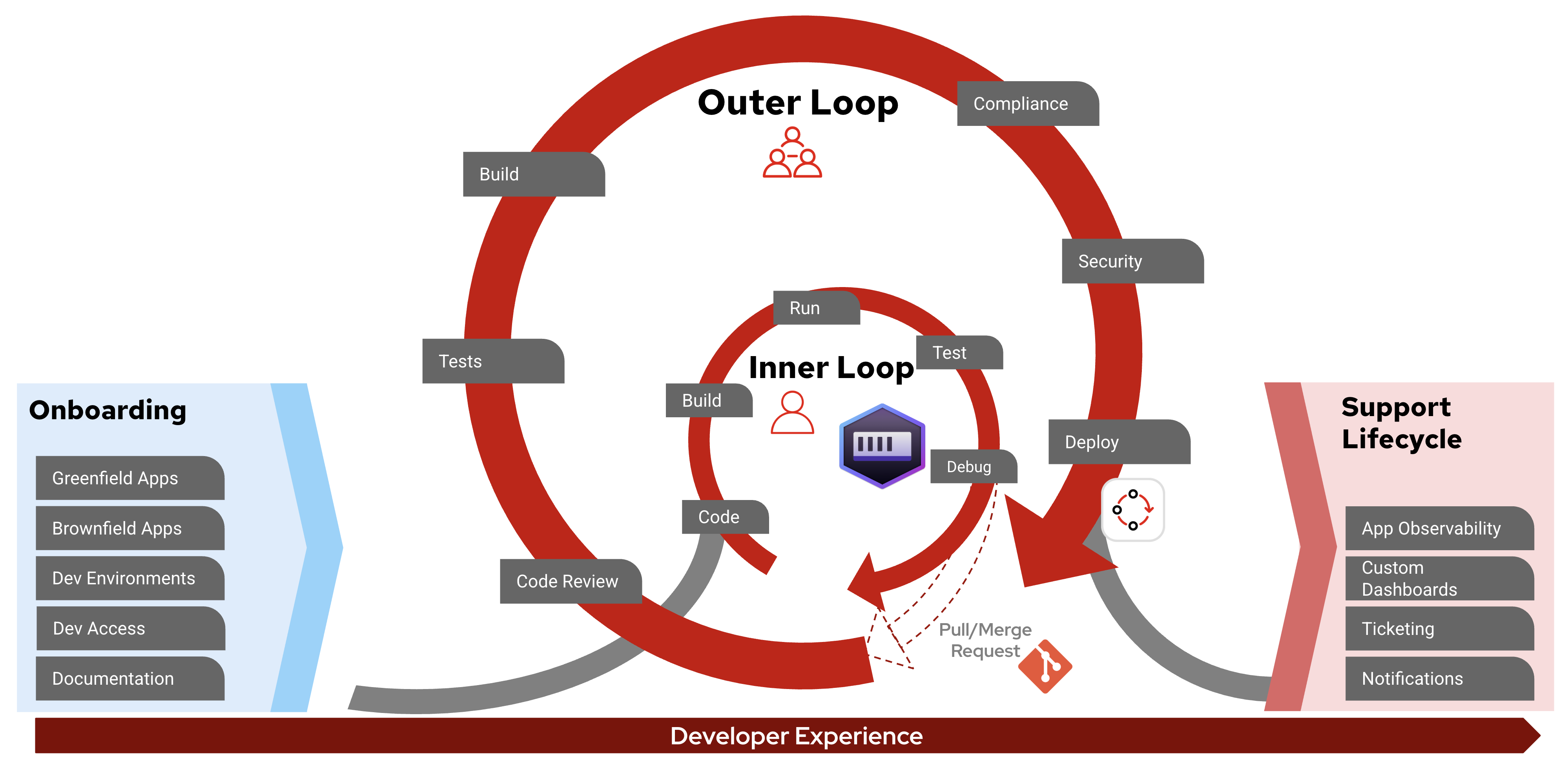

Generally, it's a good idea to use containers in a local environment—the developer’s inner loop, shown in Figure 1—to test how the application works in a production-like environment in certain situations (for example, limited resource availability or network issues).

If you have a Linux host, you can use Podman directly to spin up containers. On Mac or Windows, you'll need Podman Desktop, which creates a Linux virtual machine (VM) under the hood to run the containers.

Before trying the following commands, read the Prepare Podman host section for guidance on how to prepare the host to load the required kernel module.

Add delay to an interface

Use Linux traffic control (tc) and NetEm to add delays or simulate other network issues on an interface. The tc operations require the NET_ADMIN capability to be added to the container when it's created; otherwise, they fail with RTNETLINK answers: Operation not permitted. See details about using NetEm.
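NetEm can emulate more than a fixed delay. As a rough sketch, the helper below only prints a few tc invocations (it does not run them) for a chosen interface. The function name netem_examples and the jitter and loss values are illustrative assumptions, not settings used elsewhere in this post.

```shell
#!/bin/sh
# Print (do not run) tc commands for a few NetEm settings on one interface.
# "replace" adds the qdisc if it is missing or updates it if it already exists.
# The values below are illustrative only.
netem_examples() {
  dev="${1:-eth0}"
  echo "tc qdisc replace dev $dev root netem delay 50ms"          # fixed delay
  echo "tc qdisc replace dev $dev root netem delay 50ms 10ms"     # delay with 10ms jitter
  echo "tc qdisc replace dev $dev root netem loss 1%"             # random packet loss
  echo "tc qdisc replace dev $dev root netem delay 50ms loss 1%"  # delay and loss combined
}

# Usage: netem_examples lo
# Then copy one of the printed commands and run it as root inside the container.
```

Each printed command replaces the previous NetEm setting on the interface, so only the last one you run stays in effect.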

Install tc

Install the tc tool within the container. We can build a whole new image with a Containerfile or simply add the installation steps to the container's entry point if its user is root or can use sudo, like this:

On a Fedora or Red Hat Enterprise Linux-based image:

dnf update -y && dnf install -y iproute-tc

On a Debian-based image:

apt update && apt-get install -y iproute2

Use tc

A container running locally usually has two interfaces: lo and eth0.

Add a delay to the lo interface to have an impact on a port published to the host. Run as root inside the container:

tc qdisc add dev lo root netem delay 50ms

Use interface eth0 to add delay between containers attached to the same internal network:

tc qdisc add dev eth0 root netem delay 50ms

Additional commands

Check status for all interfaces:

tc qdisc show

Remove the configured delay:

tc qdisc del dev lo root

Try with PostgreSQL

Start the container with published database port:

podman run -d --name mypostgres --cap-add NET_ADMIN -p 5432:5432 -e POSTGRES_PASSWORD=secret docker.io/library/postgres:17.4

Check that a SELECT is quick (less than 1 millisecond) by default. The following commands enable \timing, so psql prints the execution time.

If you have psql installed on your laptop:

PGPASSWORD=secret psql -h localhost -p 5432 -U postgres postgres -c "\timing" -c "SELECT 1"

Or use psql within the container:

podman exec -it mypostgres sh -c 'psql -h 127.0.0.1 -p 5432 -U postgres postgres -c "\timing" -c "SELECT 1"'

Let's install tc and add delay to the lo interface:

podman exec -it mypostgres sh -c 'apt update && apt-get install -y iproute2 && tc qdisc add dev lo root netem delay 50ms'

Run SELECT 1 again. It should report ~100 millisecond execution time, because the 50-millisecond delay applies to both the request and the response.
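To check the result in a script rather than by eye, one option is to parse the "Time:" line that psql prints when \timing is on. The sketch below assumes that output format; the helper names query_time_ms and assert_min_latency are made up for this example.

```shell
#!/bin/sh
# Read psql output on stdin and print the millisecond value of the first
# "Time: <n> ms" line (printed by psql when \timing is enabled).
query_time_ms() {
  awk '/^Time:/ { print $2; exit }'
}

# Succeed (exit status 0) when the measured time is at least the expected
# minimum. Both arguments are in milliseconds.
assert_min_latency() {
  awk -v m="$1" -v lo="$2" 'BEGIN { exit !(m + 0 >= lo + 0) }'
}

# Usage (assumes the mypostgres container from above is running):
#   ms=$(podman exec mypostgres sh -c \
#     'psql -h 127.0.0.1 -p 5432 -U postgres postgres -c "\timing" -c "SELECT 1"' \
#     | query_time_ms)
#   assert_min_latency "$ms" 100 && echo "delay is active: ${ms} ms"
```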

Try 2 containers on the same network

Run a container with a 50-millisecond delay on eth0 and ping it from another container on the same internal network:

# Create container network

podman network create mynetwork

# Start container on custom network

podman run -d --name fedora --net mynetwork --cap-add NET_ADMIN fedora:42 sh -c 'dnf install -y iproute-tc && tc qdisc add dev eth0 root netem delay 50ms && sleep infinity'

# Ping from another container

podman run --rm -it --name ping --net mynetwork fedora:42 sh -c 'dnf install iputils -y && ping fedora'

Expect to see the added ~50 millisecond ping time.
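If you want a single number instead of watching the ping output scroll by, you can extract the average from the summary line that ping prints when it finishes. This is a minimal sketch that assumes the usual "rtt min/avg/max/mdev = ..." summary format; ping_avg_ms is a hypothetical helper name.

```shell
#!/bin/sh
# Read ping output on stdin and print the average round-trip time in ms.
# The summary line looks like:
#   rtt min/avg/max/mdev = 50.123/50.456/50.789/0.210 ms
# Splitting on "/" puts the average in the fifth field.
ping_avg_ms() {
  awk -F'/' '/^(rtt|round-trip)/ { print $5; exit }'
}

# Usage (assumes the "fedora" container and "mynetwork" from above exist;
# -c 5 makes ping stop after five packets so the summary is printed):
#   podman run --rm --net mynetwork fedora:42 \
#     sh -c 'dnf install -y iputils >/dev/null && ping -c 5 fedora' | ping_avg_ms
```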

Try a whole YugabyteDB cluster

YugabyteDB is a distributed PostgreSQL-compatible database. See the steps in this script for how to create a whole database cluster in containers, simulating a five-node database distributed across two regions or data centers with network latency in between:

- Region-A has db-node1-2. Region-B has db-node3-5 with network latency.
- Ports of db-node1 are published to host, to connect with psql or a test application.
- SELECT is quick as there is no latency set for db-node1.
- INSERT is slow because we enforced replication to all 5 nodes including the ones "in the other region."
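The linked script is the reference for the actual setup. Purely as an illustration of the idea, the sketch below prints the commands that would add a delay to the Region-B nodes. It assumes the containers are named db-node3 to db-node5, were started with --cap-add NET_ADMIN, have tc installed, and use eth0 as their network interface. The function name and the default 50ms value are hypothetical.

```shell
#!/bin/sh
# Print (do not run) one podman command per Region-B node that would add
# a NetEm delay to that node's eth0 interface.
# Assumptions: container names db-node3..db-node5, tc available inside them,
# NET_ADMIN granted at creation time. The delay value is illustrative.
region_b_delay_cmds() {
  delay="${1:-50ms}"
  for n in 3 4 5; do
    echo "podman exec db-node$n tc qdisc add dev eth0 root netem delay $delay"
  done
}

# Usage: region_b_delay_cmds 30ms
# Review the printed commands, then run them once the cluster is up.
```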

Original use case

Here is a quick note about a real-world problem we managed to replicate and resolve using local containers as explained in the previous section. We saw significant performance degradation with a Spring Boot application caused by longer than expected execution time for inserting hundreds of rows in a database.

Creating a YugabyteDB cluster in containers, with network delays matching the production environment, made it possible to do an in-depth analysis and debug on our local laptops. The tools available in the inner loop accelerated the investigation and gave developers a short feedback loop for trying different approaches to fix the root cause of the problem.

In this particular case, we found that enabling batch inserts alone did not improve performance, due to the distributed nature of the database. We also had to add reWriteBatchedInserts=true to our PostgreSQL JDBC connection string so that the driver merges INSERT statements.
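For reference, the parameter goes into the query string of the JDBC URL. The small helper below is only a sketch of how the resulting URL is formed; the function name, host, port, and database name are hypothetical and not taken from our application.

```shell
#!/bin/sh
# Append reWriteBatchedInserts=true to a JDBC URL, choosing "?" or "&"
# depending on whether the URL already carries query parameters.
with_rewrite_batched_inserts() {
  url="$1"
  case "$url" in
    *reWriteBatchedInserts=*) echo "$url" ;;           # already set, leave untouched
    *\?*) echo "${url}&reWriteBatchedInserts=true" ;;  # URL already has parameters
    *)    echo "${url}?reWriteBatchedInserts=true" ;;  # first parameter
  esac
}

# Usage (hypothetical host, port, and database):
#   with_rewrite_batched_inserts "jdbc:postgresql://localhost:5433/mydb"
```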

Using a Pod instead of a single container

If you can't install the tc tool directly in the main container (for example, if you're using Red Hat Universal Base Image without a subscription attached), you can run the tc command in another container attached to the same container network namespace.

Podman supports the concept of Pods for such purposes, similar to Kubernetes. Containers in the same Pod share the same network interface, so the network delay set in one container has an impact on the whole Pod.

For example, add latency to a published port:

# Create a Pod with a published port

podman pod create -p 5432:5432 mypod

# Run a temporary container in the Pod having the "tc" tool

podman run --pod mypod -it --rm --cap-add NET_ADMIN fedora:42 sh -c 'dnf install -y iproute-tc && tc qdisc add dev lo root netem delay 50ms'

# Run the main container in the Pod

podman run --pod mypod -d -e POSTGRES_PASSWORD=secret postgres:17.4

# Verify increased query time with "psql"

PGPASSWORD=secret psql -h localhost -p 5432 -U postgres postgres -c "\timing" -c "SELECT 1"

Similarly, increase ping time between containers:

# Create a Pod attached to a network

podman pod create --network mynetwork mypod

# Run a temporary container in the Pod having the "tc" tool

podman run --pod mypod -it --rm --cap-add NET_ADMIN fedora:42 sh -c 'dnf install -y iproute-tc && tc qdisc add dev eth0 root netem delay 50ms'

# Run the main container in the Pod

podman run --pod mypod -d redhat/ubi9 sh -c 'sleep infinity'

# Hostname to ping is the Pod's name in this case

podman run --rm -it --name ping --net mynetwork fedora:42 sh -c 'dnf install iputils -y && ping mypod'

Prepare Podman host

The preceding tc qdisc commands require the sch_netem Network Emulator kernel module to add delay to network interfaces. It's common to run into the error Specified qdisc not found if this kernel module is not available on your host. As the kernel is shared between containers, you need to enable the kernel module on your Linux host or within the Linux VM on Mac or Windows.
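The two tc errors mentioned in this post have different causes, so it can help to tell them apart quickly. The following sketch maps each message to its likely cause. The function name tc_error_hint is made up, and the matched strings follow the wording quoted in this post; other iproute2 or kernel versions may phrase them differently.

```shell
#!/bin/sh
# Map a tc error message (passed as the first argument) to a likely cause.
# The patterns follow the error texts quoted in this post.
tc_error_hint() {
  case "$1" in
    *"Operation not permitted"*)
      echo "missing capability: recreate the container with --cap-add NET_ADMIN" ;;
    *"Specified qdisc"*)
      echo "missing kernel module: enable sch_netem on the host or in the Podman VM" ;;
    *)
      echo "unrecognized tc error" ;;
  esac
}

# Usage (inside the container, as root):
#   err=$(tc qdisc add dev lo root netem delay 50ms 2>&1) || tc_error_hint "$err"
```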

On Windows, you need to use Podman with the Hyper-V backend instead of Windows Subsystem for Linux (WSL2). Make sure that Hyper-V is enabled on your machine and select Hyper-V as the virtualization provider during installation. You need local admin permissions.

The Linux VM used by Podman Desktop is based on Fedora CoreOS. See available images and repo for details. Currently image v5.3 is used by default, but you can enforce a specific version as follows:

podman machine init --image docker://quay.io/podman/machine-os:5.5

To enable the sch_netem kernel module, get a shell inside the VM:

podman machine ssh

This command will return an error if the required module is not installed yet:

sudo modprobe sch_netem

Install the missing kernel module:

sudo rpm-ostree install kernel-modules-extra

Remove the default config blocking auto-load for this kernel module (this is a dangerous module, if you think about it):

sudo rm /etc/modprobe.d/sch_netem-blacklist.conf

Enable loading kernel module on startup:

sudo sh -c 'echo sch_netem >/etc/modules-load.d/sch_netem.conf'

Exit from the VM, then restart the machine:

podman machine stop && podman machine start

The sch_netem kernel module should be loaded now in the VM:

podman machine ssh lsmod | grep sch_netem

If you work directly on a Linux host, you need to run similar commands based on your Linux distribution to enable the sch_netem kernel module. It’s better to avoid auto-load and just activate the module with modprobe sch_netem whenever it’s needed.

Conclusion

“Shift left” is a software development approach that encourages testing and detecting performance or security problems in the earlier phases of the development life cycle. Using containers in the developer's inner loop, with tools like Podman Desktop, helps you test complex architectures and simulate use cases that you might run into later in a production environment.