Streaming applications process data such as video, audio, and text as a continuous flow of messages. Working with streams adds a new dimension to application programming. The difference between handling events and data streaming is like going from drinking water one glass at a time to taking it in from a garden hose. This article shows how to get a stream up and running under Amazon Kinesis, a stream management service offered by Amazon Web Services (AWS).

Data streaming is a different way of doing business. Making it all work requires both an understanding of some basic streaming patterns and an awareness of the tools and techniques you can use to get the job done. This article takes you from the initial configuration through running a producer and checking reports on its behavior.

Understanding streaming patterns

As the name implies, a stream is a continuous flow of data transmitted at high speed between a source and a target. Probably the best example of streaming data is video content from a producer such as C-SPAN. The C-SPAN studio streams the bytes of video data that make up a telecast through a streaming manager to a data center on the backend. That stream is then forwarded to users' computers or smart TVs. In this scenario, you can think of the video source as the producer and the viewers at home as consumers (Figure 1). Multiple consumers are easy to support, which is great for broadcasting.

There are also streaming scenarios in which several producers stream data into a streaming manager, which sends them on to consumers. This pattern is shown in Figure 2.

An example of this many-to-many scenario is where many browsers act as producers on the client side of a web application. Each browser sends a continuous stream of messages back to a stream manager on the server side. Each message might describe a particular mouse event from the user's browser.

Then, on the server side, there might be any number of consumers interested in a user's mouse events. The marketing department might be interested in the ads the user clicks on. The user experience team might want to know how much time a user takes moving the mouse around a web page before executing an action. All that any party needs to do to consume information from the stream of interest is establish a connection to the stream manager.
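To make the many-to-many pattern concrete, here is a minimal in-memory sketch. The StreamManager class and its subscribe and publish methods are hypothetical names invented for this illustration; they are not part of Kinesis or any AWS SDK, and a real stream manager would also buffer, persist, and distribute messages across machines.

```javascript
// Hypothetical in-memory stand-in for a stream manager (illustration only).
class StreamManager {
  constructor() {
    this.consumers = [];
  }

  // Any number of consumers can attach to the same stream.
  subscribe(handler) {
    this.consumers.push(handler);
  }

  // Every message from any producer is delivered to every consumer.
  publish(message) {
    for (const handler of this.consumers) {
      handler(message);
    }
  }
}

const manager = new StreamManager();

// Consumer 1: marketing only cares about clicks on ads.
const adClicks = [];
manager.subscribe((msg) => {
  if (msg.type === 'click' && msg.target === 'ad') adClicks.push(msg);
});

// Consumer 2: the user experience team counts mouse moves per browser.
const movesByBrowser = new Map();
manager.subscribe((msg) => {
  if (msg.type === 'mousemove') {
    movesByBrowser.set(msg.browserId, (movesByBrowser.get(msg.browserId) || 0) + 1);
  }
});

// Two producers (browsers) send mouse events into the same stream.
manager.publish({ browserId: 'browser-a', type: 'mousemove', x: 10, y: 20 });
manager.publish({ browserId: 'browser-b', type: 'mousemove', x: 5, y: 7 });
manager.publish({ browserId: 'browser-a', type: 'mousemove', x: 11, y: 22 });
manager.publish({ browserId: 'browser-a', type: 'click', target: 'ad' });

console.log(adClicks.length);                 // prints 1
console.log(movesByBrowser.get('browser-a')); // prints 2
```

Each consumer sees the whole stream and filters for what it cares about, which is why adding another interested party does not require any change on the producer side.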

The important thing to understand about streaming is that it's very different from a request/response interaction that handles one message at a time, which is typical when web surfing or using an HTTP API such as REST. In contrast, streams involve working with an enormous number of messages flowing continuously in one direction.

A number of services support streaming. Google Cloud has its Dataflow service. The streaming component of Red Hat AMQ is based on Apache Kafka and integrates with Red Hat OpenShift. There are other services too. In this article, we're going to look at the Amazon Kinesis service.

Note: To learn how to implement message streams using OpenShift and Kafka, try this activity in the no-cost Developer Sandbox for Red Hat OpenShift: Connecting to your Managed Kafka instance from the Developer Sandbox for Red Hat OpenShift.

Amazon Kinesis Data Streams

Amazon Kinesis is made up of a number of subservices such as Amazon Kinesis Data Streams, Amazon Kinesis Data Firehose, and Amazon Kinesis Video Streams. In this article, we will use Kinesis Data Streams, which is a general streaming manager.

Working with a Kinesis data stream is a three-step process, discussed in the sections that follow:

- Create the stream.

- Attach a user or user group to the stream. The user or group must have AWS permissions to use the stream.

- Submit stream data as that user (group) to the stream on the backend.

There is actually a fourth step: In order for stream data to be useful, it needs to be processed by a consumer. However, creating and using a consumer is beyond the scope of this article. In this article, we'll just get data into Kinesis Data Streams.

Set up the stream on Amazon Kinesis

There are a few ways to set up a Kinesis stream:

- Create the stream directly using the AWS command line.

- Run an AWS CloudFormation script.

- Use the AWS dashboard.

In this article, we'll use the dashboard.
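For comparison, a stream can also be created programmatically. The following is a hedged sketch using the AWS SDK for JavaScript v3, which is another route alongside the three listed above. It assumes the @aws-sdk/client-kinesis package is installed and that the calling credentials are allowed to create streams. The helper names buildCreateStreamParams and createStream, the us-east-1 region, and the shard count of 1 are assumptions made for illustration.

```javascript
// Stream names may contain letters, digits, underscores, periods, and hyphens (1-128 chars).
const STREAM_NAME_PATTERN = /^[a-zA-Z0-9_.-]{1,128}$/;

// Hypothetical helper: builds the request parameters for a provisioned stream.
function buildCreateStreamParams(streamName, shardCount = 1) {
  if (!STREAM_NAME_PATTERN.test(streamName)) {
    throw new Error(`Invalid stream name: ${streamName}`);
  }
  if (!Number.isInteger(shardCount) || shardCount < 1) {
    throw new Error('shardCount must be a positive integer');
  }
  return { StreamName: streamName, ShardCount: shardCount };
}

// Hypothetical helper: sends the request to AWS.
// The SDK is loaded lazily so the parameter builder can be used without it.
async function createStream(streamName, region = 'us-east-1') {
  const { KinesisClient, CreateStreamCommand } = require('@aws-sdk/client-kinesis');
  const client = new KinesisClient({ region });
  return client.send(new CreateStreamCommand(buildCreateStreamParams(streamName)));
}

// Only the parameters are built here; nothing is sent to AWS.
console.log(buildCreateStreamParams('general_stream'));
```

Calling createStream('general_stream') would issue the actual request. Validating the name locally first gives a clearer error than waiting for the service to reject it.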

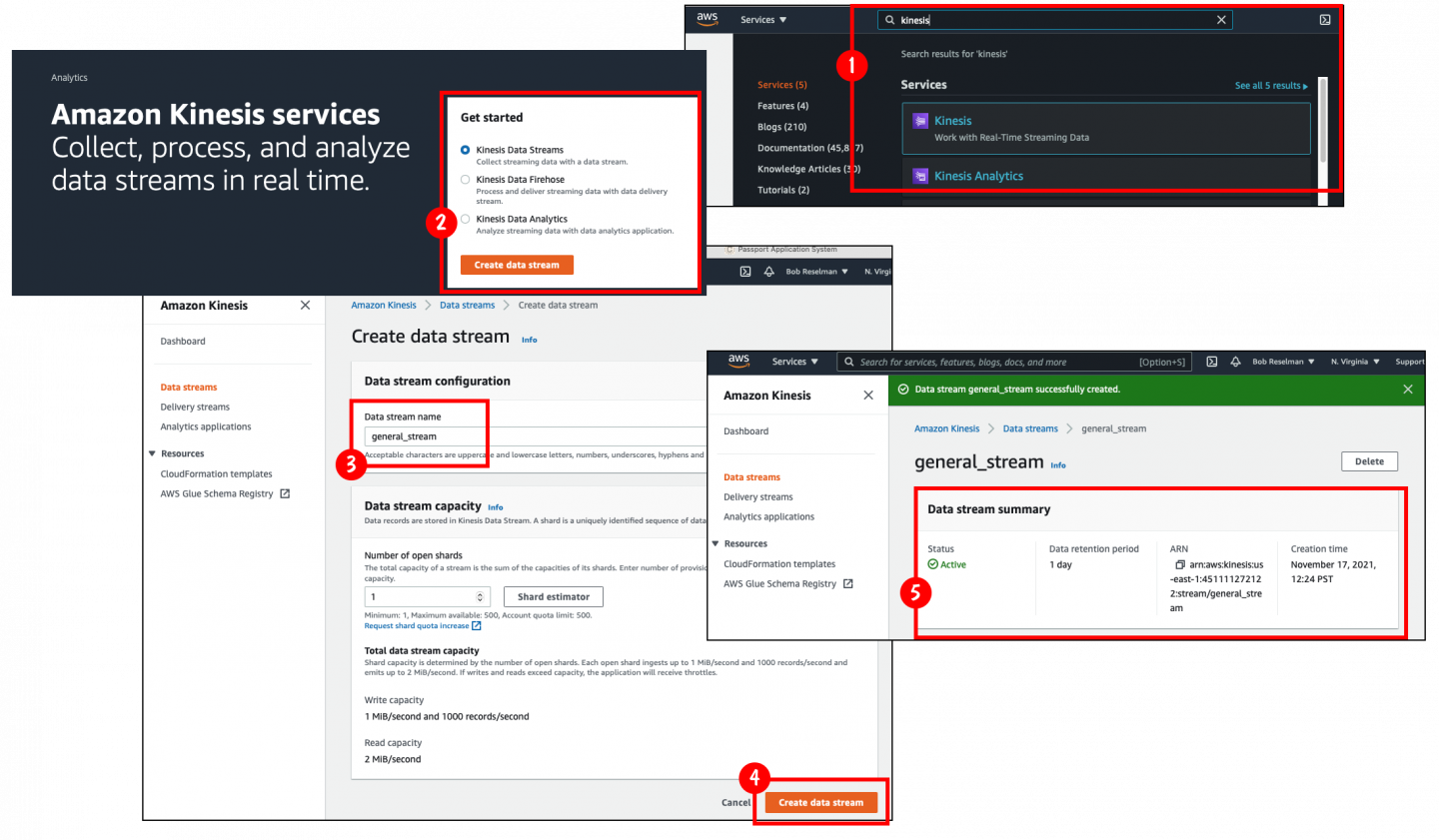

Figure 3 illustrates the process for creating the Kinesis stream.

The steps are as follows:

- Search the AWS Services main page for the term "Kinesis" and then select the Kinesis service.

- Select the Kinesis Data Streams option in the dialog that appears.

- Enter the name of the new data stream in the Data stream name box. In this case, we'll name the stream general_stream.

- Click the Create data stream button at the bottom of the Create data stream page.

- The newly created stream is displayed on the stream's page, in this case, Amazon Kinesis→Data streams→general_stream.

Create a user for the stream

After the stream is created, you'll need to create a user in AWS that has write access to the stream. There are two ways to grant permission. You can create an AWS group that has write access permission to the stream and then add an existing or new user to the group. Alternatively, you can create a new user and give that user the required permission to write to the stream.

For this article, we'll create a new user specifically dedicated to writing to the stream. Please be advised that we're doing this for demonstration purposes only. At the enterprise level, granting permissions to AWS resources is a very formal process that can vary from company to company. Some companies create user groups with specific permissions and assign users to that group. Other companies assign permissions on a user-by-user basis. In this case, we'll take the single-user approach.

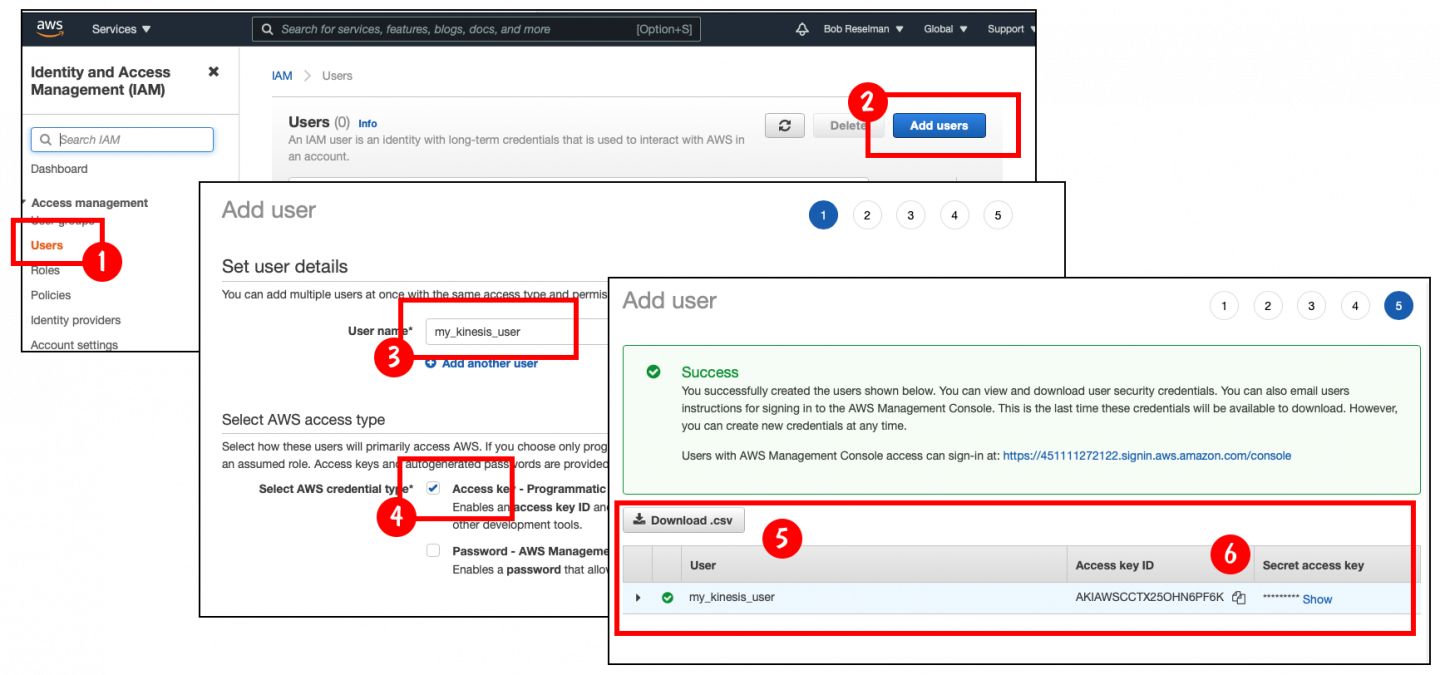

Figure 4 illustrates the process.

The steps are as follows:

- Select the Identity and Access Management (IAM) service from the AWS Services page. In the IAM page, select Users. The Users page will appear.

- Click the button labeled Add users on the upper right of the page. The Add user page appears.

- Enter the name of the user. In this case, we'll enter the name my_kinesis_user.

- Check the Access key checkbox. An access key ID and secret access key are generated for this user. These two credentials are very important. You'll use them to allow write access to the stream from outside of AWS.

- Once you fill out the first page in the create user process, you'll be presented with pages for setting up permissions, tags, etc. You can just click through these pages without making any entries. We'll set up permissions later on.

- Finally, you have created the user. The Success page displays the access key ID and the secret access key. Also, there's a button labeled Download .csv. Click this button to download the .csv file that contains the access key ID and the secret access key information to your local machine. You'll need that information when creating programs that write to a Kinesis stream as the user you've just created.

Define access to the Amazon Kinesis stream

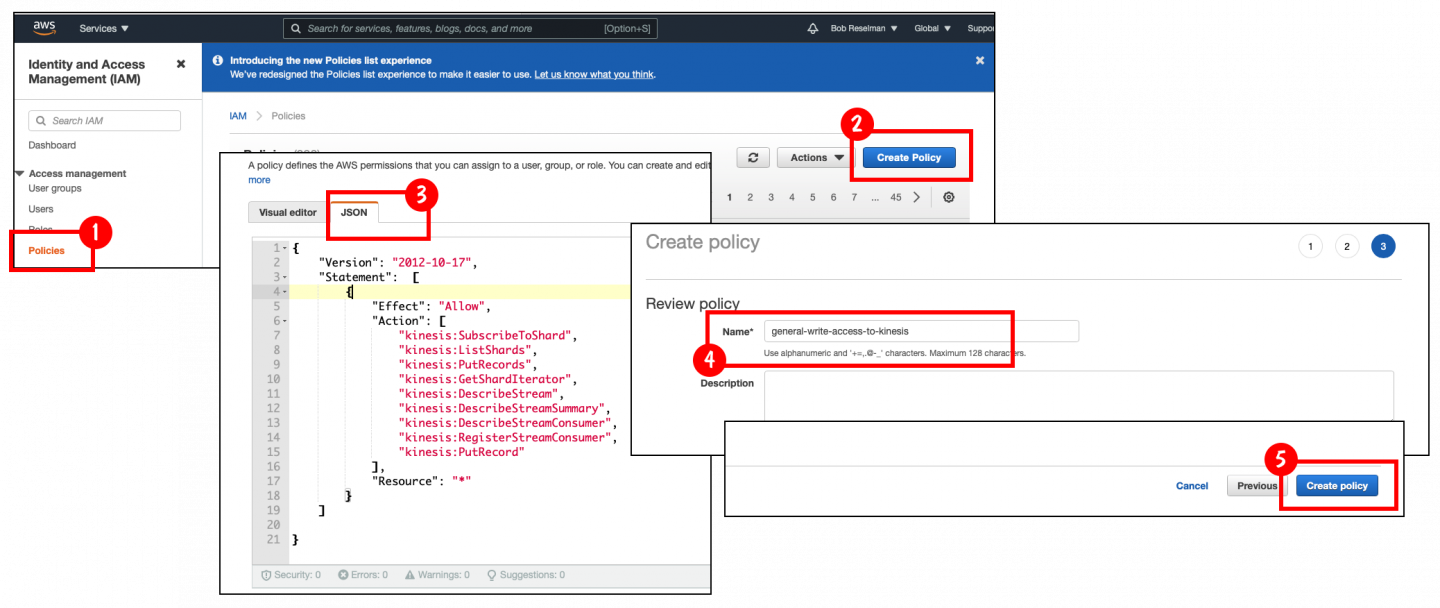

Now that you've created the special user, you need to create a policy granting write permission to the stream. After the policy is created, you'll assign it to the user that was created in the previous section.

There are a lot of permissions to choose from. However, if you know exactly the permissions you want to assign to the policy, you can define them in JSON format and apply them directly. This is the approach we'll take. Our policy is defined in the following JSON, which describes all the permissions a user needs to have write access to any Kinesis stream:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "kinesis:SubscribeToShard",
            "kinesis:ListShards",
            "kinesis:PutRecords",
            "kinesis:GetShardIterator",
            "kinesis:DescribeStream",
            "kinesis:DescribeStreamSummary",
            "kinesis:DescribeStreamConsumer",
            "kinesis:RegisterStreamConsumer",
            "kinesis:PutRecord"
          ],
          "Resource": "*"
        }
      ]
    }

Figure 5 illustrates how to add the permissions.

The steps are as follows:

- In the Identity and Access Management (IAM) page, click Policies.

- Click the Create Policy button, shown on the right side of the screen. This displays the permissions dialog.

- Select the JSON tab in the permissions dialog. Substitute the JSON shown earlier for the placeholder text that appeared initially. Click through the next set of pages until you get to the Review policy page.

- In the Review policy page, enter a name for the policy in the text box. In this case, we use the name general-write-access-to-kinesis.

- Click the Create policy button.
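The policy shown earlier sets "Resource" to "*", so it applies to every Kinesis stream in the account. As a hedged sketch of a narrower alternative, the following builds a policy document scoped to a single stream's Amazon Resource Name (ARN). The function name buildWritePolicy, the us-east-1 region, and the account ID 123456789012 are placeholders invented for illustration, and the trimmed action list is an assumption about what a write-only producer typically needs rather than a list taken from this article.

```javascript
// Hypothetical helper: builds an IAM policy document limited to one stream.
function buildWritePolicy({ region, accountId, streamName }) {
  if (!/^\d{12}$/.test(accountId)) {
    throw new Error('accountId must be a 12-digit string');
  }
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        // Assumed minimal set for a producer: write records and inspect the stream.
        Action: [
          'kinesis:PutRecord',
          'kinesis:PutRecords',
          'kinesis:DescribeStreamSummary',
          'kinesis:ListShards',
        ],
        // Kinesis stream ARN format: arn:aws:kinesis:<region>:<account-id>:stream/<name>
        Resource: `arn:aws:kinesis:${region}:${accountId}:stream/${streamName}`,
      },
    ],
  };
}

const policy = buildWritePolicy({
  region: 'us-east-1',          // placeholder region
  accountId: '123456789012',    // placeholder account ID
  streamName: 'general_stream',
});

// The printed JSON can be pasted into the JSON tab of the policy editor.
console.log(JSON.stringify(policy, null, 2));
```

Scoping the resource keeps a leaked access key from being usable against other streams, at the cost of needing one policy per stream or a wildcard in the stream name.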

Now that we've created the policy, we need to apply it to the previously created user named my_kinesis_user. That task is the subject of the next section.

Enable access to the Amazon Kinesis stream

At this point we have a special user named my_kinesis_user and a policy named general-write-access-to-kinesis. Now we need to attach the policy to the user. Figure 6 illustrates the process.

The steps are as follows:

- In the Identity and Access Management (IAM) page, click Users on the left side to return to the Users page. A list of users will appear. Click on the user named my_kinesis_user. A summary page for that user will appear.

- Under the Permissions tab, click the Add permissions button. The Add permissions page will appear.

- Select the button labeled Attach existing policies directly.

- In the Filter policies text box, enter the term general. As you type the letters, the custom policy general-write-access-to-kinesis appears. This is the policy you created previously.

- Select the checkbox associated with the policy.

- Click the Next: Review button on the right side.

- Review the policy assignment and then click the Add permissions button.

- The summary page for the user shows its Amazon Resource Name (ARN).

- On the user's Permissions tab, the general-write-access-to-kinesis policy is shown applying to the user.

At this point, you can create an application that uses the AWS SDK to write to a Kinesis stream as the user my_kinesis_user. The program will need the access key ID and secret access key you created earlier.

Stream data to Amazon Kinesis using the AWS SDK

The Node.js application we'll use to demonstrate the use of the AWS SDK is stored as a GitHub project named kinesis-streamer. The application is intended to show you how to write to a Kinesis stream from outside of AWS. You can find the source code in my GitHub repository.

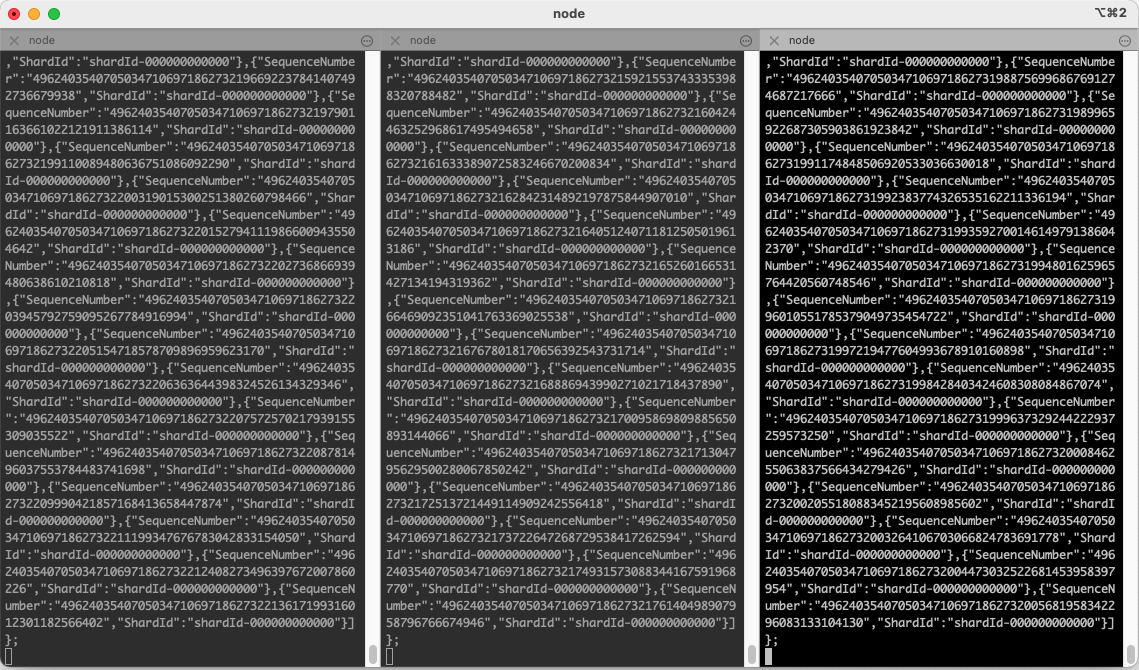

Figure 7 shows a screenshot of three instances of kinesis-streamer submitting data to a single Kinesis stream. This is a real-world example of the "many producer to single stream" pattern shown in Figure 2.

Each instance of kinesis-streamer creates a number of cron jobs that fire every second. Each cron job sends a number of messages to a particular Kinesis stream. By default, the application creates ten cron jobs, each of which submits ten messages to the Kinesis stream.
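The scheduling idea can be sketched as follows. This is not the kinesis-streamer source: it swaps in plain setInterval timers for cron jobs, and the names buildBatch and startJobs, along with the message fields, are hypothetical. The send callback stands in for whatever function actually writes to the stream.

```javascript
// Hypothetical helper: builds the messages one job emits on a single tick.
function buildBatch(jobId, messagesPerJob, now = new Date()) {
  return Array.from({ length: messagesPerJob }, (_, sequence) => ({
    jobId,
    sequence,
    createdAt: now.toISOString(),
  }));
}

// Hypothetical helper: starts `jobCount` timers that each fire once per second.
// Returns a function that stops all of them.
function startJobs(jobCount, messagesPerJob, send) {
  const timers = [];
  for (let jobId = 0; jobId < jobCount; jobId++) {
    timers.push(setInterval(() => send(buildBatch(jobId, messagesPerJob)), 1000));
  }
  return () => timers.forEach((timer) => clearInterval(timer));
}

// With the defaults described above: 10 jobs x 10 messages each, every second.
const messagesPerSecond = 10 * 10;
console.log(messagesPerSecond); // prints 100
```

Calling startJobs(10, 10, send) would begin emitting batches, and invoking the returned function stops them. Nothing is started automatically in this sketch, so it exits immediately when run.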

The application binds to an Amazon Kinesis stream through environment variables. The AWS access key ID and secret access key identify the user the application writes as, and another environment variable names the target Kinesis stream. You can also set environment variables to override the default number of cron jobs and messages to create. You can read the details of how to get the application up and running at its GitHub repository. The following settings illustrate how to configure the environment variables:

    AWS_ACCESS_KEY_ID="a^ACcEsS_KEY_tokEN!"
    AWS_SECRET_ACCESS_KEY="a^SecRET_accESS_k3y_TOken"
    AWS_KINESIS_STREAM_NAME=my-stream-kinesis
    CRON_JOBS_TO_GENERATE=50
    MESSAGES_PER_CRON_JOB=20

Note: Be advised that the values assigned to the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY shown in the preceding snippet are only fictitious placeholder values.
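A program might read and validate these settings along the following lines. This is a minimal sketch, not the kinesis-streamer implementation: the function name loadConfig and the shape of the returned object are assumptions, while the variable names and the defaults of ten come from the description above. The two credential variables are only checked for presence, because the AWS SDK reads them from the environment on its own.

```javascript
// Hypothetical helper: reads streamer settings from an environment-like object.
function loadConfig(env = process.env) {
  const required = ['AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY', 'AWS_KINESIS_STREAM_NAME'];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }

  // Parses an optional positive integer, falling back to a default when unset.
  const toPositiveInt = (value, fallback) => {
    if (value === undefined || value === '') return fallback;
    const parsed = Number.parseInt(value, 10);
    if (!Number.isInteger(parsed) || parsed < 1) {
      throw new Error(`Expected a positive integer, got "${value}"`);
    }
    return parsed;
  };

  return {
    streamName: env.AWS_KINESIS_STREAM_NAME,
    cronJobs: toPositiveInt(env.CRON_JOBS_TO_GENERATE, 10),
    messagesPerJob: toPositiveInt(env.MESSAGES_PER_CRON_JOB, 10),
  };
}

// Example with placeholder values; MESSAGES_PER_CRON_JOB is left unset on purpose.
const config = loadConfig({
  AWS_ACCESS_KEY_ID: 'placeholder-id',
  AWS_SECRET_ACCESS_KEY: 'placeholder-secret',
  AWS_KINESIS_STREAM_NAME: 'my-stream-kinesis',
  CRON_JOBS_TO_GENERATE: '50',
});
console.log(config);
```

Failing fast on a missing variable is usually friendlier than letting the first write to the stream fail with a credentials error.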

The kinesis-streamer project uses the AWS SDK for JavaScript to create a JavaScript client running under Node.js that writes to a Kinesis stream. There are many other ways to send data to Kinesis from both outside and inside AWS. You can use a technology such as AWS's Kinesis Data Generator (KDG) to send messages externally to an internal Kinesis stream. Or you can use an AWS Lambda function to send messages internally to Kinesis from within AWS. Of course, you can always create your own program using one of the many programming languages that are supported by the AWS SDK.
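As a rough idea of what writing your own producer involves, here is a hedged sketch using the AWS SDK for JavaScript v3. It is not taken from kinesis-streamer. It assumes the @aws-sdk/client-kinesis package is installed and that credentials are available in the environment. The helper names toRecords, chunk, and putMessages, the id field used as the partition key, and the us-east-1 region are all assumptions made for illustration.

```javascript
// A single PutRecords call accepts at most 500 records.
const MAX_RECORDS_PER_REQUEST = 500;

// Hypothetical helper: converts plain objects into Kinesis record entries.
function toRecords(messages, partitionKeyOf) {
  const encoder = new TextEncoder();
  return messages.map((message) => ({
    Data: encoder.encode(JSON.stringify(message)),   // payload as bytes
    PartitionKey: String(partitionKeyOf(message)),   // determines the target shard
  }));
}

// Hypothetical helper: splits an array into pieces of at most `size` items.
function chunk(items, size) {
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

// Hypothetical helper: writes all messages and returns how many records failed.
// The SDK is loaded lazily so the helpers above work without it.
async function putMessages(streamName, messages, region = 'us-east-1') {
  const { KinesisClient, PutRecordsCommand } = require('@aws-sdk/client-kinesis');
  const client = new KinesisClient({ region });
  let failed = 0;
  for (const batch of chunk(toRecords(messages, (m) => m.id), MAX_RECORDS_PER_REQUEST)) {
    const result = await client.send(
      new PutRecordsCommand({ StreamName: streamName, Records: batch })
    );
    failed += result.FailedRecordCount || 0;
  }
  return failed;
}

// Only the batching is exercised here; nothing is sent to AWS.
const sizes = chunk(new Array(1200).fill(0), MAX_RECORDS_PER_REQUEST).map((c) => c.length);
console.log(sizes); // prints [ 500, 500, 200 ]
```

A PutRecords call can succeed overall while individual records fail, so a production producer would inspect the per-record results and retry the failed entries rather than only counting them.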

The kinesis-streamer project demonstrates such possibilities. So you might want to examine the source code in the demonstration project to learn the details of working with Kinesis streams using the AWS SDK. The code is well documented to make it easier for developers to absorb the details.

Get performance data using Kinesis stream reports

Message activity in a Kinesis stream can be monitored using a number of graphical reports that AWS provides out of the box. From a developer's point of view, at the very least, you'll want to know whether the messages your application emits are actually making it into the Kinesis stream manager. These reports will tell you that information at a glance.

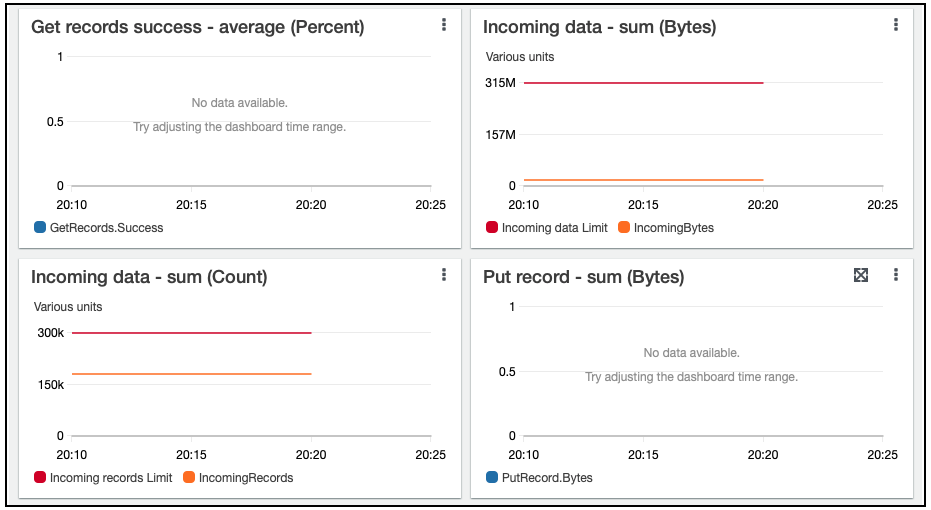

For example, Figure 8 shows reports for incoming data. These reports indicate that the stream received records. As simple as it sounds, this is very useful information.

There are a number of other reports available for monitoring stream activity, such as latency, errors, and throughput reached, to name a few.

Kinesis reporting allows developers to see the results of their application's streaming activity. This information is useful not only for smoke testing on the fly, but also for more extensive troubleshooting when trying to optimize the overall performance of a Kinesis stream.
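The same kind of numbers can be retrieved from code. The sketch below assumes that the incoming-record counts are available as Amazon CloudWatch metrics in the AWS/Kinesis namespace, that the @aws-sdk/client-cloudwatch package is installed, and that the caller is allowed to read CloudWatch metrics (the policy created earlier in this article does not grant that). The helper names buildIncomingRecordsQuery and fetchIncomingRecords, the 15-minute window, and the us-east-1 region are assumptions made for illustration.

```javascript
// Hypothetical helper: builds a query for per-minute incoming record counts.
function buildIncomingRecordsQuery(streamName, minutes = 15, now = new Date()) {
  return {
    Namespace: 'AWS/Kinesis',
    MetricName: 'IncomingRecords',
    Dimensions: [{ Name: 'StreamName', Value: streamName }],
    StartTime: new Date(now.getTime() - minutes * 60 * 1000),
    EndTime: now,
    Period: 60,            // one datapoint per minute
    Statistics: ['Sum'],   // total records received in each period
  };
}

// Hypothetical helper: fetches the datapoints, oldest first.
// The SDK is loaded lazily so the query builder works without it.
async function fetchIncomingRecords(streamName, region = 'us-east-1') {
  const { CloudWatchClient, GetMetricStatisticsCommand } = require('@aws-sdk/client-cloudwatch');
  const client = new CloudWatchClient({ region });
  const result = await client.send(
    new GetMetricStatisticsCommand(buildIncomingRecordsQuery(streamName))
  );
  return (result.Datapoints || []).sort((a, b) => a.Timestamp - b.Timestamp);
}

// Only the query is built here; nothing is sent to AWS.
const query = buildIncomingRecordsQuery('general_stream', 15, new Date('2024-10-25T12:00:00Z'));
console.log(query.StartTime.toISOString()); // prints 2024-10-25T11:45:00.000Z
```

A sum of zero across the window right after running a producer is a quick sign that messages are not reaching the stream, which makes this a handy check in an automated smoke test.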

Next steps

The information in this article shows you how to create a Kinesis data stream and how to send data to that stream. However, as mentioned earlier, simply sending data to a stream isn't enough. In order for the stream to be useful, there need to be consumers on the other end of the stream that are processing the incoming messages.

Writing consumers is a topic worthy of an article all to itself. Each consumer satisfies a specific use case. Not only do developers need to create programming logic for their producers and consumers, but they also need to decide which programming language best suits the need at hand. For example, Node.js JavaScript applications are operating system agnostic and a bit simpler to program in terms of language syntax, yet consumers written in Go run a lot faster. There's a lot to consider.

As mentioned at the beginning of this article, data streams add a new dimension to application development. Applications that use data streams make online services such as Netflix and Hulu possible. Yet technology does not stand still. New use cases are sure to emerge, and new streaming technologies will appear to meet challenges that older technologies can't address. As a result, developers who master the intricacies of working with data streams today are well placed for the years to come.


Last updated: October 25, 2024