AI/ML Workloads

AI/ML workloads are applications based on machine learning and deep learning that use structured and unstructured data as their fuel.

AI Workloads - the engines behind AI algorithms

Workloads, in general, are task-oriented processes and applications that consume a finite amount of computing resources and time to complete a task and produce an outcome. AI workloads are no different, but the term describes applications and processes built on the core AI techniques of machine learning and deep learning, where data and information drive the process. AI workloads fall into two main categories: training and inference.

Model training: Large amounts of data are fed into machine learning models, letting them learn to identify patterns and make predictions.

Model inference: Brand-new data are fed into trained machine learning models, which draw conclusions without being given examples of the desired result.
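The difference between the two categories can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pattern: the `train` and `infer` functions, the toy dataset, and the learning-rate and epoch values are all assumptions made for this example. Training repeatedly adjusts the model's parameters against labeled examples, while inference applies the finished parameters to an input the model has never seen.

```python
# Hypothetical toy example: a one-variable linear model y = w * x + b.

def train(examples, epochs=2000, lr=0.05):
    """Training: learn w and b from labeled (x, y) pairs via gradient descent."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference: apply the trained parameters to new, unlabeled input."""
    w, b = model
    return w * x + b


# Labeled training data (assumed for illustration) following y = 2x + 1.
labeled = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]

model = train(labeled)           # compute-heavy, done ahead of time
prediction = infer(model, 10.0)  # cheap per request, no label provided
print(f"w={model[0]:.3f}, b={model[1]:.3f}, prediction={prediction:.3f}")
```

Even at this scale the cost profile is visible: training loops over the whole dataset thousands of times, whereas inference is a single evaluation per request. Real AI workloads amplify that gap, which is why the two categories are usually sized and scheduled differently.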

AI workloads are characterized by their complexity and the type of data they process. They often deal with unstructured data such as images, free-form text, and other untagged data, in addition to structured data. This is where machine learning and deep learning algorithms play a critical role, because they can analyze unstructured data.

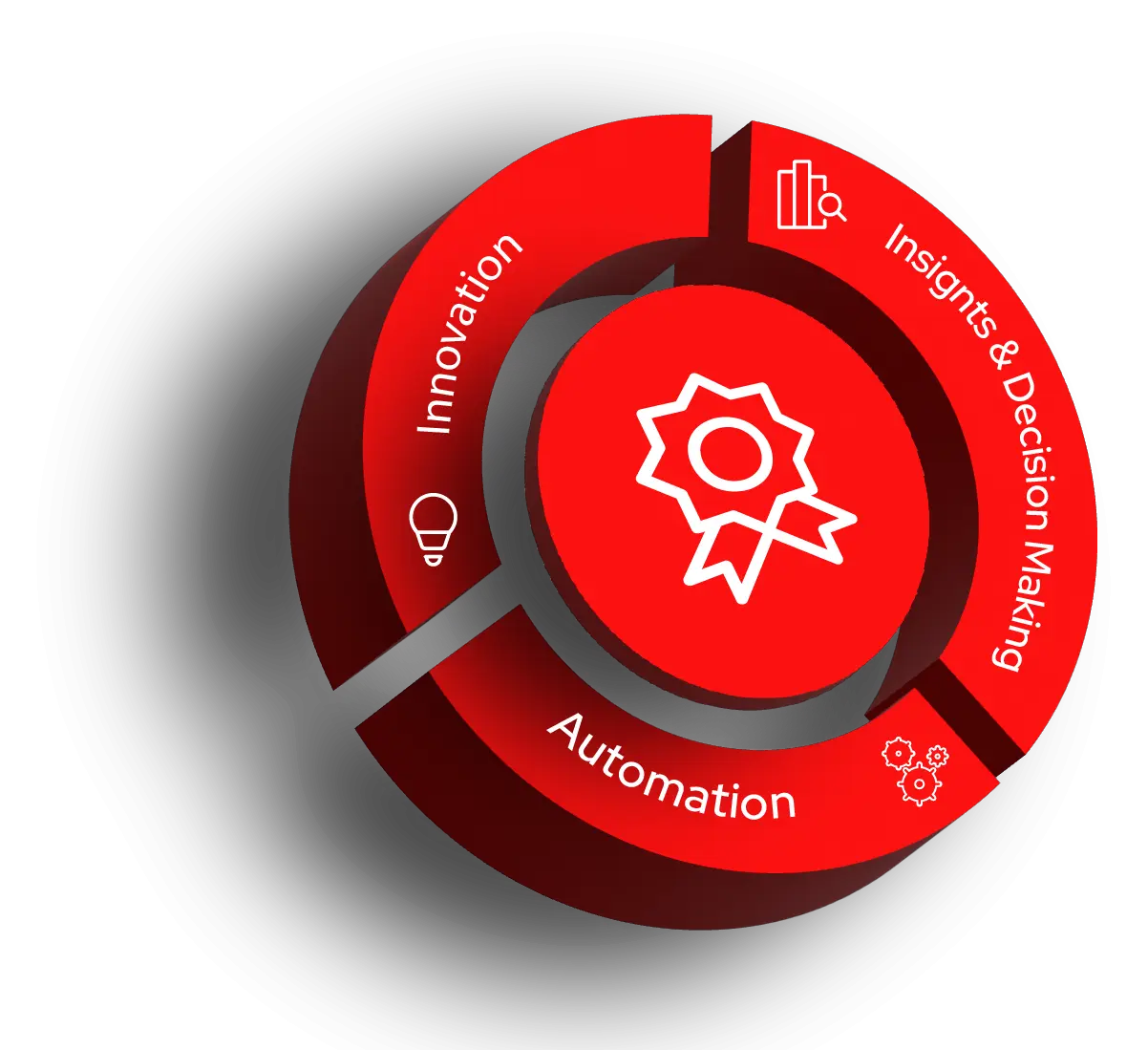

Benefits of AI Workloads

AI workloads drive innovation in products and services, produce insight into AI model outcomes, and allow the AI development process to operate efficiently. As computational tasks powered by machine learning models trained on vast datasets, they offer key advantages:

AUTOMATION: AI workloads help offload repetitive, time-consuming tasks to AI, opening up human talent for other needs.

INSIGHT & DECISION-MAKING: AI workloads enable the analysis of vast amounts of data, uncovering potential outcomes and hidden patterns that can lead to better choices in healthcare, business, and scientific research.

INNOVATION: AI workloads propel AI services and products into areas once thought to be out of reach, like prescriptive and personalized medicine, autonomous vehicles, and environmental modeling.

AI workload requirements

AI workloads place heavy demands on data, infrastructure, security, performance, and scalability, for example when training large language models (LLMs) across multiple clusters that use thousands of GPUs and transfer large datasets. The underlying AI platforms, including operating systems, application and container orchestration systems, and IT automation systems, need to be optimized to meet these requirements and enable high-performance AI application deployment.

Developers and architects have demanding requirements for AI workloads. To maximize application deployment across the hybrid cloud, the critical platforms need to be optimized, including operating systems, IT and container orchestration systems, and IT automation systems:

- Operating systems need to offer a secure and consistent administration experience with support for AI/ML libraries, hardware architectures, and accelerators.

- IT and container orchestration systems need to offer simplified deployment, scaling, and management of AI/ML training and serving.

- IT automation systems need to build, provision, and manage applications and infrastructure across platforms.
