First off, I'm not referring to Knative, the Kubernetes-based platform for modern serverless workloads, but Kubernetes native. In this article, I will explain what Kubernetes native is, what it means, and why it should matter to developers and enterprises. Before we delve into Kubernetes native, I will recap what cloud-native application development is and how that leads us to Kubernetes-native application development.

Cloud native: A recap

We’ve all heard of the cloud-native approach for developing applications and services, and even more so since the Cloud Native Computing Foundation (CNCF) was founded in 2015—but where did the term come from? The term cloud native was first used by Bill Wilder in his book, Cloud Architecture Patterns (O'Reilly Media, 2012). According to Wilder, a cloud-native application is any application that was architected to take full advantage of cloud platforms. These applications:

- Use cloud platform services.

- Scale horizontally.

- Scale automatically, using proactive and reactive actions.

- Handle node and transient failures without degrading.

- Feature non-blocking asynchronous communication in a loosely coupled architecture.

Related to cloud-native technologies is The Twelve-Factor App, a set of patterns (or methodology) for building applications that are delivered as a service. Cloud architecture patterns are often described as being required for developing cloud-native applications. The twelve-factor methodology overlaps with Wilder's cloud architecture patterns, but it also goes into details of application development that are not specific to cloud-native development. Those details apply equally to application development in general and to how an application integrates with the infrastructure.

Wilder wrote his book during a period of growing interest in developing and deploying cloud-native applications. Developers had a variety of public and private platforms to choose from, including Amazon Web Services (AWS), Google Cloud, Microsoft Azure, and many smaller cloud providers. Hybrid-cloud deployments were also becoming more prevalent around then, which presented challenges of their own.

Note: Issues related to the hybrid cloud are not new; Reservoir - When One Cloud is Not Enough (IEEE, 2011) covers several of them.

Orchestrating the hybrid cloud

As an architectural approach, the hybrid cloud supports deploying the same application to a mixture of private and public clouds, often across different cloud providers. A hybrid cloud offers the flexibility of not being reliant on a single cloud provider or region. If one cloud provider has network issues, you can switch deployments and traffic to another provider to minimize the impact on your customers.

On the downside, each cloud provider has its own preferred mechanisms, such as its command-line interface (CLI), discovery protocols, and event-driven protocols, that developers must use to deploy their applications. This complication made it impractical to automate deploying the same application to multiple cloud providers.

To solve the problem, many orchestration frameworks began to appear. However, it wasn’t long before Kubernetes became the de-facto standard for orchestration. These days it would be unusual to find a cloud provider that doesn’t offer the ability to deploy applications into Kubernetes. Google, Amazon, and Microsoft all offer Kubernetes as an orchestration layer.

Get ready for Kubernetes native

For developers deploying applications to a hybrid cloud, it makes sense to shift focus from cloud native to Kubernetes native. There’s a great article on a Kubernetes-native future that covers what a Kubernetes-native stack means. The key takeaway is that Kubernetes-native is a specialization of cloud-native, and not divorced from what cloud native defines. Whereas a cloud-native application is intended for the cloud, a Kubernetes-native application is designed and built for Kubernetes.

Before discussing the benefits of targeting Kubernetes-native applications and services, we need to understand how Kubernetes native differs from cloud native. Above, we covered the benefit of Kubernetes native for infrastructure management: deploying applications to Kubernetes with identical tools across cloud providers. Next, we revisit the question of what makes an application (or a microservice, function, or deployment) cloud-native. Can an application truly be cloud-native? Can an application seamlessly work on any cloud provider?

In the early days of cloud-native development, orchestration differences prevented applications from being truly cloud-native. Kubernetes resolved the orchestration problem, but Kubernetes does not cover cloud provider services or an event backbone. To answer whether any application can be cloud-native, we need to look at the different types of applications that can be developed.

Stateful versus isolated applications

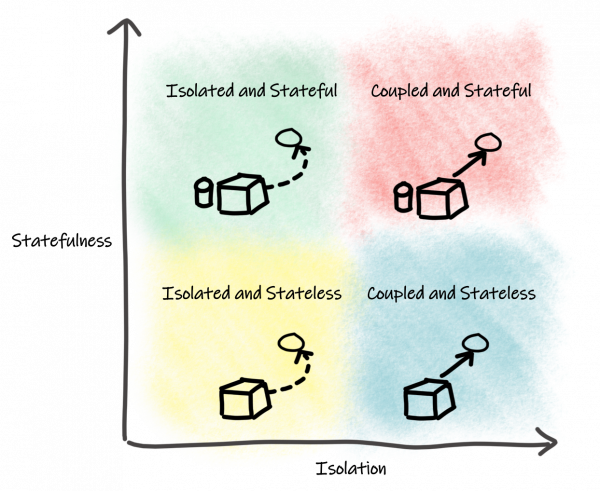

We often categorize applications as either stateless or stateful. Just as critical is the split between isolated and coupled. Whether an application is cloud-native depends on both of these factors. When I say coupled, I’m not referring to the application's internal code being tightly or loosely coupled. I’m referring to whether an application relies on external applications and services at all. An application that doesn't interact with anything outside itself is isolated. An application that uses one or more external services is coupled.

Consider Figure 1, which shows statefulness and isolation on different axes.

Note that isolated and stateful applications require state to operate, but the state is retained within the application itself, not stored externally. Not many applications fall into this category, as they wouldn't handle restarts well. By contrast, coupled and stateless applications hold no state of their own but must couple with external services to respond to requests. This type of application is more common, as it includes applications that interact with databases, message brokers, and streaming platforms such as Apache Kafka.
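The two axes can be expressed as a small classification routine. The following is only a sketch of the idea, not part of any framework: the Quadrant and AppClassifier names, and the choice to model coupling as a count of external services, are assumptions made for illustration.

```java
// Illustrative sketch only: Quadrant and AppClassifier are hypothetical names.
enum Quadrant {
    ISOLATED_STATELESS,
    ISOLATED_STATEFUL,
    COUPLED_STATELESS,
    COUPLED_STATEFUL
}

final class AppClassifier {
    private AppClassifier() {}

    /**
     * Places an application on the two axes described in the text.
     *
     * @param holdsInternalState true if the application keeps state inside itself
     * @param externalServices   number of external applications or services it relies on
     */
    static Quadrant classify(boolean holdsInternalState, int externalServices) {
        if (externalServices < 0) {
            throw new IllegalArgumentException("externalServices must not be negative");
        }
        // "Coupled" here means relying on anything outside the application,
        // not how tightly its internal code is structured.
        boolean coupled = externalServices > 0;
        if (coupled) {
            return holdsInternalState ? Quadrant.COUPLED_STATEFUL : Quadrant.COUPLED_STATELESS;
        }
        return holdsInternalState ? Quadrant.ISOLATED_STATEFUL : Quadrant.ISOLATED_STATELESS;
    }
}
```

For instance, a REST service that keeps nothing in memory between requests but reads from a database and a message broker would be classified with classify(false, 2) and land in the coupled and stateless quadrant.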

Any application that is isolated, whether stateless or stateful, can adhere to cloud-native principles. Such an application can be packaged for different providers in a hybrid cloud. Applications that require coupling to external services also could be considered cloud-native, but can such applications be deployed to a hybrid cloud? The answer to that question is everyone's favorite: It depends.

A tale of two services

If the external service is a database provisioned on Kubernetes for the application to use, then the answer is yes: The application is independent of the cloud provider and can be deployed to a hybrid cloud. This type of architecture places a larger burden on operations, however, because it requires a database, or other data storage, to be installed and managed in a Kubernetes environment. That's far from ideal.

Another option is to use a cloud provider for database services. Doing that would reduce the operational burden, but we would be tied to a specific cloud services provider. In this case, we would not be able to deploy the application to a hybrid cloud across multiple providers.
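The trade-off between these two options can be written down as a simple rule. This is a minimal sketch under stated assumptions: ServiceHosting and HybridCloudCheck are hypothetical names, and the check looks only at where each external service is hosted, deliberately ignoring differences between provider APIs.

```java
// Illustrative sketch only: all names here are hypothetical.
enum ServiceHosting {
    PROVISIONED_ON_KUBERNETES, // for example, a database the team runs inside the cluster
    CLOUD_PROVIDER_MANAGED     // for example, a database service offered by a single provider
}

final class HybridCloudCheck {
    private HybridCloudCheck() {}

    /**
     * Returns true when no dependency ties the application to one provider.
     * An isolated application passes no dependencies and is always deployable.
     */
    static boolean deployableAcrossProviders(ServiceHosting... dependencies) {
        for (ServiceHosting hosting : dependencies) {
            if (hosting == ServiceHosting.CLOUD_PROVIDER_MANAGED) {
                return false;
            }
        }
        return true;
    }

    /** Returns true when operations must install and manage at least one service on Kubernetes. */
    static boolean addsOperationalBurden(ServiceHosting... dependencies) {
        for (ServiceHosting hosting : dependencies) {
            if (hosting == ServiceHosting.PROVISIONED_ON_KUBERNETES) {
                return true;
            }
        }
        return false;
    }
}
```

Under this rule, the same choice that keeps an application deployable across providers is the one that raises the operational burden, which is the tension described above.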

Kubernetes provisioning for hybrid-cloud services

Now let's consider a real-world example. If an application is written to use Amazon S3 for data storage, the development team interacts with it using custom Amazon S3 APIs. If we decide to deploy the application to Google Cloud, we can't expect it to work with Google Cloud Storage without being modified for Google Cloud Storage APIs. Both versions might be cloud-native, but they’re not cloud-provider agnostic. This factor poses an issue for fully supporting the hybrid-cloud model.

The Kubernetes community is investigating a solution for object bucket storage, but that fix doesn't solve the more general issue of API portability. Even if Kubernetes has a way to generically provision a bucket-type storage mount, like Amazon S3, developers will still need to use Amazon S3 APIs to interact with it. Developers still require cloud provider-specific APIs in their applications.

Enterprises can protect their business code by writing generic wrappers around different services. Most enterprises don't want to expend valuable resources on developing wrappers for services, however. More importantly, service wrappers still require re-packaging applications with different dependencies for each cloud provider environment.

The missing piece, from a developer's perspective, is an application API that is sufficiently abstracted for use across cloud providers for the same type of service.
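To make the idea concrete, here is one way such an abstraction might look for object storage. It is only a sketch: ObjectStore, InMemoryObjectStore, and ReportArchive are hypothetical names, not an existing Kubernetes, Quarkus, or cloud provider API. The in-memory implementation stands in for provider-backed ones so that the example runs without any cloud SDK.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical provider-neutral API for bucket-style storage.
interface ObjectStore {
    void put(String key, byte[] data);

    Optional<byte[]> get(String key);

    boolean delete(String key);
}

// In-memory stand-in; a real deployment would plug in a provider-backed implementation.
final class InMemoryObjectStore implements ObjectStore {
    private final Map<String, byte[]> objects = new ConcurrentHashMap<>();

    @Override
    public void put(String key, byte[] data) {
        // Copy the array so later changes by the caller do not alter stored data.
        objects.put(key, data.clone());
    }

    @Override
    public Optional<byte[]> get(String key) {
        byte[] data = objects.get(key);
        return data == null ? Optional.empty() : Optional.of(data.clone());
    }

    @Override
    public boolean delete(String key) {
        return objects.remove(key) != null;
    }
}

// Business code depends only on ObjectStore, never on a provider SDK.
final class ReportArchive {
    private final ObjectStore store;

    ReportArchive(ObjectStore store) {
        this.store = store;
    }

    void save(String reportId, String text) {
        store.put("reports/" + reportId, text.getBytes(StandardCharsets.UTF_8));
    }

    Optional<String> load(String reportId) {
        return store.get("reports/" + reportId)
                .map(bytes -> new String(bytes, StandardCharsets.UTF_8));
    }
}
```

Calling new ReportArchive(new InMemoryObjectStore()) is enough to exercise the business code. The design choice that matters is that ReportArchive compiles against the interface alone, so switching providers would mean supplying a different ObjectStore implementation rather than rewriting or re-packaging the business logic.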

Kubernetes: The new application server?

Although Kubernetes is evolving to enable environment provisioning for services that developers need, we still need a way to fill the gap between the application's business logic and interaction with those services. That's one reason I previously argued that application servers and frameworks still have a role to play on Kubernetes.

Adopting Kubernetes-native environments ensures true portability for the hybrid cloud. However, we also need a Kubernetes-native framework to provide the "glue" for applications to seamlessly integrate with Kubernetes and its services. Without application portability, the hybrid cloud is relegated to an environment-only benefit.

That framework is Quarkus.

Quarkus: The Kubernetes-native framework

Quarkus is a Kubernetes-native Java framework that can be used to facilitate application portability for hybrid cloud environments. As an example, Quarkus lets you generate descriptors for deploying to Kubernetes from within your application. The generated descriptors are easily adjusted with configuration values in the application.properties file. With the health extension, the generated descriptor contains the readiness and liveness probes that are crucial to Kubernetes knowing whether your application is healthy. These features simplify life for developers by creating the application deployment for Kubernetes and by integrating correctly with Kubernetes pod lifecycle handling.

Quarkus also combines build-time optimizations and reactive patterns to reduce application memory consumption. This factor has a direct impact on potential deployment density. Being able to run more pods on the same infrastructure is an important aspect of Quarkus (read more about this from Lufthansa Technik, The Asiakastieto Group, and Vodafone Greece). On a public cloud, this feature allows the use of smaller instances to run an application and, as a consequence, reduce costs.

It's still early days for Quarkus, and for our goal of fulfilling Kubernetes-native application development to the fullest extent possible. However, we've made great progress in a short amount of time, and we are committed to ensuring that Quarkus provides the best Kubernetes-native experience for all developers.

Conclusion

Because Kubernetes native is a specialization of cloud native, the two have many similarities. The main difference is cloud provider portability.

Why is that distinction important? Taking full advantage of the hybrid cloud and using multiple cloud providers requires that applications be deployable to any cloud provider. Without that ability, you’re tied to a single cloud provider and reliant on it being up 100% of the time. Enjoying the benefits of the hybrid cloud requires the developer to embrace Kubernetes-native application development, and not just for environments, but for applications as well. Kubernetes native is the solution to cloud portability concerns. Quarkus is the conduit between applications and Kubernetes that facilitates that hybrid cloud portability. Through ongoing work to offer abstractions for the various services that cloud providers offer, Quarkus will help take you there!

Last updated: March 29, 2023