We are extremely pleased to present version 1.4.0 of the Red Hat OpenShift deployment extension (OpenShift VSTS) for Microsoft Azure DevOps. This extension lets users deploy their applications to any OpenShift cluster directly from their Microsoft Azure DevOps account. In this article, we look at how to install the extension and use it in a YAML-defined pipeline with both Microsoft-hosted and self-hosted agents.

Note: The OpenShift VSTS extension can be downloaded directly from the marketplace at this link.

This article includes a demonstration that shows how easy it is to set everything up and start working with the extension. See the README file for further installation and usage information.

The benefits

The new OpenShift VSTS 1.4.0 extension has three major benefits:

- It allows users to use an oc CLI already installed on their machine when using a local agent.

- It supports and automatically downloads oc versions greater than 4.

- It changes the way the oc CLI is downloaded, so the "API rate limit exceeded" error from the GitHub REST API no longer occurs.

Installing the OpenShift VSTS extension

Before you start using the OpenShift VSTS extension, you need a running OpenShift instance. In our demo video, we use OpenShift Online, which is hosted and managed by Red Hat. You can sign up here and start using OpenShift in the cloud for free.

You also need a Microsoft Azure DevOps account. When you log in to this account, you see a list of your organizations on the left and the projects related to your organization on the right. If you do not have any projects, it is time to add one. To create a project and install the extension, follow these steps:

- Click New Project and fill in the required fields, as shown in Figure 1.

- Go to https://marketplace.visualstudio.com/items?itemName=redhat.openshift-vsts.

- Click on Get it free.

- Select your Azure DevOps organization and click Install. When this process finishes, the OpenShift VSTS extension is installed and you can start setting up your account.

Connecting to your OpenShift cluster

Now, you need to configure the OpenShift service connection, which connects Microsoft Azure DevOps to your OpenShift cluster:

- Log into your Azure DevOps project.

- Click Project Settings (the cogwheel icon) at the bottom left of the page.

- Select Service Connections.

- Click on New service connection and search for OpenShift.

- Pick the authentication method you would like to use (basic, token, or kubeconfig). The next few sections describe each option.

- Insert your own OpenShift cluster data.

Congratulations! You have connected your Azure DevOps account to your OpenShift cluster.
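In a YAML pipeline, each task refers to this service connection by the connection name you enter in the dialog, passed through the openshiftService input. The following minimal sketch assumes a connection named 'My Openshift' (the name used in this article's examples) and runs oc whoami as a quick authentication check. Because YAML pipelines are not automatically authorized for service connections, it also assumes you checked Allow all pipelines to use this connection.

```yaml
steps:
# 'My Openshift' must match the Connection Name of your OpenShift service connection
- task: oc-cmd@2
  inputs:
    openshiftService: 'My Openshift'
    # Prints the user the connection authenticates as
    cmd: 'oc whoami'
```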

Now, let's look at how to set up each authentication method.

Basic authentication

When you select Basic Authentication, use the following information to fill out the dialog:

- Connection Name: The name you will use to refer to this service connection.

- Server URL: The OpenShift cluster's URL.

- Username: The OpenShift username for this instance.

- Password: The password for the specified user.

- Accept untrusted SSL certificates: Whether to accept self-signed (untrusted) certificates.

- Allow all pipelines to use this connection: Allows YAML-defined pipelines to use our service connection (they are not automatically authorized for service connections).

The result should look similar to Figure 2.

Token authentication

When you select Token Based Authentication, use the following information to fill out the dialog:

- Connection Name: The name you will use to refer to this service connection.

- Server URL: The OpenShift cluster's URL.

- Accept untrusted SSL certificates: Whether to accept self-signed (untrusted) certificates.

- API Token: The API token used for authentication.

- Allow all pipelines to use this connection: Allows YAML-defined pipelines to use our service connection (they are not automatically authorized for service connections).

The result should look similar to Figure 3:

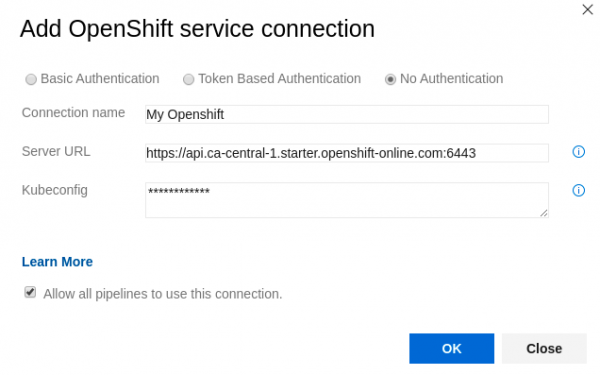

Kubeconfig

To use kubeconfig-based authentication, select No Authentication and use the following information to fill out the dialog:

- Connection Name: The name you will use to refer to this service connection.

- Server URL: The OpenShift cluster's URL.

- Kubeconfig: The contents of the kubectl configuration file.

- Allow all pipelines to use this connection: Allows YAML-defined pipelines to use our service connection (they are not automatically authorized for service connections).
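For reference, the Kubeconfig field expects a complete kubectl configuration file, such as the one oc login writes to ~/.kube/config. The following sketch shows the general shape of that file; the cluster name, server URL, namespace, user name, and token are placeholders, not real values.

```yaml
# Hypothetical kubeconfig: replace every name, URL, and the token with your own values
apiVersion: v1
kind: Config
clusters:
- name: my-cluster
  cluster:
    server: https://api.my-cluster.example.com:6443
contexts:
- name: my-context
  context:
    cluster: my-cluster
    namespace: my-project
    user: my-user
# The context used when the file is loaded
current-context: my-context
users:
- name: my-user
  user:
    token: <your-api-token>
```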

The result should look similar to Figure 4.

Exploring the extension

Once the extension can authenticate to the Red Hat OpenShift cluster, you are ready to create your own YAML pipeline, and then perform operations in OpenShift by executing oc commands directly from Azure DevOps.

Note: This extension uses oc, the OpenShift client tool, to interact with an OpenShift cluster, so basic knowledge of this CLI is required.

The extension offers three different tasks: install and set up oc, execute a single oc command, and update a ConfigMap.

Install and set up oc

This task installs a specific version of the OpenShift CLI (oc), adds it to your PATH, and creates a kubeconfig file for authenticating with the OpenShift cluster. In the following example, we first download and set up oc, and then we execute oc commands through a script:

```yaml
jobs:
- job: myjob
  displayName: MyJob
  pool:
    vmImage: 'windows-latest'
  steps:
  # Install oc so that it can be used within a 'script' or bash 'task'
  - task: oc-setup@2
    inputs:
      openshiftService: 'My Openshift'
      version: '3.11.154'
  # A script task making use of 'oc'
  # $(System.DefaultWorkingDirectory) is expanded by Azure Pipelines on any agent OS;
  # the ${SYSTEM_DEFAULTWORKINGDIRECTORY} form is not expanded by cmd.exe on Windows agents
  - script: |
      oc new-project my-project
      oc apply -f $(System.DefaultWorkingDirectory)/openshift/config.yaml -n my-project
```

The installed oc binary will match your agent's OS.
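To illustrate this, the following sketch (an assumption-based example, not taken from the extension's documentation) runs the same setup step on a Linux and a Windows Microsoft-hosted image by using a job matrix. On each agent, oc-setup@2 installs the oc binary for that OS, and oc version prints the client version. The job name and the imageName variable are hypothetical.

```yaml
jobs:
- job: setup_oc
  # One job run per entry; imageName selects the hosted image
  strategy:
    matrix:
      linux:
        imageName: 'ubuntu-latest'
      windows:
        imageName: 'windows-latest'
  pool:
    vmImage: $(imageName)
  steps:
  # Downloads the oc build matching this agent's OS
  - task: oc-setup@2
    inputs:
      openshiftService: 'My Openshift'
      version: '3.11.154'
  - script: oc version
```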

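Note: You can use variables defined in the agent. For example, to reference a file in the artifact _my_sources, you can use ${SYSTEM_DEFAULTWORKINGDIRECTORY}/_my_sources/my-openshift-config.yaml in a Bash script, or the macro form $(System.DefaultWorkingDirectory)/_my_sources/my-openshift-config.yaml, which Azure Pipelines expands on any agent OS.

You can use this task as follows in the GUI: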

- In Tasks, click Install and setup oc. This action opens the dialog shown in Figure 5:

- In the OpenShift service connection drop-down box, select the service connection you just created, which will be used to execute this command.

- In the Version of oc to use text box, add the version of oc you want to use (e.g., 3.11.154) or a direct URL to an oc release bundle. (If left blank, the latest stable oc version is used.)
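The same rule should apply when you define the step in YAML instead of the GUI. The following sketch assumes the version input is optional in YAML, as it is in the GUI, and omits it so that the latest stable oc version is installed; the project name is a placeholder.

```yaml
steps:
# No 'version' input: the latest stable oc is downloaded and added to PATH
- task: oc-setup@2
  inputs:
    openshiftService: 'My Openshift'
# Later script steps can call oc directly
- script: oc get pods -n my-project
```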

Execute single oc commands

This task allows you to execute a single oc command directly from Azure DevOps:

```yaml
jobs:
- job: myjob
  displayName: MyJob
  pool:
    name: 'Default'
  steps:
  - task: oc-cmd@2
    inputs:
      openshiftService: 'My Openshift'
      version: '4.1'
      cmd: 'oc new-app https://github.com/lstocchi/nodejs-ex -l name=demoapp'
      uselocalOc: true
```

Note: Neither the oc-cmd task nor the config-map task needs to run after the setup task. If the extension does not find a valid oc CLI when executing an oc command, it first downloads oc and then executes the command.
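A minimal sketch of that behavior: the job below has no oc-setup@2 step, and, per the note above, oc-cmd@2 obtains oc on its own when it cannot find a valid one. The job name and project are placeholders chosen for this example.

```yaml
jobs:
- job: nosetup
  displayName: NoSetup
  pool:
    vmImage: 'ubuntu-latest'
  steps:
  # No oc-setup@2 step: oc-cmd@2 downloads oc itself if no valid oc CLI is found
  - task: oc-cmd@2
    inputs:
      openshiftService: 'My Openshift'
      cmd: 'oc get pods -n my-project'
```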

To use this task in the GUI:

- In Tasks, select Execute oc command to pull up the dialog shown in Figure 6:

- In the OpenShift service connection drop-down box, select the service connection you just created, which will be used to execute this command.

- In the Version of oc to use text box, add the version of oc you want to use (e.g., 3.11.154) or a direct URL to an oc release bundle. (If left blank, the latest stable oc version is used.)

- In the Command to run text box, enter the actual oc command to run.

Note: You can directly type the oc sub-command by omitting oc from the input (e.g., rollout latest dc/my-app -n production).

- Check or uncheck the Ignore non success return value check box, which specifies whether a non-success return value from the oc command is ignored (e.g., with the box checked, if a task running oc create or oc delete fails because the resource has already been created or deleted, the pipeline continues its execution).

- Check or uncheck the use local oc executable check box, which specifies whether to force the extension to use the oc CLI found on the agent's machine, if present. If no version is specified, the extension uses the local oc CLI whatever its version. If a version is specified, the extension checks whether the installed oc CLI matches the requested version; if it does not, the correct oc CLI is downloaded.
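Putting these options together, the following sketch pins a version and uses the sub-command form from the note above. Following the behavior described for use local oc executable, the agent's oc is used only if it matches version 3.11.154; otherwise, that version is downloaded. The deployment config my-app and the production namespace come from the note's example and are placeholders.

```yaml
steps:
- task: oc-cmd@2
  inputs:
    openshiftService: 'My Openshift'
    # Local oc is used only if it is 3.11.154; otherwise 3.11.154 is downloaded
    version: '3.11.154'
    uselocalOc: true
    # Leading 'oc' omitted: the input is treated as an oc sub-command
    cmd: 'rollout latest dc/my-app -n production'
```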

Update a ConfigMap

This task allows you to update the properties of a given ConfigMap using a grid:

```yaml
jobs:
- job: myjob
  displayName: MyJob
  pool:
    name: 'Default'
  # Tasks must be listed under 'steps'
  steps:
  - task: config-map@2
    inputs:
      openshiftService: 'my_openshift_connection'
      configMapName: 'my-config'
      namespace: 'my-project'
      properties: '-my-key1 my-value1 -my-key2 my-value2'
```

It includes six configuration options, which you can fill out in the GUI:

- In Tasks, select Update ConfigMap to access the dialog shown in Figure 7:

- In the OpenShift service connection drop-down box, select the service connection you just created, which will be used to execute this command.

- In the Version of oc text box, add the version of oc you want to use (e.g., 3.11.154) or a direct URL to an oc release bundle. (If left blank, the latest stable oc version is used.)

- In the Name of the ConfigMap text box, enter the name of the ConfigMap to update. (This field is required.)

- In the Namespace of ConfigMap text box, enter the namespace in which to find the ConfigMap. The current namespace is used if none is specified.

- In the ConfigMap Properties text box, enter the properties to set or update. Only the properties that need to be created or updated must be listed. Values that contain spaces must be surrounded by double quotes (").

- Check or uncheck the use local oc executable check box, which specifies whether to force the extension to use the oc CLI found on the agent's machine, if present. If no version is specified, the extension uses the local oc CLI whatever its version. If a version is specified, the extension checks whether the installed oc CLI matches the requested version; if it does not, the correct oc CLI is downloaded.
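As an illustration of the quoting rule, the following step (reusing the placeholder connection and ConfigMap names from the example above) sets one value that contains spaces and omits the namespace input, so the current namespace is used. How the extension parses the properties string internally is not shown here; this sketch only follows the rule stated above.

```yaml
steps:
- task: config-map@2
  inputs:
    openshiftService: 'my_openshift_connection'
    configMapName: 'my-config'
    # No 'namespace' input: the current namespace is used
    # The value containing spaces is wrapped in double quotes
    properties: '-my-key1 "my value with spaces" -my-key2 my-value2'
```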

Work with OpenShift

It is finally time to create your YAML pipeline by using the OpenShift VSTS extension. In our example, the nodejs-ex application is already running on our OpenShift cluster, and our goal is to create a pipeline that pushes a new version of the application whenever our GitHub master branch is updated. Here is our pipeline:

```yaml
jobs:
- job: demo
  displayName: MyDemo
  pool:
    name: 'Default'
  steps:
  - task: oc-cmd@2
    inputs:
      openshiftService: 'My Openshift'
      cmd: 'oc start-build nodejs-ex --follow'
      uselocalOc: true
  - task: oc-cmd@2
    inputs:
      openshiftService: 'My Openshift'
      cmd: 'oc status'
      uselocalOc: true
```
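The pipeline above does not declare a trigger, and in that case Azure Pipelines runs YAML pipelines for pushes to any branch. To limit runs to updates of the master branch, as the goal above describes, you can add a trigger section. The following sketch assumes the repository's main branch is literally named master.

```yaml
# Run only for pushes to master
trigger:
- master

jobs:
- job: demo
  displayName: MyDemo
  pool:
    name: 'Default'
  steps:
  - task: oc-cmd@2
    inputs:
      openshiftService: 'My Openshift'
      cmd: 'oc start-build nodejs-ex --follow'
      uselocalOc: true
  - task: oc-cmd@2
    inputs:
      openshiftService: 'My Openshift'
      cmd: 'oc status'
      uselocalOc: true
```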

Every time the pipeline is triggered, a new build starts, and the new version of our application is eventually pushed to the cluster. Because we run this pipeline on a local agent (a machine with the oc CLI already installed), we set the uselocalOc flag to true and did not specify a version. The extension therefore uses the oc CLI installed on the machine, whatever its version.

Next, the second task runs oc status, which shows an overview of the current project and reports any misconfigured components (services, deployment configs, build configurations, or active deployments).

Note: If you want to use a specific oc version, be sure to type it correctly; otherwise, the extension falls back to the latest release it can match to your input (e.g., if you type v3.5 as your version input, the extension downloads version 3.5.5, because 3.5 itself does not exist in our repository). Check the README file for more information.

Wrapping up

At this point, you should be able to set up the OpenShift VSTS extension, use it in your own YAML-defined pipeline, and deploy your application to your OpenShift cluster from Azure DevOps. OpenShift VSTS is an open source project, and we welcome contributions and suggestions. Please reach out to us if you have any requests for further deployments, ideas to improve the extension, or questions, or if you encounter any issues. Contacting us is simple: open a new issue.

Last updated: July 1, 2020