Today I want to talk about Ansible Service Broker and Ansible Playbook Bundle. These components are relatively new in the Red Hat OpenShift ecosystem, but they are now fully supported features available in the Service Catalog component of OpenShift 3.9.

Before getting deep into the technology, I want to give you some basic information (quoted below from the product documentation) about all the components and their features:

- Ansible Service Broker is an implementation of the Open Service Broker API that manages applications defined in Ansible Playbook Bundles.

- Ansible Playbook Bundles (APB) are a method of defining applications via a collection of Ansible Playbooks built into a container with an Ansible runtime with the playbooks corresponding to a type of request specified in the Open Service Broker API specification.

- Playbooks are Ansible’s configuration, deployment, and orchestration language. They can describe a policy you want your remote systems to enforce, or a set of steps in a general IT process.

So the ASB (Ansible Service Broker) is the intermediary between the APB (Ansible Playbook Bundle) and a third-party user who wants to consume, on OpenShift, the service offered through the Ansible playbooks.

By linking these two components, the OpenShift Service Catalog can give OpenShift users access to these deployment and configuration units through the OpenShift web console and its API. This opens up an entire world of possibilities from an OpenShift perspective:

- Easily define, distribute, and provision microservice(s), such as RocketChat and PostgreSQL, via Ansible Playbooks packaged in Ansible Playbook Bundles.

- Easily bind microservice(s) provisioned through Ansible Playbook Bundles, for example, as shown in this video: Using the Service Catalog to Bind a PostgreSQL APB to a Python Web App.

Getting deeper into the technology, we'll follow these steps to create a MariaDB APB that sets up MariaDB on an external remote host:

- Get started with the technology by creating your first APB.

- Customize your first APB and let it configure an external remote host (through SSH).

- Build and push your first APB.

- Understand the ASB and APB operations under the hood.

- Troubleshoot an APB.

Are you ready? Let's get started!

Please note: You need a fully configured and functional OpenShift 3.9 cluster before continuing. At the moment, Minishift and the CDK do not come with the Service Catalog and the Ansible Service Broker enabled; please check the project documentation.

Getting Started with an APB

Starting with OpenShift 3.9, you don't need any additional configuration or deployment to get the Ansible Service Broker and the Service Catalog working: the OpenShift installer sets them up during the first installation or upgrade.

So, the first thing you need to do is let the Ansible Service Broker search the OpenShift default registry for container images. To achieve this, edit the configmap used by the Ansible Service Broker and extend its whitelist:

$ oc edit configmap broker-config -n openshift-ansible-service-broker

In the registry section of the configmap, add an entry for the local OpenShift registry, with a whitelist rule similar to the one already set up for the Docker Hub registry:

```yaml
- type: local_openshift
  name: localregistry
  namespaces:
    - openshift
  white_list:
    - ".*-apb$"
```

With this rule in place, the Ansible Service Broker will search the openshift namespace of the local OpenShift registry for container images whose names end with the string -apb.

After that, roll out a new version of the Ansible Service Broker:

$ oc rollout latest dc/asb -n openshift-ansible-service-broker
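To double-check which image names the ".*-apb$" whitelist rule accepts, you can evaluate the same regular expression locally with Ansible's match test. This is only a sketch, assuming Ansible 2.5 or later: the playbook file name and the entries in image_names are hypothetical, and the play mimics the broker's name filter rather than querying the registry.

```yaml
# whitelist-check.yml: hypothetical helper, run locally with
#   ansible-playbook whitelist-check.yml
- name: Check image names against the broker whitelist regex
  hosts: localhost
  gather_facts: false
  connection: local
  vars:
    # Same pattern as the white_list entry of the local_openshift registry
    whitelist_regex: ".*-apb$"
    # Hypothetical image names found in the openshift namespace
    image_names:
      - mariadb-deployment-apb
      - mariadb-deployment-apb-old
      - postgresql
  tasks:
    - name: Report whether each image would be listed by the broker
      debug:
        msg: "{{ item }}: {{ 'listed' if item is match(whitelist_regex) else 'ignored' }}"
      loop: "{{ image_names }}"
```

With these example names, only mariadb-deployment-apb ends in -apb, so it is the only one reported as listed; a suffix after -apb (as in -apb-old) is enough to keep an image out of the catalog.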

You're now ready to initialize your first APB. First, you need the apb binary. On Red Hat Enterprise Linux (with the OpenShift repositories enabled), you just need to run this command:

$ yum install -y apb

Then you can initialize your first APB by running this command:

$ apb init mariadb-deployment-apb

The command will set up an initial directory tree (shown below), which is ready to be customized depending on your needs:

```
$ ls -la mariadb-deployment-apb/
total 12
drwxrwxr-x. 4 ocpadmin ocpadmin   85 May 18 14:08 .
drwx------. 9 ocpadmin ocpadmin  255 May 18 14:33 ..
-rw-rw-r--. 1 ocpadmin ocpadmin 1082 May 18 13:57 apb.yml
-rw-rw-r--. 1 ocpadmin ocpadmin 1731 May 18 14:34 Dockerfile
-rw-rw-r--. 1 ocpadmin ocpadmin  769 May 16 12:16 Makefile
drwxrwxr-x. 2 ocpadmin ocpadmin   50 May 18 14:33 playbooks
drwxrwxr-x. 6 ocpadmin ocpadmin  145 May 16 12:30 roles
```

As you can see, the command creates a description file called apb.yml, which holds the metadata and the parameters that need to be requested from users of the playbook bundle. The metadata is used to display the item in the Service Catalog, while the parameters are used to prompt users of the bundle for the necessary configuration details. We'll look at it and customize it in the next section.

It also creates a Dockerfile for building the final container image and a Makefile that defines how to build the image and push it to the OpenShift internal registry.

Finally, you'll find the two key directories containing (guess what?) Ansible "playbooks" and "roles." These directories contain pre-built playbooks for provisioning and deprovisioning and a skeleton for a custom role you may want to build.

Let's take a look at the playbook it generated:

$ cat playbooks/provision.yml

```yaml
- name: mariadb-deployment-apb playbook to provision the application
  hosts: localhost
  gather_facts: false
  connection: local
  roles:
  - role: ansible.kubernetes-modules
    install_python_requirements: no
  - role: ansibleplaybookbundle.asb-modules
  - role: provision-mariadb-deployment-apb
```

As you can see, the playbook is really simple. It runs against localhost (connection: local), and it executes two predefined roles: ansible.kubernetes-modules and ansibleplaybookbundle.asb-modules. These two roles provide the basic building blocks that let your container communicate with the OpenShift platform it runs on and its underlying Kubernetes layer.

Finally, the playbook executes a custom role, provision-mariadb-deployment-apb. This role is basically empty; you should fill it with your code!

Customizing Your First APB for Connecting to a Remote Host

As mentioned in the introduction, your APB won't follow the usual pattern of deploying resources inside OpenShift. Instead, you'll make it connect to an external host to install and configure MariaDB.

First, you need to edit the apb.yml file to add some metadata and variables that you'll use later in the playbooks:

$ cat apb.yml

```yaml
version: 1.0
name: mariadb-deployment-apb
description: This is a sample application generated by apb init
bindable: False
async: optional
metadata:
  displayName: MariaDB on vm (APB)
plans:
  - name: default
    description: This default plan deploys mariadb-deployment-apb
    free: True
    metadata: {}
    parameters:
    - name: dbname
      title: Database name to create on just created mariadb
      type: string
      default: myappdb
      required: true
    - name: rootpassword
      title: Database root password to set
      type: string
      default: P4ssw0rd!
      required: true
      display_type: password
    - name: target_host
      title: Target Host for provisioning
      type: string
      default: 172.16.0.7
      required: true
    - name: remoteuser
      title: SSH Remote User
      type: string
      default: user
      required: true
    - name: sshprivkey
      title: SSH Private key for connecting to the remote machine
      type: string
      required: true
      display_type: textarea
```

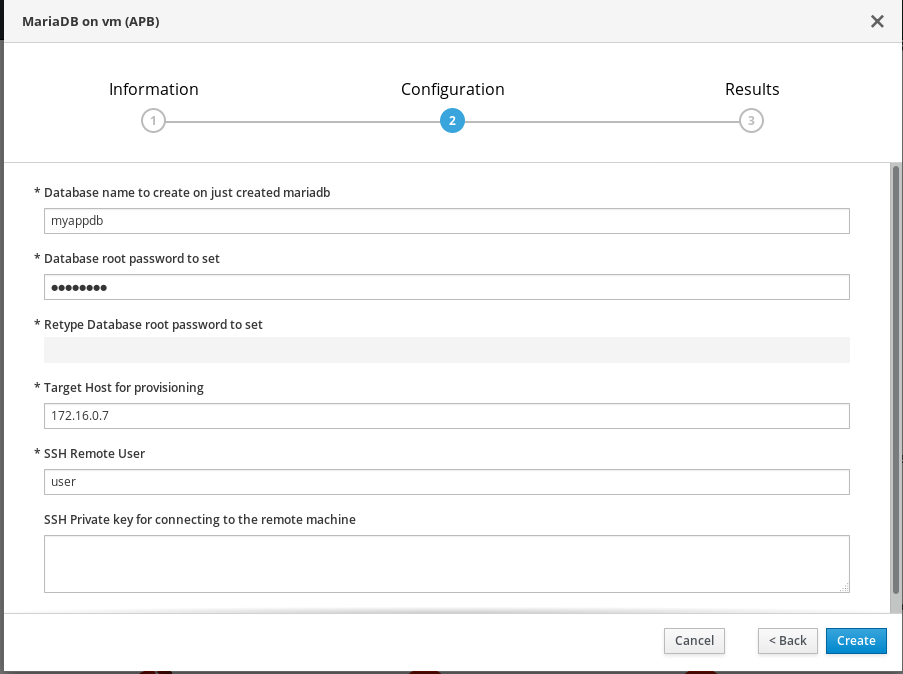

As you can see, you set up five parameters that the user will be asked to provide through the OpenShift interface:

- The database name

- The root password for the database

- The target host to connect to

- The remote user to use during SSH connection

- The SSH private key to use during SSH connection

As you might expect, you don't need to write a role for installing and configuring MariaDB from scratch: there are tons of roles available on Ansible Galaxy!

For this example, I chose two roles from Ansible Galaxy: one for MariaDB and one for configuring the firewall, which you'll need too. In the playbooks below they are referred to as ansible-role-mariadb and ansible-role-firewall.

Download them and place them under the roles directory.
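One way to download the roles into the right place is an ansible-galaxy requirements file. The role sources depend on which Galaxy roles you pick, so the src values below are placeholders you must replace; the name fields set the directory names that the playbooks expect. The file name and location are assumptions, not part of the apb init skeleton.

```yaml
# requirements.yml (hypothetical file in the APB project root).
# Install the roles into the roles/ directory with:
#   ansible-galaxy install -r requirements.yml -p roles/
# The src values are placeholders: replace them with the Galaxy names
# (or git URLs) of the roles you chose.
- src: "REPLACE_WITH_FIREWALL_ROLE_SOURCE"
  name: ansible-role-firewall   # directory name used in provision.yml
- src: "REPLACE_WITH_MARIADB_ROLE_SOURCE"
  name: ansible-role-mariadb    # directory name used in provision.yml
```

Keeping the file outside roles/ means the Dockerfile's COPY roles step only picks up the installed roles.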

After that, you need to edit the provision.yml playbook. To connect to an external host, you need to add that host to the inventory dynamically:

$ cat playbooks/provision.yml

```yaml
- name: mariadb-deployment-apb playbook to provision the application
  hosts: localhost
  gather_facts: false
  connection: local
  roles:
  - role: ansible.kubernetes-modules
    install_python_requirements: no
  - role: ansibleplaybookbundle.asb-modules
  - role: provision-mariadb-deployment-apb
    playbook_debug: false
  tasks:
  - name: Adding the remote host to the inventory
    add_host:
      name: "{{ target_host }}"
      groups: target_group
    changed_when: false
  - name: Adding ssh private key
    shell: "mkdir -p /opt/apb/.ssh && chmod 700 /opt/apb/.ssh && echo -e \"{{ sshprivkey }}\" > /opt/apb/.ssh/id_rsa && chmod 600 /opt/apb/.ssh/id_rsa"

- name: Provision mariadb
  hosts: target_group
  remote_user: "{{ remoteuser }}"
  become: true
  vars:
    firewall_allowed_tcp_ports:
    - "22"
    - "3306"
    mariadb_bind_address: "0.0.0.0"
    mariadb_root_password: "{{ rootpassword }}"
    mariadb_databases:
    - name: "{{ dbname }}"
  roles:
  - role: ansible-role-firewall
  - role: ansible-role-mariadb
```

Inspecting the playbook, you'll see that you first add the host (taken from the target_host variable) to the inventory, and then set up the SSH private key for connecting to it. For the key, I use a single shell command instead of splitting the step into separate Ansible module tasks.

Then, in the second play, you connect to the remote host and configure the firewall and MariaDB through the two Galaxy roles.
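If you prefer module tasks over the single shell command, the key step can be written with Ansible's file and copy modules; this also avoids the "consider using the file module" warning that Ansible prints for the mkdir call when the APB runs. A sketch, assuming the key reaches the playbook with real line breaks (the original echo -e also expands literal \n sequences, which copy does not) and that /opt/apb/.ssh/id_rsa is the key the apb user's SSH client picks up, as in the original task:

```yaml
# Hypothetical replacement for the "Adding ssh private key" task
# in the first play of provision.yml (and deprovision.yml).
- name: Create the .ssh directory for the apb user
  file:
    path: /opt/apb/.ssh
    state: directory
    mode: "0700"

- name: Write the SSH private key supplied through the Service Catalog
  copy:
    # Assumes real newlines in sshprivkey; a final newline keeps ssh happy
    content: "{{ sshprivkey }}\n"
    dest: /opt/apb/.ssh/id_rsa
    mode: "0600"
  no_log: true   # keep the private key out of the APB pod logs
```

The no_log flag matters here because the APB pod logs are easy to read for anyone with access to the namespace.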

You'll also need to edit the deprovision.yml playbook:

$ cat playbooks/deprovision.yml

```yaml
- name: mariadb-deployment-apb playbook to deprovision the application
  hosts: localhost
  gather_facts: false
  connection: local
  roles:
  - role: ansible.kubernetes-modules
    install_python_requirements: no
  - role: ansibleplaybookbundle.asb-modules
  - role: deprovision-mariadb-deployment-apb
    playbook_debug: false
  tasks:
  - name: Adding the remote host to the inventory
    add_host:
      name: "{{ target_host }}"
      groups: target_group
    changed_when: false
  - name: Adding ssh private key
    shell: "mkdir -p /opt/apb/.ssh && chmod 700 /opt/apb/.ssh && echo -e \"{{ sshprivkey }}\" > /opt/apb/.ssh/id_rsa && chmod 600 /opt/apb/.ssh/id_rsa"

- name: Remove mariadb packages
  remote_user: "{{ remoteuser }}"
  become: yes
  hosts: target_group
  tasks:
  - name: Remove the package from the host
    package:
      name: mariadb
      state: absent
```

Deprovisioning simply removes the mariadb package and nothing else.

Finally, so that the APB can connect to your remote host without SSH prompting to add it to the list of known hosts, make a small addition to the Dockerfile to disable host_key_checking:
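If you want deprovisioning to leave the host closer to its original state, the second play could also stop the service and remove the server package. This is a hypothetical variant, assuming a RHEL/CentOS target where the MariaDB role installed mariadb-server and registered a service named mariadb; the first play stays as shown above.

```yaml
# Hypothetical, more thorough second play for deprovision.yml.
# Assumes a RHEL/CentOS host (yum) with a "mariadb" service.
- name: Remove mariadb packages
  hosts: target_group
  remote_user: "{{ remoteuser }}"
  become: yes
  tasks:
    - name: Stop and disable the MariaDB service, if present
      service:
        name: mariadb
        state: stopped
        enabled: no
      failed_when: false   # the service may not exist on this host

    - name: Remove the MariaDB server and client packages
      yum:
        name:
          - mariadb-server
          - mariadb
        state: absent
```

Data directories and the firewall rule for port 3306 are still left in place; remove them explicitly if your use case requires it.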

$ cat Dockerfile

```dockerfile
FROM ansibleplaybookbundle/apb-base

LABEL "com.redhat.apb.spec"=\
COPY playbooks /opt/apb/actions
COPY roles /opt/ansible/roles
RUN echo "host_key_checking = False" >> /opt/apb/ansible.cfg
RUN chmod -R g=u /opt/{ansible,apb}
USER apb
```
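As an alternative to changing ansible.cfg inside the image, you could pass the SSH options as host variables in the add_host task, which relaxes host key checking only for the dynamically added host. A sketch, reusing the key path from the original task; ansible_ssh_private_key_file and ansible_ssh_common_args are standard Ansible connection variables:

```yaml
# Hypothetical replacement for the "Adding the remote host to the
# inventory" task in provision.yml and deprovision.yml.
- name: Adding the remote host to the inventory
  add_host:
    name: "{{ target_host }}"
    groups: target_group
    # Key written by the "Adding ssh private key" task
    ansible_ssh_private_key_file: /opt/apb/.ssh/id_rsa
    # Skip the known_hosts prompt for this host only, without saving its key
    ansible_ssh_common_args: "-o StrictHostKeyChecking=no -o UserKnownHostsFile=/dev/null"
  changed_when: false
```

Either way, disabling host key checking trades protection against man-in-the-middle attacks for convenience, so keep it to trusted networks.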

Building and Pushing Your First APB

First, you need to prepare the APB for the push to the registry:

```
$ apb prepare
Finished writing dockerfile.
```

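This command base64-encodes the contents of apb.yml and writes them into the com.redhat.apb.spec label of the Dockerfile; the broker reads the bundle's metadata and parameters from this label.

After that, you can build the APB. Remember that you need to be root (or have the proper rights to access the Docker daemon):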

```
$ sudo apb build
Finished writing dockerfile.
Building APB using tag: [mariadb-deployment-apb]
Successfully built APB image: mariadb-deployment-apb
```

You can double-check the build by checking the list of docker images:

```
$ sudo docker images | grep mariadb
mariadb-deployment-apb   latest   b4d6a95a79b7   2 days ago   604 MB
```

And finally, you can push the APB into the registry. Before proceeding, though, you should be logged in to OpenShift as an admin user with a valid token. The system:admin user doesn't have a token by default, so create an additional user and give it the cluster-admin role.

```
$ sudo oc whoami
ocpadmin
$ sudo apb push
Didn't find OpenShift Ansible Broker route in namespace: ansible-service-broker. Trying openshift-ansible-service-broker
version: 1.0
name: mariadb-deployment-apb
description: This is a sample application generated by apb init
bindable: False
async: optional
metadata:
  displayName: MariaDB on vm (APB)
plans:
  - name: default
    description: This default plan deploys mariadb-deployment-apb
    free: True
    metadata: {}
    parameters:
    - name: dbname
      title: Database name to create on just created mariadb
      type: string
      default: myappdb
      required: true
    - name: rootpassword
      title: Database root password to set
      type: string
      default: R3dh4t1!
      required: true
      display_type: password
    - name: target_host
      title: Target Host for provisioning
      type: string
      default: 172.16.0.7
      required: true
    - name: remoteuser
      title: SSH Remote User
      type: string
      default: user
      required: true
    - name: sshprivkey
      title: SSH Private key for connecting to the remote machine
      type: string
      required: true
      display_type: textarea
Found registry IP at: 172.30.3.246:5000
Finished writing dockerfile.
Building APB using tag: [172.30.3.246:5000/openshift/mariadb-deployment-apb]
Successfully built APB image: 172.30.3.246:5000/openshift/mariadb-deployment-apb
Found image: docker-registry.default.svc:5000/openshift/mariadb-deployment-apb
Warning: Tagged image registry prefix doesn't match. Deleting anyway. Given: 172.30.3.246:5000; Found: docker-registry.default.svc:5000
Successfully deleted sha256:0bd78762bbf717333f1e017e3578bcd55a70877810fc7f859d04455e80df0a94
Pushing the image, this could take a minute...
Successfully pushed image: 172.30.3.246:5000/openshift/mariadb-deployment-apb
Contacting the ansible-service-broker at: https://asb-1338-openshift-ansible-service-broker.140.11.34.16.nip.io/ansible-service-broker/v2/bootstrap
Successfully bootstrapped Ansible Service Broker
Successfully relisted the Service Catalog
```

You did it! You successfully loaded an APB into the OpenShift registry and Ansible Service Broker.

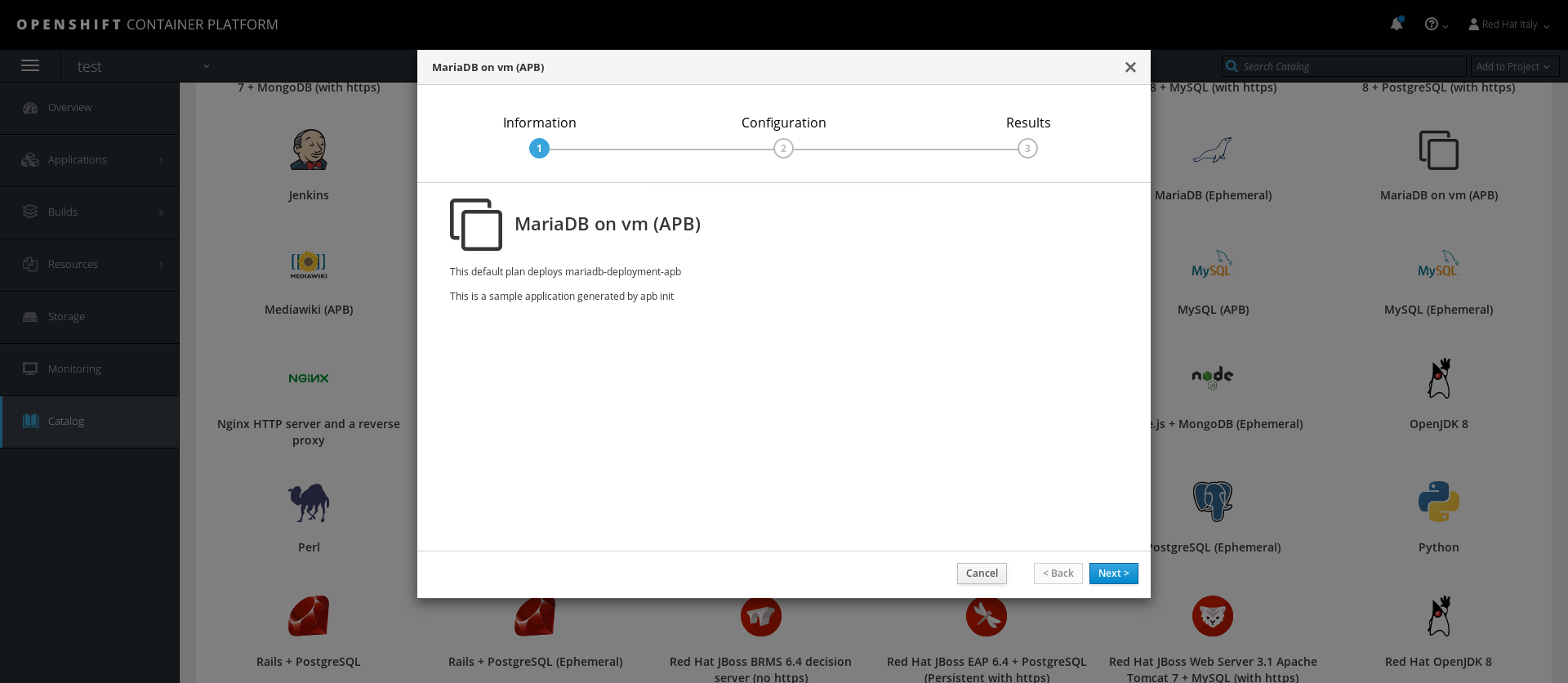

The next time you log in to OpenShift, you should find the APB in the Service Catalog.

Moving forward, in the Configuration section, you'll see the required parameters you configured earlier.

Understanding the ASB and APB Operations Under the Hood

Requesting the item from the Service Catalog, by clicking Create on the previous screen, triggers a series of actions inside the running OpenShift cluster:

```
$ oc get serviceinstance -n test
NAME                                         AGE
localregistry-mariadb-deployment-apb-bd4cb   27s
$ oc get pods --all-namespaces | grep apb
localregistry-mariadb-deployment-apb-prov-6pntf   apb-f34a346d-3b25-46a5-95c2-54d480ae6701   1/1   Running   0   28s
```

As you can see, a ServiceInstance object was created and, linked to it, the broker scheduled a new pod in a dedicated namespace (localregistry-mariadb-deployment-apb-prov-6pntf): your Ansible playbook is running in this container.

By following the pod's logs, you can monitor the playbook as it runs:

```
$ oc logs -n localregistry-mariadb-deployment-apb-prov-6pntf apb-f34a346d-3b25-46a5-95c2-54d480ae6701 -f

PLAY [mariadb-deployment-apb playbook to provision the application] ************

TASK [ansible.kubernetes-modules : Install latest openshift client] ************
skipping: [localhost]

TASK [ansibleplaybookbundle.asb-modules : debug] *******************************
skipping: [localhost]

TASK [Adding the remote host to the inventory] *********************************
ok: [localhost]

TASK [Adding ssh private key] **************************************************
[WARNING]: Consider using the file module with state=directory rather than running mkdir. If you need to use command because file is insufficient you can add warn=False to this command task or set command_warnings=False in ansible.cfg to get rid of this message.
changed: [localhost]

PLAY [Provision mariadb] *******************************************************

TASK [Gathering Facts] *********************************************************
ok: [10.1.0.11]

TASK [ansible-role-firewall : Ensure iptables is present.] *********************
ok: [10.1.0.11]

TASK [ansible-role-firewall : Flush iptables the first time playbook runs.] ****
ok: [10.1.0.11]

TASK [ansible-role-firewall : Copy firewall script into place.] ****************
ok: [10.1.0.11]

TASK [ansible-role-firewall : Copy firewall init script into place.] ***********
skipping: [10.1.0.11]

TASK [ansible-role-firewall : Copy firewall systemd unit file into place (for systemd systems).] ***
ok: [10.1.0.11]

TASK [ansible-role-firewall : Configure the firewall service.] *****************
ok: [10.1.0.11]
...
```

Troubleshooting an APB

What happens if your tests go wrong and the Ansible pod fails and produces an error?

Of course, you can list all the APB pods (as shown before) and run the usual oc debug PODNAME command to create a brand-new copy of the pod for troubleshooting.

If you have trouble deleting a failed ServiceInstance, you can edit the object (for example, with oc edit serviceinstance NAME -n test) and remove the Kubernetes finalizer, shown below:

$ oc get serviceinstance -n test -o yaml

```yaml
apiVersion: v1
items:
- apiVersion: servicecatalog.k8s.io/v1beta1
  kind: ServiceInstance
  metadata:
    creationTimestamp: 2018-05-20T21:14:23Z
    finalizers:
    - kubernetes-incubator/service-catalog
    generateName: localregistry-mariadb-deployment-apb-
    generation: 1
    name: localregistry-mariadb-deployment-apb-bd4cb
    namespace: test
...
```
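If you'd rather not edit the object by hand, the same change can be scripted with a JSON patch. A sketch, assuming oc is logged in with cluster-admin rights on the machine running the play; instance_name and instance_namespace are hypothetical variables, set here to the values from the output above.

```yaml
# Hypothetical cleanup play: clears the finalizers of a stuck
# ServiceInstance so that Kubernetes can complete the deletion.
- name: Remove the finalizer from a stuck ServiceInstance
  hosts: localhost
  gather_facts: false
  connection: local
  vars:
    instance_name: localregistry-mariadb-deployment-apb-bd4cb
    instance_namespace: test
  tasks:
    - name: Patch away metadata.finalizers
      command: >
        oc patch serviceinstance {{ instance_name }}
        -n {{ instance_namespace }}
        --type=json
        -p '[{"op": "remove", "path": "/metadata/finalizers"}]'
```

Keep in mind that the finalizer is what makes the Service Catalog wait for the broker to deprovision; once it is removed the object goes away immediately, so anything the APB set up on the remote host stays there until you clean it up yourself.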

Sometimes, nodes can end up with different versions of your APB in their Docker cache, so you might see inconsistent behavior after multiple builds and pushes. In that case, log in to the various OpenShift nodes and clean up the outdated Docker images (you may want to use Ansible from a bastion host to do that).
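A minimal sketch of that cleanup as a playbook run from the bastion host. It assumes your inventory has a nodes group (as OpenShift 3.x installer inventories do) and that the nodes pulled the APB under the internal registry name shown in the apb push output; the playbook name and apb_image variable are hypothetical.

```yaml
# clean-apb-cache.yml (hypothetical), run from the bastion host:
#   ansible-playbook -i /etc/ansible/hosts clean-apb-cache.yml
- name: Remove cached copies of the APB image from every node
  hosts: nodes
  become: yes
  vars:
    apb_image: docker-registry.default.svc:5000/openshift/mariadb-deployment-apb
  tasks:
    - name: Delete the cached image (nodes without it are not an error)
      command: docker rmi {{ apb_image }}
      register: rmi_result
      failed_when: rmi_result.rc != 0 and 'No such image' not in rmi_result.stderr
      changed_when: rmi_result.rc == 0
```

The next APB run on each node then pulls the current image from the registry instead of reusing a stale local copy.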

That's all!

Feel free to ask if you have any questions!

About Alessandro

Alessandro Arrichiello is a Solution Architect for Red Hat Inc. He has a passion for GNU/Linux systems, which began at age 14 and continues today. He works with tools for automating enterprise IT: configuration management and continuous integration through virtual platforms. He’s now working on a distributed cloud environment involving PaaS (OpenShift), IaaS (OpenStack) and processes management (CloudForms), container building, instance creation, HA services management, and workflow builds.
