Software defined storage is a leading technology in our industry with more and more platforms and enterprises are using software defined storage (SDS) to store unstructured data. Today, object storage is a primary workload on SDS as organizations are looking to implement active archives for enhanced access and long term storage. Red Hat's Steve Bohac and Neil Levine covered the bright future for SDS: object storage, active archives, and how Red Hat Ceph provides a solid foundation for all of your storage needs.

First, some vocabulary:

An object is a certain piece of data and associated metadata. This is not a file!

Object storage does not include hierarchical naming system like file systems. Instead, objects are stored in a flat structure and grouped into pools. Access is provided only by a specific object ID.

An active archive is an archive that is:

- always online

- write once, read infrequently, never modified

- heterogeneous media types

- unstructured data

Active Archives, Object Storage, and Red Hat Ceph

Active archives are driven by two trends: data capacity needs are continually growing and the price per byte of storage is declining. This pushes enterprises to be aggressive in data storage. Active archives help organizations get the most utility.

OK cool, how do I use active archives to enable my business? Mobile and web apps that perform at scale use active archives to power dynamic content to millions of users, digital libraries can use active archives to store and retrieve multimedia content, and historical big data projects need someplace to store all that data. Object storage is a great mechanism for storing active archives as the functionality provided (flat system, metadata aware, distributed scale) are all requirements of an active archive system.

So you know you want active archives and object storage is the way to go, how do we get there? Red Hat Ceph is well suited for storing large data sets because of its underlying RADOS layer, which scales the object store peer-to-peer. It also has an efficient access mechanism (RGW) and can work on a variety of hardware. All of this is on top of an open source framework, which makes it a reliable storage solution that can scale to meet the needs of the most extreme workloads.

Best practices

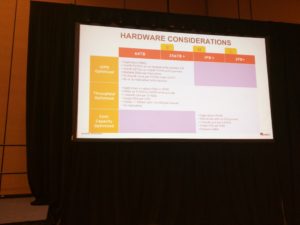

The biggest hardware issue is the ratio of storage to CPU power in single machines for varying workloads. Some might be IOPS optimized and others Capacity optimized. Neil covered several metrics the Ceph team has worked on developing that can help you estimate your hardware needs from your requirements. Check it out below.

Also, make sure to use a load balancer (like an HA Proxy) to help distribute calls to the underlying RADOS layer. You can even distribute calls via client metadata, for example, routing all of your priority callers to SSD storage and everyone else to tape backed storage.

Roadmap

Look to the Ceph project to provide:

- data tiering (move or maintain data in disparate sources like AWS, Tape, RADOS, etc).

- metadata searching capabilities.

- enhanced security (soon to include cloud and server-side encryption on an object-by-object basis).

This was a great presentation and looking forward to hearing more from the Red Hat Ceph team.

Last updated: March 16, 2018