This blog is a continuation of "Creating custom Atomic trees, images, and installers - Part 1." In part one, we learned how to compose our own atomic trees and consume them in a guest. In part two, we will learn how to create our own disk images and installer media.

Creating custom disk images

As mentioned in the previous post, the rpm-ostree-toolbox subcommand imagefactory can be used to create disk images. As of this writing, it can output the following image types:

- kvm - results in a qcow2 image perfect for importing with KVM

- raw - a raw image, which can be used as is or converted to another type

- vsphere - an image suitable for importing into VMware vSphere

- rhevm - an image suitable for importing into RHEV-M

These output image types are controlled by the -i switch to rpm-ostree-toolbox. If you do not pass any image types on the command line, rpm-ostree-toolbox will output all of them. There are other options worth knowing about as well; the --help switch lists them.

[baude@localhost ~]$ sudo rpm-ostree-toolbox imagefactory --help
usage: rpmostreecompose-main [-h] -c CONFIG [--ostreerepo OSTREEREPO]
                             [-i IMAGES] [--name NAME] [--tdl TDL]
                             [--virtnetwork VIRTNETWORK] -o OUTPUTDIR
                             [--overwrite] [-k KICKSTART] [-p PROFILE] [-v]

Use ImageFactory to create a disk image

optional arguments:
  -h, --help            show this help message and exit
  -c CONFIG, --config CONFIG
                        Path to config file
  --ostreerepo OSTREEREPO
                        Path to OSTree repository (default: ${pwd}/repo)
  -i IMAGES, --images IMAGES
                        Output image formats in list format
  --name NAME           Image name
  --tdl TDL             TDL file
  --virtnetwork VIRTNETWORK
                        Optional name of libvirt network
  -o OUTPUTDIR, --outputdir OUTPUTDIR
                        Path to image output directory
  --overwrite           If true, replace any existing output
  -k KICKSTART, --kickstart KICKSTART
                        Path to kickstart
  -p PROFILE, --profile PROFILE
                        Profile to compose (references a stanza in the config
                        file)
  -v, --verbose         verbose output

Many of these options are found automatically if you are using a properly configured git repository, but they are worth noting if you want to customize further. The --tdl file tells the imagefactory application where to find the correct tree from which to construct the image; this tree must contain an atomic-based Anaconda, among other things. The kickstart file is also used by imagefactory to create and partition the disk image, install our customized tree, and finally run several post-install scripts.
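If you script your builds, one option is to assemble the imagefactory command from a list of image types, repeating -i once per type as the example later in this post does. The sketch below is hypothetical: build_imagefactory_cmd and KNOWN_IMAGE_TYPES are illustrative names, and it uses only the -c, --ostreerepo, -o, and -i switches from the help output. It prints the command rather than running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an imagefactory command line that requests
# only some image types. Flags are taken from the --help output above.

# Image types listed in this post; anything else is rejected up front.
KNOWN_IMAGE_TYPES="kvm raw vsphere rhevm"

build_imagefactory_cmd() {
    local config=$1 repo=$2 outdir=$3 t
    shift 3
    local -a cmd=(rpm-ostree-toolbox imagefactory -c "$config"
                  --ostreerepo "$repo" -o "$outdir")
    for t in "$@"; do
        case " $KNOWN_IMAGE_TYPES " in
            *" $t "*) cmd+=(-i "$t") ;;   # one -i switch per image type
            *) echo "unknown image type: $t" >&2; return 1 ;;
        esac
    done
    # No types given means no -i switch, so the tool builds every type.
    printf '%s\n' "${cmd[*]}"
}
```

For example, build_imagefactory_cmd config.ini /srv/rpm-ostree/centos-atomic-host/7/ /srv/rpm-ostree/centos-atomic-host/7/images kvm raw prints a command equivalent to the one used in the next section, minus sudo. Rejecting unknown types early is a choice made by this sketch, not documented rpm-ostree-toolbox behavior.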

When rpm-ostree-toolbox imagefactory runs, it uses libvirt to create a new KVM guest. It then downloads the kernel and initrd from the TDL repository and feeds the kickstart file to Anaconda, which runs inside the guest. During this process, rpm-ostree-toolbox also starts a trivial-httpd process (much like we did earlier when rebasing our atomic image) so that the guest can obtain the customized tree. Note that the same obstacles, such as firewalld or iptables rules, can block this from working. Also, if the KVM host and the machine serving the customized tree are one and the same, the default libvirt networking does not allow communication between host and guest. Adding an iptables rule like:

iptables -I INPUT -s 192.168.122.0/24 -j ACCEPT

can resolve this, depending on your network setup. You may also find the --virtnetwork command-line switch helpful if you have a complex or non-standard libvirt setup. Pass it a libvirt network name (list them with sudo virsh net-list --all) to force rpm-ostree-toolbox to use a specific network. Without the switch, rpm-ostree-toolbox uses your only libvirt network if there is just one; if you have multiple networks, it first tries to use the one called 'default'.
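The network selection order described above can be expressed as a small function. This is a sketch of the behavior as this post describes it, not rpm-ostree-toolbox's actual code; pick_libvirt_network is a hypothetical name. What happens when there are several networks and none is called 'default' is not covered here, so the sketch simply fails in that case.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the selection order described above.
# Usage: pick_libvirt_network REQUESTED [NETWORK...]
#   REQUESTED - value given to --virtnetwork, or "" if none
#   NETWORK   - the available libvirt network names
pick_libvirt_network() {
    local requested=$1 n
    shift
    # 1. An explicit --virtnetwork value always wins.
    if [ -n "$requested" ]; then
        printf '%s\n' "$requested"
        return 0
    fi
    # 2. If there is exactly one network, use it.
    if [ "$#" -eq 1 ]; then
        printf '%s\n' "$1"
        return 0
    fi
    # 3. With several networks, prefer the one named "default".
    for n in "$@"; do
        if [ "$n" = default ]; then
            printf '%s\n' default
            return 0
        fi
    done
    # Behavior beyond this point is not described in the post.
    return 1
}
```

To feed it real data, pass the names reported by libvirt, for example pick_libvirt_network "" $(sudo virsh net-list --all --name). The command substitution is left unquoted on purpose so that each network name becomes a separate argument.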

My current setup is neither complex nor non-standard. I am going to create two new disk images (kvm and raw) using the defaults and my customized tree.

[baude@localhost ]$ sudo rpm-ostree-toolbox imagefactory -c /home/baude/sig-atomic-buildscripts/config.ini -i kvm -i raw --ostreerepo /srv/rpm-ostree/centos-atomic-host/7/ -o /srv/rpm-ostree/centos-atomic-host/7/images
('outputdir', '/srv/rpm-ostree/centos-atomic-host/7')
('workdir', None)
('rpmostree_cache_dir', '/srv/rpm-ostree/cache')
('pkgdatadir', '/usr/share/rpm-ostree-toolbox')
('ostree_repo', '/srv/rpm-ostree/centos-atomic-host/7/repo')
... [omitted for space]
Waiting for factory-build-a292ff3e-fb00-4e5f-a09a-332ce9dfbfac to finish installing, 3320/3600
... [omitted for space]
Created: /srv/rpm-ostree/centos-atomic-host/7/images/centos-atomic-host-7.qcow2
Processing image from qcow2 to raw
/var/lib/imagefactory/storage/a292ff3e-fb00-4e5f-a09a-332ce9dfbfac.body
/srv/rpm-ostree/centos-atomic-host/7/images/centos-atomic-host-7.raw
Created: /srv/rpm-ostree/centos-atomic-host/7/images/centos-atomic-host-7.raw

The length of the imagefactory process is largely determined by your available system resources, the speed of your network connection to the TDL repository, and how many image types you request. The vsphere and rhevm images add considerable time to the process. During most of the process, you will see a "Waiting for factory-build..." message counting down. If you are curious what is going on during that time (or need to debug the process), you can use virt-manager or virt-viewer to observe the guest. Eventually you will see output like the above, which shows the output images and their location. Remember that /srv/rpm-ostree/centos-atomic-host/7 was our outputdir value in config.ini.
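To watch the build with virt-viewer you need the guest's name. A minimal sketch, assuming the factory-build-<uuid> string in the "Waiting for ..." message is also the libvirt domain name (an assumption based on the log above, not something documented here): factory_guest_from_log is a hypothetical helper that extracts that string from a progress line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the guest name out of imagefactory's progress
# line. ASSUMPTION: "factory-build-<uuid>" is the libvirt domain name.
factory_guest_from_log() {
    # Print only the captured name; lines that do not match print nothing.
    sed -n 's/^Waiting for \(factory-build-[0-9a-f-]*\) to finish installing.*/\1/p'
}
```

With the log line above, you could then run sudo virt-viewer factory-build-a292ff3e-fb00-4e5f-a09a-332ce9dfbfac while the build is in progress. If the assumption does not hold, sudo virsh list --name shows which guests are actually running.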

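For verification, we can take a look at those files. Disk images are typically sparse: in the listing below, the first column (from the -s switch) shows the space actually allocated on disk, while the size column shows the apparent, or maximum, size. The raw image reports 10G but occupies only 1.1G, whereas the qcow2 format grows on demand, so its two figures match: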

[baude@localhost ~]$ ls -lsh /srv/rpm-ostree/centos-atomic-host/7/images/
total 2.2G
1.1G -rw-r--r--. 1 root root 1.1G Dec 10 16:17 centos-atomic-host-7.qcow2
1.1G -rw-r--r--. 1 root root  10G Dec 10 16:17 centos-atomic-host-7.raw
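To see why the two columns differ, the sketch below creates a throwaway sparse file and compares its apparent size with the space allocated for it. It assumes GNU coreutils (truncate and stat -c), as found on CentOS; sparse_sizes is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch (GNU coreutils assumed): compare the apparent size of a sparse
# file with the space actually allocated for it, using a scratch file.
sparse_sizes() {
    local f apparent allocated
    f=$(mktemp) || return 1
    truncate -s 100M "$f"               # set the length without writing data
    apparent=$(stat -c %s "$f")         # logical size in bytes
    # %b = number of allocated blocks, %B = size in bytes of each block
    allocated=$(( $(stat -c %b "$f") * $(stat -c %B "$f") ))
    rm -f "$f"
    printf '%s %s\n' "$apparent" "$allocated"
}
```

On a filesystem that supports sparse files, the allocated figure stays far below the 104857600-byte apparent size. For the real images, du -h --apparent-size and plain du -h report the same two quantities, and qemu-img info shows a qcow2 image's virtual size next to its disk size.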

Testing our custom disk image

The images we made are ready to be booted. We can boot these as a KVM guest rather easily. First, I like to copy the image into the default images location for libvirt.

[baude@localhost ~]$ sudo cp -v /srv/rpm-ostree/centos-atomic-host/7/images/centos-atomic-host-7.qcow2 /var/lib/libvirt/images/
‘/srv/rpm-ostree/centos-atomic-host/7/images/centos-atomic-host-7.qcow2’ -> ‘/var/lib/libvirt/images/centos-atomic-host-7.qcow2’

Once the qcow2 image is in the correct location, you can use virt-install to test-boot it. In the example below, I am booting it with 1 CPU and 2GB of memory. Also note that I am attaching my custom atomic ISO, which performs the cloud-init initialization for me. To learn more about this ISO, see Getting started with cloud-init.

[baude@localhost ~]$ sudo virt-install --name=centos7_custom --memory=2048 --vcpus=1 --disk=/var/lib/libvirt/images/centos-atomic-host-7.qcow2 --disk=/var/lib/libvirt/images/atomic0-cidata.iso --bridge=virbr0 --import
WARNING KVM acceleration not available, using 'qemu'
Starting install...
Creating domain... | 0 B 00:00:00
Connected to domain centos7_custom
Escape character is ^]
[ 0.000000] Initializing cgroup subsys cpuset
[ 0.000000] Initializing cgroup subsys cpu
[ 0.000000] Initializing cgroup subsys cpuacct
[ 0.000000] Linux version 3.10.0-123.9.3.el7.x86_64 (builder@kbuilder.dev.centos.org) (gcc version 4.8.2 20140120 (Red Hat 4.8.2-16) (GCC) ) #1 SMP Thu Nov 6 15:06:03 UTC 2014
[ 0.000000] Command line: BOOT_IMAGE=/ostree/centos-atomic-host-bcafef71611f8c4753815ad44db31c0a66e8e7bec2159997e3262a17a299db97/vmlinuz-3.10.0-123.9.3.el7.x86_64 no_timer_check console=ttyS0,115200n8 console=tty1 vconsole.font=latarcyrheb-sun16 vconsole.keymap=us rd.lvm.lv=atomicos/root root=/dev/mapper/atomicos-root ostree=/ostree/boot.0/centos-atomic-host/bcafef71611f8c4753815ad44db31c0a66e8e7bec2159997e3262a17a299db97/0
[ 0.000000] e820: BIOS-provided physical RAM map:
[ 0.000000] BIOS-e820: [mem 0x0000000000000000-0x000000000009fbff] usable
[ 0.000000] BIOS-e820: [mem 0x000000000009fc00-0x000000000009ffff] reserved
[ 0.000000] BIOS-e820: [mem 0x00000000000f0000-0x00000000000fffff] reserved
[ 0.000000] BIOS-e820: [mem 0x0000000000100000-0x000000007fffdfff] usable
[ 0.000000] BIOS-e820: [mem 0x000000007fffe000-0x000000007fffffff] reserved
[ 0.000000] BIOS-e820: [mem 0x00000000fffc0000-0x00000000ffffffff] reserved
... [omitted for space]
CentOS Linux 7 (Core)
Kernel 3.10.0-123.9.3.el7.x86_64 on an x86_64
localhost login:

The image has now successfully booted to the login screen.
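If you rebuild images often, the test boot can be wrapped in a function so that each new image is booted the same way. This is a hypothetical sketch: boot_test_image and the RUN variable are illustrative names, and the virt-install options are exactly those from the command above. By default it only prints the command.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the test boot above. By default it only
# prints the command; set RUN=1 to actually launch the guest with sudo.
boot_test_image() {
    local name=$1 image=$2 seed_iso=$3
    # Same options as the manual virt-install run: 2GB RAM, 1 vCPU,
    # the disk image plus the cloud-init ISO, bridged to virbr0.
    local -a cmd=(virt-install "--name=$name" --memory=2048 --vcpus=1
                  "--disk=$image" "--disk=$seed_iso"
                  --bridge=virbr0 --import)
    if [ "${RUN:-0}" = 1 ]; then
        sudo "${cmd[@]}"
    else
        printf '%s\n' "${cmd[*]}"
    fi
}
```

RUN=1 boot_test_image centos7_custom /var/lib/libvirt/images/centos-atomic-host-7.qcow2 /var/lib/libvirt/images/atomic0-cidata.iso starts the guest. Because guest names must be unique, remove an earlier test guest (for example with sudo virsh destroy centos7_custom followed by sudo virsh undefine centos7_custom) before reusing its name.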

Creating a custom install ISO

As mentioned earlier, you can also use rpm-ostree-toolbox to create custom install media. This process produces an ISO as well as PXE-boot images. All of these images have your custom tree embedded in them, so when Anaconda runs, it installs your content to the filesystem.

The install media can currently be built in two ways: using a container or using libvirt. Each method has its advantages, but we have made the container method the default. Before we actually attempt to create the image, it is worth looking at the help for 'rpm-ostree-toolbox installer'.

[baude@localhost ]$ sudo rpm-ostree-toolbox installer --help
usage: rpmostreecompose-main [-h] -c CONFIG [-b YUM_BASEURL] [-p PROFILE]
                             [--util_uuid UTIL_UUID] [--util_tdl UTIL_TDL]
                             [-v] [--skip-subtask SKIP_SUBTASK]
                             [--virtnetwork VIRTNETWORK] [--virt]
                             [--post POST] -o OUTPUTDIR

Create an installer image

optional arguments:
  -h, --help            show this help message and exit
  -c CONFIG, --config CONFIG
                        Path to config file
  -b YUM_BASEURL, --yum_baseurl YUM_BASEURL
                        Full URL for the yum repository
  -p PROFILE, --profile PROFILE
                        Profile to compose (references a stanza in the config
                        file)
  --util_uuid UTIL_UUID
                        The UUID of an existing utility image
  --util_tdl UTIL_TDL   The TDL for the utility image
  -v, --verbose         verbose output
  --skip-subtask SKIP_SUBTASK
                        Skip a subtask (currently: docker-lorax)
  --virtnetwork VIRTNETWORK
                        Optional name of libvirt network
  --virt                Use libvirt
  --post POST           Run this %post script in interactive installs
  -o OUTPUTDIR, --outputdir OUTPUTDIR
                        Path to image output directory

Note that you must specify the outputdir (-o) when you run this command. You can also enable the libvirt method of creating installer images with the --virt command-line switch. And if you are creating install media repeatedly, explore the --skip-subtask and --util_uuid options to speed up the process. Following our previous examples, we would start the creation process with:

Author's note: Make sure the docker daemon is running.

[baude@localhost ]$ sudo systemctl start docker

[baude@localhost ]$ sudo rpm-ostree-toolbox installer -c /home/baude/sig-atomic-buildscripts/config.ini --ostreerepo /srv/rpm-ostree/centos-atomic-host/7/ -o /srv/rpm-ostree/centos-atomic-host/7/images
('workdir', None)
('rpmostree_cache_dir', '/srv/rpm-ostree/cache')
('pkgdatadir', '/usr/share/rpm-ostree-toolbox')
('os_name', 'centos-atomic-host')
('os_pretty_name', 'CentOS Atomic Host')
('tree_name', 'standard')
('tree_file', 'centos-atomic-host.json')
('arch', 'x86_64')
('release', '7')
('ref', 'centos-atomic-host/7/x86_64/standard')
('yum_baseurl', 'http://mirror.centos.org/centos/7/os/x86_64/')
('lorax_additional_repos', 'http://cbs.centos.org/repos/atomic7-testing/x86_64/os/ , http://mirror.centos.org/centos/7/updates/x86_64/')
('lorax_exclude_packages', None)
('local_overrides', None)
... [omitted for space]

Once complete, the newly created content lands in the subdirectory 'lorax' within the outputdir you specified when you ran the command. The ISO itself is in the 'images' subdirectory of 'lorax'.

[baude@localhost sig-atomic-buildscripts]$ ls -l /srv/rpm-ostree/centos-atomic-host/7/lorax
total 16
drwxr-xr-x. 3 root root 4096 Dec 11 09:23 EFI
drwxr-xr-x. 3 root root 4096 Dec 11 09:23 images
drwxr-xr-x. 2 root root 4096 Dec 11 09:23 isolinux
drwxr-xr-x. 2 root root 4096 Dec 11 09:23 LiveOS
[baude@localhost sig-atomic-buildscripts]$ ls -l /srv/rpm-ostree/centos-atomic-host/7/lorax/images/
total 771140
-rw-r--r--. 1 root root 784334848 Dec 11 09:23 boot.iso
-rw-r--r--. 1 root root   6443008 Dec 11 09:23 efiboot.img
drwxr-xr-x. 2 root root      4096 Dec 11 09:23 pxeboot
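Before booting or copying the installer, a quick check of the output tree can save a failed test. The hypothetical check_lorax_tree helper below looks for the directories and files shown in the listing above; the expected names come only from that listing, so adjust them if your lorax output differs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a lorax output directory has the
# layout shown above. Returns non-zero and reports anything missing.
check_lorax_tree() {
    local root=$1 missing=0 p
    for p in EFI images isolinux LiveOS images/pxeboot; do
        if [ ! -d "$root/$p" ]; then
            echo "missing directory: $p" >&2
            missing=1
        fi
    done
    for p in images/boot.iso images/efiboot.img; do
        # -s: the file exists and is not empty
        if [ ! -s "$root/$p" ]; then
            echo "missing or empty file: $p" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, check_lorax_tree /srv/rpm-ostree/centos-atomic-host/7/lorax && sudo cp /srv/rpm-ostree/centos-atomic-host/7/lorax/images/boot.iso /var/lib/libvirt/images/ copies the ISO only if the tree looks complete.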

Testing our custom installer

We can test our custom installer in much the same way we tested our custom disk image earlier. The easiest method is again to use virt-install to boot the ISO in a KVM guest.

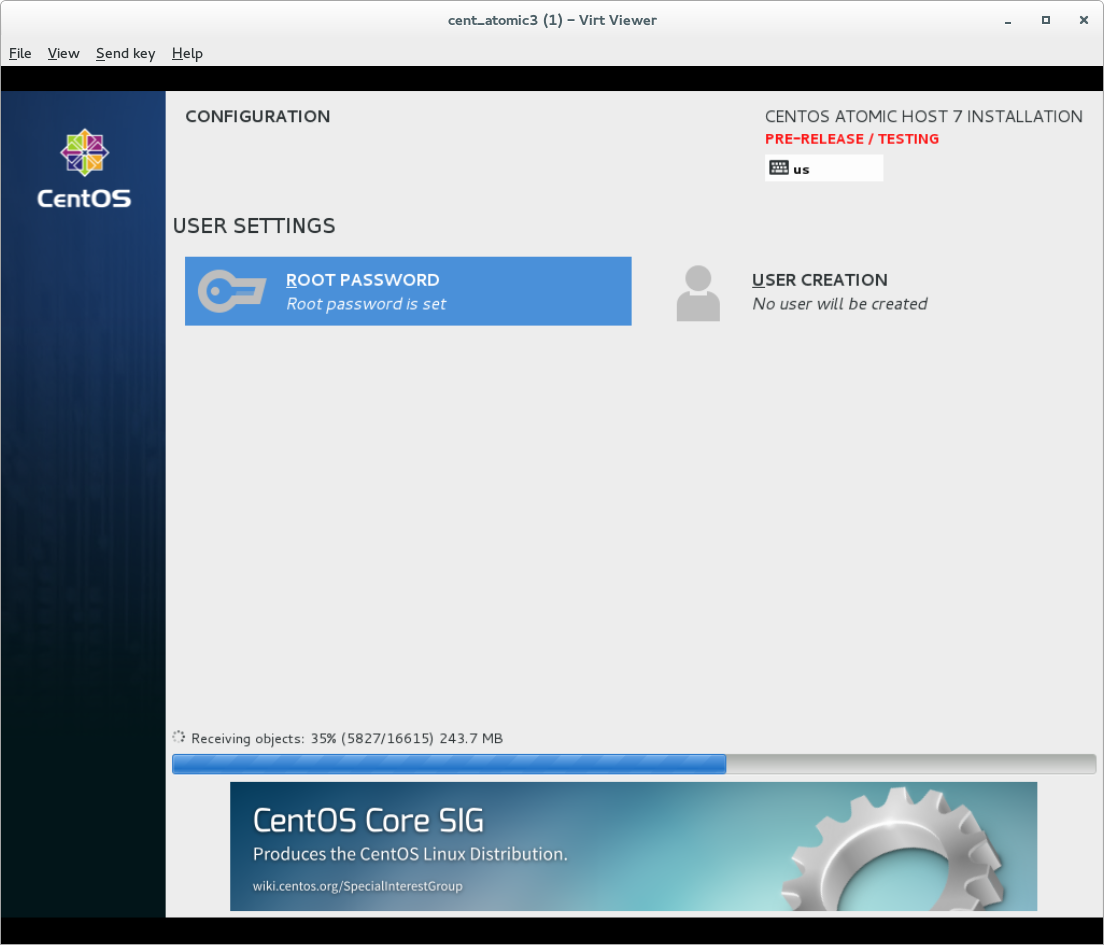

sudo virt-install --name=cent_atomic3 --memory=2048 --vcpus=2 --disk=/var/lib/libvirt/images/test.qcow2,size=5 --cdrom /var/lib/libvirt/images/boot.iso
Starting install...
Allocating 'test.qcow2' | 5.0 GB 00:00:00
Creating domain... | 0 B 00:00:00
... [omitted for space]

Eventually the boot process transitions into Anaconda, where you can set up your installation and watch it complete.

Using existing media to install your custom tree

During the creation of these images, you may have noticed I specifically called out that we were embedding our custom tree into our install media. We can, however, use the existing media and point it to our own custom tree. This is similar to using the dracut/Anaconda repo= option, which tells the installer where to get its content. For Atomic, however, repo= does not apply, so we need to use a kickstart file instead.

If you look in the git repository we have been using to build our images, there is a file called 'centos-atomic-host-7.ks'. This file was used to create our virtual disk, which, as you may remember, was installed inside a KVM guest. During that install, Anaconda grabbed the tree content from its host, which was running trivial-httpd. The kickstart file contains a crucial line:

ostreesetup --osname="@OSTREE_OSNAME@" --remote="@OSTREE_OSNAME@" --ref="@OSTREE_REF@" --url="http://@OSTREE_HOST_IP@:@OSTREE_PORT@" --nogpg

This line tells the installer where to get its content. Therefore, if you want to use the existing installer with your composed tree, you can feed it a kickstart similar to the one in the git repository, changing the line above to match your environment. Every value between @ signs must be defined. And remember, you will need trivial-httpd or a web server to host that content.
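One way to define every @...@ value is to substitute them with sed before handing the kickstart to the installer. This is a minimal sketch: render_kickstart is a hypothetical helper, and the example values are assumptions. The OS name and ref match the config output shown earlier, 192.168.122.1 is the host's address on libvirt's default network, and port 8000 is a placeholder for wherever your trivial-httpd or web server listens.

```shell
#!/usr/bin/env bash
# Sketch: fill in the @...@ placeholders of the kickstart read on stdin.
# Characters special in sed replacements ("|", "&", "\") are NOT escaped,
# which is fine for simple names, refs, IPs, and ports.
render_kickstart() {
    local osname=$1 ref=$2 host_ip=$3 port=$4 out
    out=$(sed -e "s|@OSTREE_OSNAME@|$osname|g" \
              -e "s|@OSTREE_REF@|$ref|g" \
              -e "s|@OSTREE_HOST_IP@|$host_ip|g" \
              -e "s|@OSTREE_PORT@|$port|g") || return 1
    # Any @NAME@ token left over is a placeholder we did not define.
    if printf '%s\n' "$out" | grep -q '@[A-Z_][A-Z_]*@'; then
        echo "undefined placeholder left in kickstart" >&2
        return 1
    fi
    printf '%s\n' "$out"
}
```

For example, render_kickstart centos-atomic-host centos-atomic-host/7/x86_64/standard 192.168.122.1 8000 < centos-atomic-host-7.ks > my-atomic.ks. If the repository's kickstart contains other @...@ placeholders, the leftover check fails, which tells you another substitution is needed. The resulting file can then be handed to the existing installer, for example with Anaconda's inst.ks= boot option.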

Final thoughts

Development of Atomic is still ongoing, and its introduction into CentOS is still quite new. Development on rpm-ostree-toolbox also continues as bugs are reported and new features are defined. Things will continue to change, so keep an eye out for major changes or enhancements.

Last updated: April 5, 2018