As html5 and client side solutions become more prevalent, the need for handling more and more data through javascript will increase. One such challenge, and the focus of this article, is a strategy for handling hundreds of megabytes of data through Web Workers.

What created this need for me personally was the development of Log Reaper [1] which is a client side approach to parsing log files with no server side upload or processing. Log Reaper identifies and parses log files (of currently accepted types) in a Web Worker, then communicates the structured objects back to the browser where they are further map reduced and visualized.

My initial challenge was essentially how to communicate potentially hundreds of megabytes from the Web Worker to main browser. Considering that the FileReader can be accessed in Web Workers, there is no concern from browser thread -> Web Worker, only Web Worker -> browser thread.

If you know a little about this topic you may jump to the conclusion to just use Transferable Objects. I can tell you right now that may not be the best approach for larger objects. And when I say larger I mean approaching 500 megabytes.

Strategies

- Pass the entire object array back using

self.postMessage(JSON.stringify(array)) - Transfer the object back using

self.postMessage(arrayBuffer, [arrayBuffer]) - Iteratively pass each individual item in the object array back using

self.postMessage(JSON.stringify(item))for each item in the array

Problems with the 1st strategy

First off, stringifying the entire Object and passing it back is a fine means of communication. In fact, typically you will find it is very fast for smaller datasets around 100 megabytes or less. The problem comes in as the size of the data increases. Once reaching in the hundreds of megabytes of data, the browser begins to have issues not with the passing of the data, but with the parsing of the returned data.

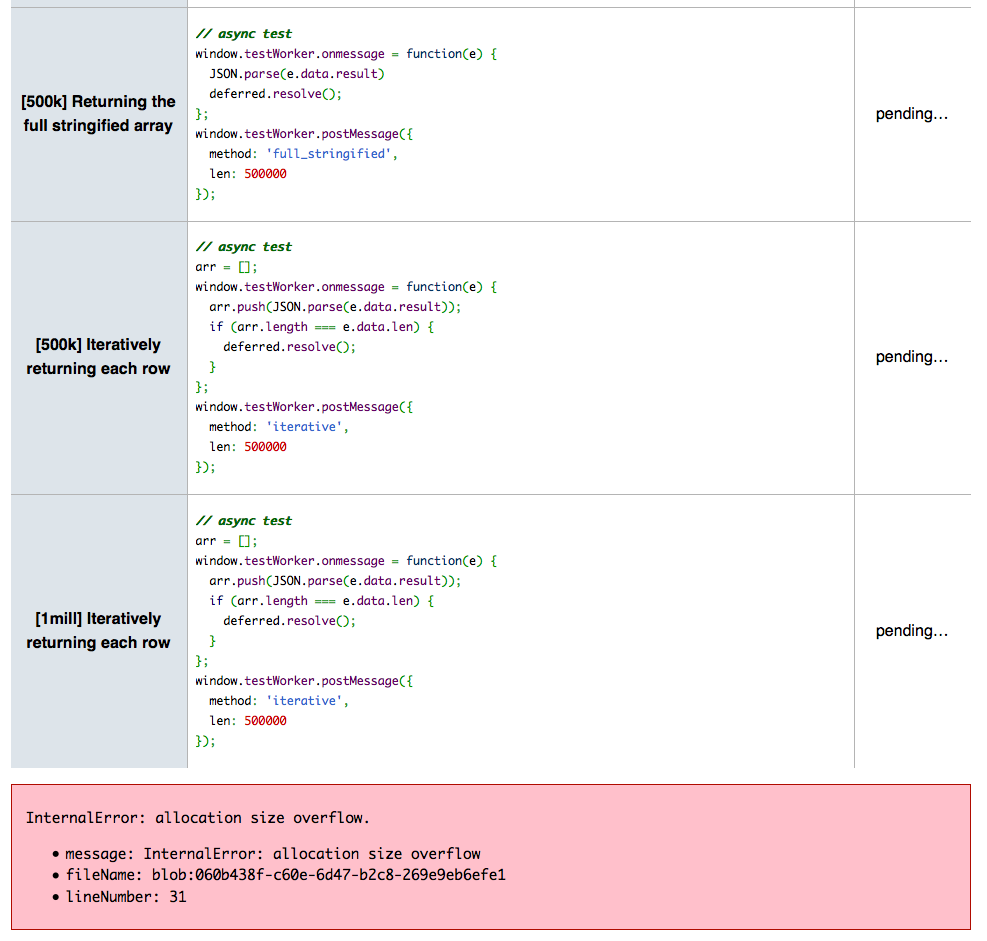

A few examples of failures when JSON.parse'ing larger stringified objects:

The error message may be a bit different depending on your browser version. In this particular test case 100k array items is anywhere from 200-500 megabytes. The native JSON.parse, as you can see, begins to give problems around this size range. Do note that the number given is not a scientific measurement, it is simply a display that there is a break point where JSON.parse is no longer effective.

Problems with the 2nd strategy

Transferable Objects are billed as the answer to performance issues with worker communication when you have a handle on an ArrayBuffer or you can create one from the data. Don't mistake me, Transferable Objects are an excellent options for datasets up to 1-200 megabytes, but they aren't reliable as you increase the size of the object transferred.

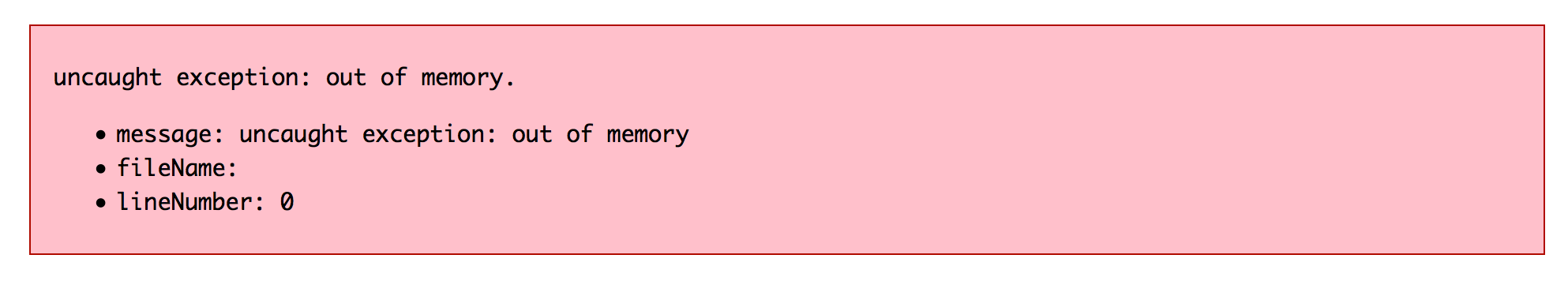

Here are a few issues you may see once you hit a larger file transferred:

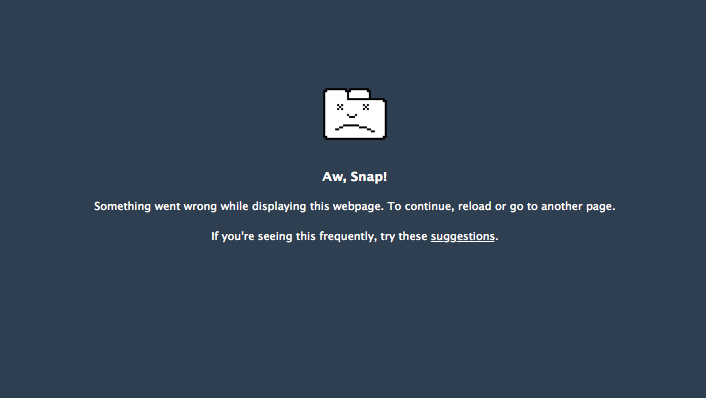

(The above image is from the Chrome "Aw, Snap!" page after failure from transferring a large ArrayBuffer)

These issues were seen around 10k-100k array items. That would roughly translate to between 20 and 200 megabytes in the testcase I am using. You can run this yourself at Transferable Objects with Web Workers

If you have a sharp eye you will notice I'm not JSON.parsing the results, that is because this test is purely to demonstrate the fallibility of transferring ArrayBuffers, not of the failing of JSON.parse.

The safe strategy

From much research, trial and error, and testing, the safest strategy I've come to is iteratively returning the array from the Web Worker. This isn't a fancy solution, and isn't the best use of the available APIs, but it works, and is consistent, and doesn't cause various Out Of Memory and browser failings. When dealing with a public facing app like Log Reaper it needs to work consistently to provide a stable user experience.

For example, where every other strategy fails when approaching 500k array items, iteratively posting back each line works fine:

Let's take a quick look at the flow of code here for this iterative approach.

Message is posted to a Web Worker

someWorker.postMessage({action: 'doSomething'});

Worker Receives the message

In the jsperf testcase attempted to remain faithful to the real world use-case of Log Reaper as I generate an object that would closely resemble, in size, what you might see in a JBoss log.

self.onmessage = function(e) {

obj = {

timestamp: +(new Date()),

severity: sevs[Math.floor(Math.random()*sevs.length)],

category: randomString(50),

thread: randomString(50),

message: randomString(500)

};

for(var i = 0; i < e.data.len; i++) {

postMessage({method: e.data.method, result: JSON.stringify(obj), len: e.data.len});

}

};

In the above code each and every line in the array is posted back to the main browser thread.

Worker Receives each line

arr = [];

someWorker.onmessage = function(e) {

arr.push(JSON.parse(e.data.result));

if (arr.length === e.data.len) {

console.debug("Complete!");

}

};

Conclusion

The modern browser is surprisingly capable of handling large amounts of data, in the hundreds of megabytes and higher. When using Web Workers combined with an iterative parsing technique, you can have a stable means of parsing and handling large object arrays.

Notes

- The jsperf testcases are not being used as a micro-benchmarks but for establishing usable strategies for handling large objects.

- In the real world example, Log Reaper, the message field can vary from 0,1 length to n. For this article, I choose a length of 500 randomly generated characters, for the message field, to simulate dense amount of data.

[1] https://access.redhat.com/site/labsinfo/logreaper

Last updated: January 10, 2023