Red Hat OpenShift Virtualization enables the deployment of virtual machines alongside containerized workloads. This article discusses how confidential computing technology can safeguard data-in-use and maintain the integrity of these virtual machines. Additionally, we present the highlights of a proof of concept (PoC) for deploying a confidential virtual machine within this environment.

In this article, we will introduce techniques for supporting confidential computing within the OpenShift Virtualization environment, specifically focusing on Red Hat Enterprise Linux and KubeVirt. We will also provide details about the attestation flow that validates the integrity of the confidential environment, discuss common use cases, and conclude with insights on future developments in the field. First, we'll provide an overview of how this technology integrates into the virtual machine lifecycle and creation process.

Confidential computing overview

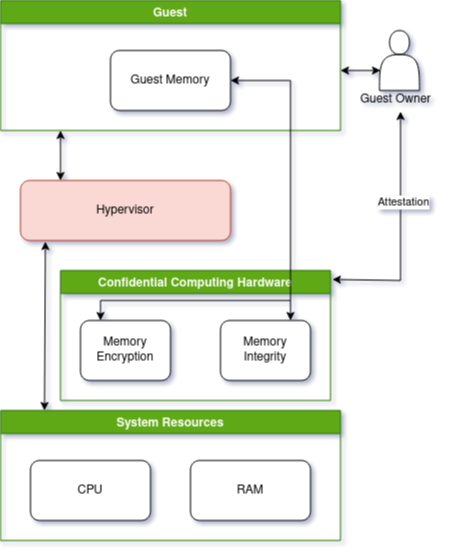

Confidential computing is a security paradigm focused on protecting data in use. It achieves this by performing computations within a hardware-based, attested Trusted Execution Environment (TEE). This approach ensures that sensitive information remains isolated and secure from unauthorized access, even during processing. Confidential computing is instrumental in advancing the zero trust model and enhances data privacy and integrity across various platforms, particularly those managed by third parties.

Confidential computing provides the following key features:

- Confidentiality: The virtual machine’s state is encrypted and cannot be snooped upon even by the host hypervisor. The confidential computing hardware decrypts guest memory on the fly and makes it available in the virtual machine environment.

- Integrity: It protects the virtual machine’s memory and state from external modification. For example, an adversary who cannot read a virtual machine’s memory might still alter it at random, thereby compromising its integrity. Confidential computing hardware provides integrity guarantees that prevent this, even when the attacker is the host hypervisor.

- Attestation: It allows the VM owner to confirm the integrity and authenticity of their computing environment before executing sensitive workloads.

The diagram in Figure 1 illustrates the high-level components in confidential computing.

RHEL support

Confidential computing utilizes an additional security processor to protect guest environments from malicious access by external entities. On x86 platforms, Intel’s Trust Domain Extensions (TDX) and AMD’s Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP) are the latest technologies that provide confidential computing features to guest environments.

RHEL supports these features as follows:

- AMD SEV-SNP: This has been available as a tech-preview feature in RHEL since version 9.5.

- Intel TDX: These extensions are actively being developed upstream and are planned for introduction in RHEL once the necessary changes are accepted. As an interim step, interested users can experiment with TDX images available through the CentOS Stream Virt SIG.

Because KubeVirt and OpenShift Virtualization do not yet support SEV-SNP or TDX, our PoC enables SEV-SNP guests through customizations and demonstrates attestation within such guests.

The lifecycle of a virtual machine

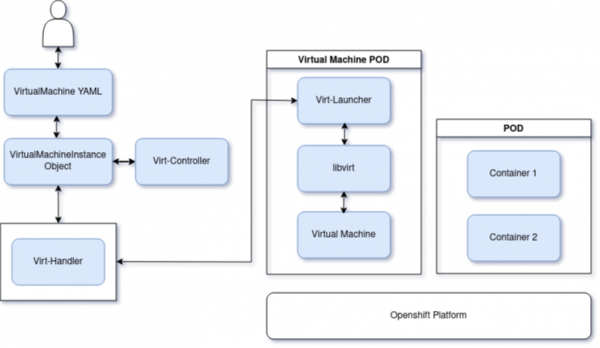

OpenShift Virtualization enables traditional virtual machines to exist as containerized workloads. The feature is available as an operator for OpenShift.

To achieve this, KubeVirt introduces a custom resource called VirtualMachine. When a VirtualMachine object is created, KubeVirt components create a set of pods and use libvirt APIs to create a virtual machine.

KubeVirt introduces the following major components to manage a virtual machine:

- Virt Controller: The virt-controller watches for new VM definitions and creates a virt-launcher pod that serves as the container for the virtual machine.

- Virt Handler: The virt-handler runs as a daemon set and manages the virtual machines on a specific node. Unlike virt-launcher, it is a privileged component that prepares the node for running the VM.

- Virt Launcher: The virt-launcher pod uses the virtual machine definition to run a guest utilizing libvirt APIs.

Figure 2 shows the virtualization components that KubeVirt introduces to OpenShift.

OpenShift Virtualization custom features

The KubeVirt project is actively working on exposing confidential computing features to guests.

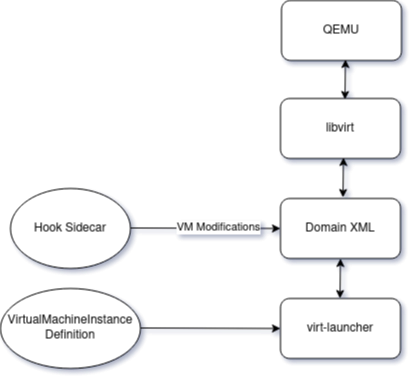

However, the current lack of support for these features in the VirtualMachine object definitions limits customization options. For our PoC, we have used KubeVirt’s hook sidecar feature to address this limitation.

A hook sidecar is a container that applies customizations before a virtual machine is initialized. Although it is not recommended for production, it is beneficial for experimenting with unsupported features in OpenShift Virtualization. This makes it ideal for creating a proof-of-concept for enabling SEV-SNP in the guest. Figure 3 illustrates this concept.

A hook sidecar is typically introduced via a ConfigMap object. It lets us update the libvirt XML before virtual machine instantiation using a Go binary, a shell script, or a Python script. For example, the following ConfigMap definition snippet introduces an onDefineDomain hook to modify the virtual machine’s XML.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: sidecar-sev
    data:
      my_script.py: |
        #!/usr/bin/env python3
        ...
        os = root.find('os')
        os_loader = os.find('loader')
        if os_loader is None:
            os_loader = ET.Element('loader')
            os.append(os_loader)
        # Point the guest at the SNP-aware firmware image.
        os_loader.text = "/usr/share/edk2/ovmf/OVMF.amdsev.fd"
        os_loader.set('type', 'rom')
        os_loader.set('stateless', 'yes')
        # Drop loader attributes set by the default configuration.
        os_loader.attrib.pop('readonly', None)
        os_loader.attrib.pop('secure', None)
        # Remove any nvram elements from the os section.
        for nvram in os.findall('nvram'):
            os.remove(nvram)

To summarize, this replaces the default firmware, OVMF_CODE.secboot.fd, with an SNP-aware firmware, OVMF.amdsev.fd. It also changes some default loader options, as required to run an SEV-SNP guest. In addition, we set sev-snp as the launchSecurity type so that libvirt creates an SEV-SNP guest for us. The following code block achieves this:

    launch_security = root.find('launchSecurity')
    if launch_security is None:
        launch_security = ET.Element('launchSecurity')
        root.append(launch_security)
    launch_security.set('type', 'sev-snp')
    cbitpos = launch_security.find('cbitpos')
    if cbitpos is None:
        cbitpos = ET.Element('cbitpos')
        launch_security.append(cbitpos)
    cbitpos.text = '51'
    ...

Finally, the VM YAML is updated with the hook sidecar annotation so that these changes take effect before the virtual machine is launched. Note the onDefineDomain hook path, which is called when a virtual machine is created.

    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      labels:
        kubevirt.io/os: linux
      name: sev-vm2
    spec:
      ...
      architecture: amd64
      running: true
      template:
        metadata:
          annotations:
            hooks.kubevirt.io/hookSidecars: '[{"args": ["--version", "v1alpha2"],
              "configMap": {"name": "sidecar-sev", "key": "my_script.py", "hookPath": "/usr/bin/onDefineDomain"}}]'
        spec:
          domain:
            ...

Besides the sidecar modifications, we also use a modified HyperConverged Cluster Operator (HCO) configuration. The HCO acts as the entry point and the single source of truth for OpenShift Virtualization. The modified configuration replaces the virt-launcher and the virt-install images with our custom versions, and it enables the hook sidecar and the WorkloadEncryptionSEV feature gates. The hook sidecar feature gate is needed to apply the sidecar customizations we described, while WorkloadEncryptionSEV exposes /dev/sev to the virt-launcher pod so that it can configure the SEV-SNP-enabled guest.
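The two script fragments shown earlier can be combined into one self-contained sketch. This is a minimal illustration rather than the PoC's actual script: the function name `apply_sev_snp`, the sample domain XML, and the assumption that the hook receives the domain XML as its fourth command-line argument and prints the result to standard output are ours. The firmware path and the `cbitpos` value of 51 are copied from the snippets above; the correct C-bit position depends on the host CPU.

```python
#!/usr/bin/env python3
"""Sketch of an onDefineDomain hook that marks a domain as an SEV-SNP guest."""
import sys
import xml.etree.ElementTree as ET

SNP_FIRMWARE = "/usr/share/edk2/ovmf/OVMF.amdsev.fd"  # path taken from the article


def apply_sev_snp(domain_xml: str, cbitpos: str = "51") -> str:
    """Return domain_xml with SNP firmware and a sev-snp launchSecurity element."""
    root = ET.fromstring(domain_xml.strip())

    # Named os_elem so that Python's os module is not shadowed.
    os_elem = root.find("os")
    if os_elem is None:
        os_elem = ET.SubElement(root, "os")

    loader = os_elem.find("loader")
    if loader is None:
        loader = ET.SubElement(os_elem, "loader")
    loader.text = SNP_FIRMWARE
    loader.set("type", "rom")
    loader.set("stateless", "yes")
    loader.attrib.pop("readonly", None)
    loader.attrib.pop("secure", None)

    # The firmware is declared stateless, so drop any <nvram> elements.
    for nvram in os_elem.findall("nvram"):
        os_elem.remove(nvram)

    launch_security = root.find("launchSecurity")
    if launch_security is None:
        launch_security = ET.SubElement(root, "launchSecurity")
    launch_security.set("type", "sev-snp")

    cbit = launch_security.find("cbitpos")
    if cbit is None:
        cbit = ET.SubElement(launch_security, "cbitpos")
    cbit.text = cbitpos

    return ET.tostring(root, encoding="unicode")


# Hypothetical input, loosely modelled on a default secure-boot domain.
SAMPLE_DOMAIN = """
<domain type="kvm">
  <os>
    <type arch="x86_64" machine="q35">hvm</type>
    <loader readonly="yes" secure="yes" type="pflash">/usr/share/OVMF/OVMF_CODE.secboot.fd</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/sample_VARS.fd</nvram>
  </os>
</domain>
"""

if __name__ == "__main__":
    # Assumption: the sidecar passes the domain XML as the fourth argument.
    source = sys.argv[4] if len(sys.argv) > 4 else SAMPLE_DOMAIN
    print(apply_sev_snp(source))
```

Keeping the transformation in a pure function that maps an XML string to an XML string makes it easy to test outside a cluster before it is wrapped in a ConfigMap.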

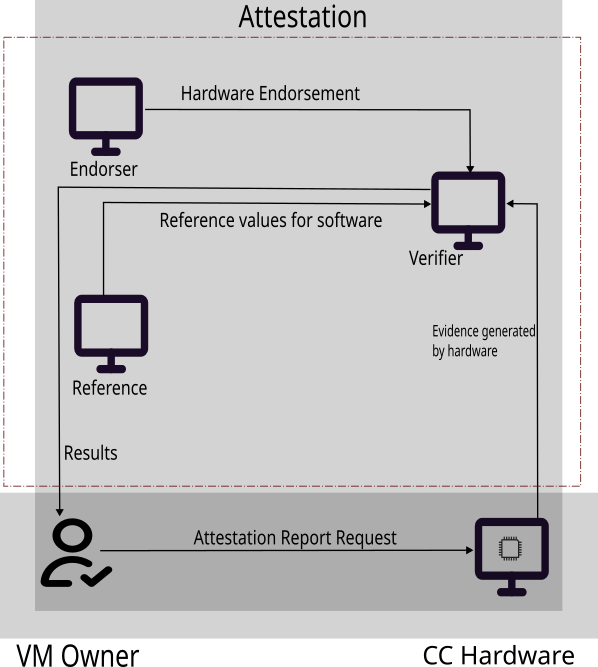

Attestation verifies the confidential computing environment

Attestation is a vital tool for validating that a guest is running in a trusted execution environment. As part of this process, the guest environment fetches trustworthiness evidence from the hardware and verifies it with either a local or a remote attestation server based on user requirements. Figure 4 illustrates the idea of attestation.

In this process, the owner of the virtual machine initiates a request to fetch an attestation report either manually or via prebuilt logic in the virtual machine environment.

The report usually consists of two parts:

- Evidence that proves the trustworthiness of the hardware enclave. This is generated by the confidential computing hardware and identifies it: hardware information and versions, firmware versions, and characteristics of the hardware such as memory encryption.

- Optionally, evidence that the software has not been tampered with (for example, a measurement of the boot image).

The verifier checks the evidence in two ways: it obtains an endorsement, for example from the processor manufacturer, that the hardware report is valid, and it compares the software measurements against known reference values. Depending on the results, the virtual machine owner can decide to continue execution or abort.
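The verification step can be sketched in a few lines. This is a toy model under stated assumptions, not the layout of a real SEV-SNP report or the API of any attestation service: the `Evidence` and `ReferenceValues` fields are hypothetical, and the `endorsed_by_vendor` flag merely stands in for validating the manufacturer's endorsement.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """Hypothetical, simplified attestation evidence."""
    firmware_version: tuple[int, int]  # reported platform firmware version
    memory_encryption: bool            # hardware characteristic
    boot_measurement: str              # hex digest of the boot image
    endorsed_by_vendor: bool           # stand-in for endorsement validation


@dataclass(frozen=True)
class ReferenceValues:
    """Hypothetical values that the VM owner considers acceptable."""
    min_firmware_version: tuple[int, int]
    allowed_measurements: frozenset[str]


def verify(evidence: Evidence, ref: ReferenceValues) -> list[str]:
    """Return the failed checks; an empty list means the evidence passes."""
    failures = []
    if not evidence.endorsed_by_vendor:
        failures.append("hardware report not endorsed by the manufacturer")
    if not evidence.memory_encryption:
        failures.append("memory encryption is not enabled")
    if evidence.firmware_version < ref.min_firmware_version:
        failures.append("firmware version below the accepted minimum")
    if evidence.boot_measurement not in ref.allowed_measurements:
        failures.append("boot measurement does not match a reference value")
    return failures


ref = ReferenceValues(min_firmware_version=(1, 55),
                      allowed_measurements=frozenset({"ab12"}))
good = Evidence((1, 55), True, "ab12", True)
tampered = Evidence((1, 55), True, "ffff", True)
print(verify(good, ref))      # []
print(verify(tampered, ref))  # ['boot measurement does not match a reference value']
```

Returning the list of failed checks, rather than a single boolean, lets the owner see why an environment was rejected before deciding to abort.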

TEE-dependent system boot policy

Applications of attestation come in various forms. In this article, we explore an example where a virtual machine (VM) owner controls the virtual machine boot flow and allows it to proceed only if system attestation can prove that the environment is indeed a Trusted Execution Environment (TEE). The VM owner can achieve such control by using a remote attestation server.

For our attestation demonstration, we refer to Trustee, an open source remote attestation server that provides various essential components for attesting confidential computing environments as described below:

- Key Broker Service (KBS): KBS is a server designed to support remote attestation and deliver secrets. It corresponds to the Relying Party in the RATS attestation model.

- Attestation Service (AS): AS verifies the TEE evidence by interacting with the Endorser, an external component that verifies the hardware evidence, and the Reference Value Provider Service (RVPS).

- RVPS: This component provides reference values for software and hardware TEE evidence. For example, RVPS can provide the acceptable firmware versions for processor firmware or message digests for critical software pieces.

- Trustee-attester: The trustee-attester serves as the client-side endpoint for attestation. Although it is decoupled from the components of the trustee server, it communicates with the trustee server to provide client evidence and to fetch guest secrets after successful attestation.

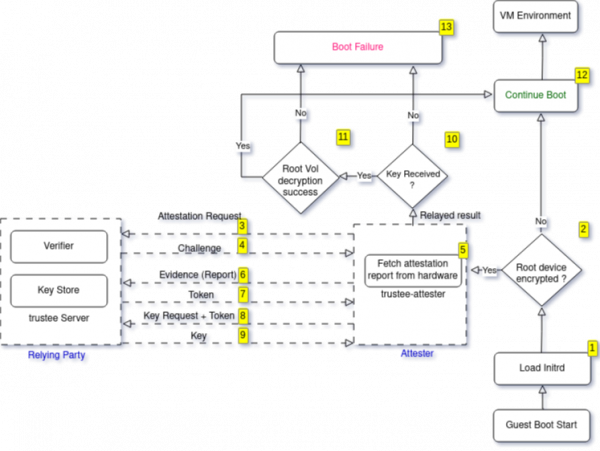

The flowchart in Figure 5 depicts how our PoC integrates remote attestation to validate the platform’s identity and control virtual machine boot.

In attestation, two main components enable policy decisions based on evidence. The first is the attester, which collects hardware evidence. Typically, it is local to the system that is responsible for creating the trusted execution environment. The second is the relying party, which collects that evidence, has it verified by a verifier, and then makes policy decisions.

In this section, we describe our attestation example and identify the roles played by the main components of attestation.

- As the system powers up, the kernel boots, the initrd loads required modules and attempts to mount the root volume.

- The custom initrd has logic baked in it so that if the root volume is encrypted, the trustee-attester binary is invoked to fetch the decryption key from the remote trustee server. The custom initrd also enables networking so that the guest can communicate with the relying party.

- The trustee-attester binary plays the role of attester in this scenario. It sends an attestation request to the trustee server.

- As the trustee server challenges this request, the trustee-attester performs a local attestation of the hardware-provided confidential computing environment.

- Then the trustee-attester sends the results of attestation to the trustee server for verification.

- Upon verifying the evidence and making sure that it’s in compliance with the loaded policy, the trustee server delivers results back to the trustee-attester component in the form of a token. For example, the policy can check for a specific firmware version or a specific CPU model.

- The trustee-attester sends a request for the key. The token is sent along with it to show that the trustee server has already authenticated this request.

- The trustee server processes the request and returns the result. If the right key is received, the root volume is decrypted and system boot continues.

- If no key or an incorrect key is received, system boot fails.
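The sequence above can be modelled end to end in a short simulation. Everything in it is a stand-in chosen for illustration: `ToyTrusteeServer`, `toy_attester_boot`, the HMAC-based token, and the dictionary-shaped evidence are hypothetical and do not reflect the real Trustee protocol or the trustee-attester command line. The sketch only shows the ordering of challenge, evidence, token, and key release, and that boot fails when no key is returned.

```python
import hashlib
import hmac
import secrets


class ToyTrusteeServer:
    """Hypothetical relying party: challenges, verifies evidence, releases a key."""

    def __init__(self, policy: dict, disk_key: bytes):
        self._policy = policy            # values the evidence must match
        self._disk_key = disk_key        # secret released after attestation
        self._token_secret = secrets.token_bytes(32)
        self._nonces = set()

    def _mac(self, nonce: str) -> str:
        return hmac.new(self._token_secret, nonce.encode(), hashlib.sha256).hexdigest()

    def challenge(self) -> str:
        nonce = secrets.token_hex(16)
        self._nonces.add(nonce)
        return nonce

    def attest(self, nonce: str, evidence: dict):
        """Return a token if the challenge is known and the policy is satisfied."""
        if nonce not in self._nonces:
            return None
        self._nonces.discard(nonce)      # each challenge is single use
        if any(evidence.get(k) != v for k, v in self._policy.items()):
            return None
        return self._mac(nonce) + ":" + nonce

    def get_key(self, token):
        """Release the disk key only for a token that this server issued."""
        if not token or ":" not in token:
            return None
        mac, nonce = token.split(":", 1)
        return self._disk_key if hmac.compare_digest(mac, self._mac(nonce)) else None


def toy_attester_boot(server: ToyTrusteeServer, hardware_evidence: dict) -> str:
    """Hypothetical initrd logic: attest, fetch the key, then unlock or fail."""
    nonce = server.challenge()                       # server challenges the request
    token = server.attest(nonce, hardware_evidence)  # evidence sent for verification
    key = server.get_key(token)                      # token accompanies the key request
    return "root volume unlocked, boot continues" if key else "boot failed"


server = ToyTrusteeServer({"tee": "sev-snp", "firmware": "1.55"}, disk_key=b"secret")
print(toy_attester_boot(server, {"tee": "sev-snp", "firmware": "1.55"}))
print(toy_attester_boot(server, {"tee": "none", "firmware": "1.55"}))
```

The first call satisfies the policy and prints the success message; the second reports a different TEE type, receives no token, and therefore no key.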

In summary, we propose the following modifications to successfully run a SEV-SNP-enabled confidential virtual machine with attestation capabilities.

- Host Components: These components include the host kernel that supports the SEV-SNP feature along with additional pieces of the virtualization stack included in the virt-launcher image such as QEMU, libvirt and OVMF.

- Guest Components: The guest environment includes a guest kernel that must be SEV-SNP enabled so that it can effectively use the hardware-provided isolation features. The guest also includes userland programs that enable it to request an attestation of the underlying confidential computing hardware.

- Attestation Components: For attestation, we rely on an already configured service that verifies the attestation report from the hardware and is also responsible for storing and releasing secrets meant for our virtual machine. For our discussion, we rely on the trustee attestation server.

Use cases

We provide the following deployment scenarios for confidential computing in OpenShift Virtualization:

- In multi-tenant environments, the IT department typically owns the infrastructure while another department, such as finance, uses it. Data owned by finance can remain confidential with the help of confidential computing enabled OpenShift Virtualization.

- As a related example, third-party infrastructure involves an external entity owning the computing hardware. With the features provided by confidential computing enabled OpenShift Virtualization, an organization can run its confidential workloads on top of that infrastructure without necessarily having to trust the third-party owner.

Future work

In this article, we presented a brief summary of our proof-of-concept design that explores the idea of exposing confidential computing features to a guest running in an OpenShift Virtualization environment. We also demonstrated an example that highlights the importance of attestation to verify a hardware-based trusted execution enclave utilizing the trustee attestation server.

Work is already ongoing to integrate support for SEV-SNP features into KubeVirt as the first step so that we can avoid the hook sidecar mechanism altogether. In the future, we intend to develop more proofs of concept and articles to explore how we can improve attestation and support more complex scenarios, such as supporting GPUs with confidential computing for AI and edge workloads.

Other related work includes creating configurable virtual machine images with utilities such as the Red Hat Enterprise Linux image builder tool or image mode for RHEL, and evaluating the placement of various software pieces to maintain the security of the overall architecture.