vLLM has rapidly become the go-to solution for efficient inference of large language and multimodal models. In this post, we demonstrate the substantial performance and usability improvements in vLLM 0.8.1 compared to version 0.7.3, focusing on the key architectural changes and multimodal inference optimizations.

Terms

- Highest sustained request rate: The highest request rate that the server can sustain without preemptions (requests being paused and their KV cache freed when GPU memory runs out). Preemptions can cause sudden spikes in TTFT and ITL, so a rate that triggers them is considered unsustainable.

- Time to First Token (TTFT): Measures the time it takes for a language model to produce the first token of its output after receiving a prompt. We measure it on a per-prompt basis. This can be perceived as how quickly the model starts responding once the prompt is received.

- Inter-token latency (ITL): The time between consecutive output tokens. It is closely related to Time per Output Token (TPOT), which averages this gap over a request's whole output, and the two terms are often used interchangeably. This can be perceived as the speed at which the model responds.

- Tensor parallel size (TP): The number of GPUs that the model is sharded over in a tensor parallel fashion.
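To make these definitions concrete, the following Python sketch derives TTFT, ITLs, and a p99 value from the token arrival times of a single request, and picks the highest sustained request rate from a sweep of tested rates. The input formats (seconds since the prompt was sent, and a mapping from request rate to preemption count) and every function name are assumptions for illustration; this is not how benchmark_serving.py computes its numbers.

```python
# Toy computation of the metrics defined above from one request's timeline.
# Assumption: token_times holds each output token's arrival time in seconds,
# measured from when the prompt was sent. Hypothetical helpers, not the code
# in vLLM's benchmark_serving.py.
from __future__ import annotations
from statistics import quantiles


def ttft(token_times: list[float]) -> float:
    # Time to First Token: when the first output token arrived.
    return token_times[0]


def itls(token_times: list[float]) -> list[float]:
    # Inter-token latencies: gaps between consecutive output tokens.
    return [later - earlier for earlier, later in zip(token_times, token_times[1:])]


def p99(values: list[float]) -> float:
    # 99th percentile, interpolating between samples ("inclusive" method).
    return quantiles(values, n=100, method="inclusive")[98]


def highest_sustained_rate(preemptions_by_rate: dict[float, int]) -> float | None:
    # Highest tested request rate (requests/s) that completed with zero preemptions.
    sustained = [rate for rate, count in preemptions_by_rate.items() if count == 0]
    return max(sustained) if sustained else None


timeline = [0.25, 0.30, 0.40, 0.46]  # made-up timestamps for illustration
print(ttft(timeline), [round(x, 2) for x in itls(timeline)])
```

In a real benchmark, ITLs from all requests would be pooled before taking the p99, which is why p99 takes a flat list of values rather than a single timeline.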

Architectural changes and simplifications

Some of the more significant performance improvements include:

- Engine re-architecture

- Simplified scheduler

- Prefix caching on by default

- Enhanced multimodal performance

1. Engine re-architecture (V1 engine)

The vLLM team has been building a major change to the architecture of the core engine. Although the v1 engine has existed in some form since the v0.6.x days, it became the default in v0.8.0. The v1 engine delivers significantly better performance and higher compute utilization by running the scheduler and the EngineCore execution loop in their own process, while other CPU-intensive tasks such as preparing inputs, detokenizing outputs, and server responsibilities run in separate processes, so they never block the execution loop. This CPU overhead was most noticeable with smaller models, where GPU computation accounts for a much smaller share of the execution time than it does for larger, more GPU-intensive models.
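The decoupling described above can be pictured with a small Python sketch: the execution loop only emits token IDs into a queue, while a separate worker turns them into text, so string processing never stalls the loop. Threads stand in here for the separate processes vLLM actually uses (in CPython, true isolation from the GIL requires processes), and the toy vocabulary and function names are hypothetical.

```python
# Toy illustration of the v1 split: the execution loop only emits token IDs,
# and a separate worker detokenizes them, so string work never stalls the loop.
# Assumption: threads stand in for the separate OS processes vLLM uses; in
# CPython, real isolation from the GIL needs processes. Names are hypothetical.
from __future__ import annotations
import queue
import threading

VOCAB = {0: "Hello", 1: ",", 2: " world", 3: "!"}  # hypothetical toy vocabulary
DONE = None  # sentinel that marks the end of the output stream


def engine_core_loop(token_ids: list[int], out_q: queue.Queue) -> None:
    # Stand-in for the GPU execution loop: hand off each step's token ID and
    # move on immediately, without waiting for detokenization.
    for token_id in token_ids:
        out_q.put(token_id)
    out_q.put(DONE)


def detokenizer(in_q: queue.Queue, pieces: list[str]) -> None:
    # CPU-side work, running outside the execution loop.
    while (token_id := in_q.get()) is not DONE:
        pieces.append(VOCAB[token_id])


def run(token_ids: list[int]) -> str:
    q: queue.Queue = queue.Queue()
    pieces: list[str] = []
    worker = threading.Thread(target=detokenizer, args=(q, pieces))
    worker.start()
    engine_core_loop(token_ids, q)
    worker.join()  # wait until every token has been detokenized
    return "".join(pieces)


print(run([0, 1, 2, 3]))  # prints "Hello, world!"
```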

The other key improvement to the engine is in input preparation. In the v0 engine, inputs were prepared only on worker 0 and then broadcast to all other workers. In the v1 engine, request states are cached on each worker and only the diffs are communicated between the workers, which greatly reduces inter-process communication. [1]
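A minimal sketch of the diff-based approach, assuming a request's state is reduced to its token IDs: the worker caches each request once and afterwards receives only what changed in a step. The StepDiff and Worker names and the message layout are hypothetical and much simpler than vLLM's internal data structures.

```python
# Sketch of diff-based state sync between the scheduler and a worker.
# Assumption: a request's state is reduced to its token IDs; StepDiff, Worker,
# and payload_size are hypothetical, not vLLM's internal data structures.
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class StepDiff:
    new_requests: dict[str, list[int]] = field(default_factory=dict)     # full state, sent once
    appended_tokens: dict[str, list[int]] = field(default_factory=dict)  # per-step deltas
    finished: list[str] = field(default_factory=list)                   # requests to drop


class Worker:
    def __init__(self) -> None:
        self.states: dict[str, list[int]] = {}  # request states cached on the worker

    def apply(self, diff: StepDiff) -> None:
        # Rebuild the full state locally from the cached copy plus the diff.
        for req_id, tokens in diff.new_requests.items():
            self.states[req_id] = list(tokens)
        for req_id, tokens in diff.appended_tokens.items():
            self.states[req_id].extend(tokens)
        for req_id in diff.finished:
            self.states.pop(req_id, None)


def payload_size(diff: StepDiff) -> int:
    # Number of token IDs that cross the process boundary for this step.
    return (sum(len(t) for t in diff.new_requests.values())
            + sum(len(t) for t in diff.appended_tokens.values()))


worker = Worker()
prompt = list(range(1000))
first_step = StepDiff(new_requests={"req-1": prompt})
next_step = StepDiff(appended_tokens={"req-1": [42]})
worker.apply(first_step)
worker.apply(next_step)
print(payload_size(first_step), payload_size(next_step))  # prints "1000 1"
```

After the first step, each subsequent step sends one token ID per running request instead of the whole prompt, which is the kind of reduction in inter-process traffic described above.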

2. Simplified scheduler: Deprecation of num-scheduler-steps and enable-chunked-prefill

In the v0 engine, the num-scheduler-steps and enable-chunked-prefill parameters required manual tuning to deliver the best numbers on given hardware, and they could not be used together. The v1 architecture no longer needs these parameters. Chunked prefill and multistep scheduling are always active, and each engine step's token budget is split dynamically between the prefill and decode stages. This delivers the best performance out of the box, with no tuning required from the user. Passing these parameters on the command line forces vLLM to fall back to the v0 engine.
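One way to picture a single per-step token budget shared by prefill and decode is the toy scheduler below. It assumes a simple policy, with decoding requests served first at one token each and the leftover budget handed to prefills, chunked when a prompt does not fit. The Request class and schedule_step function are hypothetical, and vLLM's real scheduler differs in detail; the budget plays roughly the role of vLLM's --max-num-batched-tokens setting.

```python
# Toy model of one token budget per engine step, shared by prefill and decode.
# Assumptions: decoding requests need one token per step and go first; an
# unfinished prefill is split ("chunked") to fit the leftover budget.
# Hypothetical names; this is not vLLM's scheduler code.
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Request:
    req_id: str
    prompt_len: int
    computed: int = 0  # prompt tokens already prefilled

    @property
    def in_prefill(self) -> bool:
        return self.computed < self.prompt_len


def schedule_step(requests: list[Request], token_budget: int) -> dict[str, int]:
    """Return how many tokens each request processes in this step."""
    plan: dict[str, int] = {}
    # Decodes first: one token each, so streaming requests never stall.
    for r in requests:
        if not r.in_prefill and token_budget > 0:
            plan[r.req_id] = 1
            token_budget -= 1
    # Leftover budget goes to prefills, chunked when a prompt doesn't fit.
    for r in requests:
        if r.in_prefill and token_budget > 0:
            chunk = min(r.prompt_len - r.computed, token_budget)
            plan[r.req_id] = chunk
            r.computed += chunk
            token_budget -= chunk
    return plan


reqs = [Request("a", prompt_len=4, computed=4),  # already decoding
        Request("b", prompt_len=4, computed=4),  # already decoding
        Request("c", prompt_len=10)]             # new 10-token prompt
for step in range(3):
    print(step, schedule_step(reqs, token_budget=8))
```

Because the long prompt is split across two steps, the two decoding requests keep producing a token every step instead of waiting behind a 10-token prefill.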

3. Prefix caching on by default

The v1 engine's new zero-overhead prefix caching benefits most users with no additional configuration. Thanks to constant-time eviction and low object-creation overhead, it causes no performance degradation even in scenarios with the lowest cache hit rates, so it is now enabled by default and requires no intervention from the user.
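The following Python sketch shows why prefix caching can be cheap: full token blocks are identified by chained hashes, freed blocks keep their contents until evicted, and an OrderedDict used as the free queue gives constant-time removal and least-recently-used eviction. The block size, the use of Python's built-in hash, the reference counting, and all names are assumptions for illustration, not vLLM's KV cache manager.

```python
# Sketch of block-level prefix caching with O(1) LRU eviction.
# Assumptions: 4-token blocks, Python's hash() for block hashes, and an
# OrderedDict as the free queue. Hypothetical names; not vLLM's implementation.
from __future__ import annotations
from collections import OrderedDict

BLOCK_SIZE = 4


def block_hashes(tokens: list[int]) -> list[int]:
    # Each full block's hash includes its parent's hash, so equal hashes imply
    # equal prefixes (barring collisions). A trailing partial block is not cached.
    hashes: list[int] = []
    parent = None
    for start in range(0, len(tokens) - BLOCK_SIZE + 1, BLOCK_SIZE):
        parent = hash((parent, tuple(tokens[start:start + BLOCK_SIZE])))
        hashes.append(parent)
    return hashes


class PrefixCache:
    def __init__(self, num_blocks: int) -> None:
        self.free = OrderedDict.fromkeys(range(num_blocks))  # LRU order, oldest first
        self.by_hash: dict[int, int] = {}  # block hash -> block id holding that KV
        self.hash_of: dict[int, int] = {}  # block id -> block hash
        self.refs: dict[int, int] = {}     # block id -> number of requests using it

    def allocate(self, tokens: list[int]) -> tuple[list[int], int]:
        """Return (block ids, number of leading blocks served from the cache)."""
        blocks: list[int] = []
        hits = 0
        for h in block_hashes(tokens):
            # Only a contiguous run of hits from the start counts as a prefix hit.
            block = self.by_hash.get(h) if hits == len(blocks) else None
            if block is not None:
                self.free.pop(block, None)  # O(1): reuse, no recomputation needed
                hits += 1
            else:
                # O(1): evict the least recently freed block (KeyError if none left).
                block, _ = self.free.popitem(last=False)
                old = self.hash_of.pop(block, None)
                if old is not None and self.by_hash.get(old) == block:
                    del self.by_hash[old]  # its cached contents are now gone
                self.by_hash[h] = block
                self.hash_of[block] = h
            self.refs[block] = self.refs.get(block, 0) + 1
            blocks.append(block)
        return blocks, hits

    def release(self, blocks: list[int]) -> None:
        # Freed blocks keep their hash and contents until evicted, so a later
        # request with the same prefix can still hit them.
        for block in blocks:
            self.refs[block] -= 1
            if self.refs[block] == 0:
                self.free[block] = None  # append at the most recently used end


demo = PrefixCache(num_blocks=8)
system_prompt = list(range(8))  # two full blocks shared by both requests
first, _ = demo.allocate(system_prompt + [100, 101, 102, 103])
demo.release(first)
second, hits = demo.allocate(system_prompt + [200, 201, 202, 203])
print(hits)  # prints 2
```

When the hit rate is low, every lookup is a dictionary miss followed by a constant-time pop from the free queue, which is why a design like this adds little overhead.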

4. Enhanced multimodal performance

The v0.8.0 and v0.8.1 releases also introduce substantial optimizations tailored specifically to multimodal models. The v1 engine moves input preprocessing into a separate process, preventing it from blocking the GPU worker. The v1 engine also adds caching at multiple levels:

- Preprocessing cache: reuses preprocessed inputs across requests.

- Prefix caching: now also enabled for multimodal inputs.

- Encoder cache: temporarily stores vision embeddings so that the input can be chunked during prefill without recomputing the embeddings for each chunk.
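As an illustration of the preprocessing cache, here is a Python sketch that keys preprocessed outputs by a SHA-256 hash of the raw image bytes, so a repeated image skips the expensive step. The fake_preprocess function, the LRU capacity, and the class name are hypothetical stand-ins; vLLM's actual cache keys and eviction policy may differ.

```python
# Sketch of a preprocessing cache keyed by a content hash of the raw input.
# Assumptions: fake_preprocess is a hypothetical stand-in for an expensive
# image processor, and a bounded LRU dict is the cache. Not vLLM's code.
from __future__ import annotations
import hashlib
from collections import OrderedDict
from typing import Callable


class PreprocessCache:
    def __init__(self, preprocess: Callable[[bytes], list[float]], capacity: int = 128) -> None:
        self.preprocess = preprocess
        self.capacity = capacity
        self.entries: OrderedDict[str, list[float]] = OrderedDict()
        self.misses = 0

    def get(self, image_bytes: bytes) -> list[float]:
        # Identical images map to the same key, even across different requests.
        key = hashlib.sha256(image_bytes).hexdigest()
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        self.misses += 1
        value = self.preprocess(image_bytes)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # drop the least recently used entry
        return value


def fake_preprocess(image_bytes: bytes) -> list[float]:
    # Hypothetical "preprocessing": normalize byte values to [0, 1].
    return [b / 255 for b in image_bytes]


demo = PreprocessCache(fake_preprocess, capacity=2)
image = bytes([0, 128, 255])
demo.get(image)
demo.get(image)     # same bytes: served from the cache
print(demo.misses)  # prints 1
```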

Multimodal model performance benchmarks

We serve the Pixtral-12B model with the following command:

```bash
vllm serve \
  mistralai/Pixtral-12B-2409 \
  --tensor-parallel-size 1 \
  --port 8000 \
  --tokenizer_mode mistral \
  --no-enable-prefix-caching \
  --limit_mm_per_prompt 'image=4'
```

The limit_mm_per_prompt parameter controls how many multimodal items (here, images) may be included in a single prompt. While it is tunable, it should usually be set to the value recommended in the model card.
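With the server above running, a client can send several images in one prompt through vLLM's OpenAI-compatible chat completions endpoint. The Python sketch below only builds the HTTP request with the standard library and checks the image count against the configured limit before sending; the host, port, image URLs, max_tokens value, and helper name are assumptions for illustration.

```python
# Build (but do not send) a chat request for the Pixtral server started above.
# Assumptions: the server listens on localhost:8000 and exposes vLLM's
# OpenAI-compatible /v1/chat/completions route; image URLs are placeholders.
from __future__ import annotations
import json
import urllib.request

IMAGE_LIMIT = 4  # mirrors --limit_mm_per_prompt 'image=4'


def build_request(prompt: str, image_urls: list[str],
                  base_url: str = "http://localhost:8000") -> urllib.request.Request:
    if len(image_urls) > IMAGE_LIMIT:
        # The server is expected to reject such a prompt, so fail early.
        raise ValueError(f"at most {IMAGE_LIMIT} images per prompt, got {len(image_urls)}")
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": u}} for u in image_urls]
    body = {
        "model": "mistralai/Pixtral-12B-2409",
        "messages": [{"role": "user", "content": content}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# To send it against a running server:
# with urllib.request.urlopen(build_request("Describe this.", [url])) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```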

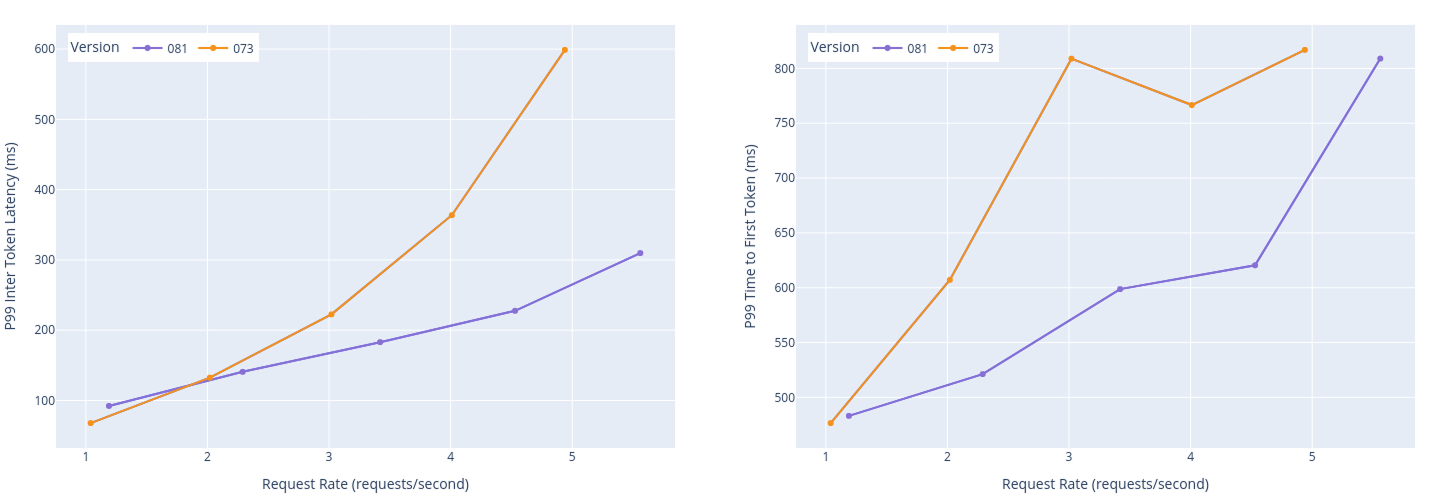

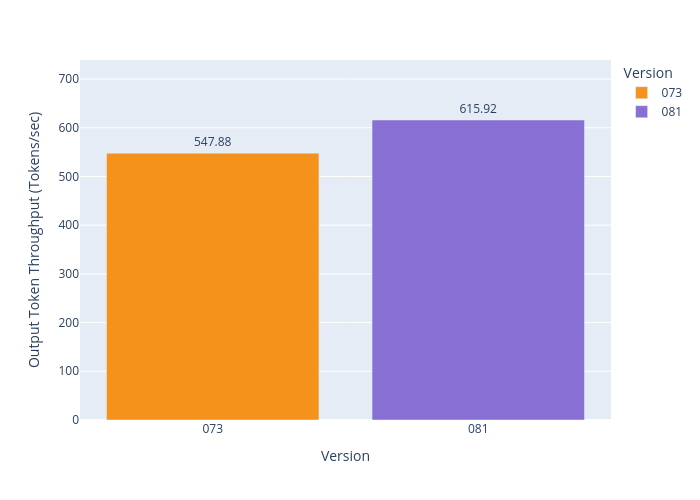

Pixtral-12B model performance (tensor parallel = 1)

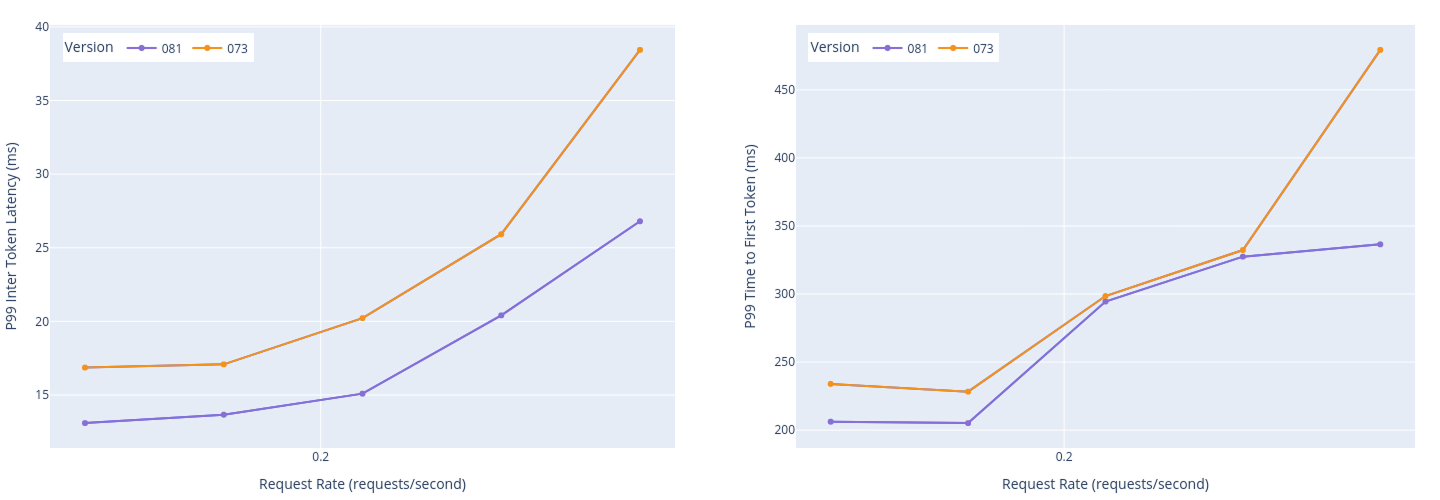

Pixtral-12B was tested on the lmarena vision dataset (lmarena-ai/vision-arena-bench-v0.1). The results are shown in Figures 1 and 2.

Model: mistralai/Pixtral-12B-2409.


We see consistently lower p99 ITLs and TTFTs, which translate into a much better user experience on the client side. The improvement in TTFT can be attributed primarily to the v1 architecture running the prefill and decode stages in the same step. The higher throughput comes from a combination of the v1 engine architecture and the improvements in multimodal input processing. As load increases, the v1 engine's performance also degrades gradually, rather than with the sudden spikes seen in the v0 engine.

Text model performance benchmarks

We benchmarked several popular text-only models to highlight their improved performance across common input/output length scenarios. The tests use random token data with the input/output lengths listed below to simulate different use cases.

For text models, we serve vLLM with the command:

```bash
vllm serve \
  <MODEL_NAME> \
  --tensor-parallel-size <TENSOR_PARALLEL_SIZE> \
  --port 8000 \
  --no-enable-prefix-caching \
  --max-model-len <1.1 * (INPUT_LEN + OUTPUT_LEN)>
```

The max-model-len parameter does not affect performance, but setting it to a value not much higher than the maximum expected sequence length (input plus output tokens) can improve serving stability. vLLM will only launch if at least one sequence of this length fits completely in device (in this case, GPU) memory.
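The max-model-len placeholder is simple arithmetic. The Python sketch below computes it for the three input/output presets used in this post, rounding up with integer math, and prints the resulting serve command; the helper names are hypothetical, and the flags are the ones in the command above.

```python
# Compute --max-model-len as 1.1 x (INPUT_LEN + OUTPUT_LEN), rounded up, and
# assemble the serve command for each preset. Helper names are hypothetical.
from __future__ import annotations
import shlex


def max_model_len(input_len: int, output_len: int) -> int:
    # Integer ceiling of 11/10 * total, avoiding floating-point rounding surprises.
    return (11 * (input_len + output_len) + 9) // 10


def serve_command(model: str, tp: int, input_len: int, output_len: int) -> list[str]:
    return [
        "vllm", "serve", model,
        "--tensor-parallel-size", str(tp),
        "--port", "8000",
        "--no-enable-prefix-caching",
        "--max-model-len", str(max_model_len(input_len, output_len)),
    ]


for inp, out in [(500, 2000), (2048, 128), (6000, 4000)]:
    cmd = serve_command("meta-llama/Meta-Llama-3.1-8B-Instruct", 1, inp, out)
    print(shlex.join(cmd))
```

For the three presets this yields 2750, 2394, and 11000 tokens respectively.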

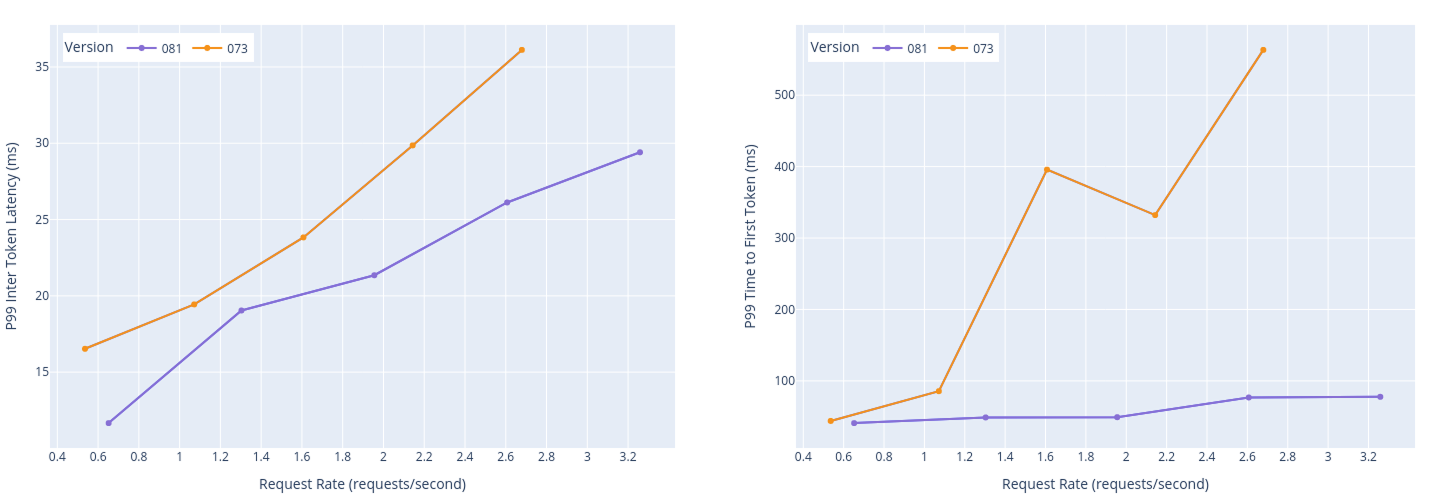

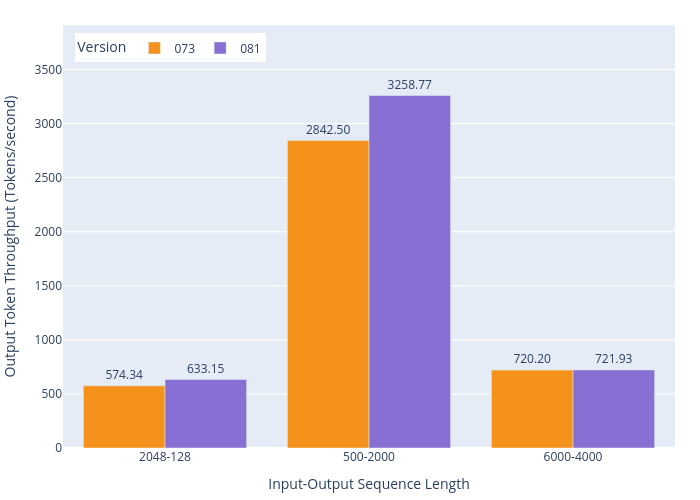

Meta Llama 3.1 8B (tensor parallel = 1)

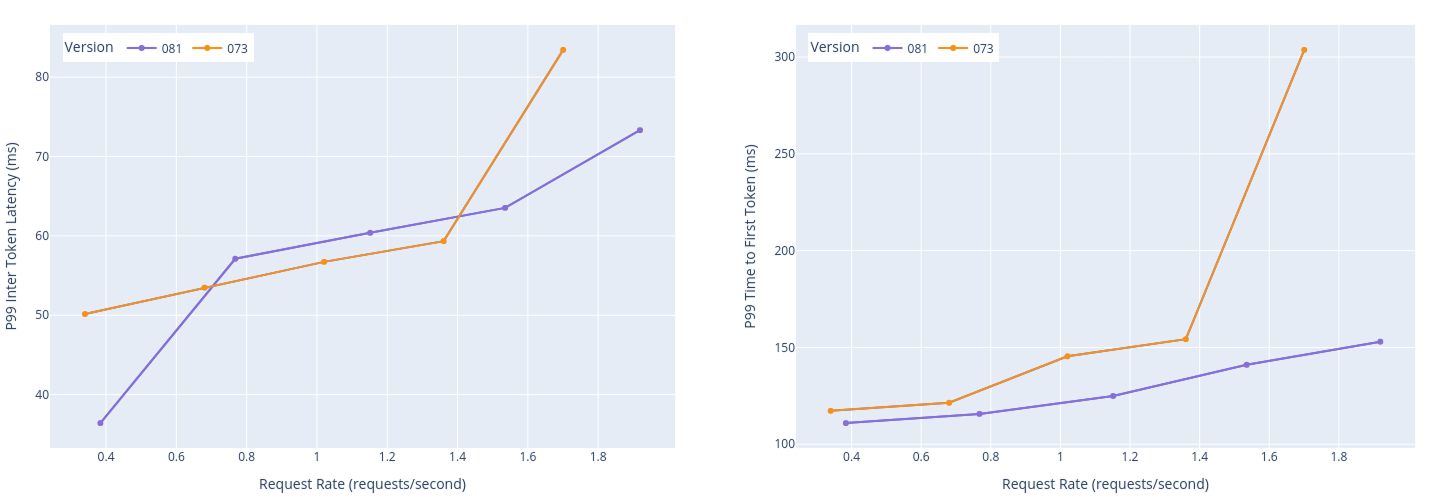

Figures 3, 4, 5, and 6 show the performance data for Meta Llama 3.1 8B.

Input/Output: (500, 2000)

Model: meta-llama/Meta-Llama-3.1-8B-Instruct @ TP1. Preset: 500 In 2000 Out.

TTFT stays consistently lower because no request has to wait too long for its prefill.

Input/Output: (2048, 128)

Model: meta-llama/Meta-Llama-3.1-8B-Instruct @ TP1. Preset: 2048 In 128 Out.

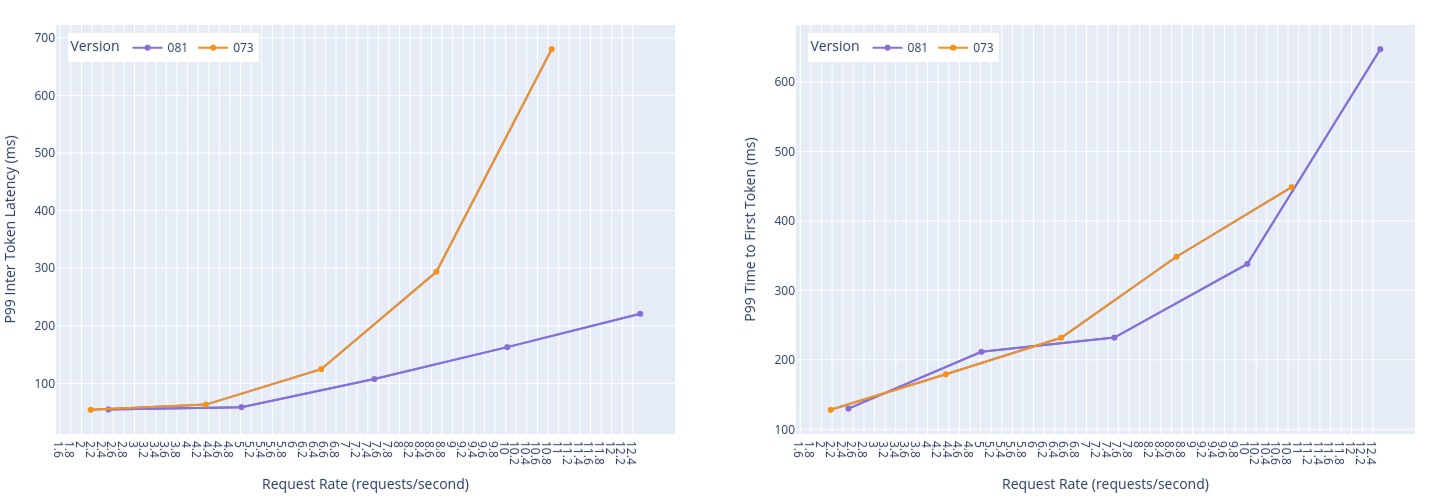

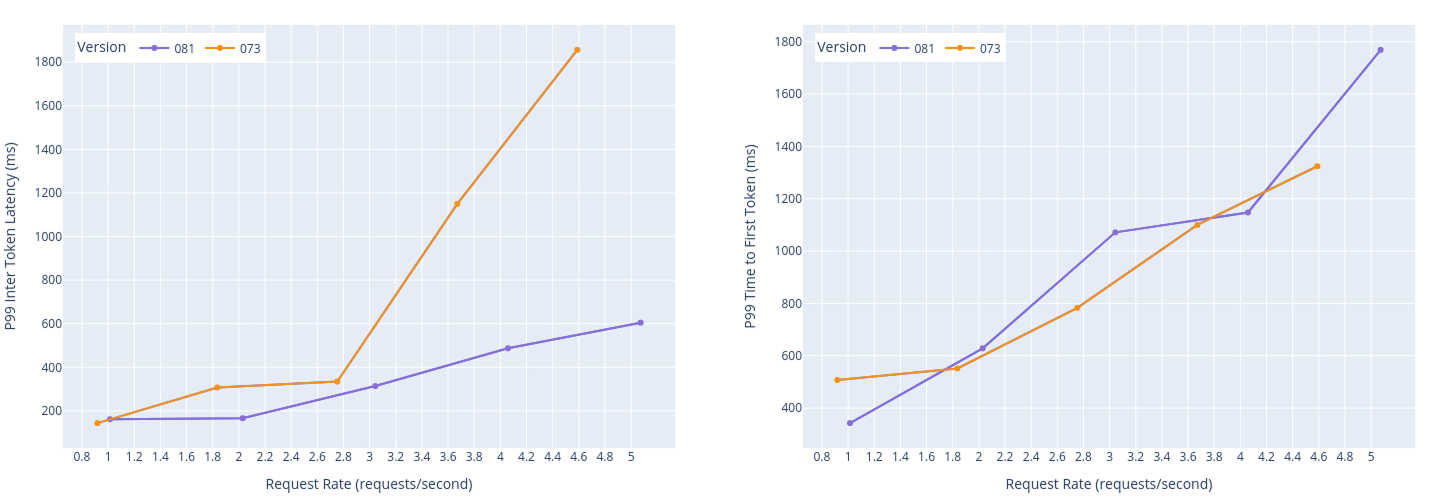

Input/Output: (6000, 4000)

Model: meta-llama/Meta-Llama-3.1-8B-Instruct @ TP1. Preset: 6000 In 4000 Out.

Model: meta-llama/Meta-Llama-3.1-8B-Instruct @ TP1.

Text models also see a considerable performance boost over the v0 engine, with a 24% throughput improvement in the generation-heavy workload (500 input / 2000 output tokens).

Meta Llama 3.3 70B (tensor parallel = 4)

Figures 7, 8, 9, and 10 show the performance data for Meta Llama 3.3 70B.

Input/Output: (500, 2000)

Model: meta-llama/Meta-Llama-3.1-70B-Instruct @ TP4. Preset: 500 In 2000 Out.

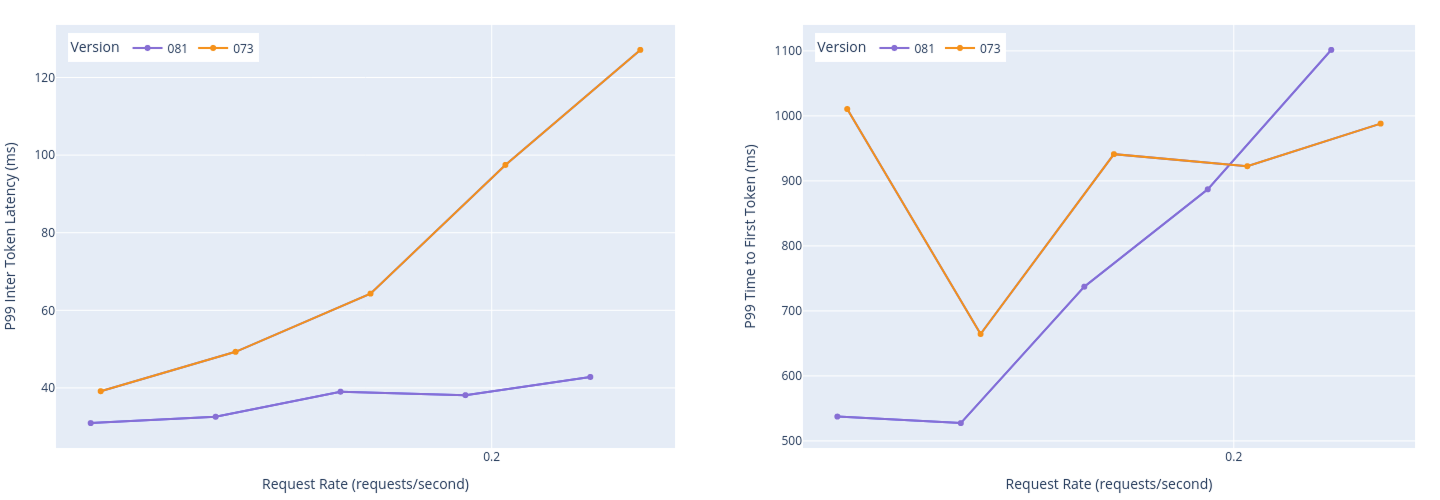

Input/Output: (2048, 128)

Model: meta-llama/Meta-Llama-3.1-70B-Instruct @ TP4. Preset: 2048 In 128 Out.

Input/Output: (6000, 4000)

Model: meta-llama/Meta-Llama-3.1-70B-Instruct @ TP4. Preset: 6000 In 4000 Out.

The user experience improves most in generation-heavy workloads, such as story generation (500 input / 2000 output tokens), compared with prefill-dominated tasks such as question answering (2048 input / 128 output tokens).

Notably, scenarios like document summarization (6000 input / 4000 output) also benefit, with substantial improvements in P99 inter-token latency, resulting in a smoother and more consistent interaction. Additionally, under high load, the v1 engine degrades in a much more predictable and controlled manner, avoiding the abrupt latency spikes often observed with the v0 engine in v0.7.3.

Model: meta-llama/Meta-Llama-3.1-70B-Instruct @ TP4.

Benchmark settings

- We disabled prefix caching to show a reasonable lower bound on the improvement, using a near-worst-case dataset with no shared prefixes to hit.

  - Note: production deployments should keep prefix caching enabled for the best performance.

- The benchmarks were run with vLLM's built-in benchmark script (benchmark_serving.py).

- All benchmarks were run on NVIDIA H100 GPUs (at tensor parallel size 1 or 4, depending on the model size).

- Upstream images on Docker Hub (v0.8.1) were used for this testing.

- Testing platform:

  - Red Hat OpenShift Container Platform 4.17.9

  - 4 x H100 SXM5
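For readers who want to reproduce a similar setup, this Python sketch assembles benchmark_serving.py invocations for the random-data presets and a sweep of request rates. The script path, prompt count, request rates, and preset labels are placeholders, and the flag names are assumed to match benchmark_serving.py around v0.8; confirm them with the script's --help for your vLLM version. Finding the highest sustained request rate additionally requires checking the server for preemptions at each rate.

```python
# Assemble benchmark_serving.py invocations for the random-data presets.
# Assumptions: script path, prompt count, request rates, and preset labels are
# placeholders; flag names are assumed to match benchmark_serving.py around
# v0.8 (confirm with --help). Preemptions must be checked on the server side.
from __future__ import annotations
import shlex

# Hypothetical labels for the (input_len, output_len) presets in this post.
PRESETS = {"story": (500, 2000), "qa": (2048, 128), "summarization": (6000, 4000)}


def bench_command(model: str, input_len: int, output_len: int,
                  request_rate: float, num_prompts: int = 500) -> list[str]:
    return [
        "python", "benchmarks/benchmark_serving.py",
        "--backend", "vllm",
        "--model", model,
        "--port", "8000",
        "--dataset-name", "random",           # random token data, as in the text
        "--random-input-len", str(input_len),
        "--random-output-len", str(output_len),
        "--num-prompts", str(num_prompts),
        "--request-rate", str(request_rate),  # requests per second
        "--percentile-metrics", "ttft,itl",
        "--metric-percentiles", "99",         # report p99 values
    ]


# Sweep increasing request rates for one preset; the highest rate that runs
# without server-side preemptions is the highest sustained request rate.
input_len, output_len = PRESETS["story"]
for rate in (1, 2, 4, 8):
    cmd = bench_command("meta-llama/Meta-Llama-3.1-8B-Instruct", input_len, output_len, rate)
    print(shlex.join(cmd))
```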

Conclusion and next steps

vLLM 0.8.1 on the v1 engine offers up to a 24% improvement in throughput over vLLM 0.7.3 on the v0 engine for generation-heavy workloads, delivering better server responsiveness without any performance degradation from the switch to the v1 engine. Although the v1 engine is still under active development with frequent updates, many supported models and configurations already see substantial benefits, and support for more is being added rapidly. This release also simplifies deployment by reducing the performance tuning required from users, introduces significant performance enhancements, and optimizes support for multimodal models, with continued improvements expected in upcoming minor versions.

Explore the latest version and share your feedback on the vLLM GitHub repository!