Serverless: Code, not infrastructure

Serverless is a cloud computing model that lets you build and run applications without having to manage the underlying infrastructure.

What is serverless computing?

In simple terms, serverless takes care of scaling, resource allocation, and other operational tasks, so you can focus on your code. The open source Knative project bridges the gap between the power of Kubernetes and the simplicity of serverless computing and event-driven applications. On Knative, you can deploy any modern application workload, such as monolithic applications, microservices, or even tiny functions.

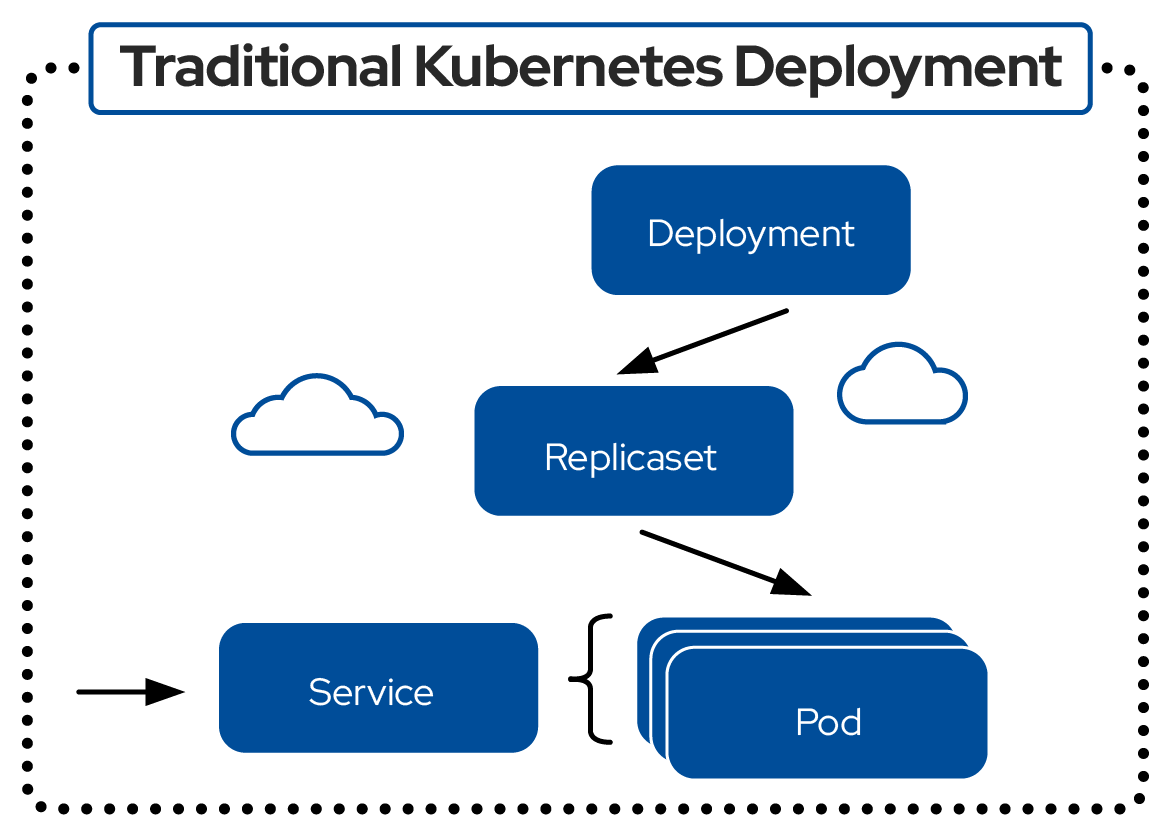

Traditional Kubernetes deployment

In a traditional Kubernetes deployment, a container image is hosted in a registry, and a YAML file describes the Deployment. When that YAML is applied to the cluster, the Deployment creates a ReplicaSet, which in turn creates the specified number of pods. A Service is then created whose selector matches the labels of those pods.
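To make those moving parts concrete, here is a minimal sketch that builds the two objects as Python dictionaries and prints them as JSON, which `kubectl apply` accepts as well as YAML. The app name `hello`, the image `quay.io/example/hello:1.0`, the replica count, and the ports are hypothetical values chosen for illustration. Note how the same labels must appear in three places (the Deployment selector, the pod template, and the Service selector) for everything to connect.

```python
import json


def deployment_and_service(name: str, image: str, replicas: int = 3, port: int = 8080) -> list:
    """Return a Deployment and a Service whose selector matches the pods' labels."""
    # The same labels tie the Deployment, its pods, and the Service together.
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # the ReplicaSet keeps this many pods running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # Port 80 on the Service forwards to the container port on matching pods.
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    return [deployment, service]


if __name__ == "__main__":
    # A v1 List wraps both objects so they can be applied as one document.
    # The name and image below are hypothetical placeholders.
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": deployment_and_service("hello", "quay.io/example/hello:1.0"),
    }
    print(json.dumps(manifest, indent=2))
```

Assuming the script is saved as `manifests.py`, you could apply the output with `python manifests.py | kubectl apply -f -`.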

Additional steps might be required in some situations, such as creating a route when the Service can't be exposed through a cloud load balancer. All in all, a traditional Kubernetes deployment involves quite a few steps, long YAML files, and many moving parts.
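As an example of one such extra step, the sketch below builds a route object, assuming the cluster is Red Hat OpenShift, where the `route.openshift.io/v1` Route resource exposes a Service outside the cluster (on plain Kubernetes an Ingress plays a similar role). The Service name `hello` and port `8080` are hypothetical and simply match a Service like the one described above.

```python
import json


def openshift_route(service_name: str, target_port: int = 8080) -> dict:
    """Return an OpenShift Route that exposes an existing Service outside the cluster."""
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": service_name},
        "spec": {
            # The Service that receives the external traffic.
            "to": {"kind": "Service", "name": service_name},
            # The port on the pods behind that Service.
            "port": {"targetPort": target_port},
        },
    }


if __name__ == "__main__":
    # "hello" is a hypothetical Service name used for illustration.
    print(json.dumps(openshift_route("hello"), indent=2))
```

This is yet another object to write, apply, and keep in sync with the Deployment and Service.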

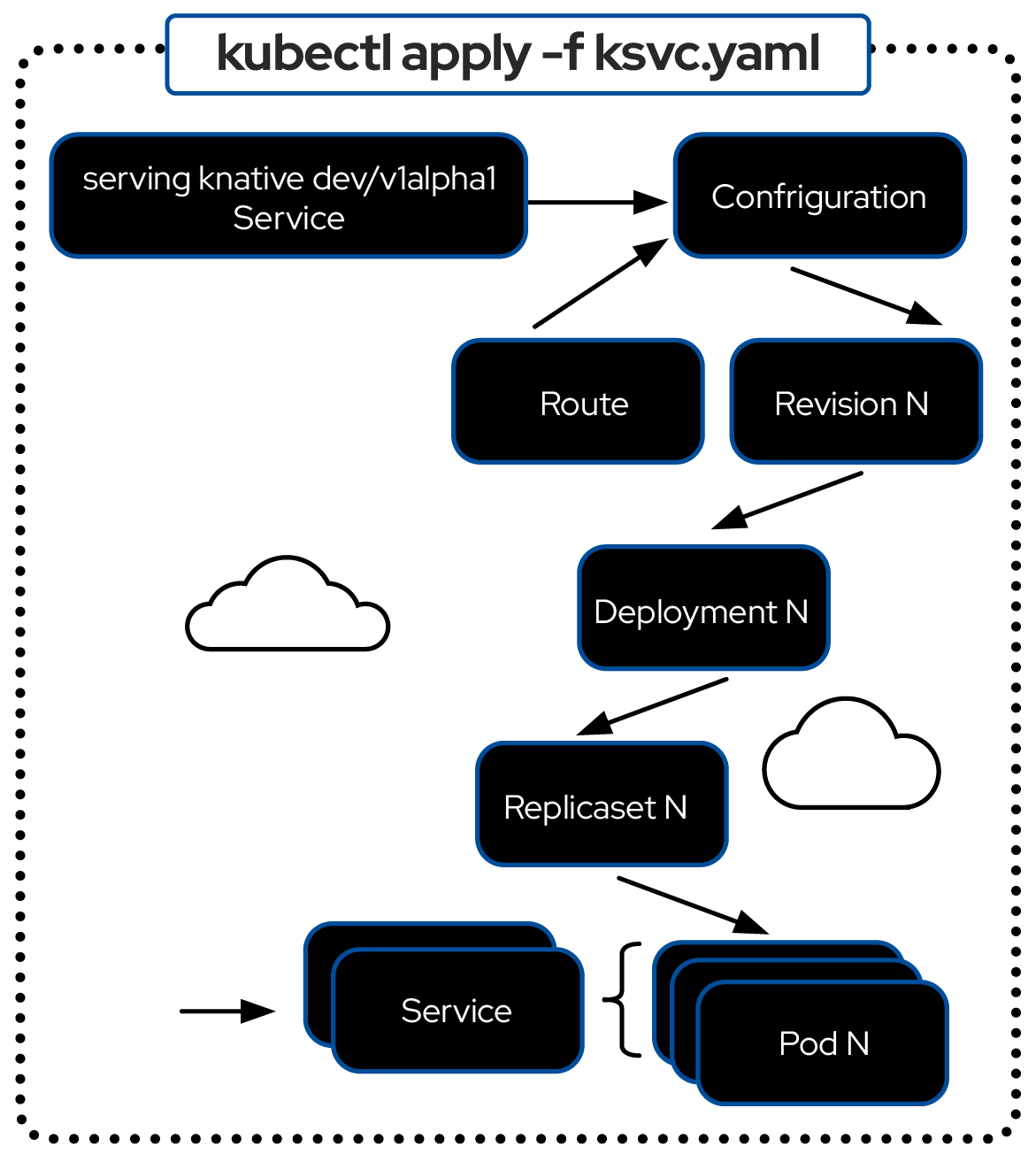

Knative deployment

With serverless, you don’t have to manage infrastructure yourself. You provide a container image, run it on a cluster, and the platform takes care of the rest.

With Knative, deploying is even simpler: you write a small resource file, called a Knative Service, that tells the system “I want to run this image.” Once you apply it to your cluster, Knative Serving automatically creates the resources it needs: a Configuration, a Route, and a Revision, which is backed by a Kubernetes Deployment. Every change to the Service's template produces a new, immutable Revision, so rolling back to a previous version is a matter of routing traffic to an earlier Revision.
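For comparison, here is a minimal sketch of a Knative Service (`serving.knative.dev/v1`) built the same way, as a Python dictionary printed as JSON. A single object replaces the Deployment, Service, and route above. The optional `pinned_revision` parameter is a hypothetical helper showing a rollback: it sends all traffic to an earlier Revision. The revision name `hello-00001` assumes Knative's default naming scheme; check the actual names with `kubectl get revisions`.

```python
import json
from typing import Optional


def knative_service(name: str, image: str, pinned_revision: Optional[str] = None) -> dict:
    """Return a Knative Service that runs a single container image."""
    service = {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Each change to this template produces a new, immutable Revision.
            "template": {"spec": {"containers": [{"image": image}]}},
        },
    }
    if pinned_revision is not None:
        # Roll back by routing 100% of traffic to an earlier Revision.
        service["spec"]["traffic"] = [{"revisionName": pinned_revision, "percent": 100}]
    return service


if __name__ == "__main__":
    # The name and image are hypothetical placeholders.
    print(json.dumps(knative_service("hello", "quay.io/example/hello:1.0"), indent=2))
```

Applied with `kubectl apply -f -`, this one object is enough for Knative Serving to create the Configuration, Route, and Revision on your behalf.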
