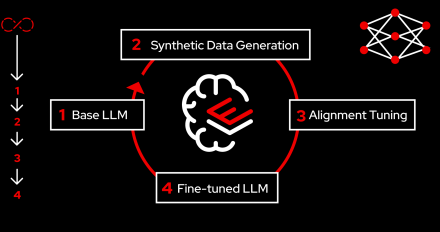

The rapid advancement of generative artificial intelligence (gen AI) has unlocked incredible opportunities. However, customizing and iterating on large language models (LLMs) remains a complex and resource-intensive process. Training and enhancing a model often involves creating multiple forks of it, which can fragment development and hinder collaboration.