Red Hat publishes Docker Hub images for Granite 7B LLMs and InstructLab

OCI images are now available on the Docker Hub and Quay.io registries, making it even easier to use the Granite 7B large language model (LLM) and InstructLab.

Across more than 500,000 evaluations, quantized LLMs achieve near-full accuracy with minimal trade-offs, providing efficient, high-performance options for AI model deployment.

Announcing the General Availability of Red Hat Enterprise Linux AI (RHEL AI)

Machete, Neural Magic’s optimized kernel for NVIDIA Hopper GPUs, achieves 4x memory savings and faster LLM inference with mixed-input quantization in vLLM.

Get started with AMD GPUs for model serving in OpenShift AI. This tutorial guides you through the steps to configure the AMD Instinct MI300X GPU with KServe.

Learn how developers can use prompt engineering for a large language model (LLM) to increase their productivity.

Learn how to deploy a coding copilot model using OpenShift AI. You'll also discover how tools like KServe and Caikit simplify machine learning model management.

This hands-on tutorial introduces some of the basics of large language models (LLMs) in the Developer Sandbox for Red Hat OpenShift.

Explore AMD Instinct MI300X accelerators and learn how to run AI/ML workloads using ROCm, AMD’s open source software stack for GPU programming, on OpenShift AI.

Learn how to apply supervised fine-tuning to Llama 3.1 models using Ray on OpenShift AI in this step-by-step guide.

Understand how retrieval-augmented generation (RAG) works and how users can

Experimenting with a Large Language Model-powered Chatbot with Node.js

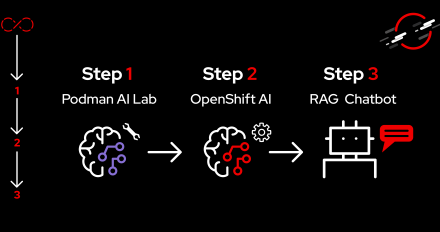

Learn how to rapidly prototype AI applications from your local environment with

Learn how to generate word embeddings and perform RAG tasks using a Sentence Transformer model deployed on Caikit Standalone Serving Runtime using OpenShift AI.

In today's fast-paced IT landscape, the need for efficient and effective

Add knowledge to large language models with InstructLab and streamline MLOps using KitOps for efficient model improvement and deployment.

Learn how a platform engineering team streamlined the deployment of edge kiosks by leveraging key automation components of Red Hat Ansible Automation Platform.

With GPU acceleration for Podman AI Lab, developers can run model inference faster and build AI-enabled applications with quicker response times.

This blog post summarizes an experiment to extract structured data from

Use the Stable Diffusion model to create images with Red Hat OpenShift AI running on a Red Hat OpenShift Service on AWS cluster with NVIDIA GPUs enabled.

Get an overview of explainable and responsible AI and discover how the open source TrustyAI tool helps power fair, transparent machine learning.

This short guide explains how to choose a GPU framework and library (e.g., CUDA vs. OpenCL), as well as how to design accurate benchmarks.

Learn how to write a GPU-accelerated quicksort procedure using the prefix sum (scan) algorithm, and explore other GPU algorithms such as reduce and Game of Life.

This article explores the installation, usage and benefits of Red Hat OpenShift Lightspeed on Red Hat OpenShift Local.

Red Hat OpenShift AI is an artificial intelligence platform that runs on top of Red Hat OpenShift and provides tools across the AI/ML lifecycle.