Happy birthday, Repo! A look back on our mascot’s first year

Celebrate our mascot Repo's first birthday with us as we look back on the events that shaped Red Hat Developer and the open source community from the past year.

Learn how to deploy multimodal AI models on edge devices using the RamaLama CLI, from pulling your first vision language model (VLM) to serving it via an API.

Discover SDG Hub, an open framework for building, composing, and scaling synthetic data pipelines for large language models.

Learn about the 5 common stages of the inference workflow, from initial setup to edge deployment, and how AI accelerator needs shift throughout the process.

Learn how to implement spec coding, a structured approach to AI-assisted development that combines human expertise with AI efficiency.

Get a comprehensive guide to profiling a vLLM inference server on a Red Hat Enterprise Linux system equipped with NVIDIA GPUs.

This learning path explores running AI models, specifically large language

Move larger models from code to production faster with an enterprise-grade

Learn how to scale machine learning operations (MLOps) with an assembly line approach using configuration-driven pipelines, versioned artifacts, and GitOps.

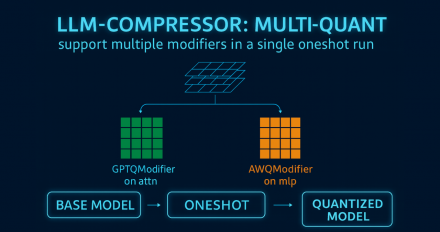

The LLM Compressor 0.8.0 release introduces quantization workflow enhancements, extended support for Qwen3 models, and improved accuracy recovery.

Learn how llm-d's KV cache aware routing reduces latency and improves throughput by directing requests to pods that already hold relevant context in GPU memory.

Learn how to deploy LLMs like Qwen3-Coder-30B-A3B-Instruct on less infrastructure using Red Hat AI Inference Server's LLM Compressor and OpenShift AI.

DeepSeek-V3.2-Exp brings major long-context efficiency gains and is supported in vLLM on Day 0, with easy deployment on the latest hardware and Red Hat AI platforms.

Implement cost-effective LLM serving on OpenShift AI with this step-by-step guide to configuring KServe's Serverless mode for vLLM autoscaling.

Learn how to deploy Model Context Protocol (MCP) servers on OpenShift using ToolHive, a Kubernetes-native utility that simplifies MCP server management.

See how vLLM’s throughput and latency compare to llama.cpp's and discover which tool is right for your specific deployment needs on enterprise-grade hardware.

Integrate the Kubernetes MCP server with OpenShift and VS Code to give AI assistants a safe, intelligent way to interact with your clusters.

Deploy DialoGPT-small on OpenShift AI for internal model testing, with step-by-step instructions for setting up runtime, model storage, and inference services.

Walk through how to set up KServe autoscaling by leveraging the power of vLLM, KEDA, and the custom metrics autoscaler operator in Open Data Hub.

AI agents are where things get exciting! In this episode of The Llama Stack Tutorial, we'll dive into Agentic AI with Llama Stack, showing you how to give your LLM real-world capabilities like searching the web, pulling in data, and connecting to external APIs. You'll learn how agents are built with models, instructions, tools, and safety shields, and see live demos of using the Agentic API, running local models, and extending functionality with Model Context Protocol (MCP) servers. Join Senior Developer Advocate Cedric Clyburn as we learn all things Llama Stack! Next episode? Guardrails, evals, and more!

Learn how to install Red Hat OpenShift AI to enable an on-premises inference service for Ansible Lightspeed in this step-by-step guide.

Discover the benefits of using Rust for building concurrent, scalable agentic systems, and learn how it addresses the GIL bottleneck in Python.

Learn how to deploy Red Hat AI Inference Server using vLLM and evaluate its performance with GuideLLM in a fully disconnected Red Hat OpenShift cluster.

Learn how to deploy the Qwen3-Next model on vLLM using Red Hat AI. This guide covers the steps for serving the model with Podman.

Enhance your Python AI applications with distributed tracing. Discover how to use Jaeger and OpenTelemetry for insights into Llama Stack interactions.