Overview: Deploy OpenShift Data Foundation across availability zones using Multus

Deploying Red Hat OpenShift backed by Red Hat OpenShift Data Foundation (ODF) across availability zones using the Multus container network interface (CNI) can improve performance, availability, and security. A successful implementation depends on careful network planning before deployment. This approach allows organizations to build highly available and performant solutions that can withstand infrastructure failures and meet demanding application requirements.

Prerequisites:

- Red Hat OpenShift Data Foundation operator (included in ODF install)

- Kubernetes NMState operator

- Red Hat Local storage operator

- DHCP server

In this learning path, you will:

- Implement a node network configuration policy (NNCP) to configure VLAN interfaces.

- Apply multiple network attachment definitions (NAD) to a cluster.

- Learn how the Multus validation tool functions to verify connectivity.

- Add a StorageCluster configuration to a test cluster.

Separate storage traffic

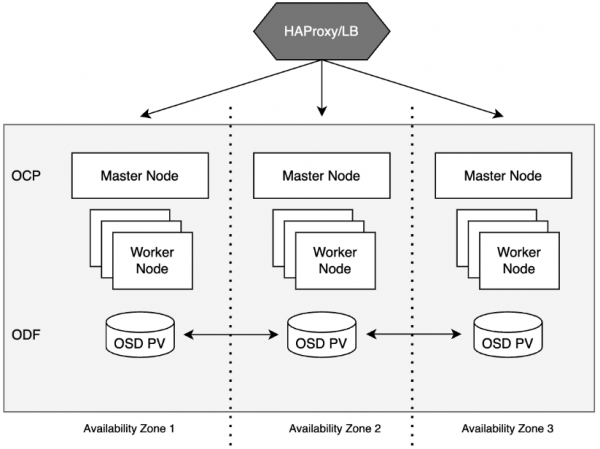

Deploying Red Hat OpenShift across availability zones provides high availability, fault tolerance, and improved performance. With this deployment architecture, applications keep running through hardware failures or data center outages, which remain transparent to them (Figure 1).

A multi-availability zone architecture can easily be accomplished with Red Hat OpenShift, but storage requirements make it more complicated. Red Hat OpenShift Data Foundation (ODF) v4.15 introduced, as a Dev preview, the ability to deploy a Ceph cluster across availability zones using topology labels. Setting the topology.kubernetes.io/region and topology.kubernetes.io/zone labels on worker nodes allows ODF to provision storage in a topology-aware way.
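As a rough illustration of the labeling step, the following Python sketch turns a node-to-zone inventory into `oc label node` commands and counts the workers per zone. The node names and zone names are placeholders, and the helper functions are hypothetical; only the label key comes from the text above.

```python
from collections import Counter

ZONE_LABEL = "topology.kubernetes.io/zone"


def label_commands(node_zones):
    """Return one `oc label node` command per worker, sorted by node name."""
    return [
        f"oc label node {node} {ZONE_LABEL}={zone}"
        for node, zone in sorted(node_zones.items())
    ]


def nodes_per_zone(node_zones):
    """Count how many workers land in each zone, to spot an uneven spread."""
    return Counter(node_zones.values())


# Hypothetical inventory: node and zone names are placeholders.
node_zones = {
    "worker-0": "zone-a",
    "worker-1": "zone-b",
    "worker-2": "zone-c",
}

for command in label_commands(node_zones):
    print(command)
print(dict(nodes_per_zone(node_zones)))
```

Generating the commands from a single inventory keeps the zone assignment reviewable in one place before any label is applied to the cluster.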

For more information on using topology labels with ODF, see the Knowledge Centered Service (KCS) article, OpenShift Data Foundation internal topology aware failure domain configuration. The drawback to this deployment is that ODF, by default, is limited to using the pod network.

In ODF deployments, separating the storage traffic onto dedicated network interfaces can substantially improve performance. For instance, the high-bandwidth, low-latency data replication traffic between ODF nodes can be isolated on a dedicated network, preventing it from contending with application/pod traffic. This isolation minimizes network congestion and ensures consistent data replication performance, which is crucial for maintaining data consistency and resiliency.

Using isolated networks for the storage traffic also enhances security. By assigning dedicated network interfaces and using virtual local area networks (VLANs), network segmentation can be enforced at a granular level, limiting the scope and impact of a security breach. Isolating the data also reduces the risk of unauthorized access.

Network isolation can be achieved using the Multus Container Network Interface (CNI), a plug-in for Kubernetes. For ODF, the public (client interaction and pods) and cluster (replication and heartbeat) storage traffic can be restricted to specific reserved interfaces on the worker nodes and to specific subnets/VLANs. This is configured using Node Network Configuration Policies (NNCP) and Network Attachment Definitions (NAD). The NNCP configures the reserved interfaces on the worker nodes, bonds, VLANs, and the ODF Multus shim that the worker nodes require to connect to the networks defined by the NADs. The NADs configure the additional networks on the ODF pods.
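To make the NNCP side more concrete, here is a minimal Python sketch that builds a NodeNetworkConfigurationPolicy manifest for a single VLAN interface and prints it as JSON, which `oc apply -f` accepts as well as YAML. The policy name, base interface `ens4`, VLAN ID 201, and the zone value in the node selector are assumptions chosen for illustration; bonds and the ODF Multus shim interface are left out to keep the sketch short.

```python
import json


def vlan_nncp(policy_name, base_iface, vlan_id, node_selector):
    """Build an NNCP manifest that brings up one VLAN on top of base_iface."""
    return {
        "apiVersion": "nmstate.io/v1",
        "kind": "NodeNetworkConfigurationPolicy",
        "metadata": {"name": policy_name},
        "spec": {
            # Restrict the policy to the nodes that own the reserved interface.
            "nodeSelector": node_selector,
            "desiredState": {
                "interfaces": [
                    {
                        "name": f"{base_iface}.{vlan_id}",
                        "type": "vlan",
                        "state": "up",
                        "vlan": {"base-iface": base_iface, "id": vlan_id},
                    }
                ]
            },
        },
    }


# Hypothetical values: interface, VLAN ID, and zone are placeholders.
policy = vlan_nncp(
    policy_name="odf-public-vlan-zone-a",
    base_iface="ens4",
    vlan_id=201,
    node_selector={"topology.kubernetes.io/zone": "zone-a"},
)
print(json.dumps(policy, indent=2))
```

Selecting nodes by zone label is one way to give each availability zone its own VLAN; a real deployment would follow the values worked out during network planning.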

Combining multiple availability zones with Multus network isolation improves the overall robustness of the ODF deployment. Distributing ODF components across multiple availability zones and giving them independent network paths makes the solution more resilient to network outages or disruptions affecting a single availability zone. If one network path experiences issues, the ODF components can continue to communicate and operate using their alternative network interfaces in other availability zones, ensuring high availability and business continuity.

When using Multus, the connection is made using MACVLAN, which operates at layer 2 only and provides no forwarding or routing. Because each availability zone has its own distinct layer 3 requirement, this creates a challenge when deploying the cluster across availability zones. Using a DHCP server for IP address management (IPAM), instead of the typically used Whereabouts plug-in, addresses this issue. Having the DHCP server provide the appropriate static routes to each availability zone addresses the routing issue as well.
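The following Python sketch shows what a Network Attachment Definition using MACVLAN with DHCP-based IPAM might look like, built as a dictionary and printed as JSON. The NAD names `public-net` and `cluster-net` and the master interface names are hypothetical; the DHCP server itself, including the static routes it hands out, is configured outside of these manifests.

```python
import json


def macvlan_dhcp_nad(name, namespace, master_iface):
    """Build a NAD whose pods attach via MACVLAN and get addresses from DHCP."""
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",
        "master": master_iface,  # node interface the MACVLAN rides on
        "mode": "bridge",
        "ipam": {"type": "dhcp"},  # DHCP instead of Whereabouts
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # The CNI configuration is embedded in the manifest as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


# Hypothetical names: one NAD per storage network (public and cluster).
nads = [
    macvlan_dhcp_nad("public-net", "openshift-storage", "ens4.201"),
    macvlan_dhcp_nad("cluster-net", "openshift-storage", "ens4.202"),
]
for nad in nads:
    print(json.dumps(nad, indent=2))
```

Because the IPAM section only names the `dhcp` type, the per-zone subnets and routes stay in the DHCP server configuration rather than in the NAD.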

Plan your networks carefully before deployment. See the Multi network plug-in (Multus) support section in the Planning Your Deployment guide for all prerequisites.

Ready to learn more about network planning? Planning your deployment