With the latest release of Red Hat Integration now available, we’ve introduced some exciting new capabilities. Along with the enhancements for Apache Kafka-based environments, Red Hat announced the Technical Preview of the Red Hat Integration service registry to help teams govern their service schemas. Developers can now use the registry to query for the schemas and artifacts required by each service endpoint, or to register and store new structures for future use.

Registry for event-driven architecture

Red Hat Integration’s service registry, based on the Apicurio Registry project, provides a way to decouple the schemas used to serialize and deserialize Kafka messages from the applications that send and receive them. The service registry is a store for schema (and API design) artifacts that provides a REST API and a set of optional rules for enforcing content validity and evolution. The registry handles data formats such as Apache Avro, JSON Schema, and Google Protocol Buffers (protobuf), as well as OpenAPI and AsyncAPI definitions.
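The decoupling works because the serializer does not ship the full schema with every message. With the Confluent Avro serializer used later in this article, each record value starts with a magic byte (`0`) and a 4-byte schema ID, followed by the Avro-encoded data; the consumer's deserializer resolves that ID against the registry. The following JDK-only sketch illustrates that framing. The class and method names are hypothetical, and it does not perform real Avro encoding.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/**
 * Hypothetical sketch of the framing written by Confluent's Kafka Avro serializer:
 * [magic byte 0x0][4-byte big-endian schema ID][Avro-encoded payload].
 * Only the ID travels with each record; the schema itself lives in the registry.
 */
public class WireFormat {
    static final byte MAGIC_BYTE = 0x0;

    /** Prepends the magic byte and schema ID to an already-encoded Avro payload. */
    static byte[] frame(int schemaId, byte[] avroPayload) {
        // ByteBuffer writes ints in big-endian order by default
        ByteBuffer buf = ByteBuffer.allocate(1 + 4 + avroPayload.length);
        buf.put(MAGIC_BYTE);
        buf.putInt(schemaId);
        buf.put(avroPayload);
        return buf.array();
    }

    /** Reads the schema ID that a consumer would look up in the registry. */
    static int schemaId(byte[] framed) {
        ByteBuffer buf = ByteBuffer.wrap(framed);
        if (framed.length < 5 || buf.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Not a schema-registry framed record");
        }
        return buf.getInt();
    }

    /** Returns the Avro-encoded body that follows the 5-byte header. */
    static byte[] payload(byte[] framed) {
        return Arrays.copyOfRange(framed, 5, framed.length);
    }

    public static void main(String[] args) {
        byte[] fakeAvro = {0x02, 0x03}; // placeholder bytes, not a real Avro encoding
        byte[] framed = frame(1, fakeAvro);
        System.out.println("schema id = " + schemaId(framed));
        System.out.println("payload length = " + payload(framed).length);
    }
}
```

Because the header is only five bytes, the schema can evolve in the registry without inflating every Kafka message with its definition.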

To ease the transition from Confluent, the service registry adds compatibility with the Confluent Schema Registry REST API. This means that applications using Confluent client libraries can replace Confluent Schema Registry with the Red Hat Integration service registry.
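In practice, "compatible" means the registry answers the same REST paths that Confluent clients already call, such as `/subjects` and `/schemas/ids/{id}`, under a compatibility prefix. The sketch below builds those requests with the JDK's `java.net.http` client, assuming the prefix `http://localhost:8081/api/ccompat` used later in this article; the helper class is hypothetical, and subject names are assumed to be URL-safe.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/**
 * Hypothetical helper that builds Confluent-compatible REST calls against the
 * service registry. Assumes the compatibility API is served under /api/ccompat.
 */
public class CcompatEndpoints {
    private final String baseUrl;

    CcompatEndpoints(String baseUrl) {
        // Strip one trailing slash so path segments join cleanly
        this.baseUrl = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
    }

    /** GET /schemas/ids/{id}: the schema registered under a given ID. */
    URI schemaById(int id) {
        return URI.create(baseUrl + "/schemas/ids/" + id);
    }

    /** GET /subjects: all registered subject names. */
    URI subjects() {
        return URI.create(baseUrl + "/subjects");
    }

    /** GET /subjects/{subject}/versions: version numbers for one subject (name assumed URL-safe). */
    URI subjectVersions(String subject) {
        return URI.create(baseUrl + "/subjects/" + subject + "/versions");
    }

    /** Builds a plain GET request; nothing is sent until a client executes it. */
    static HttpRequest get(URI uri) {
        return HttpRequest.newBuilder(uri).GET().build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        CcompatEndpoints api = new CcompatEndpoints("http://localhost:8081/api/ccompat");
        HttpRequest request = get(api.schemaById(1));
        System.out.println(request.method() + " " + request.uri());

        // Only contact the registry when explicitly asked, e.g. `java CcompatEndpoints --send`
        if (args.length > 0 && args[0].equals("--send")) {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + response.body());
        }
    }
}
```

Pointing the same client at a Confluent Schema Registry would only require passing a different base URL, which is the property the rest of this article relies on.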

Replacing Confluent Schema Registry

For the sake of simplicity, I will use an existing Avro client example to show you how to switch from Confluent Schema Registry to the Red Hat Integration service registry.

You will need docker-compose to start a local environment, Git to clone the repository code, and Maven (with a JDK) to build and run the examples.

1. Clone the example GitHub repository:

```
$ git clone https://github.com/confluentinc/examples.git
$ cd examples
$ git checkout 5.3.1-post
```

2. Change to the Avro example folder:

$ cd clients/avro/

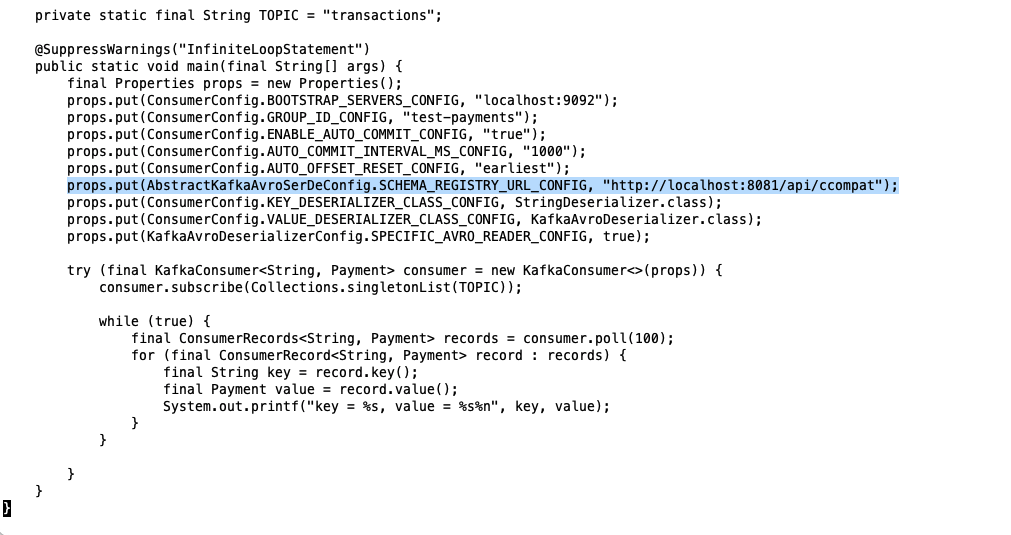

3. Open the ConsumerExample.java file under src/main/java/io/confluent/examples/clients/basicavro.

4. Set the SCHEMA_REGISTRY_URL_CONFIG property to the service registry's Confluent-compatible endpoint:

```java
...
props.put(AbstractKafkaAvroSerDeConfig.SCHEMA_REGISTRY_URL_CONFIG, "http://localhost:8081/api/ccompat");
...
```

5. Repeat the previous step in the ProducerExample.java file.

6. Download this example docker-compose.yaml file to deploy a simple Kafka cluster with the Apicurio registry.

7. Start the Kafka cluster and registry.

$ docker-compose -f docker-compose.yaml up

8. To run the producer, compile the project:

$ mvn clean compile package

9. Run ProducerExample.java:

$ mvn exec:java -Dexec.mainClass=io.confluent.examples.clients.basicavro.ProducerExample

10. After a few moments you should see the following output:

```
...
Successfully produced 10 messages to a topic called transactions
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
...
```

11. Now run the consumer:

$ mvn exec:java -Dexec.mainClass=io.confluent.examples.clients.basicavro.ConsumerExample

The messages should be displayed on your screen:

```
...
offset = 0, key = id0, value = {"id": "id0", "amount": 1000.0}
offset = 1, key = id1, value = {"id": "id1", "amount": 1000.0}
offset = 2, key = id2, value = {"id": "id2", "amount": 1000.0}
offset = 3, key = id3, value = {"id": "id3", "amount": 1000.0}
offset = 4, key = id4, value = {"id": "id4", "amount": 1000.0}
offset = 5, key = id5, value = {"id": "id5", "amount": 1000.0}
offset = 6, key = id6, value = {"id": "id6", "amount": 1000.0}
offset = 7, key = id7, value = {"id": "id7", "amount": 1000.0}
offset = 8, key = id8, value = {"id": "id8", "amount": 1000.0}
offset = 9, key = id9, value = {"id": "id9", "amount": 1000.0}
...
```

12. To check the schema that the producer added to the registry, issue the following curl command:

$ curl --silent -X GET http://localhost:8081/api/ccompat/schemas/ids/1 | jq .

13. The result should show you the Avro schema:

```json
{
  "schema": "{\"type\":\"record\",\"name\":\"Payment\",\"namespace\":\"io.confluent.examples.clients.basicavro\",\"fields\":[{\"name\":\"id\",\"type\":\"string\"},{\"name\":\"amount\",\"type\":\"double\"}]}"
}
```

Done!
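The `schema` field returned in step 13 holds the Avro schema as a JSON-escaped string. The Confluent-compatible API uses the same shape when a schema is registered through `POST /subjects/{subject}/versions`, which the producer's serializer does automatically by default. The sketch below builds such a request with only the JDK. The class is hypothetical, the subject name `transactions-value` assumes Confluent's default topic-name strategy for the `transactions` topic, and the hand-written escaping stands in for a real JSON library.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/**
 * Hypothetical sketch: registering an Avro schema through the
 * Confluent-compatible API (POST /subjects/{subject}/versions).
 * The body wraps the schema as a JSON-escaped string, like the "schema" field in step 13.
 */
public class SchemaRegistration {

    /** Minimal JSON string escaping; a real client would use a JSON library. */
    static String jsonString(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // other control characters
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.append('"').toString();
    }

    /** Request body: {"schema":"<escaped Avro schema>"} */
    static String registrationBody(String avroSchemaJson) {
        return "{\"schema\":" + jsonString(avroSchemaJson) + "}";
    }

    /** Builds (but does not send) the registration request. */
    static HttpRequest registerRequest(String baseUrl, String subject, String avroSchemaJson) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/subjects/" + subject + "/versions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(registrationBody(avroSchemaJson)))
                .build();
    }

    public static void main(String[] args) {
        // The Payment schema shown in step 13
        String schema = "{\"type\":\"record\",\"name\":\"Payment\","
                + "\"namespace\":\"io.confluent.examples.clients.basicavro\","
                + "\"fields\":[{\"name\":\"id\",\"type\":\"string\"},{\"name\":\"amount\",\"type\":\"double\"}]}";
        System.out.println(registrationBody(schema));
        HttpRequest request = registerRequest("http://localhost:8081/api/ccompat", "transactions-value", schema);
        System.out.println(request.method() + " " + request.uri());
    }
}
```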

As you can see, switching to the Red Hat Integration service registry only requires changing the registry URL; you don't need to change any other code in your applications.
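Since the URL is the only thing that changes, one option is to read it from the environment instead of hard-coding it in ConsumerExample.java and ProducerExample.java. The sketch below is not part of the Confluent example: `SCHEMA_REGISTRY_URL` is a hypothetical variable name, while the property key `schema.registry.url` is the string value of `AbstractKafkaAvroSerDeConfig.SCHEMA_REGISTRY_URL_CONFIG`, kept as a plain string so the sketch compiles with only the JDK.

```java
import java.util.Map;
import java.util.Properties;

/**
 * Sketch: take the registry URL from the environment so that switching
 * registries needs no recompilation. SCHEMA_REGISTRY_URL is a hypothetical name.
 */
public class RegistryConfig {
    // String value of AbstractKafkaAvroSerDeConfig.SCHEMA_REGISTRY_URL_CONFIG
    static final String SCHEMA_REGISTRY_URL_CONFIG = "schema.registry.url";
    // Confluent-compatible endpoint used in this article
    static final String DEFAULT_URL = "http://localhost:8081/api/ccompat";

    /** Adds the registry URL to the client's existing Kafka properties and returns them. */
    static Properties withRegistryUrl(Properties props, Map<String, String> env) {
        props.put(SCHEMA_REGISTRY_URL_CONFIG, env.getOrDefault("SCHEMA_REGISTRY_URL", DEFAULT_URL));
        return props;
    }

    public static void main(String[] args) {
        Properties props = withRegistryUrl(new Properties(), System.getenv());
        System.out.println(props.getProperty(SCHEMA_REGISTRY_URL_CONFIG));
    }
}
```

The trade-off is one more piece of deployment configuration; in exchange, choosing a registry becomes an operational decision rather than a code change.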

If you are interested in other features of the Red Hat Integration service registry, see the full-fledged example using the Quarkus Kafka extension in my amq-examples GitHub repository.

Summary

The Red Hat Integration service registry is a central data store for schemas and API artifacts. Developers can query, create, read, update, and delete service artifacts, versions, and rules to govern the structure of their services. The service registry can also be used as a drop-in replacement for Confluent Schema Registry with Apache Kafka clients: by changing only the registry URL, your applications can use the Red Hat Integration service registry without any other code changes.


Last updated: April 13, 2021