In this blog post, I would like to show you how to create a fully software-defined data center with two Red Hat products: Red Hat OpenStack Platform and Red Hat CloudForms. Because of its length, I have split this article into two parts.

As you probably know, every organization needs to evolve into a tech company: driving its own digital transformation, adopting or improving processes, evolving people’s mindset and their soft and hard skills, and of course using new technologies.

Remember, we are living in a digital era where, if you don’t change yourself and your organization, someone will disrupt your business!

So, how can I become disruptive in my business?

Well, speaking from a purely technical perspective, a good approach should consider cloud technologies.

These technologies can be the first brick of your digital transformation strategy because they deliver both business and technical value.

For instance, you could:

- Build new services on demand, quickly

- Do it in a self-service fashion

- Reduce manual activities

- Scale services and workloads

- Respond to request peaks

- Guarantee service quality and SLAs to your customers

- Respond to customer demands quickly

- Reduce time to market

- Improve TCO

- ...

In my experience as a Solution Architect, I have seen many different approaches to building a cloud.

For sure, you need to identify your business needs, then evaluate your use cases, build your user stories, and only then start thinking about the technology and its impact.

Remember, don’t approach this kind of project from a technical perspective first. There is nothing worse. My suggestion is to start by thinking about the value you want to deliver to your customers and end users.

Usually, the first technical questions coming to customers' minds are:

- What kind of services would I like to offer?

- What use cases do I need to manage?

- Do I need a public cloud, a private cloud, or a mix of them (hybrid)?

- Do I want an instance-based environment (IaaS), a container-based one (PaaS, for friends), or SaaS?

- How complex is it to build and manage a cloud environment?

- What products will help my organization to reach these objectives?

- …

The good news is that Red Hat can help you with amazing solutions, people, and processes.

Hands-on - Part 1

Now let’s start thinking about a cloud environment based on an instance/VM concept.

Here, we are speaking about Red Hat OpenStack Platform 11 (RHOSP).

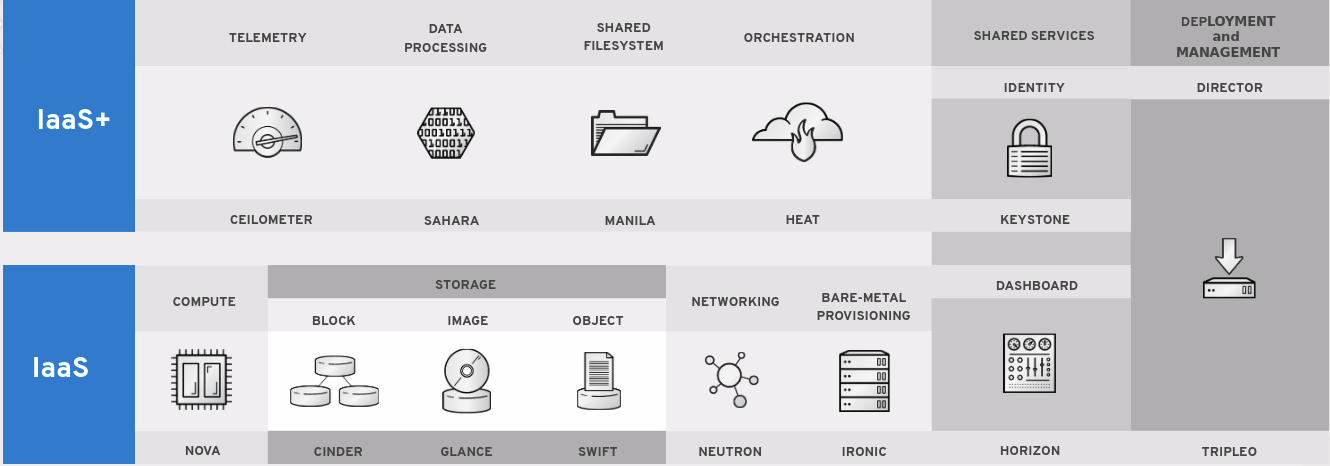

I don’t want to explain in detail every module available in RHOSP 11, but to give you a brief overview, you can see them in Picture 1.

Picture 1

To build an SDDC in a fast, fully automated way, this post shows how you can reach the goal using almost all of these modules, with a focus on the orchestration engine, HEAT.

HEAT is a very powerful orchestration engine that can build anything from a single instance to a very complex architecture composed of instances, networks, routers, load balancers, security groups, storage volumes, floating IPs, alarms, and more or less every object managed by OpenStack.

The only thing you have to do is write a Heat Orchestration Template (HOT) in YAML [1] and ask HEAT to execute it for you.

HEAT will be the driver that builds our software-defined data center, managing all the OpenStack components needed to fulfill your requests.
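To make the idea concrete, here is a minimal sketch of what a HOT template can look like, written to a scratch file from the shell. It is not taken from the repository used later in this post: the resource name `demo_server`, the parameter names, and the stack name `demo-minimal` are all assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
# Write a minimal, illustrative HOT template to a scratch directory.
# Every name in the template is a placeholder, not part of the real stack.
workdir="$(mktemp -d)"
template="$workdir/minimal-stack.yaml"

cat > "$template" <<'EOF'
heat_template_version: 2016-10-14

description: Minimal illustrative stack with one instance on an existing network.

parameters:
  image:
    type: string
    description: Name of a Glance image (assumed to exist)
  flavor:
    type: string
    default: x1.xsmall
  network:
    type: string
    description: Name of an existing tenant network (assumed to exist)

resources:
  demo_server:
    type: OS::Nova::Server
    properties:
      image: { get_param: image }
      flavor: { get_param: flavor }
      networks:
        - network: { get_param: network }

outputs:
  server_name:
    description: Name Nova assigned to the instance
    value: { get_attr: [demo_server, name] }
EOF

echo "Template written to $template"

# With credentials sourced, the template could be launched with something like:
#   openstack stack create -t "$template" \
#     --parameter image=<image-name> --parameter network=<network-name> \
#     --wait demo-minimal
```

The three sections (parameters, resources, outputs) are the same skeleton a much larger template follows; only the number of resources grows.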

So, the first thing is to build a HOT template, right? Well, let’s start by cloning my git repo:

https://gitlab.com/miken/rhosp_heat_stack.git

This heat stack will create a three-tier application with 2 web servers, 2 app servers, 1 db, some dedicated private network segments, virtual routers to interconnect private segments to floating IPs and to segregate networks, two LBaaS v2 load balancers (one for the front end and one for the app layer), auto scaling groups, cinder volumes (boot from volume), ad hoc security groups, Aodh alarms, scale-up/scale-down policies, and so on.

What? Yes, all of this stuff :-)

In this example, the web servers run httpd 2.4, the app servers just run a simple Python HTTP server on port 8080, and the db server is a placeholder for now.

In the real world, of course, you will take care of installing and configuring your application servers and db servers in an automatic, reproducible, and idempotent way with heat or, for instance, with an Ansible playbook.

In this repo you’ll find:

- stack-3tier.yaml

  - The main HOT template, which defines the skeleton of our stack (input parameters, resource types, and outputs).

- lb-resource-stack-3tier.yaml

  - HOT template to configure our LBaaS v2 (load balancer as a service: namespace-based HAProxy). This file will be retrieved by the main HOT template via HTTP.

- run.sh

  - Bash script to perform:

    - OpenStack project creation

    - User management

    - Heat stack creation under the pre-configured OpenStack tenant

    - Deletion of the previous items (in case you need it)
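To give a feel for what a wrapper like this does, here is a hypothetical sketch. It is not the repository’s run.sh. The user name `demo-user`, the stack name `demo-3tier-stack`, the `_member_` and `admin` role names, and the `DEMO_PASSWORD` variable are all assumptions. By default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Hypothetical create/delete wrapper (NOT the repository's run.sh).
# Names such as demo-user, demo-3tier-stack, _member_ and admin are assumptions.

PROJECT="demo-tenant"
STACK="demo-3tier-stack"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands, anything else = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

create_all() {
  run openstack project create "$PROJECT"
  run openstack user create --project "$PROJECT" --password "${DEMO_PASSWORD:-changeme}" demo-user
  run openstack role add --project "$PROJECT" --user demo-user _member_
  # The admin user needs a role in the new project to create a stack inside it.
  run openstack role add --project "$PROJECT" --user admin admin
  run openstack --os-project-name "$PROJECT" stack create -t stack-3tier.yaml --wait "$STACK"
}

delete_all() {
  run openstack --os-project-name "$PROJECT" stack delete --yes --wait "$STACK"
  run openstack user delete demo-user
  run openstack project delete "$PROJECT"
}

main() {
  case "${1:-create}" in
    create) create_all ;;
    delete) delete_all ;;
    *) echo "usage: $0 {create|delete}" >&2; return 1 ;;
  esac
}

main "$@"
```

Running it with `DRY_RUN=0` after sourcing admin credentials would execute the commands for real; keeping the dry-run default makes it safe to read through the sequence first.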

As prerequisites to build your environment, you need to prepare:

- A laptop or Intel NUC with RHEL 7.x and KVM, able to host two virtual machines (one all-in-one OpenStack VM and one CloudForms VM).

  I suggest using a machine with 32 GB of RAM and at least 250 GB of SSD disk.

- OpenStack 11 all-in-one VM on RHEL 7.4 (installed with packstack, usable ONLY for test/demo purposes) with:

  - 20 GB of RAM

  - 4-8 vCPUs (8 is better :-))

  - 150 GB of disk (to store cinder-volumes as a file)

  - 1 vNIC (NAT is OK)

  - Pre-configured external shared network available for all projects

openstack network create --share --external --provider-network-type flat --provider-physical-network extnet FloatingIpWeb

openstack subnet create --subnet-range 192.168.122.0/24 --allocation-pool start=192.168.122.30,end=192.168.122.50 --no-dhcp --gateway 192.168.122.1 --network FloatingIpWeb --dns-nameserver 192.168.122.1 FloatingIpWebSubnet

- RHEL 7.4 image loaded in Glance and available to your projects/tenants (public).

- Apache2 image loaded in Glance (based on RHEL 7.4 with httpd installed and enabled). You’ll have to define a virtual host pointing to your web server’s document root.

- A dedicated flavor called "x1.xsmall" with 2 GB of RAM, 1 vCPU, and 10 GB of disk.

- A new Apache virtual host on the RHOSP VM to host our load balancer YAML file.

[root@osp conf.d(keystone_admin)]# cat /etc/httpd/conf/ports.conf | grep 8888

Listen 8888

[root@osp conf.d(keystone_admin)]# cat /etc/httpd/conf.d/heatstack.conf

<VirtualHost *:8888>

ServerName osp.test.local

ServerAlias 192.168.122.158

DocumentRoot /var/www/html/heat-templates/

ErrorLog /var/log/httpd/heatstack_error.log

CustomLog /var/log/httpd/heatstack_requests.log combined

</VirtualHost>

- An iptables firewall rule to permit network traffic to TCP port 8888.

iptables -I INPUT 13 -p tcp -m multiport --dports 8888 -m comment --comment "heat stack 8888 port retrieval" -j ACCEPT

That’s all.

Let’s clone the git repo, make some modifications, and start.

- Modify stack-3tier.yaml

  - Uncomment the tenant_id rows.

    If you are executing the heat stack through the bash script run.sh, don’t worry: run.sh will take care of updating the tenant_id parameter.

    Otherwise, if you are executing it through CloudForms or manually via heat, please update the tenant_id according to your environment configuration.

  - Modify management_network and web_provider_network to point to your floating IP networks. If you are using just a single external network, you can use the same value for both. In a true production environment, you’ll probably use more than one external network, with different floating IP pools.

  - Modify the str_replace of web_asg (the autoscaling group for our web servers) according to what you want to change on your web landing page.

We’ll see later why I made a small modification using str_replace. :-)

Let’s source our keystonerc_admin (so as admin) and run our bash script on our rhosp server:

[root@osp ~]# source /root/keystonerc_admin

[root@osp heat-templates(keystonerc_admin)]# bash -x run.sh create

After the automatic creation of the tenant (demo-tenant) you’ll see in the output that heat is creating our resources.

2017-10-05 10:06:14Z [demo-tenant]: CREATE_IN_PROGRESS Stack CREATE started

2017-10-05 10:06:15Z [demo-tenant.web_network]: CREATE_IN_PROGRESS state changed

2017-10-05 10:06:16Z [demo-tenant.web_network]: CREATE_COMPLETE state changed

2017-10-05 10:06:16Z [demo-tenant.boot_volume_db]: CREATE_IN_PROGRESS state changed

2017-10-05 10:06:17Z [demo-tenant.web_subnet]: CREATE_IN_PROGRESS state changed

2017-10-05 10:06:18Z [demo-tenant.web_subnet]: CREATE_COMPLETE state changed

2017-10-05 10:06:18Z [demo-tenant.web-to-provider-router]: CREATE_IN_PROGRESS state changed

2017-10-05 10:06:19Z [demo-tenant.internal_management_network]: CREATE_IN_PROGRESS state changed

2017-10-05 10:06:19Z [demo-tenant.internal_management_network]: CREATE_COMPLETE state changed

2017-10-05 10:06:20Z [demo-tenant.web_sg]: CREATE_IN_PROGRESS state changed

2017-10-05 10:06:20Z [demo-tenant.web-to-provider-router]: CREATE_COMPLETE state changed

2017-10-05 10:06:20Z [demo-tenant.web_sg]: CREATE_COMPLETE state changed

2017-10-05 10:06:21Z [demo-tenant.management_subnet]: CREATE_IN_PROGRESS state changed

… Output truncated
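Because the event stream interleaves many resources, it can help to collapse it to the latest status per resource. The following is a small sketch that assumes the line layout shown above (date, time, bracketed resource name, status); the helper name `latest_status` is made up for this example.

```shell
#!/usr/bin/env bash
# Collapse Heat event lines to the most recent status seen for each resource.
# Assumes the layout shown above: <date> <time> [<resource>]: <STATUS> ...
latest_status() {
  awk '
    {
      name = $3                     # e.g. [demo-tenant.web_network]:
      gsub(/^\[|\]:$/, "", name)    # drop the leading "[" and the trailing "]:"
      status[name] = $4             # later lines overwrite earlier ones
    }
    END { for (n in status) print n, status[n] }
  ' | LC_ALL=C sort
}

# Demo on a few of the event lines from the output above.
latest_status <<'EOF'
2017-10-05 10:06:15Z [demo-tenant.web_network]: CREATE_IN_PROGRESS state changed
2017-10-05 10:06:16Z [demo-tenant.web_network]: CREATE_COMPLETE state changed
2017-10-05 10:06:16Z [demo-tenant.boot_volume_db]: CREATE_IN_PROGRESS state changed
2017-10-05 10:06:20Z [demo-tenant.web_sg]: CREATE_IN_PROGRESS state changed
2017-10-05 10:06:20Z [demo-tenant.web_sg]: CREATE_COMPLETE state changed
EOF
```

On a live system, `openstack stack resource list <stack-name>` gives a similar per-resource view directly from Heat.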

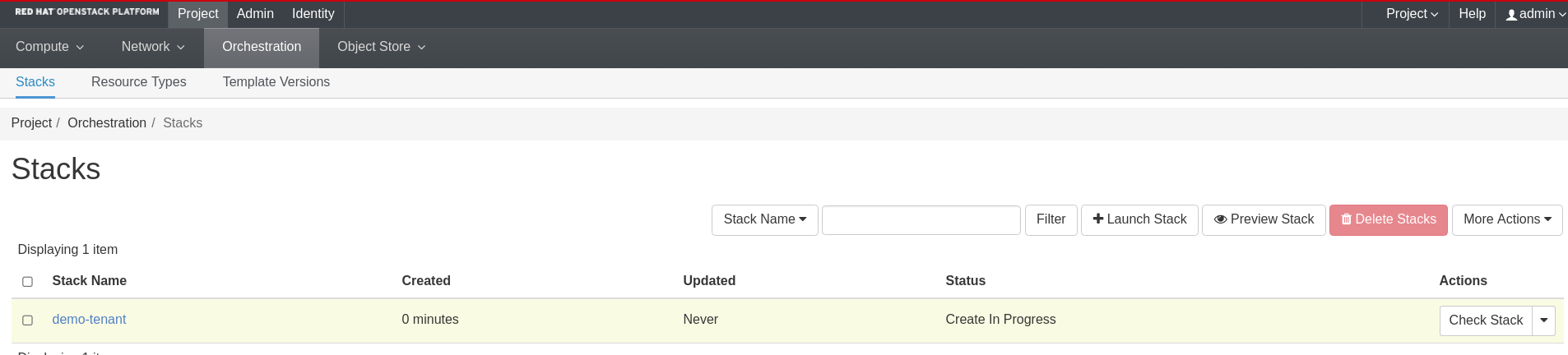

You can also log in to the Horizon dashboard and check the status of the heat stack from Orchestration -> Stacks.

Picture 2

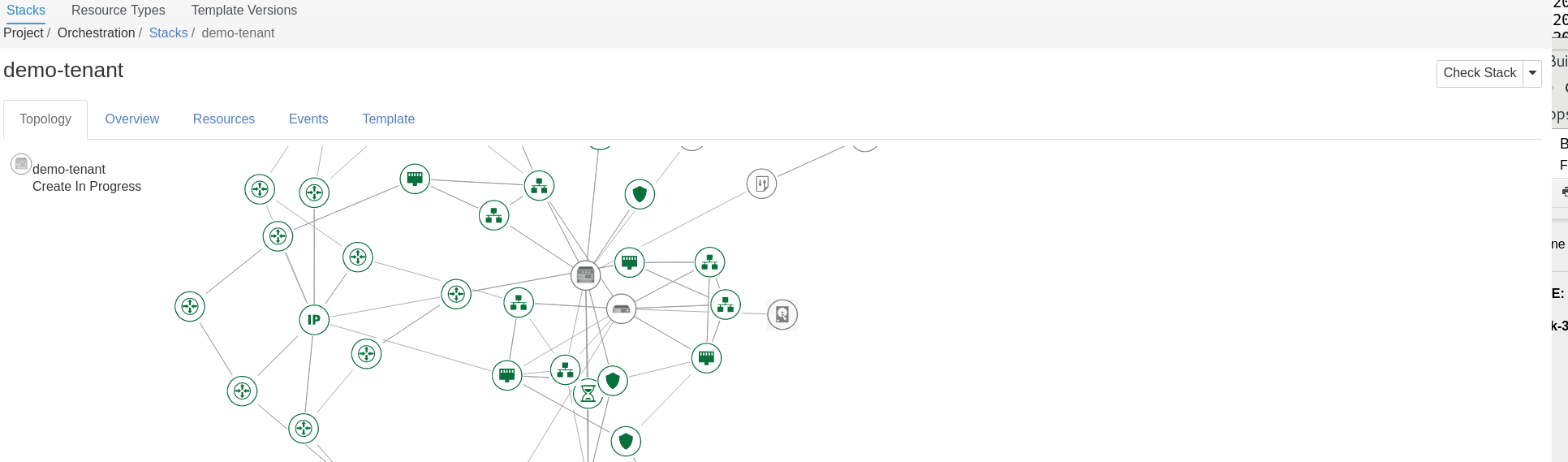

Clicking on our stack name, you will also be able to see all the resources managed by heat and their current status (Picture 2).

Picture 3

After 10-12 minutes, your heat stack will be completed. In a production environment, you’ll reach the same goal in 2 minutes! Yes, 2 minutes in order to have a fully automated software-defined data center!

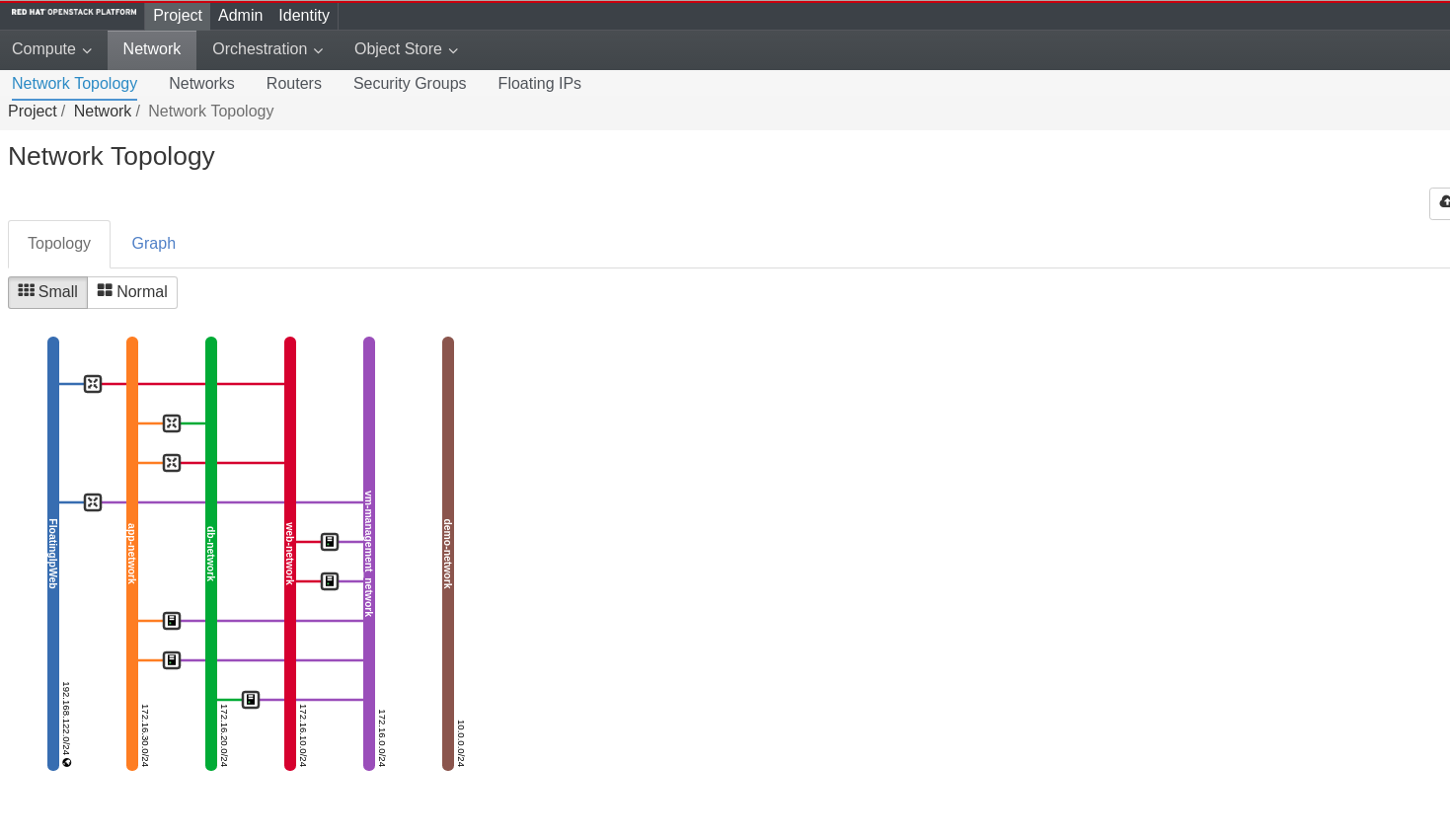

Let’s check what our stack has deployed by going to the Network Topology tab.

Cool! Everything was deployed as expected.

Picture 4

Now you are probably wondering what kind of services are managed by this environment.

Let’s see if the LBaaS load balancers exposing our services are up and running:

[root@osp ~(keystone_admin)]# neutron lbaas-loadbalancer-list -c name -c vip_address -c provisioning_status

neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.

+------------------------------------------------+--------------+---------------------+

| name | vip_address | provisioning_status |

+------------------------------------------------+--------------+---------------------+

| demo-3tier-stack-app_loadbalancer-kjkdiehsldkr | 172.16.30.4 | ACTIVE |

| demo-3tier-stack-web_loadbalancer-ht5wirjwcof3 | 172.16.10.12 | ACTIVE |

+------------------------------------------------+--------------+---------------------+

Now get the floating IP address associated with our web LBaaS.

[root@osp ~(keystone_admin)]# openstack floating ip list -f value -c "Floating IP Address" -c "Fixed IP Address" | grep 172.16.10.12

192.168.122.37 172.16.10.12

So, our external floating IP is 192.168.122.37.
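One caveat with the plain `grep` above: the dots match any character and the pattern is not anchored, so it would also match an address such as 172.16.10.120. A slightly stricter sketch compares the fixed-IP column exactly. The helper name `fip_for_fixed` and the second sample pair are made up for illustration.

```shell
#!/usr/bin/env bash
# Print the floating IP whose fixed IP matches $1 exactly.
# stdin: "<floating ip> <fixed ip>" pairs, as produced by
#   openstack floating ip list -f value -c "Floating IP Address" -c "Fixed IP Address"
fip_for_fixed() {
  awk -v fixed="$1" '$2 == fixed { print $1 }'
}

# Demo: the first pair is from the output above, the second is hypothetical
# and only shows why an exact comparison is safer than a substring match.
printf '%s\n' \
  '192.168.122.37 172.16.10.12' \
  '192.168.122.41 172.16.10.120' \
  | fip_for_fixed 172.16.10.12
```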

Let’s see what it is exposing :-)

Wow, the Red Hat public website hosted on our OpenStack!

Picture 5

I have cloned our Red Hat company site as a static website, so our app and db servers are not really exposing a service, but of course you can extend this stack by installing on your app/db servers whatever is needed to expose your services.

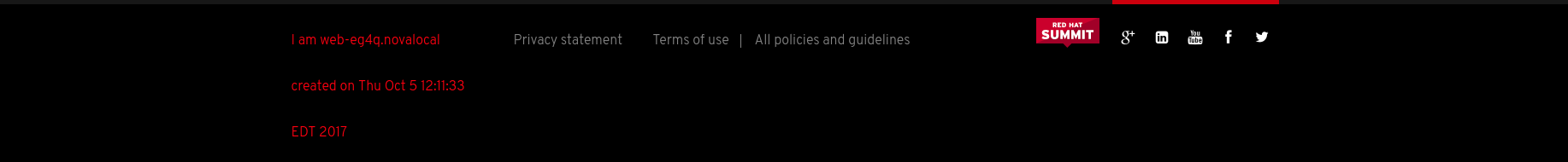

Now, I want to show you that this website is really running on our instances, so let’s scroll down the page to the footer.

Picture 6

Here you can see which instance is serving you the website:

I am web-eg4q.novalocal created on Thu Oct 5 12:11:33 EDT 2017

After a refresh, our LBaaS will round-robin to the other web instance:

I am web-9hhm.novalocal created on Thu Oct 5 12:12:51 EDT 2017
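If you prefer to check the round robin from a terminal rather than by refreshing the browser, a sketch like the following could be used. It assumes the footer format shown above; the helper name `serving_host` is made up for this example.

```shell
#!/usr/bin/env bash
# Extract the serving hostname from footer lines of the form
#   "I am <hostname> created on <date>"
serving_host() {
  sed -n 's/.*I am \([^ ]*\) created on.*/\1/p'
}

# Demo on the two footer lines shown above: count how often each host answered.
printf '%s\n' \
  'I am web-eg4q.novalocal created on Thu Oct 5 12:11:33 EDT 2017' \
  'I am web-9hhm.novalocal created on Thu Oct 5 12:12:51 EDT 2017' \
  | serving_host | sort | uniq -c

# Against the live site, something like this could be used instead:
#   for i in 1 2 3 4; do curl -s http://192.168.122.37/ | serving_host; done | sort | uniq -c
```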

This is why I suggested that you modify str_replace according to what you want to change on your web page.

In this case, I’ve changed the footer to clearly show you which server is answering our HTTP requests.
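For readers who have not used it, `str_replace` is a HOT intrinsic function that substitutes placeholder keys in a template string before the instance boots. The fragment below is a hypothetical sketch of how a footer line like the one above could be produced. It is not copied from stack-3tier.yaml, and the placeholder `__footer_prefix__` and the document root path are assumptions.

```shell
#!/usr/bin/env bash
# Write a hypothetical HOT fragment showing str_replace inside user_data.
# This is NOT taken from stack-3tier.yaml; the names are illustrative only.
fragment="$(mktemp)"

cat > "$fragment" <<'EOF'
# Heat swaps the placeholder key listed under "params" into the script text;
# hostname and date are evaluated later, on the instance, at first boot.
user_data_format: RAW
user_data:
  str_replace:
    template: |
      #!/bin/bash
      echo "__footer_prefix__ $(hostname) created on $(date)" >> /var/www/html/index.html
    params:
      __footer_prefix__: "I am"
EOF

cat "$fragment"
```

Because the substitution happens in Heat and the shell expansion happens on each instance, every web server ends up with its own hostname in the footer even though they all share one template.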

[1] https://docs.openstack.org/heat/latest/template_guide/index.html


Last updated: October 30, 2017