Red Hat Enterprise Linux AI

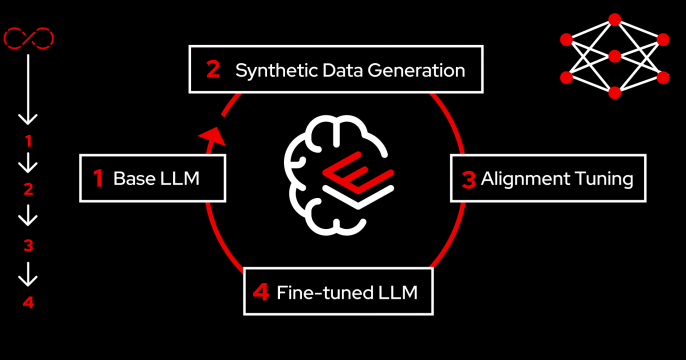

Develop, deploy, and run large language models (LLMs) in individual server environments. The solution includes Red Hat AI Inference Server, which delivers fast, cost-effective inference across the hybrid cloud by maximizing throughput and minimizing latency.

Learning Exercises

Learn how Red Hat Enterprise Linux AI provides a security-focused, low-cost...

In this course, you’ll explore how to configure your RHEL AI machine,...