Meet Repo, Red Hat Developer’s new mascot

Repo, Red Hat Developer's new mascot, is curious, helpful, and eager to guide

Artificial intelligence (AI) and large language models (LLMs) are becoming

The rapid advancement of generative artificial intelligence (gen AI) has unlocked incredible opportunities. However, customizing and iterating on large language models (LLMs) remains a complex and resource-intensive process. Training and enhancing models often involves creating multiple forks, which can lead to fragmentation and hinder collaboration.

OCI images are now available on the Docker Hub and Quay.io registries, making it even easier to use the Granite 7B large language model (LLM) and InstructLab.

Get started with AMD GPUs for model serving in OpenShift AI. This tutorial guides you through the steps to configure the AMD Instinct MI300X GPU with KServe.

Learn how developers can use prompt engineering with a large language model (LLM) to increase their productivity.

Learn how to deploy a coding copilot model using OpenShift AI. You'll also discover how tools like KServe and Caikit simplify machine learning model management.

Explore AMD Instinct MI300X accelerators and learn how to run AI/ML workloads using ROCm, AMD’s open source software stack for GPU programming, on OpenShift AI.

Learn how to apply supervised fine-tuning to Llama 3.1 models using Ray on OpenShift AI in this step-by-step guide.

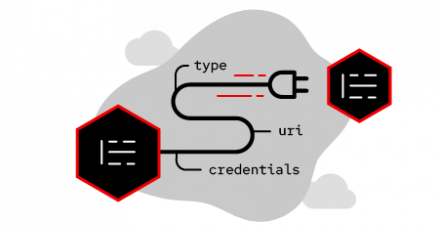

Understand how retrieval-augmented generation (RAG) works and how users can

Learn how to rapidly prototype AI applications from your local environment with

Learn how to generate word embeddings and perform RAG tasks using a Sentence Transformer model deployed on Caikit Standalone Serving Runtime using OpenShift AI.

In today's fast-paced IT landscape, the need for efficient and effective

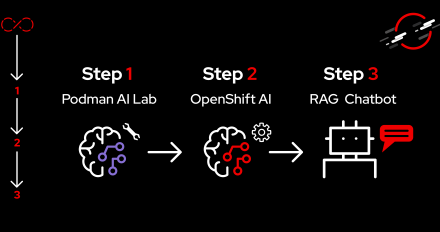

As enterprises seek to harness the power of generative AI, they often struggle with the transition from experimentation to production-ready solutions. This talk guides you through the steps needed to build an enterprise-grade generative AI application, starting with local experimentation using Podman AI Lab and finishing with a scalable, secure deployment on Red Hat OpenShift AI.

Ollama recently announced tool support and, like many popular libraries for using

Use the Stable Diffusion model to create images with Red Hat OpenShift AI running on a Red Hat OpenShift Service on AWS cluster with NVIDIA GPUs enabled.

Get an overview of explainable and responsible AI and discover how the open source TrustyAI tool helps power fair, transparent machine learning.

In this blog post, we look at how we use OpenShift AI with Ray Tune to perform

Red Hat OpenShift AI is an artificial intelligence platform that runs on top of Red Hat OpenShift and provides tools across the AI/ML lifecycle.

Red Hat OpenShift AI provides tools across the full lifecycle of AI/ML experiments and models for data scientists and developers of intelligent applications.

Learn how to prevent large language models (LLMs) from generating toxic content during training using TrustyAI Detoxify and Hugging Face SFTTrainer.

Learn how to deploy and use the Multi-Cloud Object Gateway (MCG) from Red Hat OpenShift Data Foundation to support the development and testing of applications and artificial intelligence (AI) models that require S3 object storage.

Train and deploy an AI model using OpenShift AI, then integrate it into an application running on OpenShift.