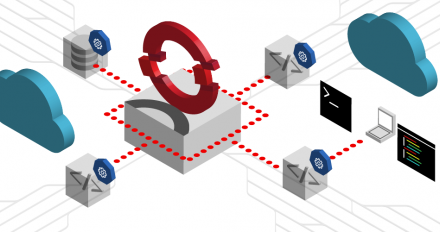

Agentic AI: Design reliable workflows across the hybrid cloud

Learn how to design agentic workflows, and how the Red Hat AI portfolio supports production-ready agentic systems across the hybrid cloud.

One conversation across Slack and email, real tickets in ServiceNow. Learn how the multichannel IT self-service agent ties them together with CloudEvents and Knative.

Explore how Red Hat OpenShift AI uses LLM-generated summaries to distill product reviews into a form users can quickly process.

Deploy an enterprise-ready RAG chatbot using OpenShift AI. This quickstart automates provisioning of components like vector databases and ingestion pipelines.

Explore the pros and cons of on-premises and cloud-based large language models (LLMs) for code assistance. Learn about specific models available with Red Hat OpenShift AI, supported IDEs, and more.

Explore the architecture and training behind the two-tower model of a product recommender built using Red Hat OpenShift AI.

Discover the self-service agent AI quickstart for automating IT processes on Red Hat OpenShift AI. Deploy, integrate with Slack and ServiceNow, and more.

Learn how to build AI-enabled applications for product recommendations, semantic product search, and automated product review summarization with OpenShift AI.

Deploy an Oracle SQLcl MCP server on an OpenShift cluster and use it with the OpenShift AI platform in this AI quickstart.

Discover the AI Observability Metric Summarizer, an intelligent, conversational tool built for Red Hat OpenShift AI environments.

This article compares the performance of llm-d, Red Hat's distributed LLM inference solution, with a traditional deployment of vLLM using naive load balancing.

Discover the advantages of using Java for AI development in regulated industries. Learn about architectural stability, performance, runtime guarantees, and more.

Whether you're just getting started with artificial intelligence or looking to deepen your knowledge, our hands-on tutorials will help you unlock the potential of AI while leveraging Red Hat's enterprise-grade solutions.

Learn how Model Context Protocol (MCP) enhances agentic AI in OpenShift AI, enabling models to call tools, services, and more from an AI application.

Learn how to deploy and test the inference capabilities of vLLM on OpenShift using GuideLLM, a specialized performance benchmarking tool.

Learn how to fine-tune a RAG model using Feast and Kubeflow Trainer. This guide covers preprocessing and scaling training on Red Hat OpenShift AI.

Learn how to implement retrieval-augmented generation (RAG) with Feast on Red Hat OpenShift AI to create highly efficient and intelligent retrieval systems.

Learn how to implement identity-based tool filtering, OAuth2 Token Exchange, and HashiCorp Vault integration for the MCP Gateway.

Optimize AI scheduling. Discover 3 workflows to automate RayCluster lifecycles using KubeRay and Kueue on Red Hat OpenShift AI 3.

Use SDG Hub to generate high-quality synthetic data for your AI models. This guide provides a full, copy-pasteable Jupyter Notebook for practitioners.

This performance analysis compares KServe's SLO-driven KEDA autoscaling approach against Knative's concurrency-based autoscaling for vLLM inference.

Learn how to deploy and manage Models-as-a-Service (MaaS) in Red Hat OpenShift AI, including rate limiting for resource protection.

This in-depth guide helps you integrate generative AI, LLMs, and machine learning into your existing Java enterprise ecosystem. Download the e-book at no cost.

Learn why prompt engineering is the most critical and accessible method for customizing large language models.

Learn how to automatically transfer AI model metadata managed by OpenShift AI into Red Hat Developer Hub’s Software Catalog.