Red Hat AI

Why Models-as-a-Service architecture is ideal for AI models

Explore the architecture of a Models-as-a-Service (MaaS) platform and how enterprises can create a secure and scalable environment for AI models. (Part 2 of 4)

Integrate vLLM inference on macOS/iOS using OpenAI APIs

Discover how to communicate with vLLM using the OpenAI spec as implemented by the SwiftOpenAI and MacPaw/OpenAI open source projects.

The hidden cost of large language models

Discover how model compression slashes LLM deployment costs. This article for technical practitioners covers quantization, pruning, distillation, and speculative decoding.

6 benefits of Models-as-a-Service for enterprises

This article introduces Models-as-a-Service (MaaS) for enterprises, outlining the challenges, benefits, key technologies, and workflows. (Part 1 of 4)

GuideLLM: Evaluate LLM deployments for real-world inference

Learn how to evaluate the performance of your LLM deployments with the open source GuideLLM toolkit to optimize cost, reliability, and user experience.

Unleashing multimodal magic with RamaLama

RamaLama's new multimodal feature integrates vision-language models with containers. Discover how it helps developers download and serve multimodal AI models.

Integrate Red Hat AI Inference Server & LangChain in agentic workflows

Integrate Red Hat AI Inference Server with LangChain to build agentic document processing workflows. This article presents a use case and Python code.

Repo at Red Hat Summit 2025

Explore Red Hat Summit 2025 with Dan Russo and Repo, the Red Hat Developer mascot!

Axolotl meets LLM Compressor: Fast, sparse, open

Discover how to deploy compressed, fine-tuned models for efficient inference with the new Axolotl and LLM Compressor integration.

How to run vLLM on CPUs with OpenShift for GPU-free inference

Learn how to run vLLM on CPUs with OpenShift using Kubernetes APIs and dive into performance experiments for LLM benchmarking in this beginner-friendly guide.

How Kafka improves agentic AI

Discover why Kafka is the foundation behind modular, scalable, and controllable AI automation.

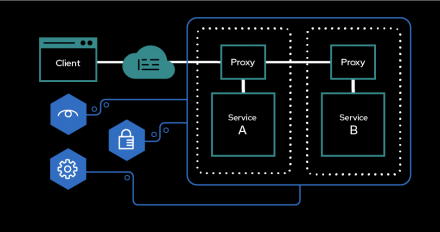

How to use service mesh to improve AI model security

Learn how to use a service mesh to secure, observe, and control AI models at scale without code changes, simplifying zero-trust deployments.

How to implement observability with Node.js and Llama Stack

Enhance your Node.js AI applications with distributed tracing. Discover how to use Jaeger and OpenTelemetry for insights into Llama Stack interactions.

Speech-to-text with Whisper and Red Hat AI Inference Server

Learn how to deploy a Whisper model on Red Hat AI Inference Server within a RHEL 9 environment using Podman containers and NVIDIA GPUs for speech recognition.

Integrate vLLM inference on macOS/iOS with Llama Stack APIs

Learn to build a chatbot leveraging vLLM for generative AI inference. This guide provides source code and steps to connect to a Llama Stack Swift SDK server.

Optimize model serving at the edge with RawDeployment mode

Deploy AI at the edge with Red Hat OpenShift AI. Learn to set up OpenShift AI, configure storage, train models, and serve using KServe's RawDeployment.

Containers and Kubernetes made easy: Deep dive into Podman Desktop and new AI capabilities

Dive into the world of containers and Kubernetes with Podman Desktop, an open source tool that empowers your container development workflow and lets you seamlessly deploy applications to local and remote Kubernetes environments. For developers, operations, and those looking to simplify building and deploying containers, Podman Desktop provides an intuitive interface compatible with container engines such as Podman, Docker, Lima, and more.

Building AI-enabled applications with Podman AI Lab

Learn about Podman AI Lab and how you can start using it today for testing and building AI-enabled applications. As an extension for Podman Desktop, the container and cloud-native tool for application developers and administrators, AI Lab is your one-stop shop for popular generative AI use cases like summarizers, chatbots, and RAG applications. In addition, you can easily download AI models from the model catalog and start them as local services on your machine. We'll cover this and more. Be sure to try out Podman AI Lab today!

Empowering apps with natural language processing using Apache Camel and LLM tools

Learn to harness the power of natural language processing by creating LLM tools with Apache Camel's low-code UI. Engage with this interactive tutorial in the Developer Sandbox for a hands-on experience.

Neural networks demo: A machine learning model

In this video, Maarten demonstrates a neural network and how it works in AI/ML models. Neural networks are a class of ML models inspired by the human brain, made up of interconnected units called neurons, or nodes. Neural networks are the foundation of many AI applications, including image recognition, speech processing, and natural language understanding.

Smarter compression: Tailoring AI with LLM Compressor in OpenShift AI

In this recording, we demonstrate how to compose model compression experiments, highlighting the benefits of advanced algorithms requiring custom data sets and how evaluation results and model artifacts can be shared with stakeholders.

How we improved AI inference on macOS Podman containers

Podman enables developers to run Linux containers on macOS within virtual machines, including GPU acceleration for improved AI inference performance.

Structured outputs in vLLM: Guiding AI responses

Learn how to control the output of vLLM's AI responses with structured outputs. Discover how to define choice lists, JSON schemas, regex, and more.

Red Hat at WeAreDevelopers World Congress 2025

Headed to WeAreDevelopers World Congress 2025? Visit the Red Hat Developer booth on-site to speak to our expert technologists.