Deploying a pod on Kubernetes

This video by Stevan Le Meur is an illustration of how to deploy a pod on Kubernetes.

In this session, we are going to conduct a live demonstration of hybrid cloud-native Java microservices, distributed across Amazon Web Services, Google Cloud Platform, and Microsoft Azure, with real-time load-balancing and fail-over.

Is your computer getting tired from running multiple kind clusters? Learn how to run remote clusters as if they were local.

Learn how to manage Kubernetes applications on Red Hat OpenShift using basic command-line and graphical tools.

Learn how to easily generate Helm charts using Dekorate, how to map properties when installing or updating your charts, and how to use Helm profiles.

Discover how Kubernetes improves developer agility by managing storage for containerized applications. (Part 1 of 3)

This second article in a series shows how to create AWS services and interact with them via Operators.

This article explains the use of controllers and Operators for AWS services, and sets up your environment for them. This is the first of two articles.

Containers without Docker | DevNation Tech Talk

DevOps Culture and Practice with OpenShift provides a roadmap for building empowered product teams within your organization.

This cheat sheet covers how to create a Kubernetes Operator in Java using Quarkus.

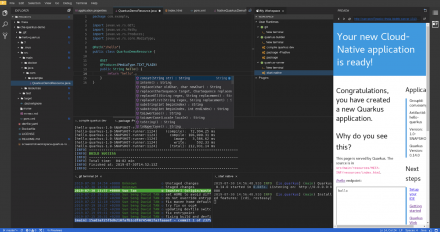

We explore the improvements to Quarkus Tools for Visual Studio Code version 1.3.0, which was released on the VS Code Marketplace to start off the new year.

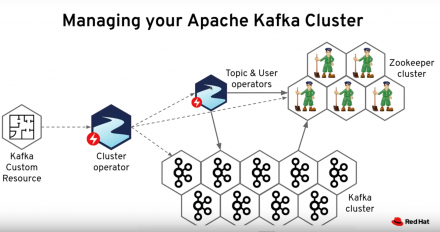

In this session, we will walk through an end-to-end demo, showing the lifecycle of an event-driven application based on Apache Kafka.

In this video, we'll show how to move your APIs into the serverless era using the super duo of Camel K and Knative.

This article will attempt to demystify the powerful and complex devfile.yaml in CodeReady Workspaces.

See new features of CodeReady Workspaces, a Red Hat OpenShift-native developer environment enabling cloud-native development for developer teams.

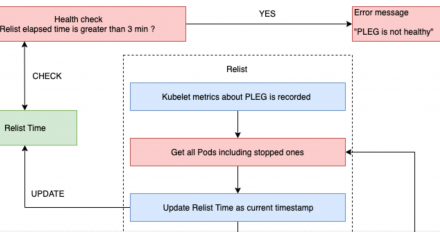

We look at the Pod Lifecycle Event Generator (PLEG) module in Kubernetes and show how to troubleshoot various issues.

What's new in OpenShift Connector: a seamless inner-loop developer experience using VS Code for Red Hat OpenShift 4.2.

We'll show how to get started with Golang Operators and the Operator SDK in this article.

Using OpenShift image streams with Kubernetes Deployments allows automatic updates without sacrificing compatibility with standard Kubernetes.

Learn about the new release of Eclipse Che 7, the Kubernetes-native IDE, that lets developers code, build, test, and run cloud-native applications.

We show how to install four very useful Kubernetes command-line tools for Linux.

Istio Pool Ejection allows you to temporarily block under- or non-performing pods from your system.

Learn about cloud-native architecture, including principles and techniques for building and deploying Java microservices via Spring Boot, WildFly Swarm, and Vert.x.

In this session, Diógenes introduces the basic concepts behind OpenShift, paying special attention to its relationship with Linux containers and Kubernetes.