Dipika Sikka

Dipika Sikka's contributions

Article

Accelerating large language models with NVFP4 quantization

Shubhra Pandit

+3

Learn about NVFP4, a 4-bit floating-point format for high-performance inference on modern GPUs that can deliver near-baseline accuracy at large scale.

Article

LLM Compressor 0.9.0: Attention quantization, MXFP4 support, and more

Kyle Sayers

+3

Explore the latest release of LLM Compressor, featuring attention quantization, MXFP4 support, AutoRound quantization modifier, and more.

Article

Run Mistral Large 3 & Ministral 3 on vLLM with Red Hat AI on Day 0: A step-by-step guide

Saša Zelenović

+6

Run the latest Mistral Large 3 and Ministral 3 models on vLLM with Red Hat AI, providing day 0 access for immediate experimentation and deployment.

Article

Speculators: Standardized, production-ready speculative decoding

Alexandre Marques

+7

Speculators standardizes speculative decoding for large language models, with a unified Hugging Face format, vLLM integration, and more.

Article

LLM Compressor 0.8.0: Extended support for Qwen3 and more

Dipika Sikka

+2

The LLM Compressor 0.8.0 release introduces quantization workflow enhancements, extended support for Qwen3 models, and improved accuracy recovery.

Article

LLM Compressor 0.7.0 release recap

Dipika Sikka

+3

LLM Compressor 0.7.0 brings Hadamard transforms for better accuracy, mixed-precision FP4/FP8, and calibration-free block quantization for efficient compression.

Article

Axolotl meets LLM Compressor: Fast, sparse, open

Rahul Tuli

+3

Discover how to deploy compressed, fine-tuned models for efficient inference with the new Axolotl and LLM Compressor integration.

Article

Optimize LLMs with LLM Compressor in Red Hat OpenShift AI

Brian Dellabetta

+1

Optimize model inference and reduce costs by applying model compression techniques such as quantization and pruning with LLM Compressor on Red Hat OpenShift AI.

Accelerating large language models with NVFP4 quantization

Learn about NVFP4, a 4-bit floating-point format for high-performance inference on modern GPUs that can deliver near-baseline accuracy at large scale.

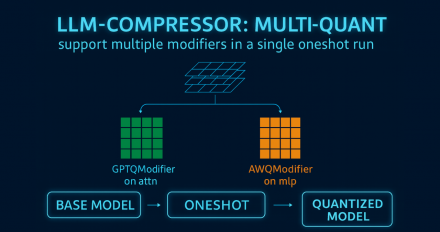

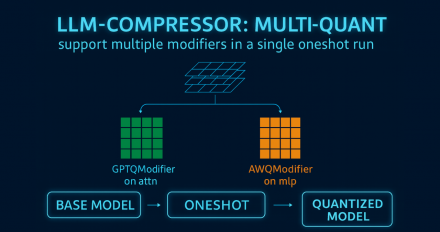

LLM Compressor 0.9.0: Attention quantization, MXFP4 support, and more

Explore the latest release of LLM Compressor, featuring attention quantization, MXFP4 support, AutoRound quantization modifier, and more.

Run Mistral Large 3 & Ministral 3 on vLLM with Red Hat AI on Day 0: A step-by-step guide

Run the latest Mistral Large 3 and Ministral 3 models on vLLM with Red Hat AI, providing day 0 access for immediate experimentation and deployment.

Speculators: Standardized, production-ready speculative decoding

Speculators standardizes speculative decoding for large language models, with a unified Hugging Face format, vLLM integration, and more.

LLM Compressor 0.8.0: Extended support for Qwen3 and more

The LLM Compressor 0.8.0 release introduces quantization workflow enhancements, extended support for Qwen3 models, and improved accuracy recovery.

LLM Compressor 0.7.0 release recap

LLM Compressor 0.7.0 brings Hadamard transforms for better accuracy, mixed-precision FP4/FP8, and calibration-free block quantization for efficient compression.

Axolotl meets LLM Compressor: Fast, sparse, open

Discover how to deploy compressed, fine-tuned models for efficient inference with the new Axolotl and LLM Compressor integration.

Optimize LLMs with LLM Compressor in Red Hat OpenShift AI

Optimize model inference and reduce costs with model compression techniques like quantization and pruning with LLM Compressor on Red Hat OpenShift AI.