In the previous articles in this series, we first covered the basics of Red Hat AMQ Streams on OpenShift and then showed how to set up Kafka Connect, a Kafka Bridge, and Kafka Mirror Maker. Here are a few key points to keep in mind before we proceed:

- AMQ Streams is based on Apache Kafka.

- AMQ Streams for the Red Hat OpenShift Container Platform is based on the Strimzi project.

- AMQ Streams on containers has multiple components, such as the Cluster Operator, Entity Operator, Mirror Maker, Kafka Connect, and Kafka Bridge.

Now that we have everything set up (or so we think), let's look at monitoring and alerting for our new environment.

Kafka Exporter

By default, a Kafka cluster does not expose all of the metrics you need to monitor it. Kafka Exporter fills part of that gap by extracting additional data from the brokers about topics, partition offsets, consumer groups, and consumer lag. This insight tells you whether consumers are reading messages as fast as producers are writing them. If they are not, the backlog of unread messages (the consumer lag) keeps growing and delays processing, so it is best to catch these issues as early as possible.
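To make the producer/consumer comparison concrete: among other series, Kafka Exporter publishes a kafka_consumergroup_lag gauge, labeled by consumergroup, topic, and partition, that reports how far a group's committed offset trails the partition's latest offset. The Python sketch below is a minimal illustration, not part of AMQ Streams. It assumes you have saved two scrapes of the exporter's Prometheus text output taken some time apart, sums the lag per consumer group and topic, and reports the pairs whose lag grew, which is the symptom of consumers falling behind producers. The function names and the group and topic names in the sample are hypothetical.

```python
import re
from collections import defaultdict

# One sample line of the Prometheus text format, e.g.
# kafka_consumergroup_lag{consumergroup="orders-app",partition="0",topic="orders"} 42
SAMPLE_RE = re.compile(r'^kafka_consumergroup_lag\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def lag_by_group_topic(exposition_text):
    """Sum per-partition consumer lag into {(consumergroup, topic): lag}."""
    totals = defaultdict(float)
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # a different metric
        labels = dict(LABEL_RE.findall(match.group("labels")))
        key = (labels.get("consumergroup", ""), labels.get("topic", ""))
        totals[key] += float(match.group("value"))
    return dict(totals)


def growing_lag(before, after):
    """Return the (group, topic) pairs whose total lag increased between two scrapes."""
    return sorted(key for key, lag in after.items() if lag > before.get(key, 0.0))


if __name__ == "__main__":
    first = """# TYPE kafka_consumergroup_lag gauge
kafka_consumergroup_lag{consumergroup="orders-app",partition="0",topic="orders"} 10
kafka_consumergroup_lag{consumergroup="orders-app",partition="1",topic="orders"} 5
kafka_consumergroup_lag{consumergroup="audit",partition="0",topic="orders"} 0
"""
    second = first.replace("} 10", "} 40")  # partition 0 fell further behind
    before, after = lag_by_group_topic(first), lag_by_group_topic(second)
    print(after)  # {('orders-app', 'orders'): 45.0, ('audit', 'orders'): 0.0}
    print(growing_lag(before, after))  # [('orders-app', 'orders')]
```

A single lag value says little on its own; comparing scrapes over time is what shows whether consumers are keeping up.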

To set up Kafka Exporter, edit the existing Kafka resource (or create a new one) and add a kafkaExporter property to its spec. For example:

```yaml
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: simple-cluster
spec:
  kafka:
    version: 2.3.0
    replicas: 5
    listeners:
      plain: {}
      tls: {}
    config:
      offsets.topic.replication.factor: 5
      transaction.state.log.replication.factor: 5
      transaction.state.log.min.isr: 2
      log.message.format.version: "2.3"
    storage:
      type: jbod
      volumes:
      - id: 0
        type: persistent-claim
        size: 5Gi
        deleteClaim: false
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 5Gi
      deleteClaim: false
  entityOperator:
    topicOperator: {}
    userOperator: {}
  kafkaExporter: {}
```

Next, apply the new changes to the existing cluster:

```shell
$ oc apply -f amq-kafka-cluster-kafka-exporter.yml
```

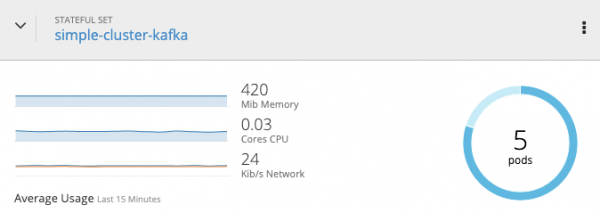

You can see the result in Figure 1:

Prometheus and Grafana

Prometheus is a monitoring and alerting toolkit that scrapes metrics from targets such as the Kafka cluster. Its built-in web interface is not well suited to building dashboards, so we use Grafana for the operational dashboards and Prometheus as their data source.

Let's start by exposing the metrics and then create the dashboard:

- Add Kafka metrics to the Kafka resource. This snippet is taken from the examples/metrics/kafka-metrics.yaml file provided with Red Hat AMQ Streams:

```shell
$ oc project amq-streams
$ oc edit kafka simple-cluster
```

```yaml
# Add the following under spec.kafka
metrics:
  # Inspired by config from Kafka 2.0.0 example rules:
  # https://github.com/prometheus/jmx_exporter/blob/master/example_configs/kafka-2_0_0.yml
  lowercaseOutputName: true
  rules:
  # Special cases and very specific rules
  - pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), topic=(.+), partition=(.*)><>Value
    name: kafka_server_$1_$2
    type: GAUGE
    labels:
      clientId: "$3"
      topic: "$4"
      partition: "$5"
  - pattern: kafka.server<type=(.+), name=(.+), clientId=(.+), brokerHost=(.+), brokerPort=(.+)><>Value
    name: kafka_server_$1_$2
    type: GAUGE
    labels:
      clientId: "$3"
      broker: "$4:$5"
  # Some percent metrics use MeanRate attribute
  # Ex) kafka.server<type=(KafkaRequestHandlerPool), name=(RequestHandlerAvgIdlePercent)><>MeanRate
  - pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*><>MeanRate
    name: kafka_$1_$2_$3_percent
    type: GAUGE
  # Generic gauges for percents
  - pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*><>Value
    name: kafka_$1_$2_$3_percent
    type: GAUGE
  - pattern: kafka.(\w+)<type=(.+), name=(.+)Percent\w*, (.+)=(.+)><>Value
    name: kafka_$1_$2_$3_percent
    type: GAUGE
    labels:
      "$4": "$5"
  # Generic per-second counters with 0-2 key/value pairs
  - pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+), (.+)=(.+)><>Count
    name: kafka_$1_$2_$3_total
    type: COUNTER
    labels:
      "$4": "$5"
      "$6": "$7"
  - pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+)><>Count
    name: kafka_$1_$2_$3_total
    type: COUNTER
    labels:
      "$4": "$5"
  - pattern: kafka.(\w+)<type=(.+), name=(.+)PerSec\w*><>Count
    name: kafka_$1_$2_$3_total
    type: COUNTER
  # Generic gauges with 0-2 key/value pairs
  - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>Value
    name: kafka_$1_$2_$3
    type: GAUGE
    labels:
      "$4": "$5"
      "$6": "$7"
  - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+)><>Value
    name: kafka_$1_$2_$3
    type: GAUGE
    labels:
      "$4": "$5"
  - pattern: kafka.(\w+)<type=(.+), name=(.+)><>Value
    name: kafka_$1_$2_$3
    type: GAUGE
  # Emulate Prometheus 'Summary' metrics for the exported 'Histogram's.
  # Note that these are missing the '_sum' metric!
  - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+), (.+)=(.+)><>Count
    name: kafka_$1_$2_$3_count
    type: COUNTER
    labels:
      "$4": "$5"
      "$6": "$7"
  - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.*), (.+)=(.+)><>(\d+)thPercentile
    name: kafka_$1_$2_$3
    type: GAUGE
    labels:
      "$4": "$5"
      "$6": "$7"
      quantile: "0.$8"
  - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.+)><>Count
    name: kafka_$1_$2_$3_count
    type: COUNTER
    labels:
      "$4": "$5"
  - pattern: kafka.(\w+)<type=(.+), name=(.+), (.+)=(.*)><>(\d+)thPercentile
    name: kafka_$1_$2_$3
    type: GAUGE
    labels:
      "$4": "$5"
      quantile: "0.$6"
  - pattern: kafka.(\w+)<type=(.+), name=(.+)><>Count
    name: kafka_$1_$2_$3_count
    type: COUNTER
  - pattern: kafka.(\w+)<type=(.+), name=(.+)><>(\d+)thPercentile
    name: kafka_$1_$2_$3
    type: GAUGE
    labels:
      quantile: "0.$4"
```

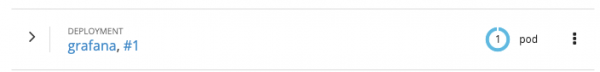

This process will restart the simple-cluster-kafka pods one by one, as shown in Figure 2:
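Each rule above pairs a regular expression, matched against a JMX MBean name and attribute written roughly as domain<key=value, ...><>Attribute, with a template that builds the Prometheus metric name (and labels) from the captured groups. Rules are tried in order and the first match wins; lowercaseOutputName: true then lowercases the name. The Python sketch below reproduces only this name-mapping step for two of the per-second counter rules, so you can predict which series to look for in Prometheus. It is a simplified model, not the JMX exporter itself: it uses a full-string match, ignores the exporter's other options, and the function names and sample MBean names are illustrative.

```python
import re

# Two of the per-second counter rules from the metrics configuration above,
# kept in the same relative order (the first matching rule wins).
RULES = [
    {
        "pattern": r"kafka.(\w+)<type=(.+), name=(.+)PerSec\w*, (.+)=(.+)><>Count",
        "name": "kafka_$1_$2_$3_total",
        "labels": {"$4": "$5"},
    },
    {
        "pattern": r"kafka.(\w+)<type=(.+), name=(.+)PerSec\w*><>Count",
        "name": "kafka_$1_$2_$3_total",
        "labels": {},
    },
]


def substitute(template, match):
    """Replace $1, $2, ... in a template with the corresponding regex groups."""
    return re.sub(r"\$(\d+)", lambda ref: match.group(int(ref.group(1))), template)


def to_prometheus(mbean_attribute, rules=RULES, lowercase_output_name=True):
    """Map 'domain<props><>Attribute' to (metric_name, labels), or None if no rule matches."""
    for rule in rules:
        match = re.fullmatch(rule["pattern"], mbean_attribute)
        if match is None:
            continue
        name = substitute(rule["name"], match)
        if lowercase_output_name:
            name = name.lower()
        labels = {substitute(k, match): substitute(v, match) for k, v in rule["labels"].items()}
        return name, labels
    return None


if __name__ == "__main__":
    print(to_prometheus("kafka.server<type=BrokerTopicMetrics, name=MessagesInPerSec><>Count"))
    # ('kafka_server_brokertopicmetrics_messagesin_total', {})
    print(to_prometheus("kafka.server<type=BrokerTopicMetrics, name=BytesInPerSec, topic=orders><>Count"))
    # ('kafka_server_brokertopicmetrics_bytesin_total', {'topic': 'orders'})
```

Note that the broker-wide counter and the per-topic counters end up under the same metric name, distinguished only by the topic label, which matters when you sum them in a query.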

- Confirm that Prometheus is running in the cluster:

```shell
$ oc get pod -n openshift-monitoring | grep prometheus
prometheus-k8s-0                       4/4   Running   140   3d
prometheus-k8s-1                       4/4   Running   140   3d
prometheus-operator-687784bd4b-56vsk   1/1   Running   127   3d
```

If the command returns no Prometheus pods, ask your infrastructure team where Prometheus is installed. If it is not installed at all, follow the Prometheus installation steps in the documentation.
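Grafana talks to Prometheus over the Prometheus HTTP API, and you can call that API directly to check that data is arriving. The following Python sketch sends an instant query to the /api/v1/query endpoint with HTTP basic auth and flattens the vector result into a dictionary keyed by label set. The base URL, user, password, and PromQL expression are placeholders, and the example query assumes this Prometheus instance is scraping Kafka Exporter. Setting verify_tls=False mirrors the Skip TLS Verify option used for the Grafana data source in this article; prefer proper certificates where you can.

```python
import base64
import json
import ssl
import urllib.parse
import urllib.request


def instant_query_url(base_url, promql):
    """Build the URL for a Prometheus instant query (GET /api/v1/query)."""
    return base_url.rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(response):
    """Turn a decoded /api/v1/query response of resultType 'vector' into {labels: value}."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    result = {}
    for sample in response["data"]["result"]:
        labels = tuple(sorted(sample["metric"].items()))
        _timestamp, value = sample["value"]  # [unix_time, "value as a string"]
        result[labels] = float(value)
    return result


def query(base_url, promql, user, password, verify_tls=True):
    """Run an instant query with HTTP basic auth and return parse_vector() output."""
    request = urllib.request.Request(instant_query_url(base_url, promql))
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request.add_header("Authorization", f"Basic {token}")
    context = ssl.create_default_context()
    if not verify_tls:  # same effect as "Skip TLS Verify"; avoid outside of testing
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return parse_vector(json.load(response))
```

For example, query(base_url, "sum by (consumergroup, topic) (kafka_consumergroup_lag)", user, password, verify_tls=False) would return the total lag per consumer group and topic. The service URL used for the Grafana data source (https://prometheus-k8s.openshift-monitoring.svc:9091) resolves only inside the cluster, so run such a check from a pod there or point it at an address you can reach.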

- Install Grafana:

```shell
$ oc create -f https://raw.githubusercontent.com/strimzi/strimzi-kafka-operator/master/metrics/examples/grafana/grafana.yaml
```

You can see the results in Figure 3:

- Create a route for the Grafana service:

```shell
$ oc expose svc grafana --name=grafana-route
```

- Find the Grafana route and log in to the admin console (shown in Figure 4):

```shell
$ oc get route | grep grafana
grafana-route   grafana-route-amq-streams.apps.redhat.demo.com   grafana
```

Note: The default username and password are both admin. When you log in for the first time, Grafana asks you to change the password.

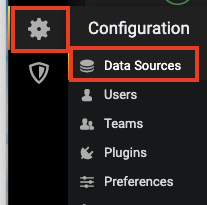

- Add Prometheus as a data source: click the settings icon, select Data Sources, and then select Prometheus, as shown in Figure 5:

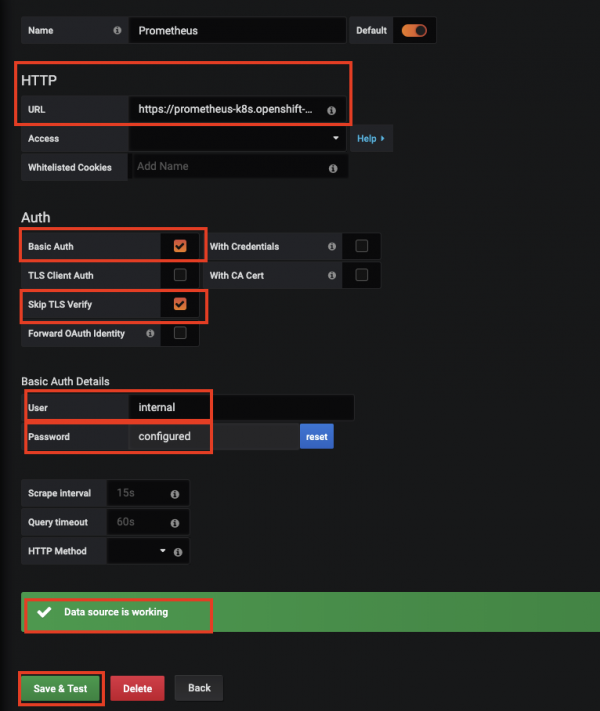

- Enter the Prometheus details and save them:
  - URL: https://prometheus-k8s.openshift-monitoring.svc:9091
  - Basic Auth: Enabled
  - Skip TLS Verify: Enabled
  - User: Internal
  - Password: Get this from your cluster administrator.

Before you continue, make sure the message "Data source is working" appears at the bottom of the page, as shown in Figure 6:

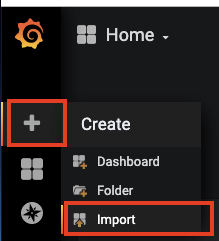
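If you would rather script this step than use the UI, Grafana's HTTP API accepts the same settings as a JSON document sent with POST to /api/datasources. The Python sketch below builds that document from the values listed above and posts it using basic auth as a Grafana admin. The field names (basicAuthUser, secureJsonData.basicAuthPassword, jsonData.tlsSkipVerify) follow Grafana's data source API, but older Grafana releases handle the password field differently, so check them against the version you deployed. The function names, the Grafana URL, and all credentials are placeholders.

```python
import base64
import json
import urllib.request


def prometheus_datasource(url, user, password, name="Prometheus", skip_tls_verify=True):
    """Build a Grafana data source definition that mirrors the UI settings above."""
    return {
        "name": name,
        "type": "prometheus",
        "url": url,
        "access": "proxy",  # Grafana's server, not the browser, calls Prometheus
        "basicAuth": True,
        "basicAuthUser": user,
        "secureJsonData": {"basicAuthPassword": password},
        "jsonData": {"tlsSkipVerify": skip_tls_verify},
    }


def create_datasource(grafana_url, admin_user, admin_password, datasource):
    """POST the definition to Grafana's /api/datasources endpoint; return the JSON reply."""
    token = base64.b64encode(f"{admin_user}:{admin_password}".encode()).decode()
    request = urllib.request.Request(
        grafana_url.rstrip("/") + "/api/datasources",
        data=json.dumps(datasource).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


if __name__ == "__main__":
    # Placeholder credentials; use the values from your cluster administrator.
    definition = prometheus_datasource(
        "https://prometheus-k8s.openshift-monitoring.svc:9091", "<user>", "<password>"
    )
    print(json.dumps(definition, indent=2))
    # create_datasource("http://<grafana-route-host>", "admin", "<admin-password>", definition)
```

Keeping the payload builder separate from the HTTP call makes it easy to review the exact settings before anything is sent to Grafana.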

- Open the Import options by selecting + and then Import, as shown in Figure 7:

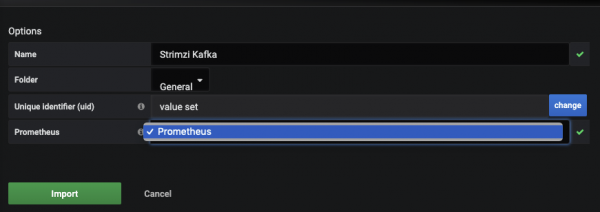

- Click Upload .json file and choose the strimzi-kafka.json file from examples/metrics/grafana-dashboards/strimzi-kafka.json.

- Select Prometheus as the data source, and then click Import.

You should now see the sample Grafana dashboard.
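The import can be scripted as well. Dashboards exported from Grafana usually declare their data source placeholders (for example DS_PROMETHEUS) in a top-level __inputs list, and Grafana's POST /api/dashboards/import endpoint accepts the dashboard together with an inputs list that binds each placeholder to an existing data source. The Python sketch below builds that request body. It assumes strimzi-kafka.json follows this convention and that your data source is named Prometheus, so verify both against your copy of the file and your Grafana version; the function names are hypothetical.

```python
import json


def import_payload(dashboard, datasource_name="Prometheus", overwrite=True):
    """Build a body for Grafana's POST /api/dashboards/import.

    Every data source placeholder declared in the dashboard's __inputs list
    is bound to the existing data source called datasource_name.
    """
    inputs = [
        {
            "name": item["name"],
            "type": "datasource",
            "pluginId": item.get("pluginId", "prometheus"),
            "value": datasource_name,
        }
        for item in dashboard.get("__inputs", [])
        if item.get("type") == "datasource"
    ]
    return {"dashboard": dashboard, "overwrite": overwrite, "inputs": inputs}


def load_payload(path, datasource_name="Prometheus"):
    """Read an exported dashboard JSON file (e.g. strimzi-kafka.json) and wrap it for import."""
    with open(path, encoding="utf-8") as handle:
        return import_payload(json.load(handle), datasource_name)
```

Send the returned dictionary, serialized as JSON, as the body of a POST request to /api/dashboards/import on your Grafana route, authenticated as an admin user.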

Conclusion

In this article, we explored tools that can help us monitor Red Hat AMQ Streams. This wraps up our series, in which we covered:

- ZooKeeper, Kafka, and Entity Operator creation.

- Kafka Connect, Kafka Bridge, and Mirror Maker.

- Monitoring and admin (this article).

Hopefully, you now have a better understanding of how to run AMQ Streams in a container ecosystem. Work through our examples and see the results for yourself.