How to store large amounts of data in a program

We explain how to embed large data files directly in an executable program, so that the data is already there when the program runs.
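
One common way to do this is to convert the data file into C source at build time. The bytes become a `const unsigned char` array, which the compiler places in the program's read-only data. This is a minimal sketch of that approach, not necessarily the technique the rest of this article uses. The helper names `to_c_identifier`, `bytes_to_c_source` and `embed_file`, and the `<name>_len` naming convention, are hypothetical. They are loosely modeled on the output of `xxd -i`.

```python
"""Hypothetical build-time helper: convert a binary data file into C source
that embeds its bytes as a const array (similar in spirit to `xxd -i`)."""

import os
import re


def to_c_identifier(name: str) -> str:
    """Turn a file name such as 'data.bin' into a valid C identifier."""
    # Characters like '.', '-' or spaces are not allowed in C identifiers.
    ident = re.sub(r"[^0-9A-Za-z_]", "_", name)
    # A C identifier must not be empty or start with a digit.
    if not ident or ident[0].isdigit():
        ident = "_" + ident
    return ident


def bytes_to_c_source(data: bytes, symbol: str, per_line: int = 12) -> str:
    """Return C source defining `symbol` (the bytes) and `symbol_len`."""
    # C before C23 forbids an empty initializer list, so emit one
    # placeholder byte for empty input; `symbol_len` still reports 0.
    body = data if data else b"\x00"
    lines = ["#include <stddef.h>", "", f"const unsigned char {symbol}[] = {{"]
    for i in range(0, len(body), per_line):
        chunk = body[i:i + per_line]
        # A trailing comma after the last element is legal in C initializers.
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const size_t {symbol}_len = {len(data)};")
    return "\n".join(lines) + "\n"


def embed_file(in_path: str, out_path: str) -> str:
    """Read `in_path`, write C source for it to `out_path`, return the symbol."""
    with open(in_path, "rb") as f:
        data = f.read()
    symbol = to_c_identifier(os.path.basename(in_path))
    with open(out_path, "w") as f:
        f.write(bytes_to_c_source(data, symbol))
    return symbol
```

As a build step, `embed_file("data.bin", "data_bin.c")` produces `data_bin.c`. A C program can link against that file after declaring `extern const unsigned char data_bin[];` and `extern const size_t data_bin_len;`. It can then read the bytes directly, with no file I/O at run time. This works because file-scope `const` objects have external linkage in C, though not in C++. For very large inputs, the generated source grows to roughly six times the size of the data and can be slow to compile. Assembler-level alternatives such as the GNU assembler's `.incbin` directive avoid that intermediate step.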

This presentation will cover two projects from sig-big-data: Apache Spark on Kubernetes and Apache Airflow on Kubernetes. We will give an overview of the current state and present the roadmap of both projects, and give attendees opportunities to ask questions and provide feedback on roadmaps.

Video of Kubecon 2018, explore the various integrations that have enabled Kubeflow to quickly emerge as the de-facto machine learning toolkit for Kubernetes.

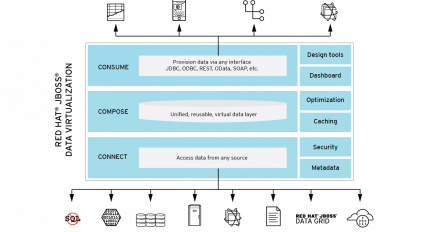

Integrate Cloudera's Apache Impala implementation as a Data Source in Red Hat's JBoss Data Virtualization. The goal of this post is to import data from a Cloudera Impala instance, manipulate it and expose that data as a data service