The Konveyor community has developed Konveyor AI (Kai), a tool that uses generative AI to accelerate application modernization. Kai integrates large language models (LLMs) with static code analysis to facilitate code modifications within a developer's IDE, helping teams move applications to modern programming languages and frameworks efficiently.

Using a retrieval-augmented generation (RAG) approach, Kai enhances LLM outputs with historical code changes and analysis data to provide context-specific guidance. This method is model agnostic and does not require model fine-tuning, which makes Kai a versatile tool for large-scale modernization projects. We demonstrate it by updating a Java EE application to Quarkus using a Visual Studio Code (VS Code) plug-in. This approach can help organizations reduce the time and cost of modernization at scale. A developer sees a list of issues in their IDE that must be addressed to migrate to a new technology, and Kai works with an LLM to generate the required source code changes.

What is Konveyor?

Konveyor is an upstream Cloud Native Computing Foundation (CNCF) sandbox project that helps organizations manage large-scale modernization engagements for their entire portfolio of applications. Much of the work in Konveyor is centered on surfacing information about legacy applications to empower enterprise architects to make better-informed decisions (see Figure 1).

One way Konveyor surfaces information is through static code analysis using a tool called analyzer-lsp. This tool enables Konveyor to analyze code written in various languages and technologies. It does so by using the Language Server Protocol (LSP), language providers, and analysis rules, which include both community-contributed rules and the option of custom rules an organization creates for their specific components.

Through static code analysis, an organization can identify potential areas of concern in a given application. This report, for example, examines issues that should be considered when migrating a sample Java EE application (coolstore) to Quarkus.

What is Konveyor AI (Kai)?

Konveyor AI is a Konveyor component that implements a retrieval-augmented generation (RAG) pattern applied to the application modernization domain. Kai enables an organization to use an LLM of their choice and augment the model's knowledge by gathering related information to aid in modernization tasks, thereby avoiding fine-tuning or training the model.

The typical RAG pattern involves gathering relevant information about a given question and bundling it with the request to an LLM. This way, an LLM can potentially answer questions it hasn’t seen in training. It is a popular approach to making an LLM more powerful without fine-tuning or training on new data.
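The pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not Kai's implementation: the word-overlap ranking in `retrieve`, the prompt wording, and the `llm` callable are all assumptions made for the example, and a real system would typically use a proper search index or embeddings.

```python
from typing import Callable, List


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return up to top_k documents that share the most words with the question.

    Word overlap is a stand-in (an assumption) for a real retrieval step.
    """
    question_words = set(question.lower().split())
    scored = [
        (len(question_words & set(doc.lower().split())), doc) for doc in documents
    ]
    # Drop documents with no overlap, then rank the rest by overlap count.
    relevant = [(score, doc) for score, doc in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_prompt(question: str, context: List[str]) -> str:
    """Bundle the retrieved context with the question (hypothetical wording)."""
    context_block = "\n".join(f"- {item}" for item in context)
    return (
        "Use the context below to answer.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\n"
    )


def answer(question: str, documents: List[str], llm: Callable[[str], str]) -> str:
    """Retrieve context, build the prompt, and hand it to any LLM callable."""
    return llm(build_prompt(question, retrieve(question, documents)))
```

Because `llm` is just a callable that takes a prompt and returns text, any model can be plugged in without changing the retrieval step.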

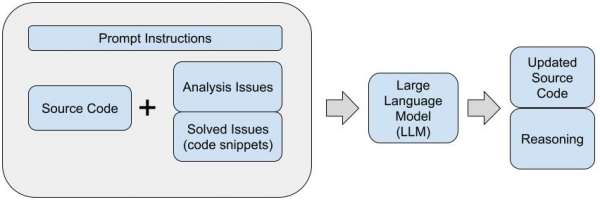

Kai uses this RAG approach to provide the LLM with two types of information found within a Konveyor instance, as shown in Figure 2:

- Analysis information: Static code analysis information with hints of how to solve an issue.

- Solved issues: Code snippets of how similar problems were solved in the past by this organization.

RAG approach: Analysis information

Analysis information from analyzer-lsp identifies specific issues that should be considered when migrating an application to a new technology.

This information takes the form of what we call an "incident." An incident includes:

- The file path of the impacted file.

- The line number of the incident.

- A message that describes the issue and potentially a hint about how to address it.
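As a rough sketch, an incident with these three fields could be modeled as a small Python data class. The class name, the `from_dict` helper, and the `summary` method are hypothetical and not part of Konveyor. The raw keys `uri`, `message`, and `lineNumber` follow the YAML field names used in Konveyor's raw output, and the file path in the usage note is made up.

```python
from dataclasses import dataclass
from typing import Any, Mapping


@dataclass(frozen=True)
class Incident:
    """One analysis finding: where it is and what it says."""

    uri: str          # file path of the impacted file
    line_number: int  # line number of the incident
    message: str      # description of the issue, possibly with a hint

    @classmethod
    def from_dict(cls, raw: Mapping[str, Any]) -> "Incident":
        """Build an Incident from a mapping with uri, message, lineNumber keys."""
        return cls(
            uri=str(raw["uri"]),
            line_number=int(raw["lineNumber"]),
            message=str(raw["message"]),
        )

    def summary(self) -> str:
        """One-line summary using only the first line of the message."""
        lines = self.message.strip().splitlines()
        first_line = lines[0] if lines else ""
        return f"{self.uri}:{self.line_number}: {first_line}"
```

For example, `Incident.from_dict({"uri": "file:///example/Service.java", "message": "Replace the JMS Topic.", "lineNumber": 60}).summary()` yields a single line that an IDE issue list could display.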

Konveyor provides a web UI-formatted report that is easier for end users to consume, and it also provides the raw data as a YAML file.

We can look at the raw data below for an example of a Quarkus rule that tells us we are using a JMS Topic that needs to be replaced. When we include this information in the prompt to an LLM, the LLM knows more precisely what we want to change, which improves the results over a more naive approach of simply asking the LLM “Help me migrate this file to Quarkus” with no context. This technique becomes more powerful as we consider the custom rules an organization creates for its custom frameworks.

The following incident comes from the rule “JMS' Topic must be replaced with an Emitter” (ruleID: jms-to-reactive-quarkus-00040):

    incidents:
    - uri: file:///src/main/....../service/InventoryNotificationMDB.java
      message: "JMS `Topic`s should be replaced with Micrometer `Emitter`s feeding a Channel. See the following example of migrating\n a Topic to an Emitter:\n \n Before:\n ```\n @Resource(lookup = \"java:/topic/HELLOWORLDMDBTopic\")\n private Topic topic;\n ```\n \n After:\n ```\n @Inject\n @Channel(\"HELLOWORLDMDBTopic\")\n Emitter<String> topicEmitter;\n ```"
      lineNumber: 60

The example above shows the analysis information. The RAG approach also draws on "solved issues," which are code snippets of similar problems that have already been solved.

Konveyor’s Hub component provides a view of an organization's entire application portfolio, including a history of analysis information as applications have been migrated and problems have been solved. Kai can draw on this history, together with the commits in each application’s Git repository, to form code snippets we call "solved issues." These snippets potentially give the LLM additional information about how the organization has addressed an analysis issue in the past.

The inclusion of "solved issues" is optional; Kai works without them. However, they become a powerful addition to the approach as organizations proceed with large-scale modernization engagements in which hundreds to thousands of applications are migrated to new technologies.
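To make the two inputs concrete, here is a minimal sketch of how an incident and optional solved issues might be combined into a single prompt. It is an illustration under stated assumptions, not Kai's actual prompt or data model: the `Incident` and `SolvedIssue` classes, the `build_migration_prompt` function, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Incident:
    """Analysis information for one issue (hypothetical model)."""

    uri: str
    line_number: int
    message: str


@dataclass(frozen=True)
class SolvedIssue:
    """Before/after snippet of a similar, already solved problem (hypothetical)."""

    before: str
    after: str


def build_migration_prompt(
    source_code: str,
    incident: Incident,
    solved_issues: Sequence[SolvedIssue] = (),
) -> str:
    """Assemble a prompt from the source file, the incident, and optional examples."""
    parts = [
        "Update the file below to resolve the reported issue.",
        f"File: {incident.uri}",
        f"Line: {incident.line_number}",
        f"Issue: {incident.message}",
    ]
    # Solved issues are optional: with none supplied, the prompt simply omits them.
    for index, solved in enumerate(solved_issues, start=1):
        parts.append(f"Solved example {index}, before:\n{solved.before}")
        parts.append(f"Solved example {index}, after:\n{solved.after}")
    parts.append(f"Source:\n{source_code}")
    return "\n\n".join(parts)
```

Keeping solved issues as an optional argument mirrors the point above: the prompt is still useful with analysis information alone, and grows richer as an organization accumulates solved examples.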

Kai demonstration

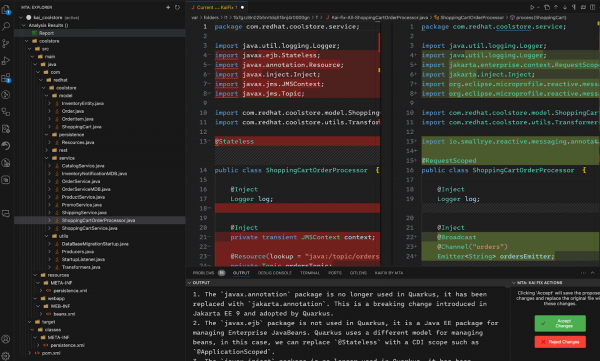

Our demonstration uses Kai with an IDE plug-in for VS Code to help migrate a traditional Java EE application, coolstore, to Quarkus. In the IDE, we can run Konveyor’s static code analysis, view the identified issues, and then send requests to Kai to generate fixes for them (see Figure 3).

Recap of Konveyor AI (Kai)

Kai:

- Works with an LLM to help update a source code file to a new technology.

  - In the above demonstration, we focused on migrating a legacy Java EE application to Quarkus, yet the approach is independent of any specific technology. It is compatible with any languages and rules Konveyor knows about, assuming the chosen LLM also knows those languages.

- Uses a RAG approach based on:

  - Static code analysis information to identify incidents to fix, along with potential hints about how to address them.

  - [optional] Solved code snippets that show how the organization has solved a similar problem in the past.

- Is model agnostic.

  - Users can bring a model of their choice to run against.

  - Kai can tweak prompts for various models to conform to recommended model-specific prompting strategies.

- Does not require fine-tuning a model.

- Is an early project; we are working towards an alpha release in the summer.

Next steps

If you would like to learn more about Kai, check out our in-depth technical deep dive at konveyor.io.

Consider joining the Konveyor community.

We host bi-weekly community calls that are available on YouTube; we would love to see you there!