With Red Hat OpenStack Services on OpenShift (RHOSO), service providers can scale faster and make better use of their resources. By integrating Kubernetes with OpenStack, organizations gain improved resource management and scalability, greater flexibility across the hybrid cloud, simpler development and DevOps practices, and more.

In this article, we’ll cover some of the finer points of OpenShift for OpenStack admins. This includes the three Red Hat OpenShift Container Platform Operators that RHOSO requires: cert-manager, MetalLB, and NMState.

What is an Operator?

An Operator is a method of packaging, deploying, and managing a Kubernetes/OpenShift application. An OpenShift/Kubernetes application is both deployed on OpenShift/Kubernetes and managed using the OpenShift/Kubernetes APIs and oc or kubectl tooling. Operators implement and automate common tasks related to an application's lifecycle, such as deployment, scaling, and backup/restore, by extending Kubernetes's capabilities.

Operators watch for resource changes in an OpenShift/Kubernetes cluster and take specific, pre-programmed actions to achieve or maintain a desired state. They use Custom Resource Definitions (CRDs) to introduce new resource types. They then use Custom Resources (CRs), the instances of those types, to represent a specific application or service configuration. The Operator reacts to changes in these resources, and to changes in their status, to manage the application.
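The minimal sketch below shows how a CRD and a CR relate. The `Database` kind, the `example.com` group, and the `demo` namespace are hypothetical and are not part of RHOSO. The CRD registers the new type. The CR that follows is one instance of that type, and its `spec` states the desired state that a matching Operator would reconcile.

```yaml
# Hypothetical example: a CRD that adds a "Database" resource type to the API.
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: databases.example.com   # must be <plural>.<group>
spec:
  group: example.com
  scope: Namespaced
  names:
    kind: Database
    plural: databases
    singular: database
  versions:
    - name: v1
      served: true               # this version is exposed by the API server
      storage: true              # objects are persisted in this version
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                replicas:
                  type: integer
                version:
                  type: string
---
# A custom resource (CR): one instance of the new type, declaring the desired state.
apiVersion: example.com/v1
kind: Database
metadata:
  name: orders-db                # hypothetical name
  namespace: demo                # hypothetical namespace
spec:
  replicas: 3
  version: "15"
```

A hypothetical Database Operator would watch `Database` objects and keep adjusting the real deployment until it matches `spec`. It does not react to individual edit events.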

The concept of an Operator was introduced by CoreOS and is built on three key principles:

- Application-specific knowledge encoded into software: Operators know how to deploy, configure, manage, and troubleshoot the applications they manage. This knowledge, typically acquired by human operators, is codified into the software.

- Declarative application management: Users interact with Operators through Kubernetes APIs and declarative specifications, using tools like kubectl and YAML or JSON manifests to manage applications.

- Level-based, not edge-based logic: Operators take actions to reconcile the current state of the application with the desired state expressed by the user, rather than responding to discrete events. This approach is more resilient and adaptive to changes in the environment.

Operators are particularly useful for stateful applications that require complex setup, scaling, configuration, and maintenance routines. They help to automate the operational complexity that would otherwise require manual intervention, making these tasks more manageable, reliable, and repeatable within the OpenShift/Kubernetes ecosystem.

For more information, see What are Red Hat OpenShift Operators?

OpenShift Container Platform prerequisites

On a base OpenShift Container Platform installation, RHOSO requires three additional Operators:

- cert-manager

- MetalLB

- NMstate
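The following sketch shows one way to add one of these Operators. It installs the MetalLB Operator through Operator Lifecycle Manager (OLM) using a Namespace, an OperatorGroup, and a Subscription. The update channel and catalog source are assumptions based on a typical OpenShift setup, so check OperatorHub in your cluster for the values that match your version. The cert-manager and NMState Operators follow the same OLM pattern, each with its own package name and namespace.

```yaml
# Sketch: installing the MetalLB Operator through OLM.
# The channel and catalog source below are assumptions; verify them in OperatorHub.
apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: metallb-operator
  namespace: metallb-system
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: metallb-operator-sub
  namespace: metallb-system
spec:
  channel: stable                        # assumed update channel
  name: metallb-operator                 # package name in the catalog
  source: redhat-operators               # catalog source that provides the package
  sourceNamespace: openshift-marketplace # namespace of that catalog source
```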

Cert-manager

cert-manager is a Kubernetes add-on that automates the issuance and management of TLS certificates within Kubernetes clusters. It simplifies obtaining, renewing, and using SSL/TLS certificates from various certificate authorities (CAs), including public CAs like Let's Encrypt as well as private CAs. With cert-manager, users can serve their applications securely over HTTPS without manually managing certificate lifecycles.

Key features of cert-manager include:

- Automated certificate management: Automatically issues and renews certificates based on Kubernetes resources and annotations. It handles the lifecycle of certificates, ensuring they are valid and up-to-date.

- Support for multiple issuers: Works with various CAs, including Let's Encrypt for free, automated certificates, as well as self-signed certificates and private CAs for more controlled environments. It allows for defining different issuers according to specific needs.

- Integration with DNS and HTTP: Automates the ACME (Automated Certificate Management Environment) challenge process required when issuing certificates from ACME CAs such as Let's Encrypt, supporting both the DNS01 and HTTP01 challenge mechanisms.

- Custom Resource Definitions (CRDs): Extends the Kubernetes API with custom resources for managing certificates (Certificate), issuers (Issuer and ClusterIssuer), and more, allowing for declarative configuration of certificates and their properties.

- Secures Ingress resources: Easily secures Kubernetes Ingress resources by automatically obtaining and renewing certificates and applying them to Ingresses for secure HTTP traffic.
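The minimal sketch below shows the declarative flow. An Issuer signs certificates itself, and a Certificate asks cert-manager to issue a certificate, store it in a Secret, and renew it. The resource names, the `openstack` namespace, and the DNS name are hypothetical. A production setup would typically point `issuerRef` at a CA or ACME issuer instead of a self-signed one.

```yaml
# Sketch only: names, namespace, and DNS names are hypothetical.
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: selfsigned-issuer
  namespace: openstack            # assumed namespace
spec:
  selfSigned: {}                  # issuer that signs certificates with their own key
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: example-service-cert
  namespace: openstack
spec:
  secretName: example-service-tls # cert-manager writes the key pair into this Secret
  duration: 2160h                 # requested lifetime: 90 days
  renewBefore: 360h               # renew 15 days before expiry
  commonName: example.openstack.svc
  dnsNames:
    - example.openstack.svc
  issuerRef:
    name: selfsigned-issuer       # refers to the Issuer above
    kind: Issuer
```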

cert-manager is designed to be used in environments where automated certificate provisioning is essential for operational efficiency and security compliance, making it a critical component for securing communication within OpenShift/Kubernetes clusters.

MetalLB

MetalLB is a load balancer implementation for bare metal Kubernetes clusters, providing a network load balancing solution that integrates with standard network equipment. In environments where external load balancers like those provided by cloud providers (AWS ELB, Google Cloud Load Balancer, etc.) are unavailable, MetalLB fills that gap by offering load-balancing services.

Kubernetes does not include a built-in network load balancer for bare metal clusters (non-cloud environments). When you create a Service of type LoadBalancer in a Kubernetes cluster running in a cloud environment, the cloud provider will automatically provision an external load balancer that directs traffic to the Service. However, in a bare metal cluster, without MetalLB, a Service of type LoadBalancer would remain in the "pending" state indefinitely, because there would be no default implementation to handle these types of Services.
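For reference, this is the kind of Service the paragraph above describes. The name, namespace, labels, and ports are hypothetical. On a bare metal cluster with no load balancer implementation, `oc get service example-web` shows the external IP as `<pending>`. Once MetalLB is running with an address pool configured, it assigns an address from that pool.

```yaml
# Hypothetical Service; name, labels, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: example-web
  namespace: demo
spec:
  type: LoadBalancer      # needs an implementation: a cloud provider or MetalLB
  selector:
    app: example-web      # traffic is sent to Pods carrying this label
  ports:
    - name: http
      protocol: TCP
      port: 80            # port exposed on the external IP
      targetPort: 8080    # port the Pods listen on
```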

MetalLB solves this by providing two major modes of operation:

- Layer 2 Mode: In this mode, MetalLB answers Address Resolution Protocol (ARP) requests (or IPv6 Neighbor Discovery requests) for the Service's IP address from one node, essentially "claiming" that IP address on the local network. Traffic sent to that IP address arrives at the claiming node, and kube-proxy then forwards it to one of the Service's Pods. This mode is simpler, but it has two constraints. The nodes must share an L2 network segment with the clients, and all traffic for a given Service IP enters the cluster through a single node.

- BGP (Border Gateway Protocol) Mode: MetalLB can also integrate with BGP-capable routers in your network. In this mode, MetalLB advertises the Service IP addresses to the routers over BGP, so the routers can route traffic to the cluster dynamically. This mode is more complex but also more powerful, and it supports more scalable routing configurations.

With RHOSO, we use MetalLB in Layer 2 mode.

MetalLB file format

In our OSO DevPreview, the file is minimal. It creates a single instance of the MetalLB custom resource (CR), whose type is defined by a Custom Resource Definition (CRD) provided by the MetalLB Operator:

```yaml
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb
  namespace: metallb-system
```

Here's a breakdown of what this MetalLB resource does:

- apiVersion: metallb.io/v1beta1: The API group and version of the MetalLB resource.

- kind: MetalLB: Specifies that the resource is of type MetalLB. This is a custom resource whose type is defined by a CRD that the MetalLB Operator provides for deploying and configuring the load balancer within the cluster. It is part of MetalLB's move toward custom resources for configuration, which allows more structured, Kubernetes-native configuration.

- metadata: Contains metadata about the MetalLB resource.

- name: metallb: The name of the MetalLB resource, used to identify it within its namespace.

- namespace: metallb-system: The namespace where MetalLB is deployed. The metallb-system namespace is typically used for MetalLB's components, isolating them from other workloads in the cluster.

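This declares an instance of MetalLB in the metallb-system namespace. In response, the MetalLB Operator deploys MetalLB's components (the controller and the speaker pods) there. Without a specification section (spec:), the deployment uses default settings. The MetalLB CR does not describe how load balancing itself is configured, such as which IP address pools to use or whether to operate in Layer 2 or BGP mode. That configuration lives in separate resources.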
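For comparison, here is a sketch of the same CR with a `spec` section. The `nodeSelector` field restricts which nodes run MetalLB's speaker pods. Treat the selector label as an assumption for your topology, and confirm the available fields against the MetalLB Operator documentation for your release.

```yaml
# Sketch: the same MetalLB CR with an optional spec (selector label is an assumption).
apiVersion: metallb.io/v1beta1
kind: MetalLB
metadata:
  name: metallb
  namespace: metallb-system
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""   # run speaker pods only on worker nodes
```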

When you deploy MetalLB through a MetalLB custom resource (CR) with apiVersion: metallb.io/v1beta1, you also need to define IPAddressPool and L2Advertisement resources to run MetalLB in Layer 2 mode. As you might expect, these definitions are in the osp-ng-metal-lb-ip-address-pool and osp-ng-metal-lb-l2-advertisement YAML files, respectively.
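The following sketch shows the general shape of these two resource types. It is not the content of the RHOSO files. The resource names, the address range (from the 192.0.2.0/24 documentation block), and the interface name are hypothetical.

```yaml
# Sketch only: names, address range, and interface are hypothetical.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: example-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.0.2.80-192.0.2.90   # range MetalLB may assign to LoadBalancer Services
  autoAssign: true            # hand out addresses from this pool automatically
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - example-pool            # announce addresses from this pool in Layer 2 mode
  interfaces:
    - enp6s0                  # assumed NIC name; limits which interface answers ARP/NDP
```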

NMState

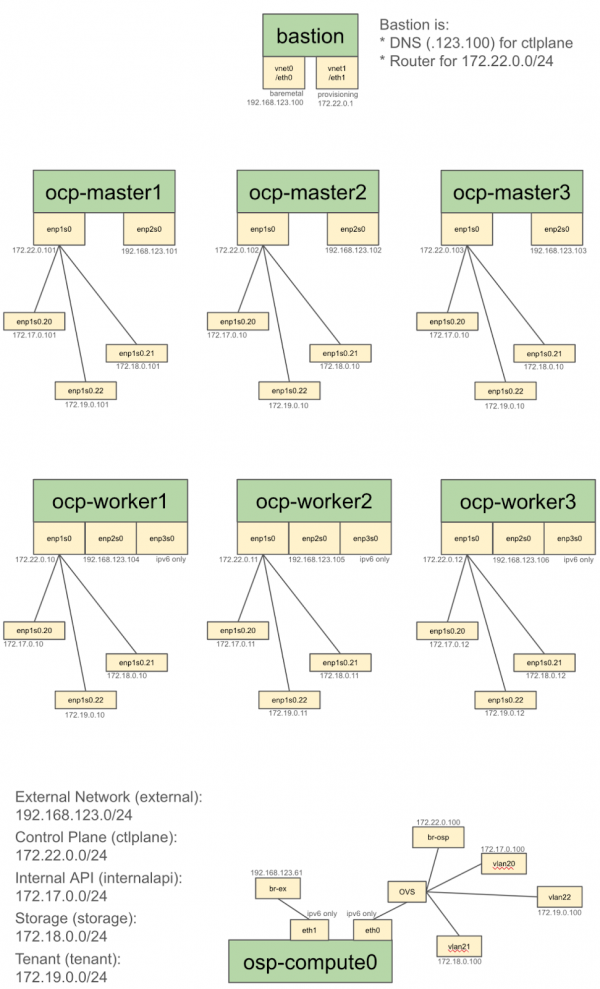

The NMState Operator in OpenShift is a Kubernetes Operator that provides a declarative way to manage node network configuration across an OpenShift cluster. It uses NodeNetworkConfigurationPolicy (NNCP) resources to drive the desired network state across cluster nodes, relying on the NMState project under the hood.

NMState is an open source project that aims to provide a unified way to perform network configurations using declarative syntax. It allows users to describe network interfaces, IP routing, and other network settings in a declarative manner using YAML or JSON formats. The NMState project simplifies network management tasks, making them more consistent and easier to automate.
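As an illustration of NMState's declarative syntax, the following sketch describes a desired state for one interface, a static route, and a DNS server. It uses the format accepted by the `nmstatectl apply` command. The interface name and addresses are hypothetical and taken from documentation ranges.

```yaml
# Desired state in NMState's declarative format (for example, for `nmstatectl apply`).
# Interface name and addresses are hypothetical.
interfaces:
  - name: eth1
    type: ethernet
    state: up
    ipv4:
      enabled: true
      dhcp: false
      address:
        - ip: 192.0.2.10
          prefix-length: 24
routes:
  config:
    - destination: 198.51.100.0/24   # reach this network...
      next-hop-address: 192.0.2.1    # ...through this gateway
      next-hop-interface: eth1
dns-resolver:
  config:
    server:
      - 192.0.2.53
```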

Key features of the NMState Operator:

- Declarative network configuration: Enables users to define the desired state of network interfaces, bridges, VLANs, and other network settings in a declarative manner, using YAML. This simplifies the process of configuring, managing, and maintaining network settings across nodes in the cluster.

- Cluster-wide network management: Provides a way to apply network configurations across all nodes in an OpenShift cluster or to a subset of nodes, ensuring consistency in network setups.

- Automated application: Once a Node Network Configuration Policy is defined and applied, the NMState Operator automatically enforces the desired network state on the specified nodes, handling the application and rollback of configurations as needed.

- Status reporting: Reports the current network configuration status and any errors encountered during the application of policies, aiding in troubleshooting and ensuring visibility into network configurations.

- Integration with OpenShift: Designed to integrate seamlessly with OpenShift, providing a Kubernetes-native experience for network configuration management.

The NMState Operator can be used to keep network settings consistent across all nodes, which is especially useful in large clusters or when applying standardized configurations. It can also help automate the setup of complex network configurations, such as bonded interfaces, VLANs, or bridges, which might otherwise require manual intervention on each node. It can also apply network configuration changes quickly, whether to meet evolving requirements or to address issues, without manual adjustments on individual nodes.

Administrators define a NodeNetworkConfigurationPolicy resource that specifies the desired network state. The NMState Operator watches for these policies and applies the specified network configurations to the nodes, leveraging the Linux NetworkManager and the NMState library. Nodes report back the current state of their network configurations, which can be viewed through the policy status, helping administrators verify the applied configurations.
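The following sketch shows such a policy. It creates a VLAN on top of a physical NIC and attaches the VLAN to a Linux bridge that carries a static IP address. The node name, interface names, VLAN ID, and address are hypothetical. Because the address is static, the `nodeSelector` targets a single node, since applying the same address to every worker would create duplicate IPs. After you apply the policy, `oc get nncp` and `oc get nnce` show the policy status and the per-node enactment status.

```yaml
# Sketch: node name, NIC, VLAN ID, and address are hypothetical.
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: example-vlan-bridge
spec:
  nodeSelector:
    kubernetes.io/hostname: worker-0   # one node, because the IP below is static
  desiredState:
    interfaces:
      - name: enp6s0.20                # VLAN 20 on top of enp6s0
        type: vlan
        state: up
        vlan:
          base-iface: enp6s0
          id: 20
        ipv4:
          enabled: false               # the address lives on the bridge instead
      - name: br-example
        type: linux-bridge
        state: up
        bridge:
          options:
            stp:
              enabled: false
          port:
            - name: enp6s0.20          # attach the VLAN interface to the bridge
        ipv4:
          enabled: true
          dhcp: false
          address:
            - ip: 172.17.0.10
              prefix-length: 24
```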

Conclusion

Now that we have reviewed the required Operators, their roles, and the format used to create the OpenStack control plane, let’s return to the deployment process. That is the subject of the next article: Red Hat OpenShift 101 for OpenStack admins: Configuration

Last updated: January 15, 2025