Load and run an OpenVINO sample notebook

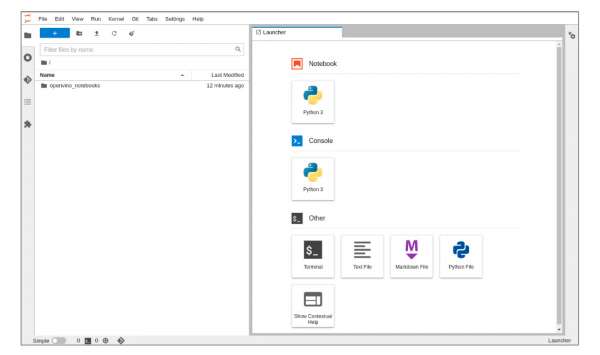

Welcome to JupyterLab!

After you start your server, three sections appear in JupyterLab's launcher:

- Notebook

- Console

- Other

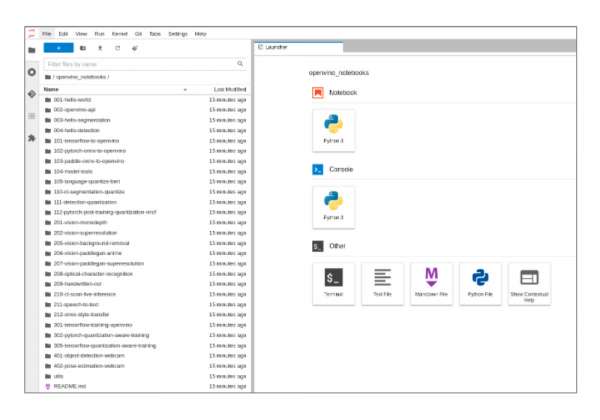

On the left side of the JupyterLab window, locate the file browser panel (Figure 2). This is where you create and manage your project directories.

The openvino_notebooks directory contents

OpenVINO ships with a number of sample projects. Double-click the openvino_notebooks directory to view them (Figure 3).

Among the sample projects, find 001-hello-world and double-click it to view the directory’s contents.

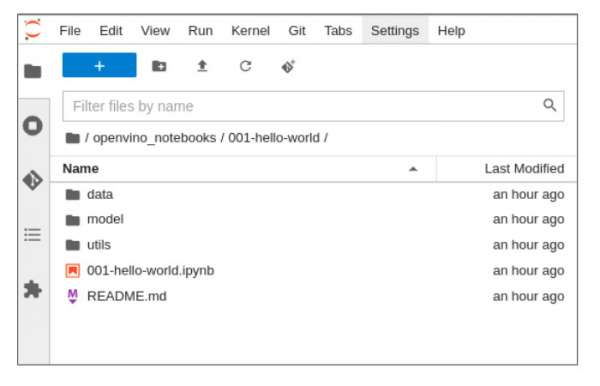

Opening the directory displays its files and subdirectories (Figure 4).

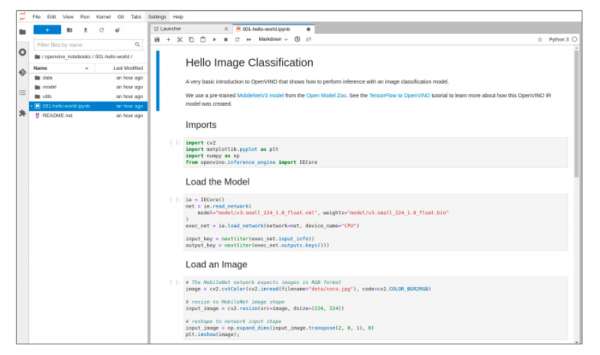

Double-click the 001-hello-world.ipynb file to open this Jupyter notebook. The opened notebook is shown in Figure 5.

In this hello world example, you will import some Python libraries, load a model, load an image, and then perform inference with the image classification model.

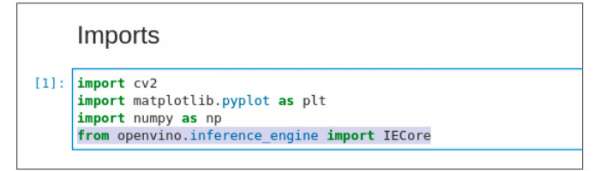

Start by importing the required Python packages along with IECore, the core class of the OpenVINO Inference Engine. These imports are shown in Figure 6.

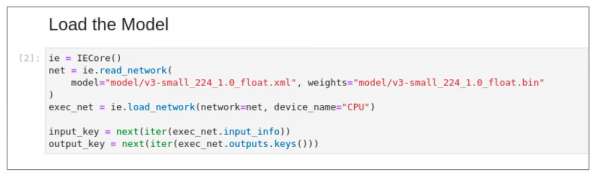

Once the imports have run, run the next cell to load the model. The cell’s code is shown in Figure 7.

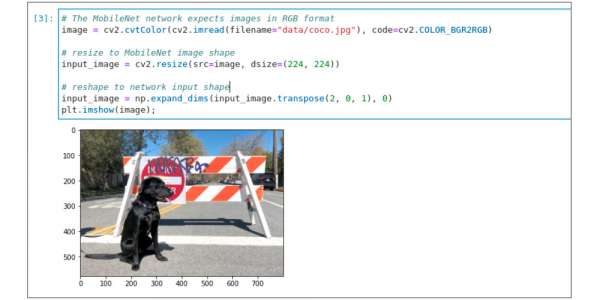

After the model is loaded, load an image by running the “Load an Image” notebook cell. OpenCV reads images in BGR channel order, while the MobileNet network expects RGB, so the image has to be converted to RGB. It also has to be resized to the MobileNet input size and then reshaped to the network input shape. Why is this second reshape needed?
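OpenCV's `cv2.imread` returns pixels in BGR order, so the channels must be swapped before the image reaches an RGB model. The sketch below shows that swap with plain NumPy on a tiny synthetic image, an assumption made so it runs without an image file. For a 3-channel image, reversing the channel axis gives the same result as `cv2.cvtColor(image, cv2.COLOR_BGR2RGB)`; the helper name `bgr_to_rgb` is hypothetical.

```python
import numpy as np

def bgr_to_rgb(image: np.ndarray) -> np.ndarray:
    """Hypothetical helper: swap BGR channel order to RGB.

    Assumes an HWC array with exactly 3 channels, as returned by cv2.imread.
    """
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError(f"expected an HxWx3 array, got shape {image.shape}")
    # ::-1 on the channel axis turns (B, G, R) into (R, G, B);
    # np.ascontiguousarray returns a regular C-contiguous copy instead of a view.
    return np.ascontiguousarray(image[:, :, ::-1])

# A 1x2 synthetic "image" stored as BGR: one pure-blue pixel, one pure-red pixel.
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = bgr_to_rgb(bgr)
print(rgb[0, 0], rgb[0, 1])  # blue pixel now reads (0, 0, 255), red reads (255, 0, 0)
```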

The cv2.resize line resizes the image to the width and height the network expects: 224 by 224. After resizing, input_image is an array with shape (224, 224, 3), in HWC (height, width, channels) layout. The source model used in this notebook expects images in NCHW layout, so the array must be transposed to (3, 224, 224) and given a leading batch dimension, giving (1, 3, 224, 224). That is what the transpose step does.
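The shape changes described above can be reproduced with NumPy alone. This sketch uses a random array as a stand-in for the resized image (an assumption, so it runs without an image file or OpenVINO) and mirrors the transpose-plus-batch-dimension step. A pixel value at row h, column w, channel c ends up at index [0, c, h, w].

```python
import numpy as np

# Stand-in for the resized image: height 224, width 224, 3 channels (HWC).
# Random values are used only so we can check where each pixel ends up.
rng = np.random.default_rng(0)
input_image = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)

# HWC -> CHW: move the channel axis (2) to the front, keeping H and W in order.
chw = input_image.transpose(2, 0, 1)      # shape (3, 224, 224)

# CHW -> NCHW: add a leading batch dimension of size 1.
nchw = np.expand_dims(chw, axis=0)        # shape (1, 3, 224, 224)

print(input_image.shape, chw.shape, nchw.shape)
```

Note that `transpose` reorders axes rather than reshuffling memory arbitrarily, which is why a plain `reshape` to (1, 3, 224, 224) would be wrong: it would keep the bytes in HWC order and scramble the channels.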

Because this is a TensorFlow model, and TensorFlow models typically use NHWC layout, it may seem surprising that this network expects NCHW. In older OpenVINO versions, converted models used NCHW layout regardless of the source framework. Since the 2022.1 release this is no longer the case, but the model in this notebook was converted with an older OpenVINO version. OpenVINO now uses the layout of the original model by default, which means the transpose is still needed for PyTorch vision models (which use NCHW) but not for TensorFlow models. See Figure 8.
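Because the required transpose depends on the layout the converted model expects, preprocessing code can branch on that layout. The function below is a hypothetical helper, not part of the OpenVINO API, and it assumes the model's expected layout ("NCHW" or "NHWC") is known in advance.

```python
import numpy as np

def to_model_input(image_hwc: np.ndarray, layout: str) -> np.ndarray:
    """Hypothetical helper: turn one HWC image into a batched model input.

    layout is the model's expected input layout, either "NCHW" or "NHWC".
    """
    if image_hwc.ndim != 3:
        raise ValueError(f"expected an HWC array, got shape {image_hwc.shape}")
    if layout == "NCHW":
        # e.g. models converted with older OpenVINO versions, or PyTorch vision models
        image = image_hwc.transpose(2, 0, 1)
    elif layout == "NHWC":
        # e.g. TensorFlow models kept in their original layout: no transpose needed
        image = image_hwc
    else:
        raise ValueError(f"unsupported layout: {layout!r}")
    # Both layouts still need a leading batch dimension of size 1.
    return np.expand_dims(image, axis=0)

image = np.zeros((224, 224, 3), dtype=np.float32)
print(to_model_input(image, "NCHW").shape)  # (1, 3, 224, 224)
print(to_model_input(image, "NHWC").shape)  # (1, 224, 224, 3)
```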

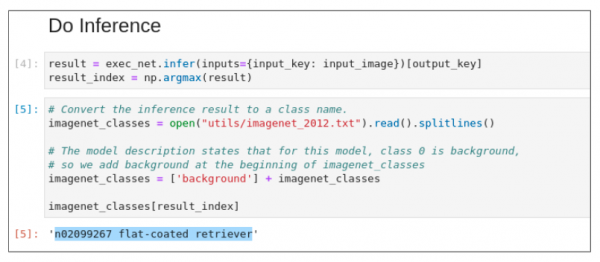

Once the image is loaded, run the “Do Inference” notebook cell to perform inference on it.

In this example, the model correctly classifies the image as class n02099267, flat-coated retriever. See Figure 9.
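To turn the raw model output into a label like the one above, a classifier's score vector is typically reduced to the index with the highest score, which is then looked up in a list of class names. The sketch below is an illustration under assumptions: a hypothetical four-class score vector and label list stand in for the real model output and its class names, and `top_k` is a hypothetical helper. Its first entry is the same class that `np.argmax` would select.

```python
import numpy as np

def top_k(scores: np.ndarray, labels: list[str], k: int = 3) -> list[tuple[str, float]]:
    """Hypothetical helper: return the k highest-scoring labels, best first.

    scores: 1-D array or (1, num_classes) batch of per-class scores.
    labels: class names, where labels[i] names class index i.
    """
    flat = np.asarray(scores).reshape(-1)  # drop the batch dimension if present
    if flat.size != len(labels):
        raise ValueError("scores and labels must have the same length")
    best = np.argsort(flat)[::-1][:k]      # indices sorted by descending score
    return [(labels[i], float(flat[i])) for i in best]

# Hypothetical 4-class output; real ImageNet classifiers have about 1000 classes.
labels = ["cat", "n02099267 flat-coated retriever", "car", "ball"]
scores = np.array([[0.05, 0.80, 0.10, 0.05]], dtype=np.float32)
print(top_k(scores, labels, k=2))
```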

This concludes our Introduction to OpenVINO.