Chandra Shekhar Pandey's contributions

Article

Integrate Red Hat Fuse 7 on Apache Karaf with Red Hat AMQ 7

Chandra Shekhar Pandey

Keep reading for instructions on using Apache Camel applications in Red Hat Fuse 7.8 to produce and consume messages from Red Hat AMQ 7 or ActiveMQ Artemis.

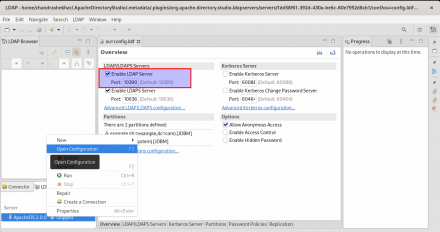

Article

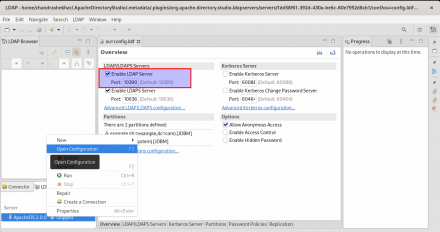

Secure authentication with Red Hat AMQ 7.7 and ApacheDS LDAP server

Chandra Shekhar Pandey

Learn how to integrate Red Hat AMQ 7.7 with Apache Directory Studio, which is an LDAP browser and directory client for ApacheDS.

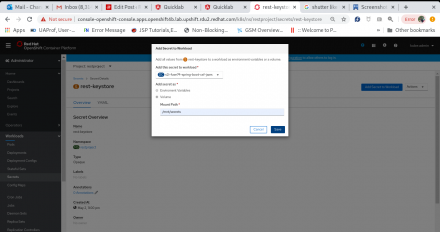

Article

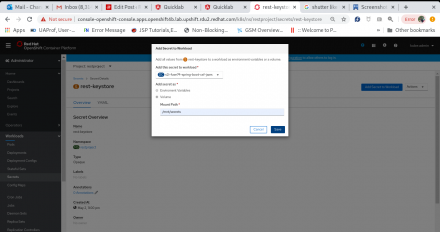

Adding keystores and truststores to microservices in Red Hat OpenShift

Chandra Shekhar Pandey

Configure a keystore for a Java-based web service or HTTP endpoint, and a truststore for a web service client, HTTP client, or messaging client.

Article

Autoscaling Red Hat Fuse applications with OpenShift

Chandra Shekhar Pandey

We demonstrate how to set up and use Red Hat OpenShift's horizontal autoscaling feature with Red Hat Fuse applications.

Article

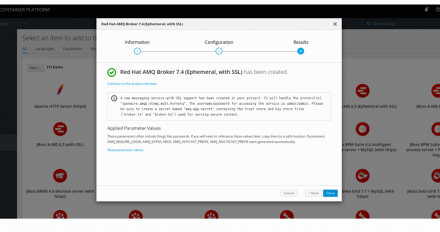

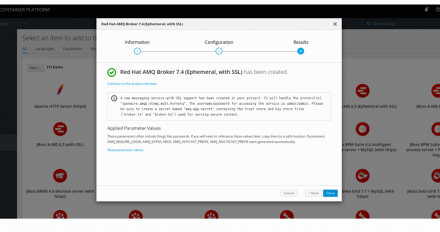

4 steps to set up the MQTT secure client for Red Hat AMQ 7.4 on OpenShift

Chandra Shekhar Pandey

We show how to set up Red Hat AMQ 7.4 on Red Hat OpenShift and how to connect the external MQTT secure client to the AMQ 7.4 platform.

Article

Red Hat AMQ 6.3 on OpenShift: Set up, connect SSL client, and configure logging

Chandra Shekhar Pandey

This article describes how to set up Red Hat AMQ 6.3 on OpenShift. It also shows how to set up an external Camel-based SSL client to connect to the AMQ Broker, which is a pure-Java multiprotocol message broker, and how to enable debug-level logging so you can analyze runtime issues.

Article

How to configure a JDBC Appender for Red Hat Fuse 7 with Karaf

Chandra Shekhar Pandey

This article shows how to configure a JDBC Appender for Red Hat Fuse 7 running in a Karaf environment. Application log messages for your integration projects can be persisted to an Oracle 11g database.

Article

Logging incoming and outgoing messages for Red Hat AMQ 7

Chandra Shekhar Pandey

This article shows how to capture incoming and outgoing messages for Red Hat AMQ 7, which can aid development and testing. It also covers interceptors that can modify messages.
